Gaussian Processes

Summary

TLDR: This video provides an in-depth look at Gaussian Processes (GPs) in machine learning, emphasizing their ability to model uncertainty through probabilistic predictions. It explains foundational concepts such as kernels and hyperparameters and how they shape predictions, then covers practical challenges like computational complexity and effective tuning. The presenter highlights the advantages of GPs in applications where uncertainty is critical and points to resources for further learning, including the GPyTorch library. Overall, GPs are presented as a powerful, though intricate, modeling tool.

Takeaways

- 😀 Gaussian Processes (GPs) are powerful tools for modeling uncertainty in functions.

- 😀 GPs provide a flexible framework for regression and classification, helping optimize hyperparameters in machine learning models.

- 😀 A key feature of GPs is that the gradient of the likelihood with respect to the hyperparameters can be calculated, which aids optimization.

- 😀 Computing the GP posterior requires inverting a kernel matrix, an operation whose cost scales as n^3 in the number of data points n, making it expensive for large datasets.

- 😀 Despite the computational challenge, research and software developments aim to reduce the computational cost of GPs.

- 😀 Designing GPs effectively can be challenging, as they require choosing appropriate kernels and tuning hyperparameters.

- 😀 GPs can struggle with high-dimensional data, especially with kernels such as RBF.

- 😀 GPs excel when uncertainty is important, and a strong understanding of the problem being modeled is necessary for their successful application.

- 😀 Resources like GPyTorch provide efficient implementations of Gaussian Processes, making them easier to use with frameworks like PyTorch.

- 😀 There is ongoing research in automating GP kernel learning using neural networks to make GPs more user-friendly and applicable in various domains.

- 😀 While GPs are a powerful tool for spatial statistics, time series forecasting, and experimental design, they require patience and learning to use effectively.

Q & A

What are Gaussian Processes (GPs) used for in machine learning?

- Gaussian Processes (GPs) are used for modeling uncertainty in machine learning. A GP defines a distribution over functions, so every prediction comes with an associated uncertainty estimate. This is valuable for regression and classification tasks where uncertainty matters.

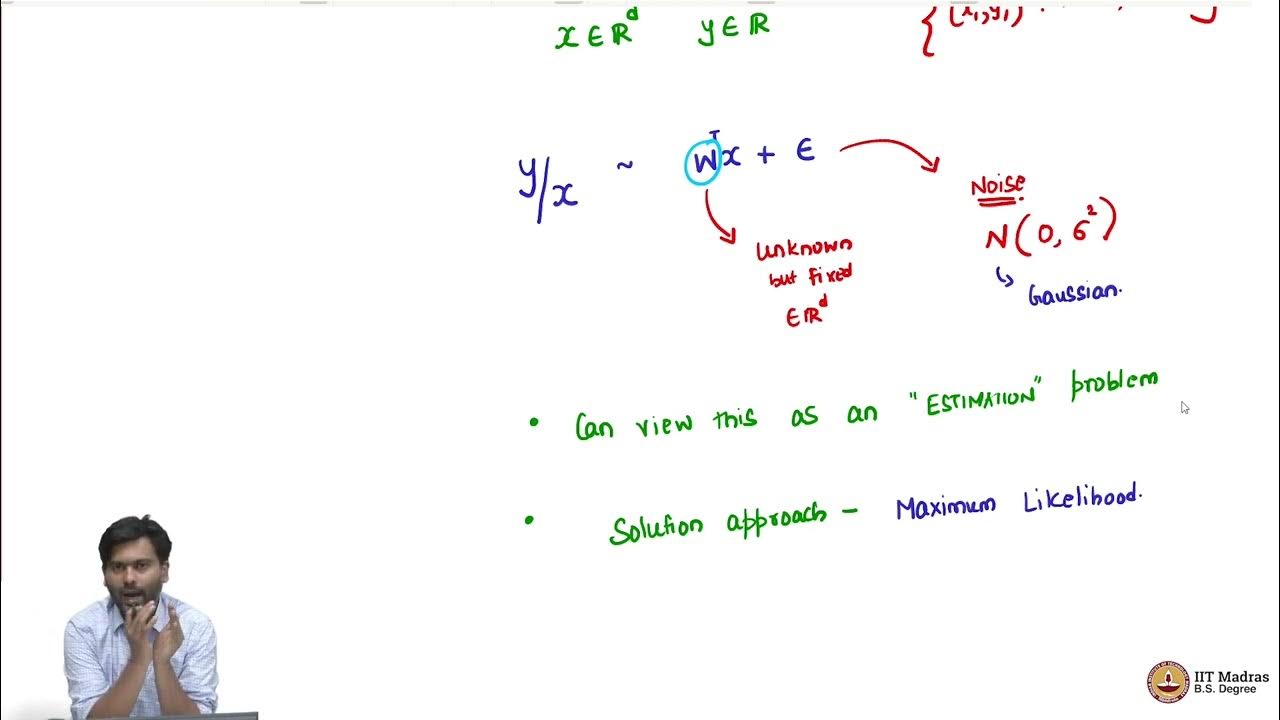

How do GPs differ from traditional linear regression?

- GPs extend linear regression with a probabilistic approach, so predictions include a measure of uncertainty. Rather than fitting a single fixed set of parameters, a GP places a distribution over functions, which gives a more flexible and detailed model.

What is a kernel in the context of GPs?

- A kernel in GPs is a function that measures the similarity between two input points. It defines the covariance structure of the process and determines the shape of the sampled functions, allowing the GP to model relationships between inputs and outputs.
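
As a minimal sketch of the idea, the function below builds a covariance matrix from pairwise similarities between inputs. The name `rbf_kernel` and its `lengthscale` and `variance` parameters are illustrative choices, not taken from the video; the squared-exponential form is used because it is the kernel discussed in the next question.

```python
import numpy as np

def rbf_kernel(x1, x2, lengthscale=1.0, variance=1.0):
    """Squared-exponential (RBF) covariance between two sets of points.

    x1 has shape (n, d), x2 has shape (m, d); the result has shape (n, m).
    """
    x1 = np.asarray(x1, dtype=float)
    x2 = np.asarray(x2, dtype=float)
    # Pairwise squared Euclidean distances via broadcasting.
    sq_dist = np.sum((x1[:, None, :] - x2[None, :, :]) ** 2, axis=-1)
    return variance * np.exp(-0.5 * sq_dist / lengthscale**2)

# Three 1-D inputs: two close together, one far away.
X = np.array([[0.0], [0.1], [3.0]])
K = rbf_kernel(X, X)
print(K.round(3))
```

Entry `K[i, j]` is the prior covariance between the function values at inputs `i` and `j`: the two nearby inputs are strongly correlated, while the distant one is nearly independent of both.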

Why is the RBF kernel often used in Gaussian Processes?

- The Radial Basis Function (RBF) kernel is commonly used because it models smooth, continuous relationships between inputs and outputs. It suits problems where nearby inputs are expected to have similar outputs, which is often the case in real-world data.
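
One way to see this smoothness assumption is to draw functions from a zero-mean GP prior with an RBF kernel. The sketch below is illustrative only: the helper names, the grid, the two lengthscales, and the small `jitter` added for numerical stability are all assumptions made for the example.

```python
import numpy as np

def rbf_kernel(x1, x2, lengthscale=1.0, variance=1.0):
    sq_dist = np.sum((x1[:, None, :] - x2[None, :, :]) ** 2, axis=-1)
    return variance * np.exp(-0.5 * sq_dist / lengthscale**2)

def sample_prior(x, lengthscale, n_samples=3, jitter=1e-6, seed=0):
    """Draw functions from a zero-mean GP prior evaluated at the points x."""
    rng = np.random.default_rng(seed)
    # Jitter on the diagonal keeps the Cholesky factorisation stable.
    K = rbf_kernel(x, x, lengthscale) + jitter * np.eye(len(x))
    L = np.linalg.cholesky(K)                      # K = L @ L.T
    z = rng.standard_normal((len(x), n_samples))
    return L @ z                                   # one sampled function per column

def roughness(f):
    """Mean absolute change between neighbouring grid points."""
    return np.mean(np.abs(np.diff(f, axis=0)))

x = np.linspace(0.0, 5.0, 100)[:, None]
wiggly = sample_prior(x, lengthscale=0.1)
smooth = sample_prior(x, lengthscale=2.0)
print(roughness(wiggly), roughness(smooth))
```

A shorter lengthscale lets function values decorrelate over shorter distances, so the samples vary faster; a longer one gives slowly varying functions.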

What is the significance of hyperparameter optimization in GPs?

- Hyperparameter optimization is crucial in GPs because model performance depends heavily on the choice of kernel and the values of its hyperparameters. Optimizing them helps the GP fit the data well while balancing model complexity against fit.

What computational challenges do GPs face?

- GPs face significant computational challenges, particularly inverting the kernel matrix, an operation whose cost scales cubically with the number of data points. This makes GPs expensive for large datasets and calls for specialized techniques to reduce the cost.
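
To make the cost concrete, here is a sketch of exact GP regression in NumPy. It uses a Cholesky factorisation rather than an explicit matrix inverse, which is a common implementation choice and not necessarily what the video shows; the factorisation of the n-by-n kernel matrix is the cubic-cost step. The function name, toy data, and the `noise` variance are assumptions for illustration.

```python
import numpy as np

def rbf_kernel(x1, x2, lengthscale=1.0, variance=1.0):
    sq_dist = np.sum((x1[:, None, :] - x2[None, :, :]) ** 2, axis=-1)
    return variance * np.exp(-0.5 * sq_dist / lengthscale**2)

def gp_posterior(X_train, y_train, X_test, lengthscale=1.0, noise=1e-2):
    """Posterior mean and variance of a zero-mean GP at X_test.

    `noise` is the observation-noise variance added to the diagonal.
    """
    n = len(X_train)
    K = rbf_kernel(X_train, X_train, lengthscale) + noise * np.eye(n)
    K_s = rbf_kernel(X_train, X_test, lengthscale)
    K_ss = rbf_kernel(X_test, X_test, lengthscale)
    L = np.linalg.cholesky(K)                      # cubic cost in n
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_train))  # K^{-1} y
    mean = K_s.T @ alpha
    v = np.linalg.solve(L, K_s)
    cov = K_ss - v.T @ v
    return mean, np.diag(cov)

X_train = np.array([[-2.0], [-1.0], [0.0], [1.0], [2.0]])
y_train = np.sin(X_train).ravel()
X_test = np.array([[0.5], [10.0]])                 # one near the data, one far away
mean, var = gp_posterior(X_train, y_train, X_test)
print(mean, var)
```

The predictive variance is small near the training inputs and returns to the prior variance far from them, which is the uncertainty behaviour described above.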

How does optimization of the kernel hyperparameters work in GPs?

- The kernel's hyperparameters are adjusted to maximize the likelihood of the data given the model. This requires calculating the gradient of the likelihood with respect to the hyperparameters, which lets the GP learn the best fit for the data.
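
The following sketch computes the log marginal likelihood of a zero-mean GP with an RBF kernel, together with its derivative with respect to the lengthscale, using the standard identity that the derivative equals half the trace of (alpha alpha^T - K^{-1}) times dK/dtheta, where alpha = K^{-1} y. Treating only the lengthscale as a hyperparameter, fixing the noise variance, and the toy data are all simplifying assumptions for the example.

```python
import numpy as np

def log_marginal_likelihood(X, y, lengthscale, noise=1e-2):
    """Return log p(y | X, lengthscale) and its derivative w.r.t. lengthscale."""
    n = len(X)
    sq_dist = np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=-1)
    K_f = np.exp(-0.5 * sq_dist / lengthscale**2)
    K_y = K_f + noise * np.eye(n)                  # noise is a variance
    L = np.linalg.cholesky(K_y)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y))        # K_y^{-1} y
    lml = (-0.5 * y @ alpha
           - np.sum(np.log(np.diag(L)))            # equals -0.5 * log det(K_y)
           - 0.5 * n * np.log(2.0 * np.pi))
    dK = K_f * sq_dist / lengthscale**3            # derivative of K_y w.r.t. lengthscale
    K_inv = np.linalg.solve(L.T, np.linalg.solve(L, np.eye(n)))
    grad = 0.5 * np.trace((np.outer(alpha, alpha) - K_inv) @ dK)
    return lml, grad

rng = np.random.default_rng(0)
X = np.linspace(0.0, 5.0, 20)[:, None]
y = np.sin(X).ravel() + 0.1 * rng.standard_normal(20)

lml, grad = log_marginal_likelihood(X, y, lengthscale=0.5)
print(lml, grad)
```

A gradient-based optimizer can follow `grad` to improve the lengthscale. Libraries such as GPyTorch obtain these gradients through automatic differentiation rather than a hand-written formula.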

What are some common difficulties when using Gaussian Processes?

- Common difficulties include choosing the right kernel, tuning the hyperparameters, and dealing with computational limitations. GPs may also struggle with high-dimensional data, especially when the kernel does not scale well with dimension, as with the RBF kernel in very high dimensions.
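
One commonly cited reason for the high-dimensional difficulty, offered here as background rather than as the video's own argument, is that pairwise distances grow with dimension, so an RBF kernel with a fixed lengthscale treats almost all points as unrelated. The sketch below illustrates this under the assumption of standard-normal inputs and a lengthscale of 1; the function name is hypothetical.

```python
import numpy as np

def mean_offdiag_rbf(dim, n=200, lengthscale=1.0, seed=0):
    """Average RBF similarity between distinct random points in `dim` dimensions."""
    rng = np.random.default_rng(seed)
    X = rng.standard_normal((n, dim))
    sq_norms = np.sum(X**2, axis=1)
    sq_dist = sq_norms[:, None] + sq_norms[None, :] - 2.0 * X @ X.T
    sq_dist = np.maximum(sq_dist, 0.0)             # guard against tiny negative values
    K = np.exp(-0.5 * sq_dist / lengthscale**2)
    off_diag = K[~np.eye(n, dtype=bool)]           # drop the self-similarity entries
    return off_diag.mean()

for dim in (1, 10, 100):
    print(dim, mean_offdiag_rbf(dim))
```

As the dimension grows, the off-diagonal entries shrink toward zero and the kernel matrix approaches the identity, leaving the GP little information to share between points unless the lengthscale is rescaled or a different kernel is chosen.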

What resources are available to help learn about Gaussian Processes?

- Many resources are available for learning about GPs, including academic research, tutorials, and software libraries. One notable library is GPyTorch, which offers an efficient implementation of GPs, especially for large datasets. Research papers on spatial statistics, time series forecasting, and experimental design also provide in-depth knowledge.

How does GPyTorch help with the computational challenges of Gaussian Processes?

- GPyTorch reduces the computational cost of Gaussian Processes by implementing efficient algorithms and leveraging GPU acceleration. This makes it possible to work with large datasets and complex models that would otherwise be computationally prohibitive.
