What Is Transfer Learning? | Transfer Learning in Deep Learning | Deep Learning Tutorial | Simplilearn

Summary

TLDR: This video introduces transfer learning, a powerful technique in machine learning where a pre-trained model is used to improve predictions on new tasks. It covers the benefits, such as reduced training time and the ability to work with limited data, and explains how transfer learning works, especially in areas like computer vision and natural language processing. The video also highlights popular pre-trained models like VGG16, InceptionV3, BERT, and GPT. Viewers are encouraged to explore more through certification programs in AI, machine learning, and other cutting-edge domains to advance their careers.

Takeaways

- 📚 Transfer learning allows using a pre-trained model to improve predictions on a new, related task.

- ⚙️ It is commonly used in fields like computer vision and natural language processing (NLP).

- 🚀 Transfer learning reduces training time and improves performance, even with limited data.

- 🔄 Retraining only the later layers of a neural network can tailor it for a new task, while keeping early layers intact.

- ⏱️ Using pre-trained models saves time compared to training from scratch, especially for deep learning models.

- 🔍 Feature extraction identifies important patterns in data at different neural network layers.

- 📊 Popular models used in transfer learning include VGG16 and InceptionV3 for image recognition, and BERT and GPT for NLP.

- 💻 Transfer learning helps leverage large datasets like ImageNet or extensive text collections for new tasks.

- 🎓 Simplilearn offers an AI and ML program in collaboration with IBM and Purdue University for upskilling.

- 🎯 The program covers topics like machine learning, deep learning, NLP, computer vision, and more.

Q & A

What is transfer learning in machine learning?

-Transfer learning is a technique in machine learning where a model that has already been trained on a related task is reused to improve predictions on a new task. It applies the knowledge gained on the earlier task to solve similar problems more efficiently.

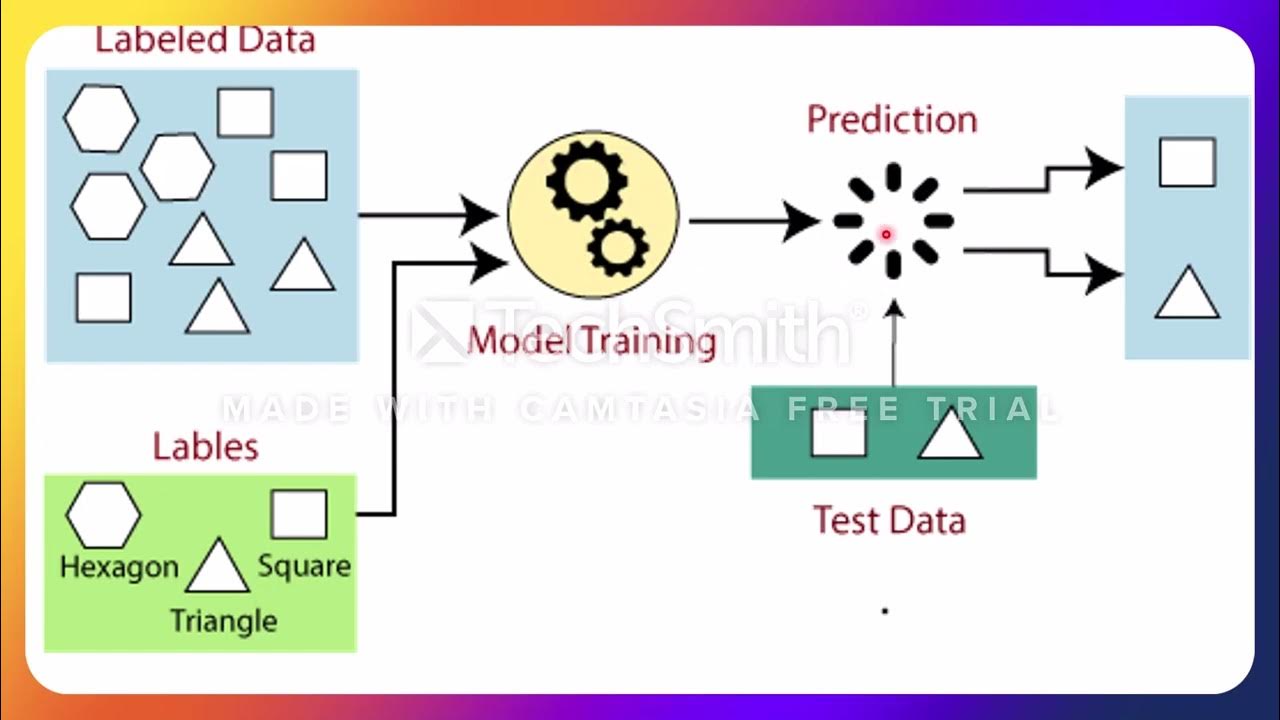

How does transfer learning work in computer vision?

-In computer vision, neural networks typically detect edges in the early layers, identify shapes in the middle layers, and capture task-specific features in the later layers. Transfer learning reuses the early and middle layers of a pre-trained model and retrains the later layers for a new, related task.
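
The idea of keeping the reused layers fixed and retraining only the final layer can be sketched without any deep learning framework. The example below is a toy illustration, not the video's method: the `PRETRAINED` weights, the `tanh` hidden layer, and the synthetic `new_task` data are all assumptions made up for the sketch.

```python
import math

# Hypothetical "pre-trained" (weight, bias) pairs for three hidden units.
# In practice these would come from a model trained on a large dataset.
PRETRAINED = [(1.0, 0.0), (0.5, -0.5), (2.0, 1.0)]

def frozen_features(x):
    """Reused layers: computed from PRETRAINED, which is never updated."""
    return [math.tanh(w * x + b) for w, b in PRETRAINED]

def predict(x, head, bias):
    """Final (retrained) layer: a linear map over the frozen features."""
    return sum(h * f for h, f in zip(head, frozen_features(x))) + bias

def mse(data, head, bias):
    return sum((predict(x, head, bias) - y) ** 2 for x, y in data) / len(data)

def train_head(data, epochs=200, lr=0.1):
    """Stochastic gradient descent on the head parameters only."""
    head, bias = [0.0] * len(PRETRAINED), 0.0
    for _ in range(epochs):
        for x, y in data:
            feats = frozen_features(x)
            err = sum(h * f for h, f in zip(head, feats)) + bias - y
            head = [h - lr * err * f for h, f in zip(head, feats)]
            bias -= lr * err
    return head, bias

# Small synthetic dataset standing in for the new, related task.
new_task = [(x / 2, 2 * math.tanh(x / 2) - 1) for x in range(-4, 5)]

before = mse(new_task, [0.0] * len(PRETRAINED), 0.0)
head, bias = train_head(new_task)
after = mse(new_task, head, bias)
print(f"MSE before: {before:.3f}, after training the head: {after:.5f}")
```

In Keras, the same effect is usually achieved by setting `trainable = False` on the reused layers before compiling the model, so that only the newly added layers receive gradient updates.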

What are some benefits of using transfer learning?

-Transfer learning reduces training time, improves neural network performance, and allows models to work well with limited data. This is especially helpful when large datasets are unavailable, as the pre-trained model has already learned general features.

Why is transfer learning useful for tasks like NLP?

-Transfer learning is beneficial for NLP tasks because obtaining large labeled datasets in this domain can be challenging. Pre-trained models, which have been trained on vast amounts of text, allow efficient use of limited data and reduce training time.

What are the steps involved in using transfer learning?

-The steps include: 1) training a model so that it can be reused for a related task, 2) or starting from an existing pre-trained model, 3) extracting features from the data, and 4) refining the pre-trained model to suit the specific new task.

What is the role of feature extraction in transfer learning?

-Feature extraction involves identifying meaningful patterns in the input data. In neural networks, the early layers extract basic features like edges, while deeper layers capture more complex patterns. These extracted features are used to improve predictions.
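
As a rough illustration of what "extracting edges" means, the sketch below slides a small difference kernel over one row of pixel values. The kernel is written by hand here as an assumption for clarity; in a real convolutional network the early-layer kernels are learned during training, and `convolve1d`, `EDGE_KERNEL`, and `row` are illustrative names, not part of any library.

```python
def convolve1d(signal, kernel):
    """Valid-mode sliding dot product, the operation a convolutional layer applies."""
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]

# Hand-written difference kernel: non-zero only where neighbouring values differ.
EDGE_KERNEL = [-1, 1]

# One row of a toy image: dark, bright, dark.
row = [0, 0, 0, 5, 5, 5, 0, 0]

edges = convolve1d(row, EDGE_KERNEL)
print(edges)  # [0, 0, 5, 0, 0, -5, 0]
```

The output is zero in flat regions and non-zero at the two brightness changes, which is the kind of low-level feature that later layers combine into more complex patterns.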

Which popular pre-trained models are used for transfer learning?

-Some popular models include VGG16 and VGG19 (trained on ImageNet for image classification), InceptionV3 (an image classification network, also trained on ImageNet), BERT (used in NLP tasks like sentiment analysis), and the GPT series (used in a wide range of NLP tasks).

How does transfer learning speed up the training process?

-By using a pre-trained model, the network has already learned general features, so the training starts from an advanced point. This reduces the need for extensive training time, which is especially beneficial for complex tasks that would otherwise take days or weeks.
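
The "advanced starting point" can be made concrete with a deliberately tiny model. The sketch below fits a single weight by gradient descent and counts the steps needed from a zero initialisation versus from a weight assumed to have been learned on a related task. The tasks (`y = 2x` as the earlier task, `y = 2.2x` as the new one), the learning rate, and the tolerance are all illustrative assumptions.

```python
def steps_to_fit(data, w_init, lr=0.05, tol=1e-3, max_steps=10_000):
    """Gradient descent on y ≈ w * x; returns the number of steps until MSE < tol."""
    w = w_init
    for step in range(max_steps):
        mse = sum((w * x - y) ** 2 for x, y in data) / len(data)
        if mse < tol:
            return step
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return max_steps

# New task: y = 2.2 * x, with only three examples.
new_task = [(x, 2.2 * x) for x in (1.0, 2.0, 3.0)]

from_scratch = steps_to_fit(new_task, w_init=0.0)
# Hypothetical weight carried over from a related task, y = 2 * x.
from_pretrained = steps_to_fit(new_task, w_init=2.0)

print(f"steps from scratch: {from_scratch}, from pre-trained weight: {from_pretrained}")
```

Because the carried-over weight is already close to the new optimum, fewer updates are needed. Real networks have millions of parameters, so the saving is correspondingly larger, though the exact gain depends on how closely the two tasks are related.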

What is the difference between training a model from scratch and using a pre-trained model?

-Training a model from scratch means learning every feature from raw data, which requires extensive computation and time. Using a pre-trained model lets you build on the knowledge it has already gained, so it typically needs less data and computation and often reaches good accuracy sooner.

Why is transfer learning effective in domains like computer vision and NLP?

-Transfer learning is effective in these domains because the models can reuse fundamental features such as edges in images or sentence structures in text. This allows them to adapt to new tasks more quickly and with less data, while still performing effectively.
