How indexes work in Distributed Databases, their trade-offs, and challenges

Summary

TLDR: The video discusses the importance of indexing in database management, particularly in distributed data stores. It explains how traditional indexes accelerate lookups on secondary attributes, then introduces sharding and partitioning as a way to spread large volumes of data across multiple nodes. Using a blogging platform like Medium as a running example, with the author ID as the partition key, it shows how the choice of partition key affects querying. It then covers global secondary indexes (GSIs), which allow efficient queries across shards on secondary attributes such as blog category, and contrasts them with local secondary indexes, which are efficient only for queries that include the partition key. It also touches on the trade-off between storing only primary key references and storing entire objects in a GSI, the cost of keeping GSIs in sync, and the limitations of local secondary indexes. The speaker closes by encouraging viewers to explore distributed databases and to build prototypes to test their understanding.

Takeaways

- 🔎 Indexes are used to speed up database lookups and are typically created on secondary attributes.

- 📚 When databases are sharded and partitioned, data is spread across multiple nodes to handle large volumes efficiently.

- 📈 Practical examples, like a blog database, illustrate how indexing works in distributed data stores.

- 🔑 A partition key is essential for determining which node will handle specific data in a sharded database.

- 🗃️ Hash functions are used to map data to the appropriate shard based on the partition key.

- 🔍 Queries on the partition key are efficient because they can be routed directly to the correct shard without searching all nodes.

- 📚 For queries not involving the partition key, such as searching by category, the database must fan out requests to all shards, which is slower.

- 🌐 Global secondary indexes provide a solution by maintaining a separate index for secondary attributes, improving query efficiency.

- 📈 Global secondary indexes can either store references to primary keys or entire objects, offering a trade-off between space and performance.

- 🔄 Keeping global secondary indexes in sync with the main data can be challenging and expensive, especially with frequent updates.

- 📚 Local secondary indexes, on the other hand, are limited to a single shard. They are useful when queries always include the partition key, and because each index lives alongside its shard's data, it can be kept strongly consistent.

Q & A

What is the primary purpose of creating an index in a database?

- The primary purpose of an index is to speed up lookups, most commonly on secondary attributes (attributes other than the primary key), so that queries filtering on those attributes do not have to scan the whole table.
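A minimal single-node sketch of that speed-up, assuming an in-memory dictionary stands in for the table. The `Blog` record, its fields, and the helper names are hypothetical. A full scan examines every row, while a secondary index on `category` maps each category straight to the primary keys of matching blogs.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Blog:
    blog_id: int
    author_id: int
    category: str
    title: str


# Primary storage: rows keyed by the primary key (blog_id).
blogs = {
    1: Blog(1, 10, "databases", "Sharding 101"),
    2: Blog(2, 11, "python", "Async tips"),
    3: Blog(3, 10, "databases", "Indexing deep dive"),
}


def scan_by_category(category: str) -> list[int]:
    """No index: every row must be examined (O(n))."""
    return sorted(b.blog_id for b in blogs.values() if b.category == category)


# Secondary index on `category`: attribute value -> set of primary keys.
category_index = defaultdict(set)
for b in blogs.values():
    category_index[b.category].add(b.blog_id)


def lookup_by_category(category: str) -> list[int]:
    """With the index: one hash lookup yields the matching primary keys."""
    return sorted(category_index.get(category, set()))


print(lookup_by_category("databases"))  # [1, 3]
```

The index costs extra memory and must be updated on every write; that cost is the single-node version of the GSI maintenance problem discussed further down.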

How does sharding and partitioning a database help with handling large volumes of data?

- Sharding and partitioning a database involve splitting the data and distributing it across multiple data nodes. This spreads both storage and request load, so that no single node has to hold or serve all of the data.

What is a partition key and why is it important in a distributed data store?

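- A partition key is the attribute (such as an author ID) whose value determines which shard, or data node, stores a particular item. It does not have to be unique: every blog by the same author shares the same partition key and therefore lands on the same shard. It is crucial because it dictates how data is distributed across shards, and therefore which queries can be served by a single node.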

How does a hash function play a role in determining the shard for a specific piece of data?

- A hash function is applied to the partition key, such as an author ID, to produce a hash value, which is then mapped to a specific shard (for example, by taking it modulo the number of shards). Because the same key always produces the same hash, all data with the same partition key is stored together.
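The routing step can be sketched as follows, assuming a fixed shard count and hash-modulo placement. `NUM_SHARDS`, `shard_for`, and the sample keys are hypothetical. A `hashlib` digest is used because Python's built-in `hash()` is randomized per process for strings and would not give every node the same answer.

```python
import hashlib

NUM_SHARDS = 4  # hypothetical cluster size


def shard_for(partition_key: str, num_shards: int = NUM_SHARDS) -> int:
    """Map a partition key to a shard number with a stable hash."""
    digest = hashlib.sha256(partition_key.encode("utf-8")).digest()
    # Use the first 8 bytes as an unsigned integer, then reduce modulo N.
    return int.from_bytes(digest[:8], "big") % num_shards


# Place each (author_id, blog_id) pair on the shard chosen by its author.
blogs = [("author-42", "post-1"), ("author-42", "post-2"), ("author-7", "post-3")]
shards: dict[int, list[str]] = {i: [] for i in range(NUM_SHARDS)}
for author_id, blog_id in blogs:
    shards[shard_for(author_id)].append(blog_id)

# Both posts by author-42 are co-located on the same shard.
print(shards[shard_for("author-42")])
```

Taking the hash modulo the shard count is the simplest mapping. Its drawback is that changing `NUM_SHARDS` moves most keys to a different shard, which is why many systems use consistent hashing or a fixed set of virtual partitions instead.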

What is the main challenge when querying on an attribute other than the partition key in a sharded database?

- The database proxy must fan out the request to all nodes, execute the query on each node, and merge the results before sending them back to the user. This is slow and inefficient, and if any shard is slow or unavailable, the query can time out or return incomplete results.
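A minimal scatter-gather sketch of that fan-out, assuming in-memory lists stand in for shards and a thread pool stands in for parallel network calls. The shard contents and function names are hypothetical; the point is that a category query must visit every shard because the data is partitioned by author.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical shards, partitioned by author_id; each is a list of rows.
shards = [
    [{"blog_id": 1, "author_id": 10, "category": "databases"}],
    [{"blog_id": 2, "author_id": 11, "category": "python"},
     {"blog_id": 3, "author_id": 12, "category": "databases"}],
    [{"blog_id": 4, "author_id": 13, "category": "go"}],
]


def query_shard(shard: list[dict], category: str) -> list[dict]:
    """Executed on one data node: filter that node's rows."""
    return [row for row in shard if row["category"] == category]


def fan_out_by_category(category: str) -> list[dict]:
    """Proxy-side scatter-gather: send the query to every shard, then merge.

    Latency is bounded by the slowest shard. Here an exception from any
    shard fails the whole query; a real proxy must choose between that
    and returning partial results.
    """
    with ThreadPoolExecutor(max_workers=len(shards)) as pool:
        partials = list(pool.map(lambda shard: query_shard(shard, category), shards))
    merged = [row for part in partials for row in part]
    return sorted(merged, key=lambda row: row["blog_id"])


print([row["blog_id"] for row in fan_out_by_category("databases")])  # [1, 3]
```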

What is a global secondary index and how does it help with querying on a secondary attribute?

- A global secondary index is a separate index that is partitioned by a secondary attribute, such as category. A query on that attribute goes only to the index partition that holds the value, instead of fanning out to every shard, which makes it particularly useful when the query does not involve the partition key.
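A minimal sketch of a keys-only GSI, assuming the base table and the index each have their own in-memory partitions and both use the same hash-modulo placement. `NUM_PARTITIONS`, `put_blog`, and `query_by_category` are hypothetical names. Base rows are placed by `author_id`, but index entries are placed by `category`, so a category query reads exactly one index partition.

```python
import hashlib
from collections import defaultdict

NUM_PARTITIONS = 3  # hypothetical; base table and index share the count here


def partition_for(key: str) -> int:
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % NUM_PARTITIONS


# Base table: partitioned by author_id; each partition maps blog_id -> row.
base = [{} for _ in range(NUM_PARTITIONS)]
# GSI: its own partitions, keyed by category; entries are blog_ids.
gsi = [defaultdict(list) for _ in range(NUM_PARTITIONS)]


def put_blog(blog_id: str, author_id: str, category: str) -> None:
    row = {"blog_id": blog_id, "author_id": author_id, "category": category}
    base[partition_for(author_id)][blog_id] = row
    # The index entry is placed by hashing the category, not the author.
    gsi[partition_for(category)][category].append(blog_id)


def query_by_category(category: str) -> list[str]:
    """Reads exactly one index partition instead of every base partition."""
    return sorted(gsi[partition_for(category)].get(category, []))


put_blog("b1", "author-1", "databases")
put_blog("b2", "author-2", "databases")
put_blog("b3", "author-1", "python")
print(query_by_category("databases"))  # ['b1', 'b2']
```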

How does storing the entire blog object in a global secondary index affect query performance?

- Storing the entire blog object in a global secondary index speeds up queries, because results can be returned straight from the index instead of requiring a follow-up lookup in the data shards for each match. The cost is a larger index, so the choice is a trade-off between space efficiency and query speed.
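A small sketch that counts reads for the two designs, assuming each index read and each base-table read is one round trip. The data and function names are hypothetical. A keys-only index needs one index read plus one base read per match; a fully projected index answers from the index alone.

```python
from dataclasses import dataclass


@dataclass
class Blog:
    blog_id: str
    author_id: str
    category: str
    body: str


# Base table; in a real deployment each base[...] read may be a network hop.
base = {
    "b1": Blog("b1", "a1", "databases", "Sharding explained"),
    "b2": Blog("b2", "a2", "databases", "Indexing explained"),
    "b3": Blog("b3", "a1", "python", "Generators"),
}

# Keys-only GSI: small entries, but every hit needs a follow-up base read.
keys_only_gsi = {"databases": ["b1", "b2"], "python": ["b3"]}

# Fully projected GSI: each entry carries the whole object. Python shares
# references here; a real database stores duplicate copies, so it is larger.
full_gsi = {cat: [base[k] for k in keys] for cat, keys in keys_only_gsi.items()}


def query_keys_only(category: str) -> tuple[list[Blog], int]:
    keys = keys_only_gsi.get(category, [])
    reads = 1 + len(keys)  # one index read plus one base read per match
    return [base[k] for k in keys], reads


def query_full(category: str) -> tuple[list[Blog], int]:
    return list(full_gsi.get(category, [])), 1  # the index read alone suffices


print(query_keys_only("databases")[1], query_full("databases")[1])  # 3 1
```

The gap in reads grows with the number of matches, while the fully projected index pays for it in storage and in extra bytes written on every update.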

What is the difference between a global secondary index and a local secondary index?

- A global secondary index is a separate index, partitioned by a secondary attribute, that covers data from all shards. A local secondary index lives on each shard and indexes only that shard's data, so it helps only when the query includes the partition key: the partition key routes the query to a single shard, and the local index then narrows the search within it, with no global index required.
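A minimal sketch of a local secondary index, assuming each shard is an in-memory object that holds its rows plus its own index on `(author_id, category)`. The `Shard` class and helper names are hypothetical. Because the index sits on the same node as the rows, both are updated in the same write.

```python
import hashlib
from collections import defaultdict

NUM_SHARDS = 3  # hypothetical cluster size


def shard_for(key: str) -> int:
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % NUM_SHARDS


class Shard:
    """One data node holding base rows plus its own local secondary index."""

    def __init__(self) -> None:
        self.rows: dict[str, dict] = {}
        # (author_id, category) -> blog_ids, covering this shard's rows only.
        self.local_index = defaultdict(list)

    def put(self, row: dict) -> None:
        self.rows[row["blog_id"]] = row
        # Same node, same write: row and index entry change together.
        self.local_index[(row["author_id"], row["category"])].append(row["blog_id"])


shards = [Shard() for _ in range(NUM_SHARDS)]


def put_blog(blog_id: str, author_id: str, category: str) -> None:
    row = {"blog_id": blog_id, "author_id": author_id, "category": category}
    shards[shard_for(author_id)].put(row)


def query_lsi(author_id: str, category: str) -> list[str]:
    """The partition key picks one shard; the local index does the rest."""
    shard = shards[shard_for(author_id)]
    return sorted(shard.local_index.get((author_id, category), []))


put_blog("b1", "a1", "databases")
put_blog("b2", "a1", "python")
put_blog("b3", "a2", "databases")
put_blog("b4", "a1", "databases")
print(query_lsi("a1", "databases"))  # ['b1', 'b4']
```

The limitation is visible in the signature: `query_lsi` cannot answer "all blogs in a category" without an `author_id`, so that query would still have to fan out to every shard.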

Why are global secondary indexes considered expensive to manage and maintain?

- Global secondary indexes are expensive to manage and maintain because they must be kept in sync with the main data. Every update to a base row must also be applied to each affected index entry, which may live on a different node, and this becomes resource-intensive when there are many indexes or a high volume of updates.
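To make the maintenance overhead concrete, here is a sketch of a hypothetical `Table` that synchronously maintains one GSI per indexed attribute and counts index mutations. It assumes each mutation is a separate write; in a distributed store those entries may sit on other nodes, so each can be a separate network call. Changing an indexed attribute costs two index writes: delete the old entry, insert the new one.

```python
from collections import defaultdict


class Table:
    """Hypothetical table that synchronously maintains one GSI per attribute."""

    def __init__(self, indexed_attrs: list[str]):
        self.rows: dict[str, dict] = {}
        # attribute -> (attribute value -> set of primary keys)
        self.gsis = {attr: defaultdict(set) for attr in indexed_attrs}
        self.index_writes = 0  # counts index mutations to expose the overhead

    def put(self, pk: str, row: dict) -> None:
        old = self.rows.get(pk, {})
        for attr, index in self.gsis.items():
            old_val, new_val = old.get(attr), row.get(attr)
            if old_val == new_val:
                continue  # attribute unchanged: no index work
            if old_val is not None:
                index[old_val].discard(pk)  # remove the stale entry
                self.index_writes += 1
            if new_val is not None:
                index[new_val].add(pk)  # add the new entry
                self.index_writes += 1
        self.rows[pk] = row


t = Table(["category", "author_id", "language"])
t.put("b1", {"category": "databases", "author_id": "a1", "language": "en"})
print(t.index_writes)  # 3: one insert per index
t.put("b1", {"category": "systems", "author_id": "a1", "language": "en"})
print(t.index_writes)  # 5: category entry deleted and re-inserted
```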

What is the typical limit on the number of global secondary indexes that can be created in a distributed database?

- Many distributed databases limit the number of global secondary indexes per table to keep maintenance and synchronization costs in check. The video cites a typical limit of 5 to 7 global secondary indexes; the exact limit varies from one database to another.

How does the concept of strong consistency affect the implementation of secondary indexes?

- Strong consistency requires that every update to the data is immediately reflected in the indexes, so that data and indexes are always in sync. This is challenging to achieve, especially with a large number of global secondary indexes, and is one of the reasons these indexes are expensive to maintain.
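A sketch contrasting the two update paths, assuming a hypothetical `Store` with one category index that is updated either synchronously (inside the write) or asynchronously (from a backlog drained later). With the asynchronous path, a read issued right after the write can miss the new row; that window is exactly what strong consistency rules out. Across nodes, the synchronous path typically needs some form of coordination between the base shard and the index partition, which adds to the cost.

```python
from collections import defaultdict, deque


class Store:
    """Hypothetical store with one category index, maintained sync or async."""

    def __init__(self, synchronous: bool):
        self.synchronous = synchronous
        self.rows: dict[str, str] = {}  # blog_id -> category
        self.index = defaultdict(set)   # category -> blog_ids
        self.pending: deque = deque()   # index updates not yet applied

    def put(self, pk: str, category: str) -> None:
        update = (pk, self.rows.get(pk), category)
        self.rows[pk] = category
        if self.synchronous:
            self._apply(update)          # index is current before put() returns
        else:
            self.pending.append(update)  # index will catch up later

    def _apply(self, update: tuple) -> None:
        pk, old, new = update
        if old is not None:
            self.index[old].discard(pk)
        self.index[new].add(pk)

    def propagate(self) -> None:
        """Drain the backlog, standing in for a background replication step."""
        while self.pending:
            self._apply(self.pending.popleft())

    def query(self, category: str) -> list[str]:
        return sorted(self.index.get(category, set()))


strong, lazy = Store(synchronous=True), Store(synchronous=False)
for store in (strong, lazy):
    store.put("b1", "databases")
print(strong.query("databases"), lazy.query("databases"))  # ['b1'] []
lazy.propagate()
print(lazy.query("databases"))  # ['b1']
```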

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

How do Databases work? Understand the internal architecture in simplest way possible!

Sistem Manajemen Database Ke 2

SISTEM BASIS DATA 1 (PART 1) - KONSEP DASAR, PRINSIP DAN TUJUAN BASIS DATA

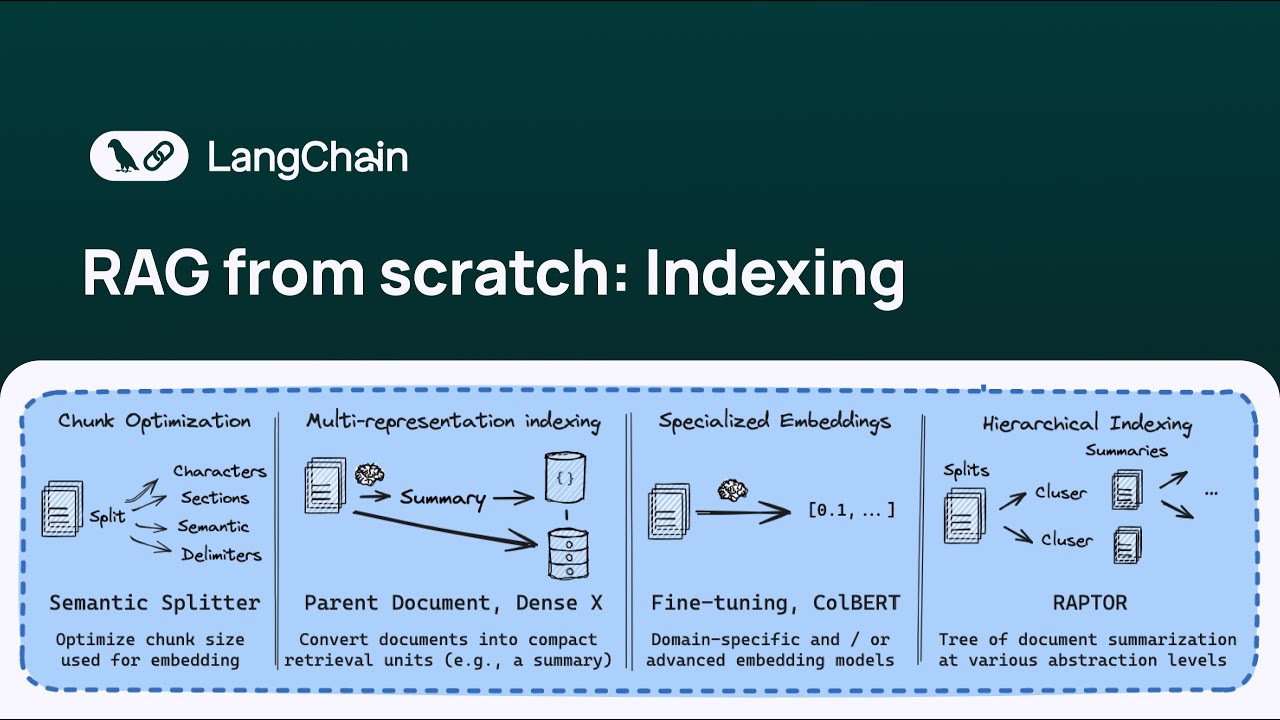

RAG from scratch: Part 12 (Multi-Representation Indexing)

Tutorial 7- Python Sets, Dictionaries And Tuples And Its Inbuilt Functions In Hindi

Транзакции | Введение | ACID | CAP | Обработка ошибок

5.0 / 5 (0 votes)