Lecture 1.3 — Some simple models of neurons — [ Deep Learning | Geoffrey Hinton | UofT ]

Summary

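TLDR: The video introduces several relatively simple models of neurons, starting with simple linear and threshold neurons and moving on to somewhat more complex ones. These models are much simpler than real neurons, but they are still rich enough to build neural networks that can do interesting kinds of machine learning. The video stresses the importance of idealization: stripping away complicated details so that we can apply mathematics and understand the main principles, while taking care not to remove the properties that really matter. It covers linear neurons, binary threshold neurons, rectified linear neurons, sigmoid neurons, and stochastic binary neurons. Each model computes its output in its own way, and these input-output characteristics are essential for understanding how neural networks work. The video also discusses how the logistic function is used in neuron models and how these models relate to the way the brain processes information.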

Takeaways

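- 🧠 Simplified models help us understand complex systems: simplifying neuron models makes it easier to apply mathematics and analogies, and so to understand how the brain works.

- 📈 Linear neurons are limited: they are simple but computationally limited, and they may not fully reflect the complexity of real neurons.

- 🔄 Binary output of threshold neurons: a McCulloch-Pitts threshold neuron computes a weighted sum of its inputs and outputs 1 if that sum exceeds a threshold and 0 otherwise, which models the truth value of a logical proposition.

- 📊 Activation functions matter: an activation function such as the sigmoid gives a smooth, bounded output, which is crucial for learning in machine learning.

- 🔢 Logical neurons and brain computation: it was once believed that the brain computes much like logic, but attention later shifted to how the brain combines many sources of unreliable evidence.

- ⚡️ Two equivalent formulations of the threshold neuron: the total input Z can either include the bias term (and be compared with zero) or exclude it (and be compared with a threshold); the two are mathematically equivalent when the threshold equals minus the bias.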

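- 📉 Nonlinearity of the ReLU (rectified linear unit): a ReLU outputs 0 when its input is below 0 and grows linearly when its input is above 0; units like this are very common in neural networks.

- 🎢 Smooth derivatives of sigmoid neurons: the derivatives of a sigmoid neuron change continuously and smoothly, which makes these neurons well behaved in learning algorithms.

- 🎰 Probabilistic decisions of stochastic binary neurons: these neurons use the logistic function to compute the probability of producing a spike, then randomly output 1 or 0 according to that probability.

- 🔁 Stochastic ReLUs: the output of a rectified linear unit can be treated as the rate at which spikes are produced, while the actual spike times are random, following a Poisson process.

- 🤖 Practical usefulness: even neuron models that do not exactly match the behavior of real neurons can be very useful in machine learning practice.

- 🚀 The value of understanding simplified models: even when we know a model is wrong, understanding it helps us build more complex models that are closer to reality.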
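The stochastic-ReLU takeaway can be illustrated with a minimal Python sketch. It assumes that the rectified output relu(z) is read as a firing rate (spikes per unit time) and simulates a Poisson process by summing exponentially distributed gaps between spikes. The helper names, the one-time-unit window, and the random seed are illustrative choices, not details from the lecture.

```python
import random


def relu(z: float) -> float:
    """Rectified linear output: 0 below zero, z above zero."""
    return max(0.0, z)


def poisson_spike_count(z: float, duration: float, rng: random.Random) -> int:
    """Hypothetical helper: treat relu(z) as a firing rate and count the
    spikes of a Poisson process over `duration` time units."""
    rate = relu(z)
    if rate == 0.0:
        return 0  # a silent unit never spikes
    t, count = 0.0, 0
    while True:
        t += rng.expovariate(rate)  # exponential gap until the next spike
        if t > duration:
            return count
        count += 1


rng = random.Random(0)
counts = [poisson_spike_count(2.0, 1.0, rng) for _ in range(10_000)]
mean_count = sum(counts) / len(counts)
print(f"mean spikes per window: {mean_count:.3f}")  # close to rate * duration = 2.0
```

The rate fixes only how many spikes occur on average; exactly when each spike happens is left to chance, which is the split between deterministic rate and random timing that the takeaway describes.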

Q & A

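What is the simplest neuron model mentioned in the video?

- The simplest model is the linear neuron. Its output y is the neuron's bias b plus the weighted sum of the activities on its input connections: y = b + Σ_i x_i w_i.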
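As a minimal illustration, the formula y = b + Σ_i x_i w_i can be written as a short Python function. The function and argument names are assumptions made for this sketch, and plain lists stand in for what would usually be vectors.

```python
def linear_neuron(x: list[float], w: list[float], b: float) -> float:
    """Output y = b + sum_i x_i * w_i."""
    if len(x) != len(w):
        raise ValueError("x and w must have the same length")
    return b + sum(xi * wi for xi, wi in zip(x, w))


y = linear_neuron(x=[1.0, 2.0, 3.0], w=[0.5, 0.5, 1.0], b=-1.0)
print(y)  # 3.5
```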

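What are the characteristics of the binary threshold neuron proposed by McCulloch and Pitts?

- A McCulloch-Pitts binary threshold neuron first computes a weighted sum of its inputs and then sends out a spike of activity if that weighted sum exceeds a threshold. McCulloch and Pitts treated each spike as the truth value of a proposition: each neuron combines the truth values it receives from other neurons to produce a truth value of its own.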
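To make the truth-value reading concrete, here is a minimal Python sketch of a McCulloch-Pitts unit. The weights and thresholds that turn a single unit into AND or OR are hypothetical choices for illustration; the lecture only describes the general idea of combining truth values.

```python
def mcculloch_pitts(inputs: list[int], weights: list[float], threshold: float) -> int:
    """Binary threshold unit: 1 if the weighted sum exceeds the threshold, else 0."""
    z = sum(x * w for x, w in zip(inputs, weights))
    return 1 if z > threshold else 0


def logical_and(p: int, q: int) -> int:
    # Only when both inputs are true (1) does the sum 2 exceed 1.5.
    return mcculloch_pitts([p, q], [1.0, 1.0], threshold=1.5)


def logical_or(p: int, q: int) -> int:
    # A single true input already makes the sum exceed 0.5.
    return mcculloch_pitts([p, q], [1.0, 1.0], threshold=0.5)


for p in (0, 1):
    for q in (0, 1):
        print(f"p={p} q={q}  AND={logical_and(p, q)}  OR={logical_or(p, q)}")
```

The thresholds sit halfway between possible sums, so the result does not depend on whether "exceeds" is read as strict or non-strict.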

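What is a rectified linear neuron?

- A rectified linear neuron first computes a linear weighted sum of its inputs, z, and then produces an output that depends on z: if z is below zero, the output is zero; if z is above zero, the output equals z. It combines properties of linear neurons and binary threshold neurons.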
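A minimal Python sketch of this rule follows. It assumes the total input z includes the bias, as it does for the linear neuron; the function name and the example numbers are illustrative.

```python
def rectified_linear_neuron(x: list[float], w: list[float], b: float) -> float:
    """Compute z = b + sum_i x_i * w_i, then return z if z > 0, else 0."""
    z = b + sum(xi * wi for xi, wi in zip(x, w))
    return z if z > 0 else 0.0


print(rectified_linear_neuron([1.0, 1.0], [2.0, -1.0], -3.0))  # z = -2.0, so 0.0
print(rectified_linear_neuron([1.0, 1.0], [2.0, 1.0], 0.5))    # z = 3.5, so 3.5
```

Below zero the unit behaves like a binary threshold unit that has not fired; above zero it behaves exactly like a linear neuron.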

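What kind of function does a sigmoid neuron compute?

- A sigmoid neuron gives a real-valued output that is a smooth and bounded function of its total input, usually the logistic function. The total input is the bias plus the weighted activities on the input lines.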
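The logistic sigmoid neuron can be sketched in a few lines of Python. The logistic function is 1 / (1 + e^(-z)); the branch on the sign of z is an implementation choice (not from the lecture) that keeps math.exp from overflowing for very large |z|. Function names are assumptions for this sketch.

```python
import math


def logistic(z: float) -> float:
    """Logistic function 1 / (1 + e^-z), written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)  # e^z is tiny but finite when z is very negative
    return e / (1.0 + e)


def sigmoid_neuron(x: list[float], w: list[float], b: float) -> float:
    """Total input z = b + sum_i x_i * w_i, squashed into the range 0..1."""
    z = b + sum(xi * wi for xi, wi in zip(x, w))
    return logistic(z)


print(sigmoid_neuron([1.0, -1.0], [0.5, 0.5], 0.0))  # z = 0.0, so 0.5
```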

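Why are sigmoid neurons useful in machine learning?

- Their derivatives change continuously, which makes them well behaved for learning, especially with gradient-based algorithms such as gradient descent.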
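The smoothness claim can be checked numerically. For the logistic function y = 1 / (1 + e^(-z)), the derivative has the standard closed form dy/dz = y(1 - y); this identity is a well-known result rather than something stated in the answer above. The sketch compares it with a central finite difference at a few points; the step size h is an arbitrary choice.

```python
import math


def logistic(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def logistic_derivative(z: float) -> float:
    """dy/dz for y = logistic(z), using the identity dy/dz = y * (1 - y)."""
    y = logistic(z)
    return y * (1.0 - y)


h = 1e-5  # step size for the central-difference check
for z in (-4.0, -1.0, 0.0, 1.0, 4.0):
    numeric = (logistic(z + h) - logistic(z - h)) / (2 * h)
    print(f"z={z:+.1f}  analytic={logistic_derivative(z):.6f}  numeric={numeric:.6f}")
```

The derivative peaks at z = 0 (where it equals 0.25) and decays smoothly on both sides, with no jumps like the one a binary threshold unit has at its threshold.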

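How does a stochastic binary neuron work?

- A stochastic binary neuron computes its total input with the same equation as a logistic unit and uses the logistic function to compute a real value, which it treats as the probability of emitting a spike. Instead of outputting that probability as a real number, it makes a probabilistic decision: its actual output is either 1 or 0, so it has intrinsic randomness.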
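A minimal Python sketch of this procedure: compute z as for a logistic unit, turn it into a probability p with the logistic function, then emit 1 with probability p. The function names, the seed, and the sample size are illustrative assumptions; averaging many samples should recover p.

```python
import math
import random


def logistic(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def stochastic_binary_neuron(x: list[float], w: list[float], b: float,
                             rng: random.Random) -> int:
    """Same total input as a logistic unit, but output 1 (a spike) with
    probability logistic(z) and 0 otherwise."""
    z = b + sum(xi * wi for xi, wi in zip(x, w))
    p = logistic(z)
    return 1 if rng.random() < p else 0


rng = random.Random(42)
samples = [stochastic_binary_neuron([1.0], [1.0], 0.0, rng) for _ in range(10_000)]
spike_rate = sum(samples) / len(samples)
print(f"fraction of 1s: {spike_rate:.3f}  expected: {logistic(1.0):.3f}")
```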

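Why do we need idealization to understand complex systems?

- Idealization simplifies a complex system by removing complicated details that are not essential, which lets us apply mathematics and draw analogies with other, familiar systems. This helps us understand the main principles; once those are understood, complexity can be added back step by step to make the model more faithful to reality.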

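In machine learning, why is it still worth understanding models we know are wrong?

- Such models are still valuable because they help us grasp the basic principles, and simplified models can be very useful in practice, particularly in some machine learning applications.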

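How should we understand the relationship between a linear neuron's output y and its inputs?

- In a linear neuron, the output y is the bias plus the weighted sum of the inputs. If we plot the bias plus the weighted input activities on the x-axis and the output y on the y-axis, we get a straight line through the origin, because y is exactly the quantity on the x-axis.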


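How is the input-output function of a binary threshold neuron expressed?

- It can be written in two ways. In the first, if the weighted input z exceeds the threshold, the output is 1; otherwise it is 0. In the second, the bias is included in the total input, and the output is 1 if the total input exceeds zero and 0 otherwise. The two forms are equivalent when the bias equals minus the threshold.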
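The equivalence of the two forms can be checked with a short Python sketch: setting b = -θ makes "z exceeds θ" and "b + z exceeds 0" the same test. The weights and threshold below are arbitrary illustrative values, and the function names are assumptions for this sketch.

```python
from itertools import product


def threshold_form(x: tuple[float, ...], w: list[float], theta: float) -> int:
    """z excludes the bias; fire if z exceeds the threshold theta."""
    z = sum(xi * wi for xi, wi in zip(x, w))
    return 1 if z > theta else 0


def bias_form(x: tuple[float, ...], w: list[float], b: float) -> int:
    """z includes the bias; fire if z exceeds zero."""
    z = b + sum(xi * wi for xi, wi in zip(x, w))
    return 1 if z > 0 else 0


w, theta = [0.7, -0.4, 0.2], 0.25  # arbitrary illustrative values
inputs = list(product((0.0, 1.0), repeat=3))
agree = all(threshold_form(x, w, theta) == bias_form(x, w, b=-theta) for x in inputs)
print(f"forms agree on all {len(inputs)} binary inputs: {agree}")
```

The bias form is often preferred in practice because the bias can then be learned like any other weight, by treating it as the weight on an extra input that is always 1.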

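What does the input-output curve of a rectified linear neuron look like?

- When z is below zero, the output is zero; when z is above zero, the output equals z. So the unit makes a hard decision at zero, but above zero it is linear.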

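Why might logic no longer be the best paradigm for how the brain works?

- Because researchers are now more interested in how the brain combines many different sources of unreliable evidence than in pure logical operations. This suggests that the brain works in a more complex way, one that involves handling probability and uncertainty.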

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

Ilya Sutskever | AI will be omnipotent in the future | Everything is impossible becomes possible

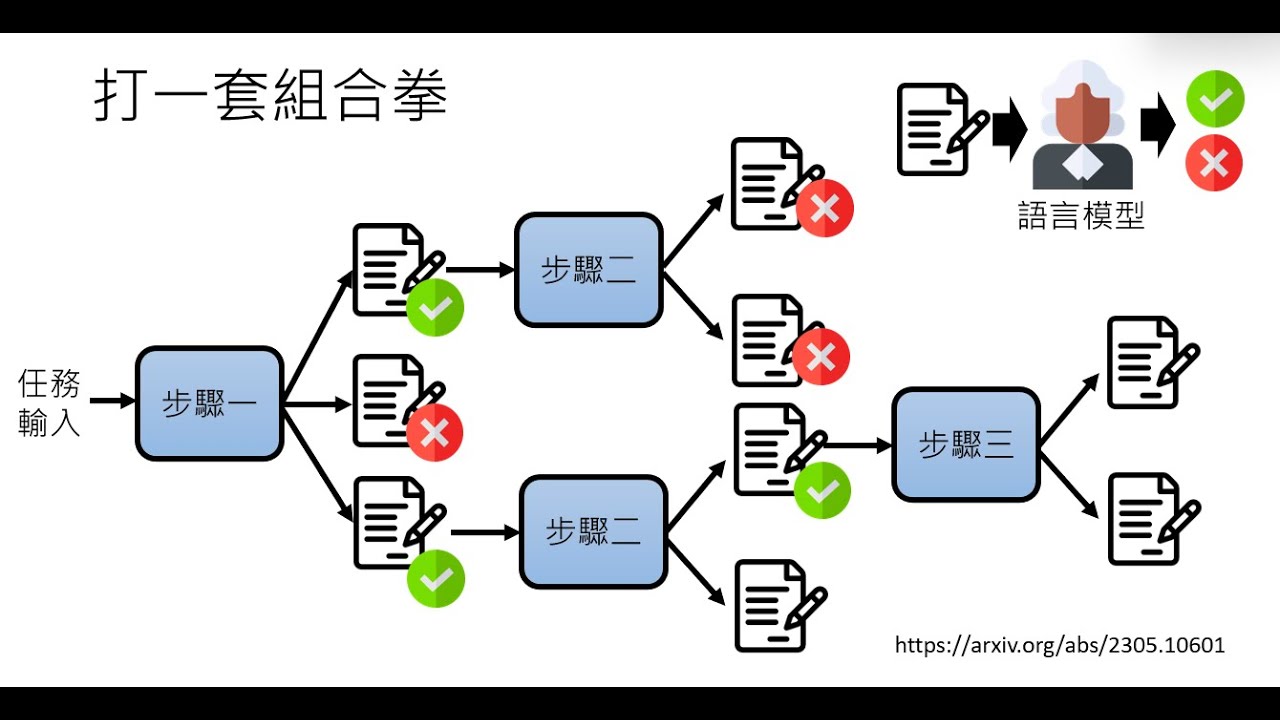

Understand DSPy: Programming AI Pipelines

【漫画・小説・シナリオ】プロのシナリオライターが教える! 超簡単なストーリーの作り方【創作論】

The SECRET to Clean Unity GameObject Communication

【生成式AI導論 2024】第4講:訓練不了人工智慧?你可以訓練你自己 (中) — 拆解問題與使用工具

I Built a Sports Betting Bot with ChatGPT

5.0 / 5 (0 votes)