Lecture 1.2 — What are neural networks — [ Deep Learning | Geoffrey Hinton | UofT ]

Summary

TLDR: This video explores the real workings of neurons in the human brain, which inspire artificial neural networks. It discusses the brain's parallel computational abilities, the structure and function of cortical neurons, and how synapses adapt to enable learning. While the course primarily focuses on algorithms rather than the brain's mechanics, it highlights the importance of understanding neural networks for practical problem-solving. The video also touches on the modularity of the cortex and its capacity for flexible learning, illustrating how different brain regions adapt to various functions based on experience.

Takeaways

- 🧠 Understanding the brain's functioning is crucial for developing artificial neural networks, which are inspired by real neurons.

- 🔄 Computer simulations are necessary for studying complex brain mechanisms, as direct experimentation can be invasive and impractical.

- ⚡ The brain computes using a large, parallel network of relatively slow neurons, differing significantly from conventional serial processors.

- 📊 Learning algorithms inspired by the brain can effectively solve practical problems, even if they do not mirror how the brain actually works.

- 🔗 A typical cortical neuron consists of a cell body, an axon that sends signals to other neurons, and a dendritic tree that receives them; the contact points between neurons are synapses.

- 📉 Synapses adapt their strength based on local signals, which is essential for learning and performing various computations.

- 🌍 The cortex is modular, with different areas specialized for distinct functions, and damage to specific areas can result in loss of abilities.

- 🔄 If brain damage occurs early in life, functions can often relocate to different regions, showcasing the brain's flexibility.

- 🔍 The cortex comprises general-purpose neurons that can adapt to perform specialized tasks based on experience.

- ⚙️ Conventional computers get their flexibility from a stored sequential program run on a fast central processor, whereas the brain gets its flexibility from learning.

Q & A

What is the primary purpose of studying real neurons in the brain?

- The primary purpose is to understand how the brain functions, which can inform the development of artificial neural networks and improve our understanding of computation and learning.

Why are computer simulations necessary for understanding brain function?

- Computer simulations are necessary because the brain is complex and physical experiments are difficult to conduct without damaging it. Simulations help interpret findings from empirical studies.

How does the brain's parallel processing differ from conventional serial processors?

- The brain uses a vast network of relatively slow neurons to process information in parallel, while conventional serial processors execute tasks one step at a time. This makes the brain more efficient for certain tasks, such as vision.

What role do synapses play in neuronal communication?

- Synapses are contact points between neurons where neurotransmitters are released, facilitating communication by allowing charge to be injected into the postsynaptic neuron, which can lead to the generation of action potentials.

How do neurotransmitters affect synaptic weights?

- The input arriving at a synapse can either increase (positive weight) or decrease (negative weight) the likelihood that the postsynaptic neuron fires. The strength of that effect is the synaptic weight, and adapting the weights is what drives learning.
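The idea of positive and negative weights can be sketched as a simple threshold unit. This is a minimal illustration, not a model taken from the video: the function name `neuron_fires`, the weight values, and the threshold are all assumptions chosen for the example.

```python
def neuron_fires(inputs, weights, threshold):
    """Return True if the weighted sum of the inputs reaches the threshold.

    Hypothetical simplification: each input is 1 (presynaptic neuron active)
    or 0 (inactive), and each weight stands for one synapse's strength.
    """
    total_charge = sum(x * w for x, w in zip(inputs, weights))
    return total_charge >= threshold


# Two excitatory synapses (positive weights) and one inhibitory synapse
# (negative weight). The values are illustrative only.
weights = [0.6, 0.5, -0.8]
threshold = 1.0

print(neuron_fires([1, 1, 0], weights, threshold))  # only excitatory inputs active
print(neuron_fires([1, 1, 1], weights, threshold))  # inhibitory input active too
```

With only the two excitatory inputs active, the summed input exceeds the threshold and the unit fires; once the inhibitory input is also active, the sum drops below the threshold and it stays silent.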

What is the significance of synaptic adaptation in learning?

- Synaptic adaptation is crucial for learning, as it allows synapses to change in strength based on local signals, enabling the brain to perform complex computations and learn new information.
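To make "change in strength based on local signals" concrete, here is a small sketch of a local update rule. The summary does not say which rule the brain uses, so this assumes a simple Hebbian-style rule for illustration only; `hebbian_update` and `learning_rate` are hypothetical names. The point is that the update uses only the activity on either side of the synapse, with no global information.

```python
def hebbian_update(weight, pre_activity, post_activity, learning_rate=0.1):
    """Return a new weight computed from locally available signals only.

    Assumed rule (not from the video): strengthen the synapse in proportion
    to the product of presynaptic and postsynaptic activity.
    """
    return weight + learning_rate * pre_activity * post_activity


weight = 0.5
# Both neurons active: the synapse is strengthened.
print(hebbian_update(weight, pre_activity=1.0, post_activity=1.0))
# Presynaptic neuron silent: the weight is unchanged.
print(hebbian_update(weight, pre_activity=0.0, post_activity=1.0))
```

Real learning algorithms covered in courses like this one use different update rules; the sketch only shows what "local" means.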

What does modularity in the cortex refer to?

- Modularity in the cortex refers to the brain's organization into distinct regions that specialize in different functions, allowing for efficient processing and specific responses to sensory inputs.

How does the brain demonstrate functional plasticity?

- Functional plasticity is shown when the functions of a damaged brain area are taken over by other regions, which is most likely when the damage occurs early in development. This highlights the brain's adaptability.

What evidence supports the idea that the cortex is made of general-purpose components?

- Experiments with baby ferrets show that when visual input is rerouted to the auditory cortex, that area learns to process visual information. This suggests that cortical tissue is general-purpose and becomes specialized through experience.

How does the brain's learning process compare to traditional computing methods?

- The brain's learning process relies on the adaptation of synaptic weights based on experience, which differs from traditional computing methods that typically depend on a stored sequential program and a fast central processor.

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

How Neural Networks Work

Introduction to Neural Networks with Example in HINDI | Artificial Intelligence

Jaringan Syaraf Tiruan [1] : Konsep Dasar JST

Deep Learning(CS7015): Lec 2.1 Motivation from Biological Neurons

1. Pengantar Jaringan Saraf Tiruan

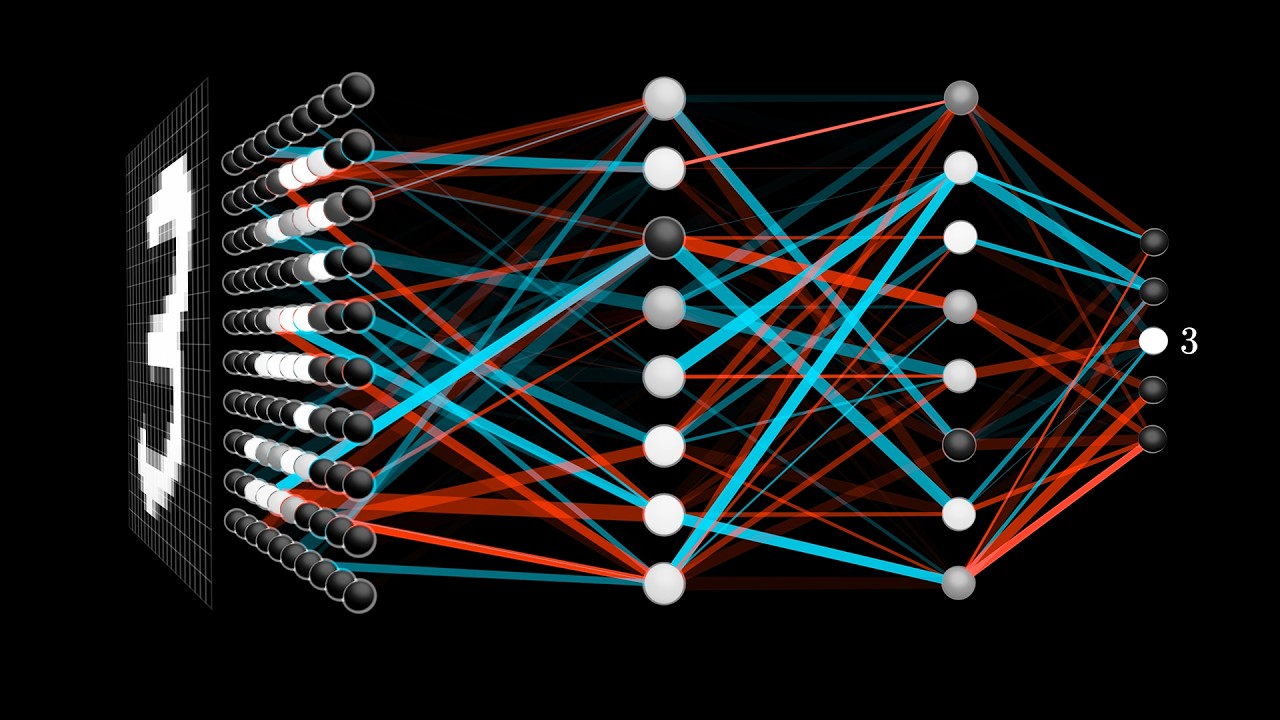

But what is a neural network? | Chapter 1, Deep learning

5.0 / 5 (0 votes)