0625 Independence of Random Variables (Independencia de variables aleatorias)

Summary

TLDR: This script explores the independence of random variables, defining it through joint and marginal distribution functions. It covers the discrete and continuous cases and works through examples showing how to verify (or refute) independence. It then extends the definition to infinite collections of random variables and explains why independence matters in probability theory, notably as a hypothesis in the Law of Large Numbers and the Central Limit Theorem. It also discusses practical applications and the intuitive justification for assuming independence in various models.

Takeaways

- 😀 Independence of random variables is defined by requiring the joint distribution function to equal the product of the marginal distribution functions of the individual random variables.

- 😀 The definition applies to random variables of any type (discrete, continuous, or otherwise), and the equality must hold for every choice of real values x1, ..., xn.

- 😀 If the equality fails for even one choice of real numbers x1, ..., xn, the random variables are not independent.

- 😀 For discrete random variables, the independence condition can be written as a product of the probabilities of individual events: P(X1 = x1, ..., Xn = xn) = P(X1 = x1) * ... * P(Xn = xn) for all values x1, ..., xn.

- 😀 Independence simplifies probability calculations and is often assumed as a hypothesis in results of probability theory.

- 😀 When a joint probability density or mass function exists, the independence condition can be expressed as that joint function being equal to the product of the marginal densities or mass functions.

- 😀 For jointly continuous random variables, the independence condition reads f(x1, x2, ..., xn) = f1(x1) * f2(x2) * ... * fn(xn), where f is the joint density and fi is the marginal density of Xi.

- 😀 In the first example, with discrete variables, independence fails: for at least one pair of values, the joint probability differs from the product of the marginal probabilities.

- 😀 In the second example, with continuous variables, independence holds: the joint distribution function equals the product of the marginal distribution functions.

- 😀 Independence extends to infinite collections of random variables: such a collection is independent if every finite subcollection of it is independent.

- 😀 The concept of independence for random vectors can also be defined analogously to that of individual random variables and extended to both finite and infinite collections of vectors.

Q & A

What is the definition of independence for random variables in the context of this script?

- Independence of random variables means that the joint distribution function of the collection equals the product of the marginal distribution functions. Mathematically, X1, X2, ..., Xn are independent if F(x1, x2, ..., xn) = F1(x1) * F2(x2) * ... * Fn(xn) for all real x1, ..., xn, where F is the joint distribution function and Fi is the marginal distribution function of Xi.
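
A minimal sketch of what "for all real values" means in practice, assuming two Exponential(1) marginals (a choice made here for illustration, not the script's example). The helper names `exp_cdf`, `joint_cdf_independent`, `joint_cdf_y_equals_x` and `find_violation` are hypothetical. Finding one point where F(x, y) differs from F1(x) * F2(y) proves dependence; finding none on a finite grid proves nothing, because the definition quantifies over all reals.

```python
import math

def exp_cdf(t: float) -> float:
    """CDF of an Exponential(1) random variable (assumed marginal)."""
    return 1.0 - math.exp(-t) if t > 0 else 0.0

def joint_cdf_independent(x: float, y: float) -> float:
    """Joint CDF of independent X, Y ~ Exponential(1): product form by construction."""
    return exp_cdf(x) * exp_cdf(y)

def joint_cdf_y_equals_x(x: float, y: float) -> float:
    """Joint CDF when Y = X: P(X <= x, X <= y) = F(min(x, y))."""
    return exp_cdf(min(x, y))

def find_violation(joint, marg_x, marg_y, grid, tol=1e-12):
    """Return the first grid point where joint(x, y) != marg_x(x) * marg_y(y), else None.

    A returned point proves dependence; None does NOT prove independence.
    """
    for x in grid:
        for y in grid:
            if abs(joint(x, y) - marg_x(x) * marg_y(y)) > tol:
                return (x, y)
    return None

grid = [0.0, 0.5, 1.0, 2.0, 5.0]
print(find_violation(joint_cdf_independent, exp_cdf, exp_cdf, grid))  # None
print(find_violation(joint_cdf_y_equals_x, exp_cdf, exp_cdf, grid))   # (0.5, 0.5)
```

In the Y = X case both marginals are still Exponential(1), yet F(0.5, 0.5) = 1 - e^(-0.5) ≈ 0.393 while F1(0.5) * F2(0.5) ≈ 0.155, so a single grid point settles the question.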

What does the script say about the conditions for independence of random variables?

- The script emphasizes that the definition places no requirement on the type of the random variables (discrete, continuous, or otherwise); the only condition is that the joint distribution function factorize into the product of the marginal distribution functions for every choice of real values.

How does the concept of independence apply to discrete random variables?

- For discrete random variables, independence means that the joint probability of any combination of values equals the product of the individual probabilities. For two variables, for example, P(X1 = x1, X2 = x2) = P(X1 = x1) * P(X2 = x2) must hold for every pair of values x1, x2.
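
A short worked instance of this product rule, using two independent rolls of a fair six-sided die (an illustrative example chosen here, not one taken from the script): each of the 36 outcomes has probability 1/36 and each face has marginal probability 1/6, so every cell factorizes.

```latex
P(X_1 = i,\ X_2 = j) = \frac{1}{36} = \frac{1}{6}\cdot\frac{1}{6} = P(X_1 = i)\,P(X_2 = j)
\quad \text{for all } i, j \in \{1, \dots, 6\}
```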

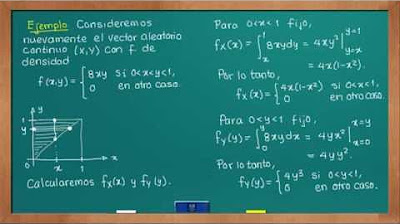

What is the definition of independence for continuous random variables according to the script?

- For jointly continuous random variables, the condition is that the joint probability density function equals the product of the marginal densities: f(x1, x2, ..., xn) = f1(x1) * f2(x2) * ... * fn(xn). When this holds, the random variables are independent.
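
As an illustration (a standard textbook density assumed here, not the script's own example), take f(x, y) = e^(-(x+y)) on the positive quadrant. Integrating out each variable gives the marginals, and their product recovers the joint density everywhere (both sides are 0 when x ≤ 0 or y ≤ 0), so X and Y are independent.

```latex
\begin{aligned}
f(x, y) &= e^{-(x+y)} \quad (x > 0,\ y > 0;\ 0 \text{ otherwise}),\\
f_1(x) &= \int_0^\infty e^{-(x+y)}\,dy = e^{-x} \quad (x > 0),\\
f_2(y) &= \int_0^\infty e^{-(x+y)}\,dx = e^{-y} \quad (y > 0),\\
f_1(x)\,f_2(y) &= e^{-x}e^{-y} = f(x, y).
\end{aligned}
```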

How does the script describe how to check for independence using joint and marginal distributions?

- To check independence, the script first computes the joint distribution and the marginal distributions. If the joint distribution equals the product of the marginals for all possible values, the random variables are independent; if the equality fails for even one combination of values, they are not.
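
The procedure above can be sketched for two discrete variables with a finite joint probability table, assuming the table is given as a dict mapping (x, y) to an exact `Fraction`. The function names `marginals` and `is_independent` and the two example tables are hypothetical illustrations: marginals are obtained by summing out the other variable, then every cell is compared with the product of marginals.

```python
from collections import defaultdict
from fractions import Fraction

def marginals(joint):
    """Sum a joint PMF {(x, y): p} over the other variable to get P(X=x) and P(Y=y)."""
    px, py = defaultdict(Fraction), defaultdict(Fraction)
    for (x, y), p in joint.items():
        px[x] += p
        py[y] += p
    return dict(px), dict(py)

def is_independent(joint):
    """Compare P(X=x, Y=y) with P(X=x) * P(Y=y) on every pair of observed values.

    Pairs absent from `joint` count as probability 0, so a zero cell whose
    marginal product is positive is also caught. Returns (True, None) or
    (False, first_counterexample).
    """
    px, py = marginals(joint)
    for x in px:
        for y in py:
            if joint.get((x, y), Fraction(0)) != px[x] * py[y]:
                return False, (x, y)
    return True, None

half, quarter = Fraction(1, 2), Fraction(1, 4)
coins = {(0, 0): quarter, (0, 1): quarter, (1, 0): quarter, (1, 1): quarter}  # two fair coins
diagonal = {(0, 0): half, (1, 1): half}  # Y = X
print(is_independent(coins))     # (True, None)
print(is_independent(diagonal))  # (False, (0, 0))
```

Exact fractions are used so that the equality test is not disturbed by floating-point rounding.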

What does the first example in the script demonstrate about the independence of random variables?

- The first example demonstrates that the random variables X and Y are not independent: the joint probability P(X = 0, Y = 0) differs from the product P(X = 0) * P(Y = 0) of the marginal probabilities, and one such mismatch is enough to rule out independence.
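
The script's actual table is not reproduced here; as a hypothetical stand-in, let X be uniform on {0, 1} and Y = 1 - X. Then Y = 0 forces X = 1, so the cell (0, 0) has probability 0 while both marginals give 1/2, and that single cell already shows dependence.

```latex
P(X = 0,\ Y = 0) = 0 \neq \tfrac{1}{4} = \tfrac{1}{2}\cdot\tfrac{1}{2} = P(X = 0)\,P(Y = 0)
```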

What conclusion can be drawn from the second example in the script, which deals with continuous random variables?

- The second example shows that for the continuous random variables X and Y, the joint distribution function is the product of the marginal distribution functions. Since this condition holds, X and Y are independent.
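
An empirical sanity check of the same idea, not a proof and not the script's example: assuming X and Y are independent Uniform(0, 1) variables, the empirical joint CDF at a point should be close to the product of the empirical marginal CDFs, while for Y = X it is not. The helper `empirical_gap`, the seed and the sample size are arbitrary choices made for this sketch; small nonzero gaps in the independent case are sampling noise.

```python
import random

def empirical_gap(pairs, a, b):
    """|F_n(a, b) - F_X,n(a) * F_Y,n(b)| computed from empirical CDFs of the sample."""
    n = len(pairs)
    joint = sum(1 for x, y in pairs if x <= a and y <= b) / n
    fx = sum(1 for x, _ in pairs if x <= a) / n
    fy = sum(1 for _, y in pairs if y <= b) / n
    return abs(joint - fx * fy)

rng = random.Random(0)  # fixed seed so the run is reproducible
n = 100_000
independent = [(rng.random(), rng.random()) for _ in range(n)]
us = [rng.random() for _ in range(n)]
dependent = [(u, u) for u in us]  # Y = X

points = [(0.25, 0.75), (0.5, 0.5), (0.9, 0.1)]
for a, b in points:
    print(a, b, round(empirical_gap(independent, a, b), 4), round(empirical_gap(dependent, a, b), 4))
```

For Y = X at (0.5, 0.5) the gap is close to 0.5 - 0.25 = 0.25, far larger than the sampling noise seen in the independent sample.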

How does the script extend the concept of independence to infinite collections of random variables?

- The script extends independence to infinite collections of random variables: an infinite collection is independent if every finite subcollection of it is independent.
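
Because the definition must hold for every finite subcollection, checking only some of them is not enough. A sketch with a standard textbook family (not from the script): X1 and X2 are independent fair bits and X3 = X1 XOR X2. Every pair factorizes, but the full triple does not, so the family is not independent. The names `outcomes`, `prob` and `subcollection_factorizes` are hypothetical.

```python
from fractions import Fraction
from itertools import combinations, product

# Hypothetical model: each (x1, x2) has probability 1/4 and x3 = x1 XOR x2.
outcomes = [((x1, x2, x1 ^ x2), Fraction(1, 4)) for x1, x2 in product((0, 1), repeat=2)]

def prob(event):
    """P(event) for a predicate on the outcome tuple (x1, x2, x3)."""
    return sum((p for w, p in outcomes if event(w)), Fraction(0))

def subcollection_factorizes(idx):
    """Check P(X_i = v_i for i in idx) == product of P(X_i = v_i) for all bit values v."""
    for values in product((0, 1), repeat=len(idx)):
        joint = prob(lambda w: all(w[i] == v for i, v in zip(idx, values)))
        marginal_product = Fraction(1)
        for i, v in zip(idx, values):
            marginal_product *= prob(lambda w: w[i] == v)
        if joint != marginal_product:
            return False
    return True

for size in (2, 3):
    for idx in combinations(range(3), size):
        print(idx, subcollection_factorizes(idx))
# (0, 1) True
# (0, 2) True
# (1, 2) True
# (0, 1, 2) False
```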

Why is the concept of independence important in probability theory, as mentioned in the script?

- Independence is crucial in probability theory because it simplifies calculations and serves as a key hypothesis in fundamental results such as the Law of Large Numbers and the Central Limit Theorem.

How is the independence concept extended to vectors of random variables?

- Independence extends to random vectors in the same way as to individual random variables: two or more random vectors are independent if their joint distribution function factorizes into the product of their marginal distribution functions, and an infinite collection of random vectors is independent if every finite subcollection is.
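
Written out for a finite collection, the vector version reads as follows. The notation is assumed here rather than taken from the script: each Xi is a random vector in R^(d_i), and its distribution function is evaluated componentwise, F_Xi(xi) = P(Xi ≤ xi) with the inequality holding in every coordinate.

```latex
F_{\mathbf{X}_1, \dots, \mathbf{X}_n}(\mathbf{x}_1, \dots, \mathbf{x}_n)
= F_{\mathbf{X}_1}(\mathbf{x}_1)\cdots F_{\mathbf{X}_n}(\mathbf{x}_n)
\quad \text{for all } \mathbf{x}_i \in \mathbb{R}^{d_i},\ i = 1, \dots, n
```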
