Using AI in Software Design: How ChatGPT Can Help With Creating a Solution Architecture | R. Müller

Summary

TL;DR: Ralf D. Müller, an expert in software architecture, discusses the use of AI in software design, focusing on the capabilities of GPT models. He highlights the importance of context in AI interactions and shares practical tips for using chatbots like ChatGPT on complex tasks such as preparing for the iSAQB Advanced exam. Müller emphasizes the iterative process of refining prompts and the potential of AI as a collaborative tool in architectural decision-making, while also addressing data protection and the ethical use of AI.

Takeaways

- 🚀 Ralf D. Müller is a renowned software architecture expert, author, and accredited trainer known for initiating the open-source project docToolchain.

- 📈 Müller discusses the effective use of AI, specifically chatbots like ChatGPT, in software design and in creating solution architectures.

- 💡 The importance of data protection and copyright is highlighted when using AI, emphasizing the need to handle personal and company information with care.

- 📚 Müller shares his experience and tips on using AI to prepare for the iSAQB Advanced exam, noting the differences between the GPT-3 and GPT-4 models.

- 🌟 GPT-4 is recognized for its superior performance in a medical exam study, showcasing its ability to provide higher quality responses compared to GPT-3.

- 🧠 The core functionality of AI models like GPT-4 is likened to an all-knowing monkey rather than a stochastic parrot, indicating a more complex and knowledgeable system.

- 🔍 Müller introduces the concept of 'embeddings' and their role in enhancing AI models, allowing them to access and use additional information beyond the core neural network.

- 🔗 The significance of context in AI interactions is discussed, with strategies provided for maintaining context and ensuring effective communication with AI.

- 📝 Tips for priming AI models are shared, including the use of custom instructions to establish long-term memory and refine the AI's responses.

- 🛠️ Müller demonstrates the practical application of AI to complex tasks, such as the iSAQB Advanced exam, by working iteratively with the AI to generate and refine solutions.

- 📈 The potential of AI as an architectural co-pilot is emphasized, with Müller noting that while AI can provide a good starting point, human guidance and review are still necessary.

Q & A

What is Ralf D. Müller known for in the field of software and architecture?

-Ralf D. Müller is a well-known software architecture expert, author, and accredited trainer. He started the open-source project docToolchain, which focuses on the effective documentation of software architecture. He is also responsible for creating various formats of the arc42 template.

What is the main topic of Ralf D. Müller's session?

-The main topic of the session is 'Using AI in software design', specifically how ChatGPT can assist in creating a solution architecture.

What are the potential dangers of using ChatGPT mentioned in the transcript?

-The potential dangers of using ChatGPT include data protection issues, for both personal data and company information, and copyright concerns when working with non-public files.

How does Ralf D. Müller describe the core functionality of large language models like GPT?

-Müller describes the core functionality of large language models like GPT as a neural network that performs auto-completion, similar to how a mobile phone keyboard predicts the next word based on probabilities.
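
The auto-completion idea can be illustrated with a deliberately tiny sketch. It is not how GPT works internally (GPT uses a neural network over tokens); it only shows the "predict the next word from probabilities" principle with word-pair counts. The corpus and all function names are made up for illustration.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for every word, which words follow it and how often."""
    followers = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers

def next_word_probabilities(followers, word):
    """Turn the raw follower counts for `word` into probabilities."""
    counts = followers.get(word.lower(), Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}

def autocomplete(followers, word):
    """Return the most probable next word, or None if the word is unknown."""
    probs = next_word_probabilities(followers, word)
    return max(probs, key=probs.get) if probs else None

# Hypothetical training text, chosen only to make the counts easy to follow.
corpus = "the architect draws the diagram and the architect reviews the design"
model = train_bigrams(corpus)

print(next_word_probabilities(model, "the"))
print(autocomplete(model, "the"))
```

In this toy corpus "architect" follows "the" in two of four cases, so it is the suggested completion. A large language model does the same kind of ranking, but over a far richer representation of the preceding text.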

What is the difference in performance between GPT-3 and GPT-4 as discussed in the transcript?

-The transcript mentions a study where GPT-4 scored an 82% accuracy rate on a medical exam with roughly 1,000 questions, compared to GPT-3.5 which scored 65% on average and GPT-3 with 75%. This indicates that GPT-4 has a higher quality and more capabilities than GPT-3.

How does Ralf D. Müller suggest maintaining context when interacting with chatbots like GPT?

-Müller suggests starting every session with a prompt that asks the system to begin its answers with a special character such as the greater-than sign (>), which Markdown renders as a quote. As long as the opening prompt is still within the context, the first paragraph of each output starts with that character. Once the context is lost, the system stops producing it, which signals that the session needs to be primed again.
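
A minimal sketch of this check, assuming the replies are available as plain strings. The exact wording of the opening prompt and the helper names are hypothetical, not taken from the talk.

```python
MARKER = ">"  # the special character requested in the opening prompt

def priming_prompt(instructions):
    """Prepend the marker rule to the session's opening prompt."""
    return (
        f"Start the first paragraph of every answer with '{MARKER}'. "
        + instructions
    )

def context_still_active(reply):
    """Return True if the first non-empty line of the reply starts with the marker."""
    for line in reply.splitlines():
        if line.strip():
            return line.lstrip().startswith(MARKER)
    return False  # an empty reply carries no marker

print(priming_prompt("You are my architecture sparring partner."))
print(context_still_active("> Here is a first draft of the context view."))
print(context_still_active("Here is a first draft of the context view."))
```

When the check returns False, the opening prompt has most likely dropped out of the context and the session should be primed again.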

What is the role of embeddings in enhancing the capabilities of a neural network like GPT?

-Embeddings are used to extend the neural network with additional data. They are created from text fragments and stored in a vector database. When interacting with the system, it may query this database for text fragments that fit the prompt, pulling them into the context and enhancing the system's understanding and responses.
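
The retrieval step can be sketched as follows. This is a toy under loud assumptions: `embed` is a bag-of-words stand-in for a real embedding model, `ToyVectorStore` is an in-memory list rather than a real vector database, and the text fragments are invented.

```python
import math
from collections import Counter

def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())

def cosine_similarity(a, b):
    """Cosine of the angle between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

class ToyVectorStore:
    """In-memory list of (vector, fragment) pairs; hypothetical, for illustration."""

    def __init__(self):
        self.entries = []

    def add(self, fragment):
        self.entries.append((embed(fragment), fragment))

    def query(self, prompt, top_k=1):
        """Return the top_k fragments most similar to the prompt."""
        q = embed(prompt)
        ranked = sorted(
            self.entries,
            key=lambda entry: cosine_similarity(q, entry[0]),
            reverse=True,
        )
        return [fragment for _, fragment in ranked[:top_k]]

store = ToyVectorStore()
store.add("arc42 is a template for documenting software architecture")
store.add("the exam task asks for a deployment view of the system")
store.add("quality goals include availability and performance")

prompt = "which template helps with documenting architecture"
context = store.query(prompt, top_k=1)

# The retrieved fragment is pulled into the context ahead of the question.
augmented_prompt = "Context:\n" + "\n".join(context) + "\n\nQuestion: " + prompt
print(augmented_prompt)
```

The model itself is unchanged by this; it simply receives a prompt that already contains the most relevant fragment.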

How does Ralf D. Müller prepare the chatbot for a specific task?

-Müller prepares the chatbot by 'priming' it with a detailed context that includes information about his background, the goal of the session, and any specific requirements or constraints. He also uses custom instructions to guide the chatbot's responses and to give it a kind of 'long-term memory'.
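
One way to picture priming is as a first message that is assembled before any real question is asked. The sketch below assumes the common chat convention of messages with a `role` and a `content` field; the field values and the helper name are hypothetical examples, not Müller's actual prompts.

```python
def build_primed_messages(background, goal, constraints, first_question):
    """Assemble a priming message followed by the first real question."""
    priming = "\n".join([
        f"About me: {background}",
        f"Goal of this session: {goal}",
        "Constraints:",
        *[f"- {c}" for c in constraints],
    ])
    return [
        {"role": "system", "content": priming},   # the priming context
        {"role": "user", "content": first_question},
    ]

messages = build_primed_messages(
    background="software architect preparing for a certification exam",
    goal="work through an example exam task step by step",
    constraints=["answer in English", "ask before making assumptions"],
    first_question="Which stakeholders does the task description mention?",
)

for message in messages:
    print(message["role"], "->", message["content"])
```

Custom instructions play the role of the priming message here, except that they are stored once and applied to every new session.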

What is the significance of the context size for GPT models?

-The context size determines how much of the chat history the model takes into account when generating responses. GPT-3 has a context size of 2,000 tokens, while GPT-4 has increased this to 128,000 tokens. A larger context size allows the model to consider more information, potentially leading to more accurate and relevant responses.
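
The effect of a limited context size can be sketched with a simple sliding window. Two assumptions are made loudly here: tokens are approximated by whitespace-separated words (real tokenizers split text differently), and the oldest messages are dropped first, which is one plausible strategy rather than a documented description of how ChatGPT trims its history.

```python
def estimate_tokens(text):
    """Very rough token estimate: one token per whitespace-separated word."""
    return len(text.split())

def fit_to_context(messages, max_tokens):
    """Keep the newest messages whose combined estimate fits into max_tokens."""
    kept = []
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = estimate_tokens(message)
        if used + cost > max_tokens:
            break  # this message and everything older no longer fits
        kept.append(message)
        used += cost
    return list(reversed(kept))  # restore chronological order

# Hypothetical chat history, oldest message first.
history = [
    "You are helping me prepare for an architecture exam",
    "Here is the task description for the case study",
    "Please propose a context diagram",
]

print(fit_to_context(history, max_tokens=15))
print(fit_to_context(history, max_tokens=100))
```

With the small budget, the opening message falls out of the window, which is exactly the situation in which priming given at the start of a session stops influencing the answers.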

How does Ralf D. Müller use ChatGPT to assist with an advanced certification exam?

-Müller uses ChatGPT to generate solutions for the exam tasks by providing detailed prompts and context. He also uses it to understand the task better, asking the chatbot questions and refining his prompts based on its responses. The chatbot helps create the documents, diagrams, and strategies required for the exam.

What are Ralf D. Müller's views on the future capabilities of chatbots like GPT?

-Müller believes that chatbots like GPT can serve as an architectural co-pilot, helping to generate ideas and make decisions. He expects that advances in the models will bring even larger context sizes, which will help overcome current limitations. He also sees potential in using GPT to pass oral exams by integrating it with virtual avatars and video technology.

Upgrade NowBrowse More Related Video

Using AI in Software Design: How ChatGPT Can Help With Creating a Solution Arch. by Ralf D. Müller

Debunking Devin: "First AI Software Engineer" Upwork lie exposed!

Mistral's Devstral: NEW Opensource Coding LLM! 1# On SWE Bench! (Fully Tested)

What is Software Architecture?

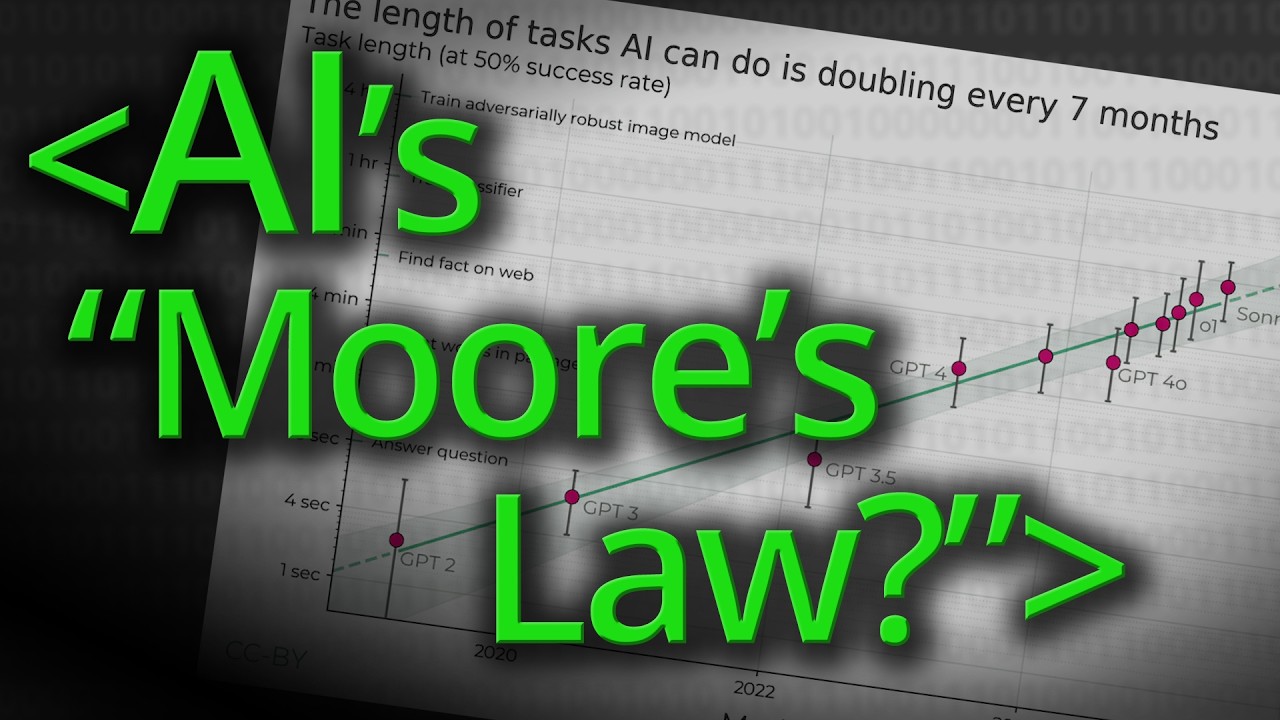

AI's Version of Moore's Law? - Computerphile

EfficientML.ai Lecture 1 - Introduction (MIT 6.5940, Fall 2024)

5.0 / 5 (0 votes)