New OPEN SOURCE Software ENGINEER Agent Outperforms ALL! (Open Source DEVIN!)

Summary

TLDR该视频脚本介绍了一款先进的开源软件工程代理,它能够在GitHub仓库中自主解决问题,与Devon这款软件工程基准的准确性相似,平均用时93秒。该代理完全开源,允许GPT-4轻松编辑和运行代码。视频还讨论了开源模型与闭源模型的比较、代理的工作方式、新设计的界面、信息限制、未来研究的可扩展性、成本效益以及开源模型的潜在使用。

Takeaways

- 🚀 开源软件工程代理的出现标志着在GitHub仓库自动解决问题的新系统,与Devon相似的准确性但开源且平均耗时仅93秒。

- 🔍 开源代理在软件工程基准测试中的表现与Devon相近,仅低1.55%,这表明开源项目在短时间内能取得显著成果。

- 💡 软件工程代理通过与专门终端交互来工作,支持文件浏览、编辑、语法检查和测试执行,强调了为GPT-4设计的友好界面的重要性。

- 🌟 通过限制AI系统查看文件的行数(如每次仅100行),可以提高其效率和准确性,这可能有助于模型更好地处理和理解任务。

- 🔧 开源软件工程代理的设计允许易于配置和扩展,促进了未来软件工程代理研究的发展。

- 🔗 提供了一个演示链接,通过它可以直观地了解软件工程代理的工作流程和内部机制。

- 📜 预计将在4月10日发布一篇论文,详细介绍技术细节、基准测试、模型微调和有效性实验。

- 💰 尽管AI系统执行复杂任务的成本较高,但该软件工程代理将每个任务的成本限制在平均$4以下。

- ⏱️ 软件工程代理平均在93秒内解决问题,显示出其高效的性能。

- 📈 尽管开源模型具有隐私和本地运行的优势,但目前闭源模型由于其强大的性能和巨额投资,仍然是首选。

Q & A

开源软件工程代理的发布有何重要性?

-这个开源软件工程代理的发布非常重要,因为它在性能上与之前发布的Devon相当,但使用了更少的资本和时间。这表明开源社区能够快速地取得显著的技术进步,并且有可能在未来超过商业封闭源代码的解决方案。

开源代理与Devon在性能上有何差异?

-开源代理在软件工程基准测试中的准确率与Devon相近,Devon为13.84%,而开源代理为12.29%。这表明开源代理的性能几乎与Devon相同,但开源代理的开发成本更低,时间更短。

开源代理是如何工作的?

-开源代理通过与专门设计的终端交互来工作,这个终端允许它打开、滚动和编辑文件,还能进行语法检查和编写执行测试。这个为GPT-4优化的接口对提高代理性能至关重要。

为什么需要为语言模型设计友好的代理计算机接口?

-为了让语言模型更有效地工作,需要为其设计一个友好的代理计算机接口。类似于人类需要好的用户界面设计,代理计算机接口可以帮助模型更好地理解任务,并提供及时反馈,从而避免错误并提高效率。

限制AI系统查看文件行数的策略有何影响?

-限制AI系统一次只查看100行代码,而不是200行或300行,甚至整个文件,可以提高模型处理任务的效率。这可能是因为较少的信息量降低了模型处理的复杂性,使其能够更专注和有效地执行任务。

开源软件工程代理如何促进未来的研究和发展?

-由于这个软件工程代理是完全开源的,任何人都可以对其进行实验和改进,为代理与计算机的交互方式贡献新的想法。这种开放性可能会吸引更多的开发者和公司参与,从而加速代理技术的发展。

开源代理的演示链接在哪里可以找到?

-开源代理的演示链接可以在相关的网页中找到。通过这个链接,用户可以实际看到代理是如何解决软件工程问题的,包括它在工作区的步骤和终端的操作。

开源代理的技术细节将在何时公布?

-开源代理的技术细节预计将在4月10日发布。这份技术论文将详细介绍代理的工作原理、使用的基准测试、如何微调模型,以及他们的初步实验结果。

运行一个AI任务的平均成本是多少?

-运行一个AI任务的平均成本被限制在4美元以内,但实际花费通常更低。这个成本控制对于确保AI技术在日常应用中的可行性非常重要。

开源代理平均需要多长时间解决一个任务?

-开源代理平均需要93秒来解决一个任务,这比之前的系统如Devon要快得多,后者可能需要5到10分钟。

开源代理目前主要使用哪种模型?

-尽管开源代理是完全开源的,但目前主要使用的是封闭源代码模型,如GPT-4和Claude Opus,因为这些模型在性能上更强大。尽管开源模型在隐私和可执行性上有优势,但在当前阶段,封闭源代码模型由于更大的投资和更高的效率而被优先选择。

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

Devin AI - Are Software Engineers finally doomed?

Python Advanced AI Agent Tutorial - LlamaIndex, Ollama and Multi-LLM!

Intro to software suite: ARES Commander and Undet point cloud tools

What tools I use to manage Game Engine Development

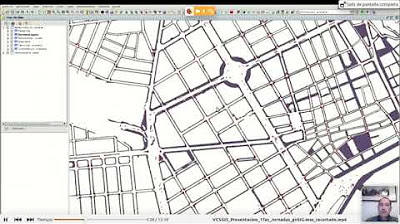

17th Int. gvSIG Conference: Version Control System on gvSIG Desktop

Set A Light 3D Review: Can you learn lighting in a computer?

5.0 / 5 (0 votes)