What is Sensor Calibration and Why is it Important?

Summary

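TLDR: Sensor calibration is essential to the operation of a modern process plant. It ensures that sensors accurately measure key process variables such as flow, pressure, and temperature, so that the control system can precisely adjust valves, pumps, and other actuators and keep the plant running safely and efficiently. The video introduces the concept of calibration, explains why it matters, and shows how an "as-found check" followed by careful adjustment minimizes error. It stresses the role of calibration in improving control accuracy and keeping the process safe.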

Takeaways

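- 🔍 Sensor calibration is essential to the operation of a modern process plant: it ensures that sensors accurately measure important process variables such as flow, level, pressure, and temperature.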

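- 📈 Calibration is a series of adjustments that make an instrument or device operate as accurately, or as error-free, as possible. Error is the algebraic difference between the measured value and the actual value.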

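- 🛠️ Sensor error can arise from several causes, including an incorrect zero reference, a shift in the sensor's range caused by changing environmental conditions, and mechanical wear or damage.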

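- 🔧 The calibration procedure begins with an "as-found check": a calibration check performed before any adjustment is made, to determine whether recalibration is needed.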

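- 📊 For the as-found check, an accurate instrument generates process signals at 0%, 25%, 50%, 75%, and 100% of the process range, and the corresponding output signals are recorded. This is called a "five-point check".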

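- 🔄 To check for hysteresis, the sensor's output deviations are recorded on both the upscale (increasing) and downscale (decreasing) passes and then compared, to confirm that the sensor is accurate in both directions.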

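- 🎯 If the measured deviation exceeds the maximum allowed deviation, a full calibration is required. If the deviation is within the allowed range, no calibration is needed.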

- 🔩 Calibration requires a very accurate process simulator, such as a pressure source, and a current meter to measure the sensor's 4-20 mA output.

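- 🔄 Calibrating an analog transmitter means adjusting zero and span to reduce measurement error. On an analog transmitter the zero and span adjustments interact, so calibration is an iterative process.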

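- 📱 A digital transmitter can be calibrated by adjusting the output of the analog-to-digital converter (called "sensor trim") or the input to the digital-to-analog converter (called "4-20 mA trim" or "output trim").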

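- 🚀 After calibration, the error is plotted again to confirm that it falls within the specified tolerance, which keeps the process running efficiently and safely.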
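The definition of error above (measured value minus actual value) can be made concrete for a 4-20 mA output. The following is a minimal sketch, assuming a linear transmitter and assuming that error is expressed as a percentage of the 16 mA output span; the function names are hypothetical and the sample reading is made up.

```python
def ideal_output_ma(percent_of_range: float) -> float:
    """Ideal 4-20 mA output for an input given as 0-100 % of the process range."""
    return 4.0 + 16.0 * percent_of_range / 100.0


def error_percent_of_span(measured_ma: float, percent_of_range: float) -> float:
    """Signed error (measured minus ideal) as a percentage of the 16 mA span.

    Expressing error in percent of span is an assumption made for this sketch.
    """
    return (measured_ma - ideal_output_ma(percent_of_range)) / 16.0 * 100.0


# Made-up reading: 12.04 mA observed with the input at 50 % of range.
print(ideal_output_ma(50))
print(round(error_percent_of_span(12.04, 50), 2))
```

A positive result means the transmitter reads high at that point; a negative result means it reads low.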

Q & A

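In a modern process plant, why do engineers specify sensors to measure process variables?

- Engineers specify sensors to measure important process variables such as flow, level, pressure, and temperature so that the process control system can adjust valves, pumps, and other actuators to hold those quantities at their proper values and ensure safe operation.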

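What is the purpose of sensor calibration?

- The purpose of sensor calibration is to make a series of adjustments so that the sensor or instrument operates as accurately, or as error-free, as possible. This ensures that the actual value of the sensed quantity is what gets passed to the control system.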

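What can cause sensor measurement error?

- Sensor measurement error can arise from several causes: the instrument may not have a correct zero reference, the electronics may drift over time, the sensor's range may shift, or the sensor may suffer mechanical wear or damage.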

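Why does the control system need accurate sensor data?

- The control system needs accurate sensor data to make correct control decisions, such as adjusting the output to a control valve or setting the speed of a feed pump, so that the process runs efficiently and safely.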

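How often do sensor calibration programs require calibration?

- Calibration programs in modern process plants usually require instruments to be calibrated periodically, even though calibration can take considerable time, especially when the equipment is hard to reach or requires special tools.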

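What is the purpose of the "as-found" check?

- The as-found check tests the sensor against accurate instruments before any adjustment is made. It shows whether the instrument's current calibration is within the device's tolerance, and therefore whether recalibration is needed.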

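How is a "five-point check" performed?

- In a five-point check, an accurate instrument generates process signals corresponding to 0%, 25%, 50%, 75%, and 100% of the sensor's process range, and the corresponding sensor output is observed and recorded in milliamps.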
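The five-point check described above can be sketched as a small loop over the test points. This is an illustration only: `read_output_ma` stands in for applying a reference signal and reading the current meter, the simulated transmitter and its errors are invented, and deviation as a percentage of the 16 mA span is an assumed convention.

```python
CHECK_POINTS = (0, 25, 50, 75, 100)  # percent of process range


def five_point_check(read_output_ma):
    """Return one row per check point: (percent, ideal mA, measured mA, deviation in % of span).

    read_output_ma is a hypothetical callable that takes the applied input in
    percent of range and returns the measured transmitter output in mA.
    """
    rows = []
    for pct in CHECK_POINTS:
        ideal = 4.0 + 16.0 * pct / 100.0
        measured = read_output_ma(pct)
        deviation = (measured - ideal) / 16.0 * 100.0
        rows.append((pct, ideal, measured, deviation))
    return rows


def simulated_transmitter(pct):
    """Made-up transmitter with a small zero offset and a small span error."""
    return 4.02 + 16.03 * pct / 100.0


for row in five_point_check(simulated_transmitter):
    print("%3d %%  ideal %.2f mA  measured %.3f mA  deviation %+.2f %%" % row)
```

In a real check the rows would be written to the calibration record rather than printed.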

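How do you check a sensor for hysteresis?

- Hysteresis is the effect where the sensor output for a given process value differs depending on whether the value is decreasing ("downscale") or increasing ("upscale"). To check for it, record the output signals at 100%, 75%, 50%, 25%, and 0% as well, and calculate the deviation at each check point.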
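One way to quantify the upscale and downscale comparison is to take the difference between the two readings at each check point. The sketch below assumes that approach and reports the gap as a percentage of the 16 mA span; the function name and all readings are made up for illustration.

```python
def hysteresis_percent(upscale_ma, downscale_ma):
    """Downscale minus upscale reading at each check point, in % of the 16 mA span.

    Both arguments are dicts keyed by percent of range (an assumed data layout).
    """
    return {
        pct: (downscale_ma[pct] - upscale_ma[pct]) / 16.0 * 100.0
        for pct in sorted(upscale_ma)
    }


# Made-up readings in mA, recorded going up and then coming back down.
upscale = {0: 4.00, 25: 8.01, 50: 12.02, 75: 16.01, 100: 20.00}
downscale = {100: 20.00, 75: 16.03, 50: 12.05, 25: 8.03, 0: 4.00}

gaps = hysteresis_percent(upscale, downscale)
worst = max(abs(gap) for gap in gaps.values())
for pct, gap in gaps.items():
    print("%3d %%: %+.3f %% of span" % (pct, gap))
print("worst hysteresis: %.3f %% of span" % worst)
```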

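What should be done if the sensor's deviation is greater than the maximum allowed deviation?

- If the sensor's deviation is greater than the maximum allowed deviation, a full calibration is required.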

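What equipment is typically used to calibrate an analog sensor?

- Calibrating an analog sensor typically uses a very accurate process simulator, such as a pressure source, connected to the process side of the sensor, and a current meter to measure the sensor's 4-20 mA output.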
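Because the zero and span adjustments on an analog transmitter interact, calibration repeats the two adjustments until both end points read correctly. The sketch below illustrates that iteration with a toy model. The model, the `coupling` term that makes the adjustments interact, and all starting values are assumptions chosen for illustration, not a description of any real transmitter.

```python
class SimulatedAnalogTransmitter:
    """Toy model: output = zero + gain * (fraction + coupling).

    The coupling term makes a span (gain) change also move the 0 % reading,
    which is what forces the calibration to be iterative.
    """

    def __init__(self, zero=2.5, gain=16.3, coupling=0.1):
        self.zero = zero
        self.gain = gain
        self.coupling = coupling

    def output_ma(self, fraction):
        """Output in mA for an input given as a 0.0-1.0 fraction of range."""
        return self.zero + self.gain * (fraction + self.coupling)


def calibrate(tx, tolerance_ma=0.001, max_passes=20):
    """Alternate zero and span adjustments; return the number of passes used."""
    for passes in range(1, max_passes + 1):
        # Zero adjustment: with 0 % applied, shift the output to 4 mA.
        tx.zero += 4.0 - tx.output_ma(0.0)
        # Span adjustment: with 100 % applied, scale the gain to reach 20 mA.
        tx.gain *= (20.0 - tx.zero) / (tx.output_ma(1.0) - tx.zero)
        zero_ok = abs(tx.output_ma(0.0) - 4.0) <= tolerance_ma
        span_ok = abs(tx.output_ma(1.0) - 20.0) <= tolerance_ma
        if zero_ok and span_ok:
            return passes
    raise RuntimeError("calibration did not converge")


tx = SimulatedAnalogTransmitter()
passes = calibrate(tx)
print("passes:", passes)
print("0 %% -> %.4f mA, 100 %% -> %.4f mA" % (tx.output_ma(0.0), tx.output_ma(1.0)))
```

In this model each span adjustment disturbs the zero reading slightly, so more than one pass is needed before both end points settle inside the tolerance.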

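How is a digital sensor calibrated?

- On a digital sensor, the incoming sensor signal can be corrected by adjusting the output of the analog-to-digital converter (called "sensor trim") and/or the input to the digital-to-analog converter (called "4-20 mA trim" or "output trim").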
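Both trims can be thought of as a linear correction fitted to a low and a high reference point. The sketch below assumes that two-point model; it is not taken from the video or from any vendor's procedure, and the function name and all readings are hypothetical.

```python
def make_two_point_trim(reading_low, reading_high, reference_low, reference_high):
    """Build a linear correction that maps the two observed readings onto the references."""
    gain = (reference_high - reference_low) / (reading_high - reading_low)
    offset = reference_low - gain * reading_low

    def corrected(reading):
        return gain * reading + offset

    return corrected


# Sensor trim (assumed example): the digitized value read 0.4 and 100.6
# while an accurate reference applied 0 and 100 units to the sensor.
sensor_trim = make_two_point_trim(0.4, 100.6, 0.0, 100.0)

# Output trim (assumed example): a meter showed 4.03 mA and 19.95 mA when the
# transmitter was commanded to 4 mA and 20 mA. The correction gives the value
# to command so that the desired current actually appears on the loop.
output_trim = make_two_point_trim(4.03, 19.95, 4.0, 20.0)

print(round(sensor_trim(50.5), 3))
print(round(output_trim(12.0), 3))
```

Sensor trim corrects the digital reading of the process variable, while output trim corrects the analog current, so the two are adjusted against different references.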

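After calibration, how far should the sensor's maximum deviation be reduced?

- After calibration, the sensor's maximum deviation should fall within the specified tolerance, for example dropping from 0.38% to 0.18%, which is inside a 0.20% tolerance.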
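The pass or fail decision reduces to comparing the worst absolute deviation against the tolerance. The sketch below uses the 0.38%, 0.18%, and 0.20% figures from the answer above; the other deviation values and the function names are made up for illustration.

```python
def worst_deviation(deviations_percent):
    """Largest absolute deviation across all check points."""
    return max(abs(d) for d in deviations_percent)


def needs_calibration(deviations_percent, tolerance_percent):
    """True when the worst deviation exceeds the allowed tolerance."""
    return worst_deviation(deviations_percent) > tolerance_percent


TOLERANCE = 0.20  # percent, from the example above

# Only the worst values (0.38 and 0.18) come from the text; the rest are invented.
as_found = [0.05, -0.12, 0.38, 0.21, 0.10]
as_left = [0.02, -0.06, 0.18, 0.09, 0.04]

print("as found:", worst_deviation(as_found), needs_calibration(as_found, TOLERANCE))
print("as left: ", worst_deviation(as_left), needs_calibration(as_left, TOLERANCE))
```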

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

what Is Instrument Calibration. Instrument Calibrator. RTD Calibration. Calibration certificates.

How to calibrate RTD temperature transmitters - Beamex

Groq Architecture

NASA | TIRS: The Thermal InfraRed Sensor on LDCM

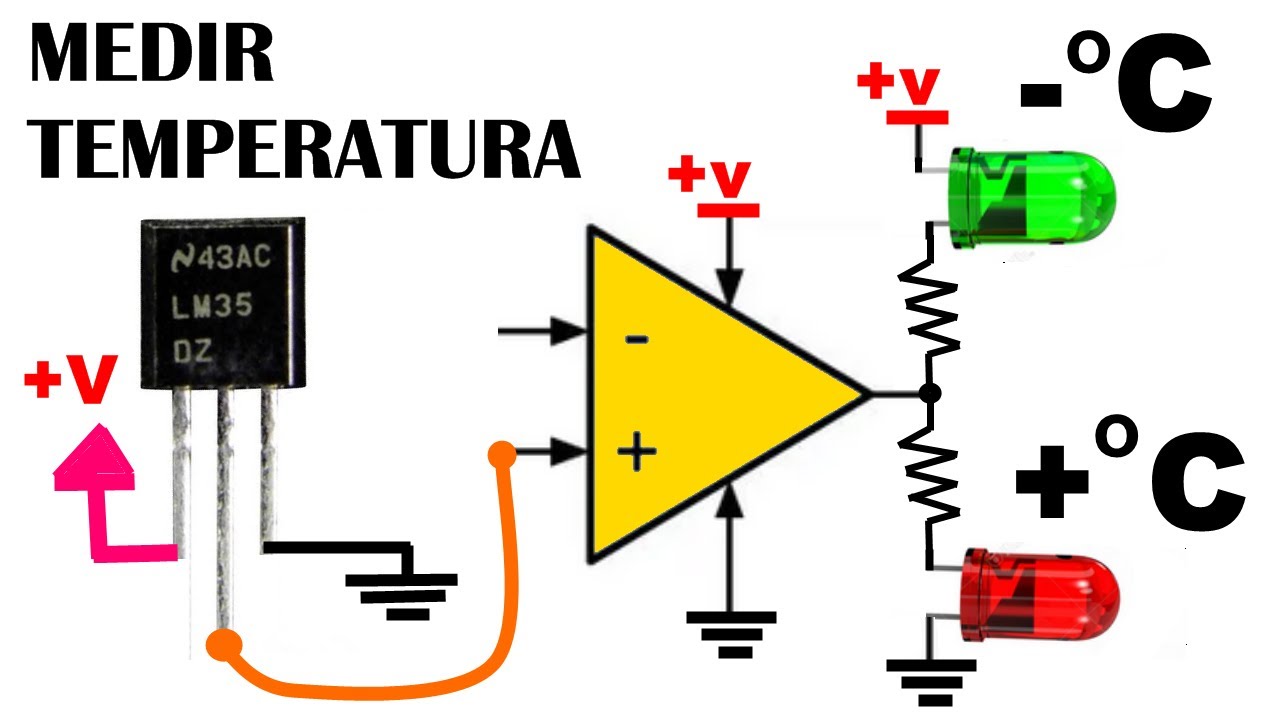

Como medir la temperatura usando el sensor LM35 y un Op-Amp!

Inside DFRobot Factory in China! | How Your Favourite Maker Boards Are Really Made

5.0 / 5 (0 votes)