RAG vs. Fine Tuning

Summary

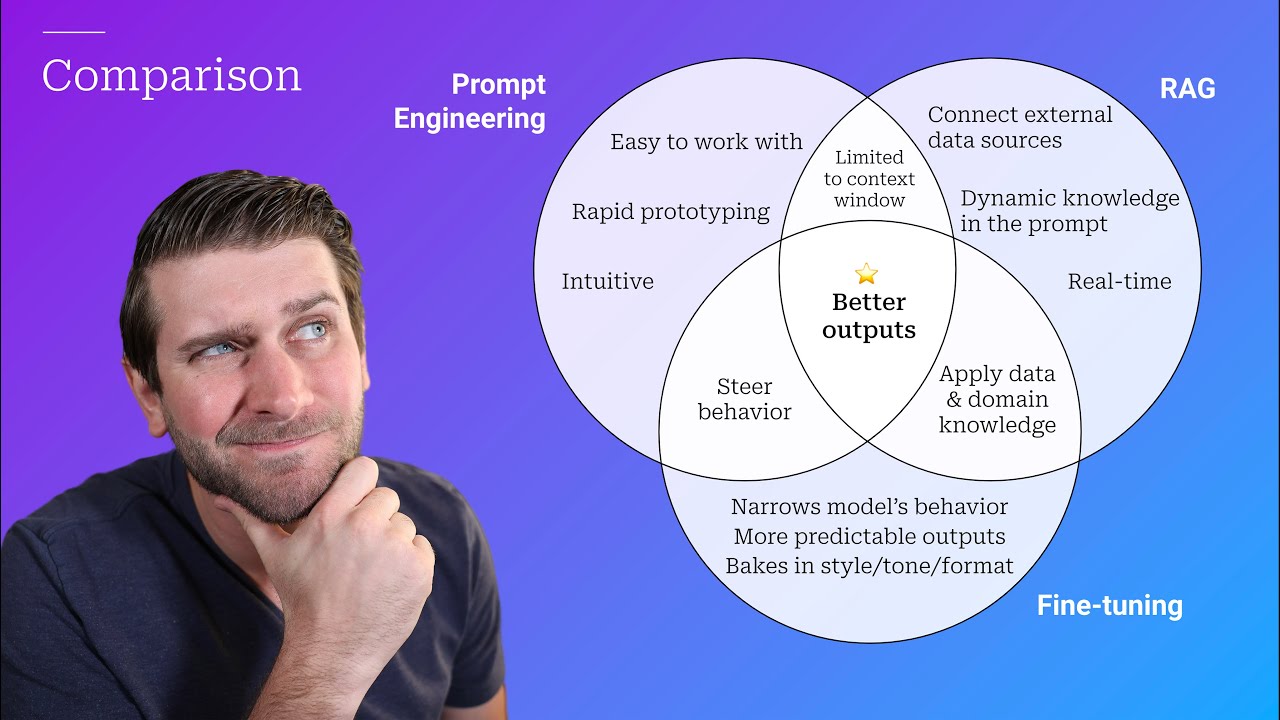

TLDR: This video explores the strengths and use cases of Retrieval Augmented Generation (RAG) and fine-tuning for enhancing large language models. RAG retrieves up-to-date information to provide accurate responses, while fine-tuning specializes models for specific domains. The video discusses their respective advantages, such as RAG's ability to handle dynamic data and fine-tuning's lower inference cost. It also suggests combining both techniques for optimal AI application performance, emphasizing the importance of choosing the right approach based on data dynamics, industry needs, and the desire for transparency.

Takeaways

- 🔍 RAG (Retrieval Augmented Generation) and fine-tuning are two powerful methods for enhancing large language models.

- 📅 One limitation of LLMs is that they may not have up-to-date or specific information unless trained on it.

- 🧠 RAG retrieves external data and adds context to prompts, allowing models to give accurate responses without retraining.

- 📄 Fine-tuning involves training the model with specific data to tailor its output style and tone for specialized use cases.

- 💡 RAG is ideal for dynamic, frequently changing data sources like databases or real-time information.

- ⚖️ Fine-tuning works well for specialized industries with specific jargon or writing styles, such as legal or financial sectors.

- 🚀 RAG reduces hallucinations and provides transparent, source-backed information, ideal for trusted AI systems.

- ⏱ Fine-tuning suits applications that need faster inference and smaller context windows, because the domain knowledge lives in the model's weights rather than in the prompt.

- 🛠 Both methods can be combined for more powerful applications, such as in finance where accuracy and industry-specific language are important.

- 🎯 The choice between RAG and fine-tuning depends on the application's need for up-to-date information or specialization in a certain domain.

Q & A

What are the two powerful ways mentioned to enhance the capabilities of large language models?

- The two ways mentioned are Retrieval Augmented Generation (RAG) and fine-tuning.

What is the primary limitation of generative AI models that these techniques address?

- Generative AI models may lack the specific information needed to give accurate or up-to-date answers. They are also general-purpose by design, which makes them hard to specialize for a particular use case.

How does Retrieval Augmented Generation (RAG) work to enhance a model's capabilities?

- RAG works in three steps: it retrieves external, up-to-date information, augments the original prompt with it, and then generates a response using that added context.
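
The retrieve, augment, generate steps can be sketched as follows. This is a minimal illustration, not the video's implementation: the `CORPUS` contents, the word-overlap scoring (a toy stand-in for vector search), and the `generate` placeholder are all assumptions made for the example.

```python
import re
from collections import Counter

# Hypothetical mini-corpus standing in for an external, up-to-date data source.
CORPUS = {
    "release-notes": "Version 2.4 of the product adds offline mode and faster sync.",
    "pricing": "The team plan costs 12 dollars per user per month.",
    "support": "Support is available by email on weekdays.",
}


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query, corpus, k=1):
    """Rank documents by word overlap with the query (toy stand-in for vector search)."""
    query_words = Counter(tokenize(query))
    scored = []
    for doc_id, text in corpus.items():
        doc_words = Counter(tokenize(text))
        score = sum(min(query_words[w], doc_words[w]) for w in query_words)
        scored.append((score, doc_id))
    # Highest score first; ties broken alphabetically by document id.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for score, doc_id in scored[:k] if score > 0]


def augment(query, doc_ids, corpus):
    """Prepend the retrieved passages, tagged with their source ids, to the question."""
    context = "\n".join(f"[{doc_id}] {corpus[doc_id]}" for doc_id in doc_ids)
    return (
        "Answer using only the context below and cite the source id.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


def generate(prompt):
    """Placeholder: a real application would send the prompt to an LLM here."""
    return f"(model output for a prompt of {len(prompt)} characters)"


query = "How much does the team plan cost?"
doc_ids = retrieve(query, CORPUS)
prompt = augment(query, doc_ids, CORPUS)
answer = generate(prompt)
```

Tagging each passage with its source id is what lets the final answer point back to where the information came from, and updating `CORPUS` changes the answers without retraining the model.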

What is a limitation of large language models (LLMs) that RAG helps to mitigate?

- LLMs may give incorrect or hallucinated answers when they lack the specific information needed to answer a query. RAG mitigates this by drawing on a corpus of information to supply the necessary context.

How does fine-tuning differ from RAG in terms of enhancing a model's capabilities?

- Fine-tuning specializes a foundation model in a certain domain by training it on labeled, targeted data. This bakes context and intuition into the model's weights, rather than supplying them in the prompt at query time as RAG does.
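
As a rough illustration of what "labeled and targeted data" can look like, the sketch below writes prompt/completion pairs as JSON Lines. The `prompt` and `completion` field names and the two legal-style examples are assumptions for this example; the video does not specify a format, and each training framework defines its own schema.

```python
import json

# Hypothetical labeled examples: (input text, desired output in the target style).
EXAMPLES = [
    (
        "Summarize: The lessee shall remit payment within 30 days. ",
        "The tenant must pay within 30 days.",
    ),
    (
        "Summarize: The lessor may terminate this agreement upon material breach.",
        " The landlord can end the agreement if it is seriously violated.",
    ),
]


def to_jsonl(examples):
    """Serialize (prompt, completion) pairs as one JSON object per line."""
    lines = []
    for prompt, completion in examples:
        record = {"prompt": prompt.strip(), "completion": completion.strip()}
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines)


training_data = to_jsonl(EXAMPLES)
```

A file in this shape would then be handed to whatever fine-tuning tool is in use, after adapting the field names to that tool's requirements.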

What are the strengths of using RAG for dynamic data sources?

- RAG is well suited to dynamic data sources such as databases because it can continuously pull up-to-date information, and it helps reduce hallucinations by providing sources for that information.

Why is fine-tuning beneficial for specific industries with unique writing styles and terminologies?

- Fine-tuning lets the model specialize in the nuances of an industry's writing style, terminology, and vocabulary, leading to more accurate and relevant responses.

How does the choice between RAG and fine-tuning affect a model's performance, accuracy, and compute cost?

- The choice affects accuracy, the style of the outputs, and compute cost. RAG handles dynamic data and provides transparency through cited sources, while fine-tuning can give faster inference and fits specific industry needs.

What are some common use cases for RAG mentioned in the script?

- Common use cases for RAG include product documentation chatbots that must keep their responses current with the latest information, and applications that require transparency and trust in the information source.

How can a combination of RAG and fine-tuning be utilized effectively?

- The two can be combined by fine-tuning a model on past data so that it understands industry-specific language and the domain, while using RAG to retrieve the most up-to-date news and data at query time.

What factors should be considered when choosing between RAG and fine-tuning for AI-enabled applications?

- When choosing between RAG and fine-tuning, consider how quickly the data changes (slow-moving or fast-moving), the industry and its specific needs, the importance of transparency and sources, and whether the application requires up-to-date external information or specialized domain knowledge.
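
These factors can be condensed into a small rule-of-thumb helper. The sketch below is one possible encoding of the guidance above, not a rule stated in the video: the `AppProfile` fields, the `recommend` function, and the fallback label are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class AppProfile:
    """Hypothetical description of an application's requirements."""
    data_changes_often: bool       # fast-moving data such as news or live databases
    needs_source_citations: bool   # transparency and trust in the information source
    needs_domain_style: bool       # industry jargon, tone, or writing style
    latency_sensitive: bool        # faster inference, smaller context window


def recommend(profile):
    """Map an application profile to a suggested approach (assumed heuristic)."""
    wants_rag = profile.data_changes_often or profile.needs_source_citations
    wants_fine_tuning = profile.needs_domain_style or profile.latency_sensitive
    if wants_rag and wants_fine_tuning:
        return "RAG + fine-tuning"
    if wants_rag:
        return "RAG"
    if wants_fine_tuning:
        return "fine-tuning"
    return "neither (the base model may suffice)"


docs_chatbot = AppProfile(True, True, False, False)
legal_summarizer = AppProfile(False, False, True, True)
finance_assistant = AppProfile(True, True, True, False)
```

Here `recommend(docs_chatbot)` suggests RAG, `recommend(legal_summarizer)` suggests fine-tuning, and `recommend(finance_assistant)` suggests combining both, mirroring the finance example in the takeaways.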
