Building Production-Ready RAG Applications: Jerry Liu

Summary

TLDRThe speaker discusses building production-ready retrieval-augmented generation (RAG) applications using language models. He outlines RAG techniques like data ingestion, querying, retrieval, and synthesis. He then analyzes key challenges with basic RAG like poor response quality and solutions across the pipeline, emphasizing task-specific evaluation. Finally, he provides RAG optimization techniques from basic chunk size tuning to more advanced concepts like recursive retrieval, multi-document agents, and fine-tuning for improved performance.

Takeaways

- 😀 Rag systems consist of data ingestion, retrieval, and synthesis components

- 📝 Evaluation metrics are critical for iterating on rag system performance

- 🔎 Tuning chunk sizes and metadata filtering are table stakes techniques for optimization

- 🔍 Advanced retrieval methods like small-to-big can improve precision

- 🤖 Incorporating agents enables more sophisticated reasoning over data

- 📈 Fine-tuning embeddings or language models optimizes components for your dataset

- 👥 Paradigms are retrieval augmentation and model fine-tuning for knowledge incorporation

- 📊 Response quality issues like hallucination can stem from bad retrieval or outdated info

- 🧠 Using LMs for reasoning instead of just text generation opens up more capabilities

- 🎯 Task-specific benchmarking and evaluation drives rag system iteration

Q & A

What are the main paradigms for getting language models to understand data they haven't been trained on?

-The main paradigms are retrieval augmentation, which involves creating a data pipeline to insert context into the language model's input prompt, and fine-tuning, which updates the model's weights to incorporate new knowledge.

What is Retrieval Augmented Generation (RAG)?

-RAG is a technique that combines data ingestion and querying (retrieval and synthesis) to enhance question-answering systems. It has become a popular method for building QA systems that leverage the reasoning capabilities of language models.

What are the common applications of language models mentioned in the script?

-Common applications include knowledge search, QA, conversational agents, workflow automation, and document processing.

What challenges might developers face when building naive RAG applications?

-Challenges include response quality issues like bad retrieval, low precision and recall, hallucination, and outdated information, as well as issues inherent to language models like irrelevance, toxicity, and bias.

How can the performance of a RAG application be improved?

-Improvements can be made across the entire pipeline, including optimizing data storage, playing with chunk sizes, enhancing embedding representations, refining retrieval algorithms, and exploring synthesis beyond pure generation.

What is the importance of evaluating RAG systems, and how can it be done?

-Evaluation is crucial for defining benchmarks and understanding how to iterate on and improve the system. It involves evaluating both the end-to-end solution and specific components like retrieval and synthesis through metrics like success rate, hit rate, and NDCG.

What are 'table stakes' RAG techniques mentioned?

-Table stakes techniques include better parsing, adjusting chunk sizes, integrating with vector databases for hybrid search and metadata filters, and trying out advanced retrieval methods.

How does metadata filtering contribute to RAG performance?

-Metadata filtering allows for the addition of structured context to text chunks, improving retrieval precision by combining structured query capabilities with semantic search to return the most relevant candidates.

What advanced concept involves using LLMs for reasoning beyond synthesis?

-The concept involves moving towards agent-based systems where each document is modeled as a set of tools for summarizing and QA, allowing for more complex reasoning and analysis beyond simple question answering.

What role does fine-tuning play in enhancing RAG systems?

-Fine-tuning optimizes specific parts of the RAG pipeline, such as embedding representations for better performance and tailoring language models for improved response synthesis, reasoning, and structured outputs.

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

Introduction to Generative AI

The Vertical AI Showdown: Prompt engineering vs Rag vs Fine-tuning

Retrieval Augmented Generation - Neural NebulAI Episode 9

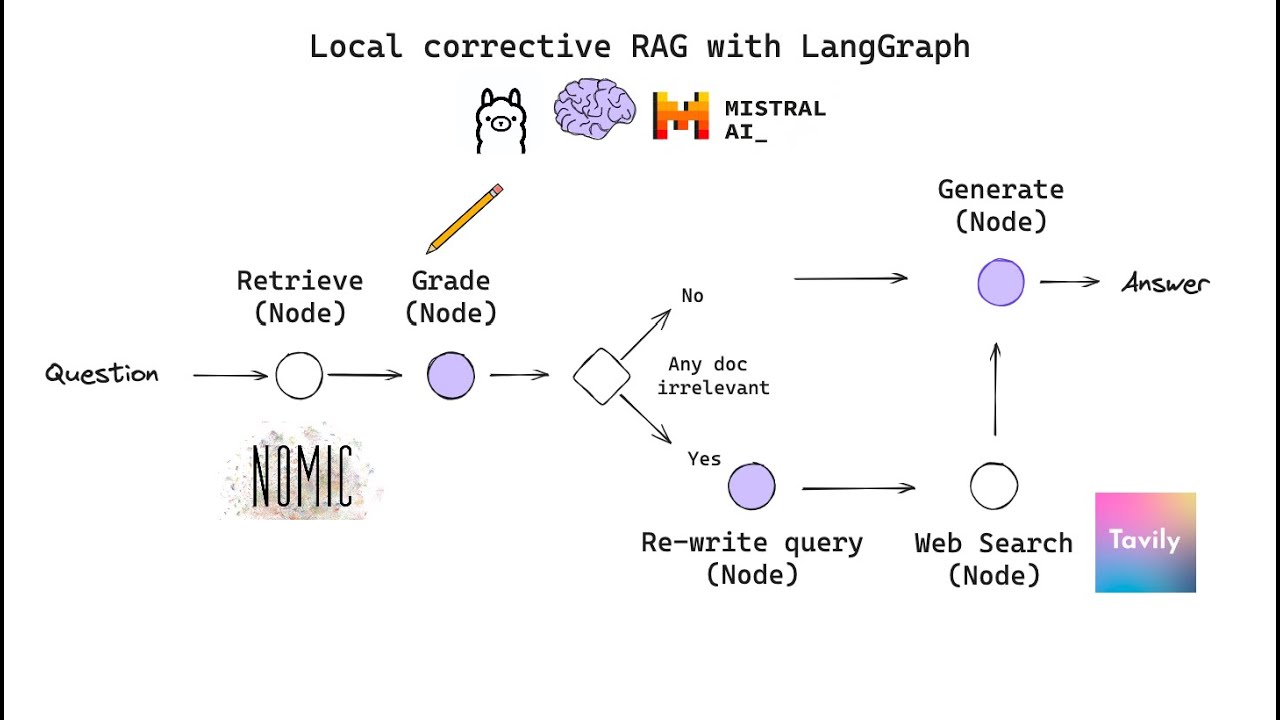

Building Corrective RAG from scratch with open-source, local LLMs

Build your own RAG (retrieval augmented generation) AI Chatbot using Python | Simple walkthrough

Introduction to generative AI scaling on AWS | Amazon Web Services

5.0 / 5 (0 votes)