A Comprehensive Cookbook for Claude 3

Summary

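An overview of the Claude 3 release, followed by a walkthrough of using Claude 3 Opus with LlamaIndex: indexing and RAG, query routing, text-to-SQL, structured data extraction, and agents.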

Takeaways

- 🚀 Claude 3 has just been released. Anthropic is offering three models: Claude 3 Haiku, Claude 3 Sonnet, and Claude 3 Opus.

- 🏆 Claude 3 Opus is the strongest of the three and is reported to outperform other models, including GPT-4.

- 📈 Claude 3 Opus posts strong results across a range of benchmarks, and users' hands-on tests are also impressive.

- 🤖 The models have a 200,000-token context window, which Anthropic plans to expand to 1 million tokens.

- 🔌 Claude 3 is available through the API, and a Claude Pro subscription gives access to Opus.

- 📚 The LlamaIndex Python library makes it easy to integrate Anthropic's models.

- 📄 A simple article is used to demonstrate how to index data.

- 🔍 A Vector Store Index and a Summary Index are built over the document's content and used for retrieval.

- 🛠️ A RAG (Retrieval-Augmented Generation) pipeline generates answers to specific questions.

- 🔄 A Router Query Engine routes each question to one of several tools.

- 🔢 A SQL Query Engine runs text-to-SQL over a structured database.

- 🌐 A ReAct agent prompts the LLM (large language model) directly to decide which actions to take to solve a problem.
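The difference between the two indexes and the role of the router can be illustrated without any framework. The following is a toy sketch built on several assumptions:

- Word overlap stands in for embedding similarity.
- A keyword rule stands in for the LLM-based selector that a Router Query Engine actually uses.
- `vector_tool`, `summary_tool`, and `route` are hypothetical names, not LlamaIndex APIs.

```python
import re

def tokenize(text: str) -> set[str]:
    """Lowercase word set; a crude stand-in for a real embedding."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

# Illustrative chunks of an indexed document.
CHUNKS = [
    "Claude 3 Opus is the largest model in the Claude 3 family.",
    "The Claude 3 models launched with a 200,000 token context window.",
    "Claude 3 was released on March 4, 2024.",
]

def vector_tool(query: str, top_k: int = 1) -> list[str]:
    """Vector-index style: return only the chunks most similar to the query."""
    q = tokenize(query)
    ranked = sorted(CHUNKS, key=lambda c: len(q & tokenize(c)), reverse=True)
    return ranked[:top_k]

def summary_tool(query: str) -> list[str]:
    """Summary-index style: pass every chunk on for a holistic answer."""
    return list(CHUNKS)

# Descriptions are what a real LLM selector would read when choosing a tool.
TOOLS = {
    "vector": (vector_tool, "Answer specific factual questions."),
    "summary": (summary_tool, "Summarize the whole document."),
}

def route(query: str) -> str:
    """Hypothetical selector: a keyword rule replaces the LLM call."""
    if {"summarize", "summary", "overview"} & tokenize(query):
        return "summary"
    return "vector"

if __name__ == "__main__":
    for q in ["What is the context window size?", "Summarize the article"]:
        name = route(q)
        tool, _description = TOOLS[name]
        print(name, tool(q))
```

The vector-style tool returns only the closest chunk, which suits pointed questions. The summary-style tool passes everything on, which suits "summarize this" requests. The router's only job is to pick between them.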

Q & A

When was Claude 3 released?

-Claude 3 was released on March 4, 2024.

What are the three new models Anthropic released?

-The three new models are Claude 3 Haiku, Claude 3 Sonnet, and Claude 3 Opus.

In what way is Claude 3 Opus judged to be better than other models?

-Claude 3 Opus outperforms other models, including GPT-4, across a range of benchmarks. Beyond the numbers, impressions from people trying out Claude on Twitter also point to its strength.

What is the context window of Claude 3 Opus?

-Claude 3 Opus has a 200,000-token context window, and Anthropic plans to expand it to 1 million tokens.

How can you access Claude 3 Opus?

-Claude 3 Opus is available through the API. A Claude Pro subscription also gives you access to Opus by default.

How do you integrate Anthropic with the LlamaIndex Python library?

-The LlamaIndex Python library provides an Anthropic integration. Anthropic does not offer an embedding model, so the demo uses Hugging Face's BGE embedding model instead. To use the integration directly, install the Anthropic package and its LlamaIndex wrapper with pip.
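A separate embedding model is needed because retrieval compares vectors: every chunk and every query must first be embedded.

This sketch shows that comparison using cosine similarity. It rests on two assumptions:

- A toy count vector over a fixed vocabulary stands in for BGE, and it does not reproduce BGE's learned dense vectors.
- `embed` and `VOCAB` are hypothetical names.

In recent LlamaIndex versions, the real model would be plugged in through `Settings.embed_model`, which this sketch does not use.

```python
import math
import re
from collections import Counter

def embed(text: str, vocab: list[str]) -> list[float]:
    """Toy embedding: term counts over a fixed vocabulary.
    A real model such as BGE returns a learned dense vector instead."""
    counts = Counter(re.findall(r"[a-z0-9]+", text.lower()))
    return [float(counts[word]) for word in vocab]

def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 when either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

VOCAB = ["claude", "opus", "context", "window", "sql", "database"]
docs = [
    "Claude 3 Opus has a large context window.",
    "The SQL engine queries the Chinook database.",
]

query = "How big is the context window?"
q_vec = embed(query, VOCAB)
scores = [cosine(q_vec, embed(d, VOCAB)) for d in docs]
best = docs[scores.index(max(scores))]
print(best)  # prints the first document, which shares "context window"
```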

How is Claude 3 Opus demonstrated on a toy dataset?

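-The demo proceeds in four steps:

1. It loads a simple article from the web and uses Beautiful Soup to strip the HTML and clean up the text.
2. It enters the Anthropic API key and sets up the required imports and settings.
3. It builds a vector store index and a summary index.
4. It runs query engines over them to show what Claude 3 Opus can do.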
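The HTML cleanup step can be sketched with the standard library. This is a stand-in built on three assumptions:

- Python's `html.parser` replaces Beautiful Soup.
- A fixed-size word chunker is a crude substitute for the node parser that LlamaIndex applies before indexing.
- `TextExtractor`, `html_to_text`, and `chunk_words` are hypothetical helpers.

```python
from html.parser import HTMLParser

class TextExtractor(HTMLParser):
    """Collect visible text, skipping <script> and <style> contents.
    A stdlib stand-in for Beautiful Soup's text extraction."""

    SKIP = {"script", "style"}

    def __init__(self) -> None:
        super().__init__()
        self.parts: list[str] = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep non-empty text that is not inside a skipped element.
        if not self._skip_depth and data.strip():
            self.parts.append(data.strip())

def html_to_text(html: str) -> str:
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.parts)

def chunk_words(text: str, size: int = 5) -> list[str]:
    """Split text into fixed-size word chunks before indexing."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]

page = (
    "<html><head><style>p {color: red}</style></head>"
    "<body><h1>Claude 3</h1><p>Opus is the largest model.</p>"
    "<script>track()</script></body></html>"
)
text = html_to_text(page)
print(text)                       # Claude 3 Opus is the largest model.
print(chunk_words(text, size=3))  # ['Claude 3 Opus', 'is the largest', 'model.']
```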

How do you set up a SQL query engine with Claude 3 Opus?

-First, download the Chinook SQLite database and connect to it with SQLAlchemy. Then pass the SQL database and the tables you want to query to the NLSQLTableQueryEngine. The engine translates natural language into SQL, runs it against the database, and returns the results.
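The translate, execute, return loop that the engine wraps can be sketched with Python's built-in `sqlite3` instead of SQLAlchemy. The sketch assumes two things:

- An in-memory `artists` table, loosely modeled on Chinook, holds illustrative rows.
- A hypothetical `translate_to_sql` returns canned SQL. The real engine would instead send the question and the table schema to Claude 3 Opus.

In LlamaIndex, this flow is packaged as `SQLDatabase` plus `NLSQLTableQueryEngine`. Neither is used here.

```python
import sqlite3

def build_demo_db() -> sqlite3.Connection:
    """In-memory table loosely modeled on Chinook's artists table (illustrative rows)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE artists (ArtistId INTEGER PRIMARY KEY, Name TEXT)")
    conn.executemany(
        "INSERT INTO artists (ArtistId, Name) VALUES (?, ?)",
        [(1, "AC/DC"), (2, "Accept"), (3, "Aerosmith"), (4, "Led Zeppelin")],
    )
    return conn

def translate_to_sql(question: str, schema: str) -> str:
    """Hypothetical stand-in for the LLM. A real engine would send the
    question plus `schema` to the model and get SQL back."""
    canned = {
        "How many artists are there?": "SELECT COUNT(*) FROM artists",
        "List artists whose name starts with A.":
            "SELECT Name FROM artists WHERE Name LIKE 'A%' ORDER BY Name",
    }
    return canned[question]

def text_to_sql_query(conn: sqlite3.Connection, question: str):
    # Give the "LLM" the table definition so it knows the columns.
    schema = conn.execute(
        "SELECT sql FROM sqlite_master WHERE type = 'table' AND name = 'artists'"
    ).fetchone()[0]
    sql = translate_to_sql(question, schema)
    rows = conn.execute(sql).fetchall()
    return sql, rows

if __name__ == "__main__":
    conn = build_demo_db()
    for q in ("How many artists are there?", "List artists whose name starts with A."):
        print(text_to_sql_query(conn, q))
```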

How do you extract structured data with Claude 3 Opus?

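-Structured data extraction uses LlamaIndex's structured data extraction programs. Each program combines a prompt, an LLM, and a Pydantic schema describing the required output format. The LLMTextCompletionProgram, or the Pydantic program that integrates with OpenAI function calling, makes the LLM produce JSON that matches the schema.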
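At its core, a structured-output program pairs a prompt that asks for JSON in a given shape with a schema check on the reply. This sketch makes three substitutions:

- Stdlib dataclasses replace Pydantic, to stay dependency-free.
- A hard-coded, made-up `llm_output` string replaces a real model response.
- `Album`, `Song`, `PROMPT_TEMPLATE`, and `parse_album` are illustrative names, not LlamaIndex APIs.

```python
import json
from dataclasses import dataclass

@dataclass
class Song:
    title: str
    length_seconds: int

@dataclass
class Album:
    name: str
    artist: str
    songs: list[Song]

# Prompt that tells the LLM which JSON shape to return ({{ }} are literal braces).
PROMPT_TEMPLATE = (
    "Generate an example album, with an artist and a list of songs, "
    "inspired by {topic}. Reply with JSON only, in the shape "
    '{{"name": str, "artist": str, "songs": [{{"title": str, "length_seconds": int}}]}}'
)

def parse_album(raw: str) -> Album:
    """Validate the LLM's JSON against the Album schema; raise ValueError on mismatch."""
    data = json.loads(raw)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data.get("name"), str) or not isinstance(data.get("artist"), str):
        raise ValueError("name and artist must be strings")
    songs = []
    for s in data.get("songs", []):
        # type(...) is int rejects strings and booleans alike.
        if not isinstance(s.get("title"), str) or type(s.get("length_seconds")) is not int:
            raise ValueError(f"bad song entry: {s!r}")
        songs.append(Song(title=s["title"], length_seconds=s["length_seconds"]))
    return Album(name=data["name"], artist=data["artist"], songs=songs)

prompt = PROMPT_TEMPLATE.format(topic="space")
# Made-up reply standing in for what the model would return for `prompt`.
llm_output = (
    '{"name": "Example Album", "artist": "Example Artist", '
    '"songs": [{"title": "Track One", "length_seconds": 215}]}'
)
print(parse_album(llm_output))
```

Pydantic performs these type checks and the error reporting automatically. The manual checks here only make that step visible.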

How do you build a ReAct agent with Claude 3 Opus?

-A ReAct agent drives the LLM through direct prompting. It is a general-purpose agent that works with any LLM: given an input and a set of tools, it prompts the LLM to output the next action. It follows the ReAct framework, which combines chain-of-thought reasoning with tool use to decide which actions will solve the problem.
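The Thought, Action, Observation loop can be sketched in a few lines. The sketch assumes the following:

- `scripted_llm` is a hypothetical stand-in that replays fixed replies where Claude 3 Opus would generate them.
- The text format is modeled on the ReAct pattern, not copied from LlamaIndex's prompt.
- The two arithmetic tools are illustrative.

```python
import re

def multiply(a: int, b: int) -> int:
    return a * b

def add(a: int, b: int) -> int:
    return a + b

TOOLS = {"multiply": multiply, "add": add}

def scripted_llm(transcript: str) -> str:
    """Hypothetical LLM stand-in: picks the next reply by counting the
    observations already in the transcript."""
    steps = [
        "Thought: I need to multiply first.\nAction: multiply\nAction Input: 2, 4",
        "Thought: Now add 20 to the product.\nAction: add\nAction Input: 8, 20",
        "Thought: I can answer without more tools.\nAnswer: 28",
    ]
    return steps[transcript.count("Observation:")]

ACTION_RE = re.compile(r"Action: (\w+)\nAction Input: (-?\d+), (-?\d+)")

def react(question: str, llm=scripted_llm, max_steps: int = 5) -> str:
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = llm(transcript)  # model reasons and proposes an action
        transcript += step + "\n"
        if "Answer:" in step:
            return step.split("Answer:", 1)[1].strip()
        match = ACTION_RE.search(step)
        if match is None:
            raise ValueError(f"could not parse step: {step!r}")
        name, a, b = match.group(1), int(match.group(2)), int(match.group(3))
        # Run the chosen tool and feed the result back as an observation.
        transcript += f"Observation: {TOOLS[name](a, b)}\n"
    raise RuntimeError("no answer within max_steps")

print(react("What is 2 * 4 + 20?"))  # prints 28
```

Each tool result is appended to the transcript as an Observation, so the next LLM call can reason over it. The loop ends when the model emits an answer or `max_steps` runs out.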

Which of the Claude 3 Opus use cases covered in the video is the most interesting?

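-The most interesting use case is the Sub-Question Query Engine. It breaks a question into sub-questions and decides which tool should answer each one. Decomposing a complex question this way, and answering each part with a different tool, gives deeper understanding and insight.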
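The decomposition idea can be sketched as follows. The sketch makes three simplifications:

- `decompose` is a hypothetical stand-in that returns a canned list of (tool, sub-question) pairs. The real engine asks the LLM to produce that list.
- Each tool is a dictionary lookup instead of a query engine over an index.
- The final step joins the sub-answers instead of asking the LLM to synthesize them.

```python
# Facts taken from the Q&A above; each dict plays the role of one indexed source.
KNOWLEDGE = {
    "specs": {"context window": "200,000 tokens", "release date": "March 4, 2024"},
    "models": {"most capable model": "Claude 3 Opus"},
}

def make_tool(facts: dict[str, str]):
    """Build a lookup 'query engine' over one source (toy substitute)."""
    def query(sub_question: str) -> str:
        for key, value in facts.items():
            if key in sub_question.lower():
                return value
        return "unknown"
    return query

TOOLS = {name: make_tool(facts) for name, facts in KNOWLEDGE.items()}

def decompose(question: str) -> list[tuple[str, str]]:
    """Hypothetical LLM stand-in: ignores `question` and returns a canned
    plan of (tool_name, sub_question) pairs."""
    return [
        ("specs", "What is the context window of Claude 3?"),
        ("models", "Which is the most capable model in Claude 3?"),
    ]

def sub_question_query(question: str) -> str:
    answers = []
    for tool_name, sub_q in decompose(question):
        answers.append(f"{sub_q} -> {TOOLS[tool_name](sub_q)}")
    # A real engine would have the LLM synthesize one answer from these.
    return "\n".join(answers)

print(sub_question_query("Compare Claude 3's context window with its most capable model"))
```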

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

Magic Market | L-Systems

Accenture CEO on earnings beat and revenue cut, spending and generative AI

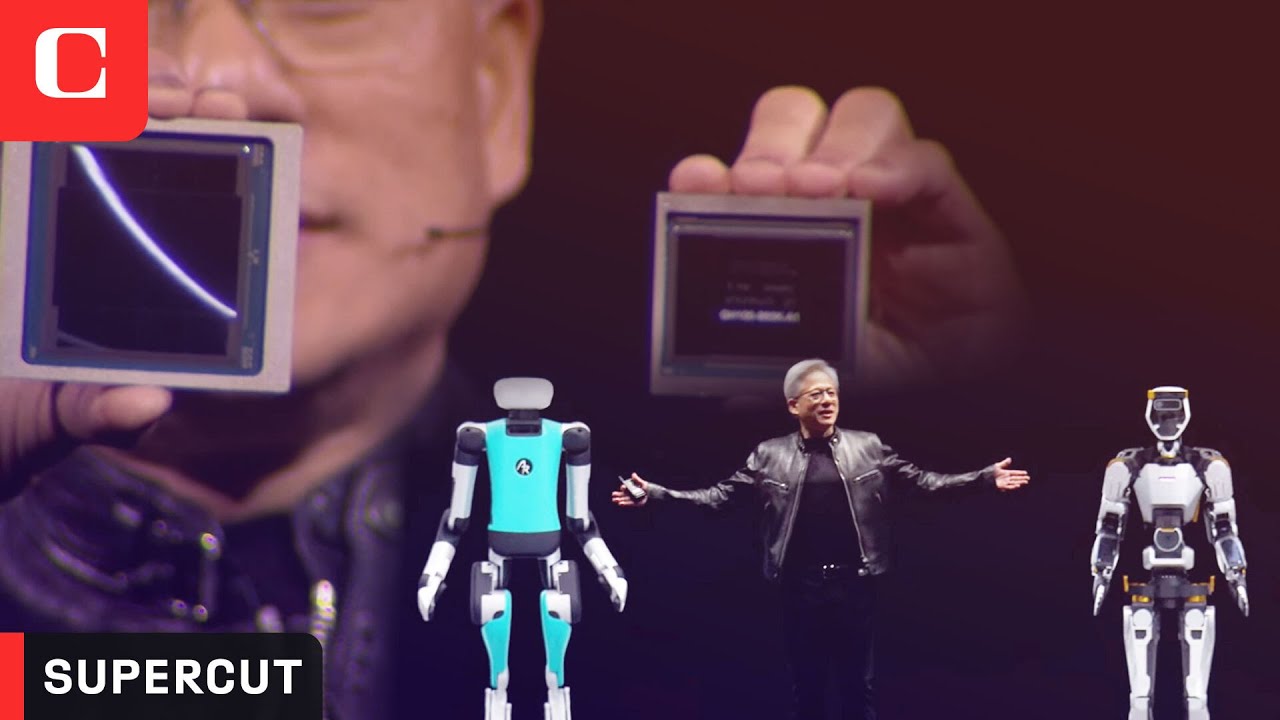

Nvidia 2024 AI Event: Everything Revealed in 16 Minutes

The Problem with Human Specialness in the Age of AI | Scott Aaronson | TEDxPaloAlto

コンテンツには5種類ある3 実際にわっきーのコンテンツをその視点で見る

ReAct Agent (Part 1, Introduction to Agents)

5.0 / 5 (0 votes)