The Problem with Human Specialness in the Age of AI | Scott Aaronson | TEDxPaloAlto

Takeaways

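- 🌟 AI has made astonishing progress over the past few years, and the speaker is exploring how theoretical computer science can help keep AI from being misused.

- 🚀 The core ideas behind the current AI revolution have been known for generations, but were long considered impractical.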

- 💡 Many experts did not believe that AI would advance on the back of Moore's Law (the exponential growth of computing power), yet that is what has now happened.

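- 🤖 The long-predicted "magical era" in which AI understands language and shows intelligence may now have arrived.

- 🧠 It is important to think about both the potential dangers of AI (for example, the possibility that AI displaces humans in destructive ways) and the possibility that it develops in the right direction.

- 📈 AI progress may stall, but if it continues, AI with human-level abilities could appear within 10 to 20 years.

- 🎓 As AI develops, the education system and the future of work need to be reconsidered. Now that AI can write a short story on the same theme as a student, what is the point of learning?

- 🖋️ To distinguish AI-generated text from human-written text, a project is under way to watermark the output of language models such as GPT.

- 🎨 Creative work produced by AI faces what is called the "AI abundance paradox": the value of a kind of output falls rapidly once it can be produced in bulk.

- 🌌 If an AI proposes a new musical direction, whether it could be as influential as the Beatles raises questions about the uniqueness of human creativity.

- 🔄 In the discussion of AI safety, the speaker proposes instilling in AI a "religion" that respects the uniqueness of human creativity and intelligence.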

Q & A

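What problem is the quantum computing expert working on at OpenAI?

- Thinking theoretically about how to keep AI from destroying the world.

What was the main unexpected factor in the evolution of current AI technology?

- That simple ideas, combined with computing resources at massive scale, produced AI that rivals humans at language understanding and other tasks.

Why were neural networks considered unimpressive in the past?

- They did not work very well at the time, and it was thought that simply scaling them up would not solve the problem.

What are the main theories and techniques underlying the modern AI revolution?

- Neural networks, backpropagation, and gradient descent form the foundation.

Compared with the human brain, what abilities might future AI have?

- It could perform every intellectual task as well as or better than humans, including solving open problems in mathematics and science.

If AI progress does not "fizzle out," what kind of future is possible?

- AI could surpass human intelligence and perform every task better than humans.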

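What is the "grandiose claim" thesis?

- The thesis that, for any task where AI can be given many examples of success and failure, it will match the best human performance within a few years.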

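Why do people find it hard to follow AI progress in real time?

- Because the goalposts keep moving: as soon as AI reaches one goal, people set the next.

What is one of the AI safety projects under way at OpenAI?

- A project to watermark the output of GPT and other large language models.

What is the difference between AI creativity and human creativity?

- Human creativity is a unique, unrepeatable, one-time event. AI can reproduce creative work without limit, but that limits how unique any of it can be.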

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

Accenture CEO on earnings beat and revenue cut, spending and generative AI

Google AI Health Event: Everything Revealed in 13 Minutes

🇺🇸 Walls of Shame: The US-Mexican Border l Featured Documentaries

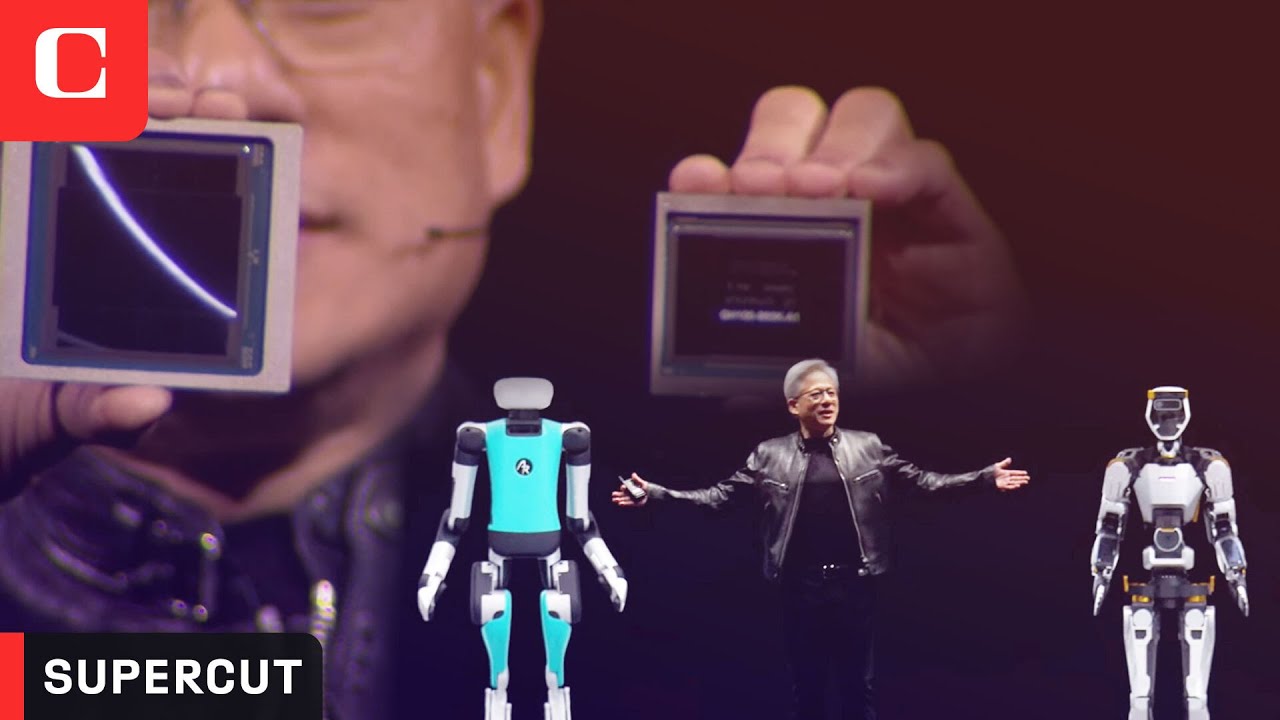

Nvidia 2024 AI Event: Everything Revealed in 16 Minutes

ReAct Agent (Part 1, Introduction to Agents)

This Midjourney Update is WILD + Pika's New AI Video Feature

5.0 / 5 (0 votes)