Don’t Build AI Products The Way Everyone Else Is Doing It

Summary

TLDR: This video script advocates a strategic approach to building AI products that are unique, valuable, and efficient. It critiques the common practice of simply wrapping existing large language models like ChatGPT, highlighting issues like lack of differentiation, high costs, and slow performance. Instead, it proposes a toolchain approach, combining specialized AI models with traditional coding for targeted problem-solving. This method involves first exploring the problem space using standard programming, then introducing AI models only for specific challenges that are difficult to solve with conventional code. By owning and continuously improving these custom models, companies can create differentiated, cost-effective, and high-performing AI solutions tailored to their needs.

Takeaways

- 🔑 Don't just wrap existing AI models like ChatGPT; build your own custom AI toolchain for a differentiated, valuable, and fast product.

- ⚠️ Relying solely on large pre-trained models like ChatGPT is risky: products built on them are easy for competitors to copy, and the models themselves are expensive to run, slow, and difficult to customize.

- 🧩 Break down complex problems into smaller parts that can be solved with specialized AI models combined with traditional code.

- 🔍 Explore the problem space using normal programming practices first, then identify areas that require specialized AI models.

- 📊 Generate your own training data creatively, e.g., using web scraping or other techniques, to train custom AI models.

- 🎯 Train specialized AI models for specific tasks using off-the-shelf tools like Google's Vertex AI.

- 🧱 Connect multiple specialized AI models with traditional code to create the final product.

- ⚡ Custom AI toolchains can be faster, more reliable, cheaper, and more differentiated than using large pre-trained models.

- 🔄 Continuously improve your AI models by incorporating user feedback and new data.

- 🔐 Owning your AI models allows for better control, privacy, and customization compared to relying on third-party models.
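The toolchain idea in the takeaways above can be sketched roughly as follows. This is a minimal illustration, not the speaker's implementation: `detect_images` is a hypothetical stand-in for a small specialized model (it returns fixed boxes here), and `Box`, `sort_top_to_bottom`, `to_markup`, and `run_toolchain` are names invented for the example. The point is only that the model handles one narrow step while ordinary code does the rest.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Box:
    """A detected region on a screenshot, in pixels."""
    label: str
    x: int
    y: int
    width: int
    height: int


def detect_images(screenshot: bytes) -> List[Box]:
    # Hypothetical stand-in for a specialized object detection model.
    # A real system would run a trained model; this stub returns fixed boxes.
    return [Box("image", 0, 120, 200, 80), Box("image", 0, 0, 200, 100)]


def sort_top_to_bottom(boxes: List[Box]) -> List[Box]:
    # Plain code: ordering regions is deterministic and needs no model.
    return sorted(boxes, key=lambda b: (b.y, b.x))


def to_markup(boxes: List[Box]) -> str:
    # Plain code: turn each region into a line of markup.
    return "\n".join(
        f'<img width="{b.width}" height="{b.height}">' for b in boxes
    )


def run_toolchain(screenshot: bytes) -> str:
    boxes = detect_images(screenshot)      # specialized model step
    ordered = sort_top_to_bottom(boxes)    # ordinary code
    return to_markup(ordered)              # ordinary code


if __name__ == "__main__":
    print(run_toolchain(b""))
```

Because each step is a separate function, a stub like `detect_images` can later be swapped for a trained model without touching the surrounding code.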

Q & A

What is the main issue with building AI products by simply wrapping other models like ChatGPT?

-The main issue is that this approach does not create differentiated technology. Competitors can replicate it easily, which puts the product at risk of being commoditized.

What are the other major problems with relying solely on large language models like ChatGPT?

-Other major problems include the high cost of running large, complex models, slow performance for applications that require instant responses, and limited customizability, even with fine-tuning.

How did the speaker's company approach building their Visual Co-Pilot product?

-Instead of relying on a single large language model, they created their own toolchain by combining a fine-tuned LLM with other technologies and custom-trained models for specific tasks.

Why did the speaker recommend not using AI initially when building an AI product?

-The speaker recommended exploring the problem space using normal programming practices first, to determine which areas truly require specialized AI models. This approach avoids building overly complex models from the start.
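One way to picture this "normal code first" step is to run a simple rule-based solver over sample inputs and collect the cases it cannot handle; only those leftovers are candidates for a specialized model. The sketch below assumes a made-up task (labeling text by font size) and made-up thresholds; `classify_text_by_rules` and `split_by_coverage` are hypothetical names, not something from the video.

```python
from typing import Callable, List, Optional, Tuple


def classify_text_by_rules(font_size: int) -> Optional[str]:
    """Rule-based guess; returns None when the rules are not confident."""
    if font_size >= 24:
        return "heading"
    if font_size <= 16:
        return "paragraph"
    return None  # ambiguous range: plain rules give up here


def split_by_coverage(
    inputs: List[int],
    solver: Callable[[int], Optional[str]],
) -> Tuple[List[Tuple[int, str]], List[int]]:
    """Separate inputs the rules solve from inputs they cannot solve."""
    solved: List[Tuple[int, str]] = []
    unsolved: List[int] = []
    for item in inputs:
        result = solver(item)
        if result is None:
            unsolved.append(item)
        else:
            solved.append((item, result))
    return solved, unsolved


if __name__ == "__main__":
    solved, unsolved = split_by_coverage([12, 18, 32, 20], classify_text_by_rules)
    print("handled by plain code:", solved)
    print("candidates for a model:", unsolved)
```

If the unsolved list stays small, the rules may be enough; if it is large and the cases resist further rules, that is a signal that a specialized model is worth training for that step.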

How did the speaker's company generate data to train their object detection model?

-They used Puppeteer to automate opening websites, taking screenshots, and mapping the locations of images on the page. This generated the input and output data needed to train the object detection model.
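As a rough sketch of the data-preparation half of that process, the snippet below assumes a browser automation step (such as Puppeteer) has already saved a screenshot and reported each image's bounding box in pixels. It then converts those boxes into normalized labels, one JSON record per screenshot. The record layout, the field names, and the functions `normalize_box` and `build_record` are assumptions for illustration, not the format of any particular training service.

```python
import json
from typing import Dict, List, Optional


def normalize_box(
    box: Dict[str, float], viewport_width: int, viewport_height: int
) -> Optional[Dict[str, float]]:
    """Clip a pixel box to the viewport and scale it to the 0..1 range."""
    x_min = max(0.0, box["x"])
    y_min = max(0.0, box["y"])
    x_max = min(float(viewport_width), box["x"] + box["width"])
    y_max = min(float(viewport_height), box["y"] + box["height"])
    if x_max <= x_min or y_max <= y_min:
        return None  # box lies entirely outside the screenshot
    return {
        "x_min": round(x_min / viewport_width, 4),
        "y_min": round(y_min / viewport_height, 4),
        "x_max": round(x_max / viewport_width, 4),
        "y_max": round(y_max / viewport_height, 4),
    }


def build_record(
    screenshot_path: str,
    viewport_width: int,
    viewport_height: int,
    pixel_boxes: List[Dict[str, float]],
) -> str:
    """Build one JSON line pairing a screenshot (input) with its boxes (output)."""
    boxes = []
    for pixel_box in pixel_boxes:
        normalized = normalize_box(pixel_box, viewport_width, viewport_height)
        if normalized is not None:
            boxes.append({"label": "image", **normalized})
    return json.dumps({"image": screenshot_path, "boxes": boxes})


if __name__ == "__main__":
    # Hypothetical values, as a browser automation script might report them.
    print(build_record(
        "page-001.png", 1000, 500,
        [{"x": 100, "y": 50, "width": 200, "height": 100},
         {"x": 1200, "y": 0, "width": 50, "height": 50}],
    ))
```

Normalizing to the 0..1 range keeps labels valid if screenshots are later resized, and dropping off-screen boxes avoids training on objects the screenshot does not actually show.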

What are the advantages of owning and training your own models, according to the speaker?

-Owning and training your own models allows for faster improvements, lower costs, better privacy control, and the ability to meet specific customer requirements that pre-trained models may not address.

What advice did the speaker give for building AI products?

-The speaker advised using AI for as little as possible, and instead relying on normal code combined with specialized AI models for critical areas. This approach aims to create faster, more reliable, and more cost-effective products.

How does the speaker's approach differ from the common perception of how AI products are built?

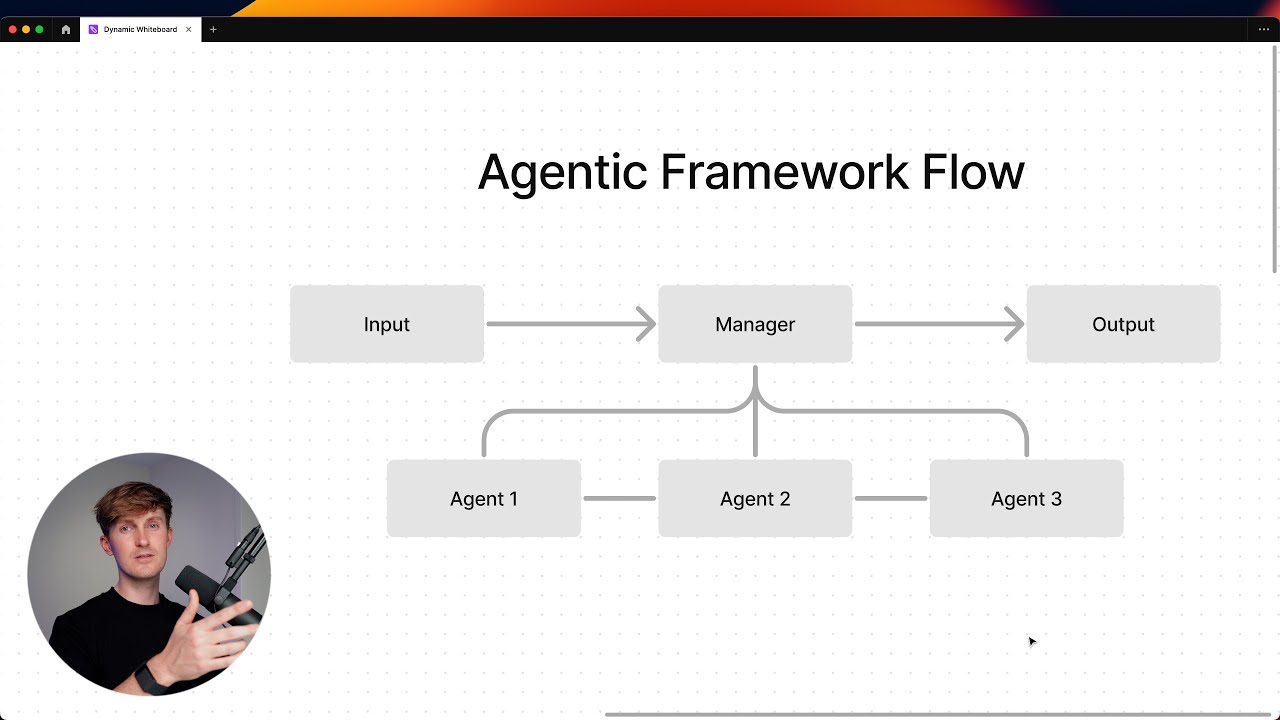

-The speaker's approach differs from the misconception that AI products are built using a single, large model that handles all inputs and outputs. Instead, the speaker advocates for a toolchain of specialized models combined with regular code.

What example did the speaker use to illustrate the toolchain approach?

-The speaker used the example of self-driving cars, which are not built using a single AI brain, but rather a toolchain of specialized models for tasks like computer vision, predictive decision-making, and natural language processing, combined with regular code.

What advice did the speaker give for companies with strict privacy requirements?

-For companies with strict privacy requirements, the speaker suggested that owning and controlling the entire technology stack makes it possible to hold every model to a high privacy bar, and even to let those companies plug in their own in-house or enterprise language models.
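A pluggable design of this kind might look like the sketch below: the product code depends only on a small interface, so a customer's in-house model can be substituted for the default one. Everything here is hypothetical (`TextModel`, `InHouseModel`, `CodeRefiner`, and the prompt text); the stub model only echoes its prompt and stands in for a real model call.

```python
from typing import Protocol


class TextModel(Protocol):
    """Minimal interface the product needs from any language model."""

    def complete(self, prompt: str) -> str:
        ...


class InHouseModel:
    """Hypothetical stand-in for a customer's own model; echoes the prompt."""

    def complete(self, prompt: str) -> str:
        return f"[in-house] {prompt}"


class CodeRefiner:
    """Product code that works with whichever model is plugged in."""

    def __init__(self, model: TextModel) -> None:
        self.model = model

    def refine(self, code: str) -> str:
        return self.model.complete(f"Clean up this code:\n{code}")


if __name__ == "__main__":
    refiner = CodeRefiner(InHouseModel())
    print(refiner.refine("<div><div>Hello</div></div>"))
```

Because `CodeRefiner` never names a specific vendor, data only flows to whichever model the customer chooses to supply.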
