How to Manage DBT Workflows Using Airflow!

Summary

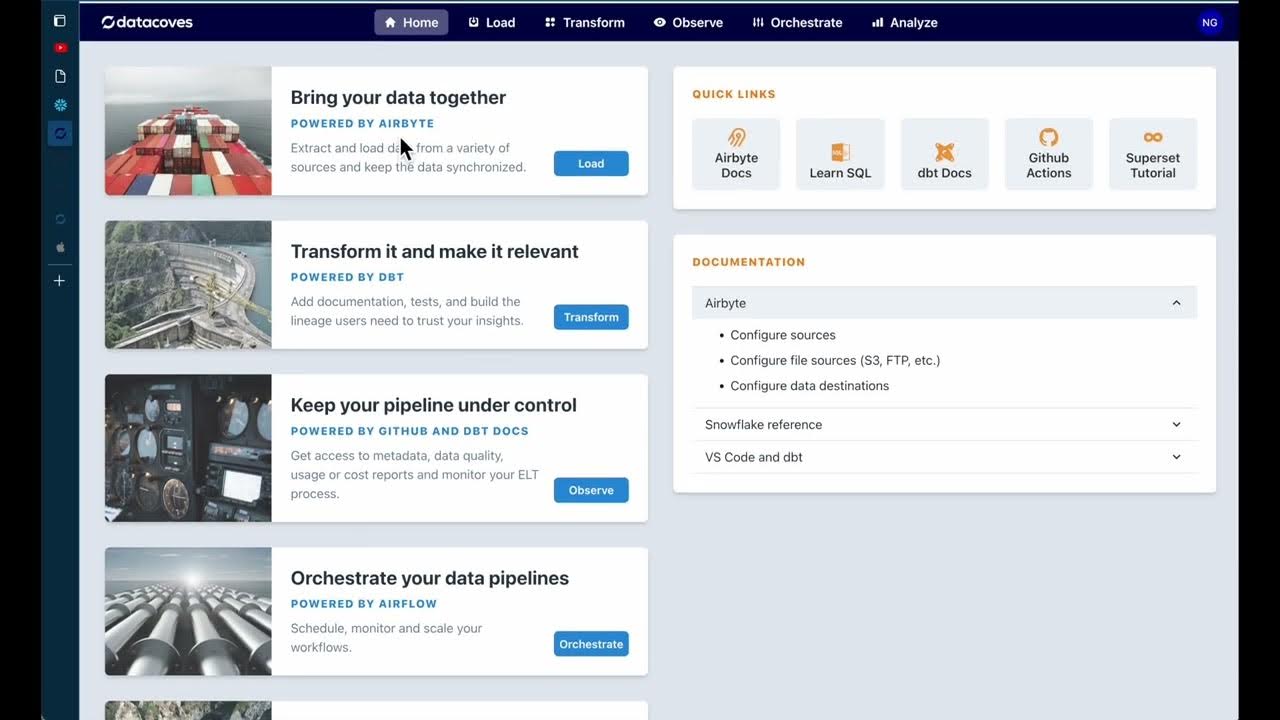

TLDRIn this tutorial, the speaker demonstrates how to create an efficient data pipeline using DBT, Apache Airflow, and the Cosmos library for visualizing DBT workflows. The process simplifies data ingestion, transformation, and monitoring by integrating Python, SQL, and DBT tasks in a single Airflow interface. By leveraging the Astro SDK, users can seamlessly move data between SQL and Python, reducing boilerplate code. The Cosmos library ensures that DBT workflows are visualized within Airflow, allowing users to monitor and manage tasks from one unified dashboard, streamlining the entire data processing pipeline.

Takeaways

- 😀 The Cosmos library for Airflow allows DBT workflows to be visualized as task groups within Airflow, making it easier to monitor data pipelines from one interface.

- 😀 The Astro SDK helps seamlessly pass data between SQL and Python tasks without needing to switch providers, simplifying database interactions.

- 😀 Using the AQL function in Astro SDK, data can be processed as a pandas DataFrame and loaded directly into a database, reducing the need for format conversions.

- 😀 A crucial step in the pipeline is creating a Postgres database connection for executing DBT tasks and transformations.

- 😀 The DBT project is configured with task-specific variables, such as project name, executable file, and DBT root path for easy access and integration within the Airflow DAG.

- 😀 Data is ingested from a local CSV file (simulating API or database input) and processed to analyze energy capacity, such as the percentage of solar and renewable energy.

- 😀 Task logging in Airflow allows tracking and monitoring of data processing, including sorting and deduplication operations.

- 😀 The integration of DBT and Airflow using Cosmos visualizes the entire data pipeline, showing all tasks from data ingestion to SQL transformations in one unified interface.

- 😀 The AQL decorator enables you to load files into the Postgres database directly, eliminating the need for intermediate data format conversions, such as from CSV to pandas DataFrame.

- 😀 Once the DAG is configured, the full DBT workflow can be monitored through the Airflow UI, providing transparency in data transformations and progress tracking.

Q & A

What is the main purpose of combining Airflow, DBT, and Cosmos in this tutorial?

-The main purpose is to streamline the data pipeline monitoring and visualization process by using Cosmos to visualize DBT workflows directly in the Airflow UI, allowing users to monitor everything from one interface instead of toggling between multiple tools.

What is the role of Cosmos in this setup?

-Cosmos is used to visualize DBT workflows as task groups within Airflow, making it easier to monitor and manage DBT tasks without needing to switch interfaces or systems.

How does the Astro SDK help in the pipeline?

-The Astro SDK allows seamless integration between SQL and Python tasks. It helps pass data between databases and Python code without needing to manually convert between data formats like pandas dataframes and SQL tables.

Why is the AQL decorator important in this setup?

-The AQL decorator is used to create tasks that interact with data. It allows for easy loading and processing of data into databases, eliminating the need to manually convert pandas dataframes to database tables before running SQL operations.

How does the DBT task group work in this setup?

-The DBT task group is responsible for running the DBT transformations on the data after it has been loaded into the database. This group allows you to perform SQL operations on the data within Airflow, automating the data processing pipeline.

What is the significance of using a Postgres database in this example?

-The use of a Postgres database in this example simplifies the process of loading and transforming data, as Postgres is widely supported and can easily integrate with DBT and Airflow. However, the setup can be adapted to work with other databases as well.

Can the pipeline be used with other data sources besides the local CSV file?

-Yes, the pipeline can be adapted to pull data from different sources like APIs or other databases. In this tutorial, a local CSV file is used for simplicity, but it can be replaced with any data source that fits your use case.

How does Airflow help in monitoring the pipeline?

-Airflow allows users to monitor the entire pipeline through its UI, where users can visualize task execution, track errors, and view logs. Cosmos enhances this by visualizing DBT workflows, so users can see the full data pipeline within Airflow.

What happens if there are errors in the pipeline during execution?

-If errors occur, they will be logged in the Airflow task logs. The script includes logging functionality to help track issues and debug the pipeline. This ensures that any issues in the pipeline can be easily identified and resolved.

How do you visualize the DBT task group within the Airflow UI?

-Once the DBT task group is set up, it can be visualized in the Airflow UI by expanding the task group. Cosmos allows you to view the detailed DBT workflow, including the specific transformations applied to the data, directly within the Airflow UI.

Outlines

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифMindmap

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифKeywords

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифHighlights

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифTranscripts

Этот раздел доступен только подписчикам платных тарифов. Пожалуйста, перейдите на платный тариф для доступа.

Перейти на платный тарифПосмотреть больше похожих видео

How to Create an ELT Pipeline Using Airflow, Snowflake, and dbt!

dbt + Airflow = ❤ ; An open source project that integrates dbt and Airflow

Airflow with DBT tutorial - The best way!

Code along - build an ELT Pipeline in 1 Hour (dbt, Snowflake, Airflow)

How to build and automate your Python ETL pipeline with Airflow | Data pipeline | Python

dbt model Automation compared to WH Automation framework

5.0 / 5 (0 votes)