A Survey of Techniques for Maximizing LLM Performance

Summary

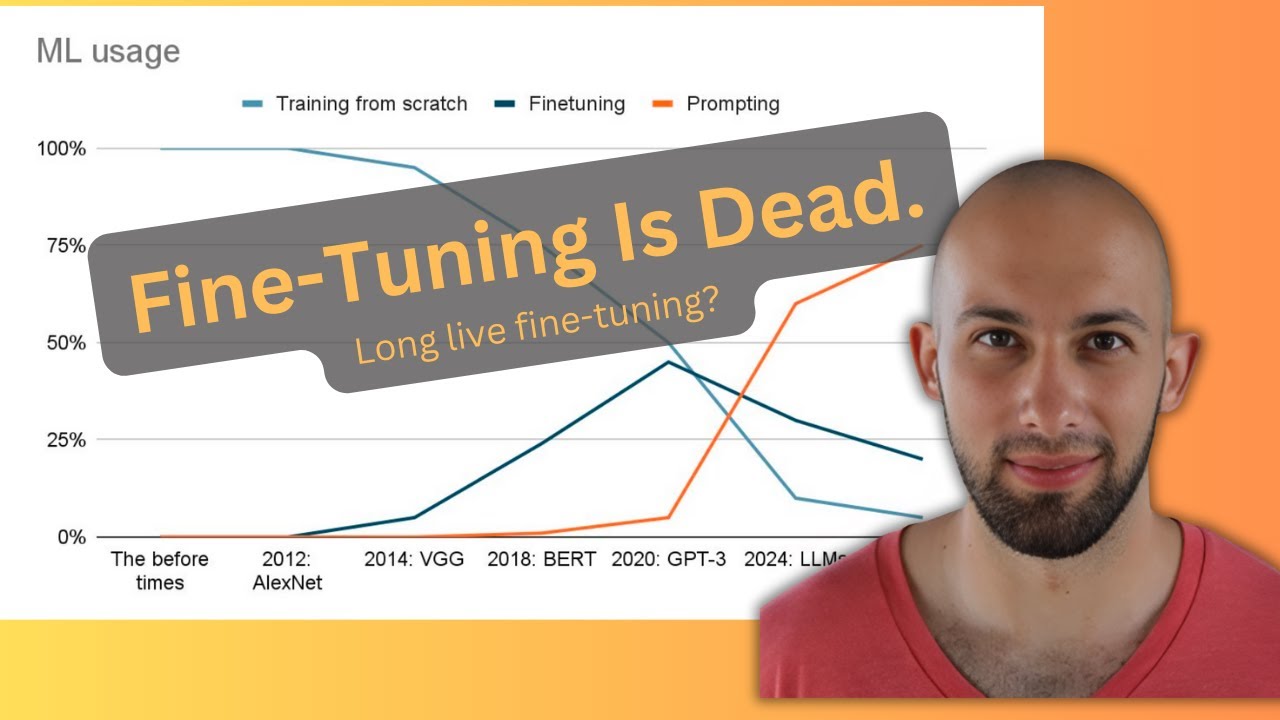

TLDR: The video discusses effective strategies for fine-tuning language models to replicate specific writing styles and enhance task performance, such as generating SQL queries. It emphasizes the importance of high-quality data, understanding training parameters, and iterative evaluation techniques. The presenters showcase their methodology through the Spider benchmark, demonstrating that starting with simple prompt engineering can yield competitive results. By combining fine-tuning with retrieval-augmented generation (RAG), they highlight how to maximize model efficiency and improve outputs, advocating for a flexible, iterative approach to model enhancement.

Takeaways

- 😀 Fine-tuning a model requires careful consideration of the dataset to ensure it aligns with the desired output style.

- 😀 Starting with prompt engineering and few-shot learning can provide quick insights into model performance without extensive investment.

- 😀 Establishing a baseline performance through simple techniques is crucial before progressing to more complex fine-tuning methods.

- 😀 The quality of training data is more important than quantity; focus on fewer high-quality examples.

- 😀 Combining fine-tuning with retrieval-augmented generation (RAG) can enhance context utilization and model efficiency.

- 😀 It's vital to evaluate fine-tuned models through expert ranking and comparisons with other models to assess improvements.

- 😀 Iterative feedback loops are essential for continual model refinement after deployment, utilizing production data for further training.

- 😀 A case study on SQL query generation demonstrated the effectiveness of simple prompt engineering and RAG techniques in improving model accuracy.

- 😀 Understanding hyperparameters and loss functions during fine-tuning can help prevent issues like overfitting and catastrophic forgetting.

- 😀 The process of fine-tuning is non-linear; expect to revisit and adjust techniques multiple times to achieve desired results.
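As a rough illustration of the "start with prompt engineering and few-shot learning" takeaway, the sketch below assembles a few-shot text-to-SQL prompt from a schema, a handful of worked examples, and a new question. The function name `build_few_shot_prompt`, the prompt wording, and the toy `singer` schema are assumptions made for this example; they are not taken from the video.

```python
from typing import List, Tuple


def build_few_shot_prompt(schema: str, examples: List[Tuple[str, str]], question: str) -> str:
    """Assemble a prompt: instruction, schema, worked examples, then the new question."""
    parts = [
        "Translate the question into a SQL query for this schema.",
        "",
        "Schema:",
        schema.strip(),
        "",
    ]
    # Each worked example shows the model the expected question -> SQL mapping.
    for example_question, example_sql in examples:
        parts += [f"Question: {example_question}", f"SQL: {example_sql}", ""]
    # The prompt ends with an open "SQL:" slot for the model to complete.
    parts += [f"Question: {question}", "SQL:"]
    return "\n".join(parts)


# Hypothetical toy schema and examples, for illustration only.
SCHEMA = "CREATE TABLE singer (singer_id INTEGER PRIMARY KEY, name TEXT, age INTEGER);"
EXAMPLES = [
    ("How many singers are there?", "SELECT COUNT(*) FROM singer;"),
    ("List the names of all singers.", "SELECT name FROM singer;"),
]

prompt = build_few_shot_prompt(SCHEMA, EXAMPLES, "What is the average age of singers?")
print(prompt)
```

Measuring accuracy with a prompt like this gives the baseline that later fine-tuning or RAG experiments can be compared against.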

Q & A

What is the primary focus of the discussion in the transcript?

- The discussion focuses on the process of fine-tuning large language models (LLMs) and integrating retrieval-augmented generation (RAG) techniques to improve their performance on tasks such as generating SQL queries from natural language.

Why is it important to analyze writing style when fine-tuning a model?

- Analyzing writing style is crucial because it helps ensure that the fine-tuned model replicates the desired tone and behavior, particularly for applications that require specific communication styles, such as internal messaging platforms.

What are some common methods for collecting datasets for fine-tuning?

- Common methods include downloading open-source datasets, purchasing data, employing human labelers to collect and annotate data, or distilling data from larger models, provided that the distillation complies with the model provider's terms of service.

What role do hyperparameters play in the fine-tuning process?

- Hyperparameters are critical because they control the model's learning process. Poorly chosen values can lead to overfitting, underfitting, or catastrophic forgetting during fine-tuning.
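One common way to guard against overfitting is to watch validation loss and stop once it no longer improves. The sketch below is a minimal, framework-free version of that idea. The function name `early_stopping_epoch`, the `patience` and `min_delta` parameters, and the loss values are all illustrative assumptions; the video does not prescribe this particular procedure.

```python
from typing import List


def early_stopping_epoch(val_losses: List[float], patience: int = 2, min_delta: float = 0.0) -> int:
    """Return the index of the best epoch seen before training would be stopped.

    Scanning stops once validation loss has failed to improve by more than
    `min_delta` for `patience` consecutive epochs.
    """
    best_epoch = 0
    best_loss = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # validation loss is rising: a typical overfitting signal
    return best_epoch


# Made-up validation losses: they fall, then climb as the model starts to overfit.
validation_losses = [1.20, 0.95, 0.88, 0.91, 0.97, 1.05]
best = early_stopping_epoch(validation_losses, patience=2)
print(f"Keep the checkpoint from epoch index {best}")
```

Catastrophic forgetting needs a separate check, for example re-running a general-purpose evaluation set after fine-tuning; a validation loss on the fine-tuning task alone will not reveal it.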

How can models be evaluated after fine-tuning?

- Models can be evaluated through various methods, including expert human rankings of outputs, peer model comparisons, or using a more powerful model to rank outputs based on quality.
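Whichever judge is used (a human expert or a stronger model), the rankings usually reduce to pairwise preferences that can be aggregated into a win rate. The following sketch shows one simple aggregation. The tuple layout, the `"tie"` label, and the model names are assumptions made for this example.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple


def win_rates(judgments: Iterable[Tuple[str, str, str]]) -> Dict[str, float]:
    """Aggregate pairwise judgments into a per-model win rate.

    Each judgment is (model_a, model_b, winner), where winner is the name of
    one of the two models or the string "tie" (counted as half a win each).
    """
    wins: Counter = Counter()
    comparisons: Counter = Counter()
    for model_a, model_b, winner in judgments:
        comparisons[model_a] += 1
        comparisons[model_b] += 1
        if winner == "tie":
            wins[model_a] += 0.5
            wins[model_b] += 0.5
        elif winner in (model_a, model_b):
            wins[winner] += 1
        else:
            raise ValueError(f"winner {winner!r} is not one of the compared models")
    return {model: wins[model] / comparisons[model] for model in comparisons}


# Hypothetical judgments comparing a base model with a fine-tuned one.
judgments = [
    ("base", "fine_tuned", "fine_tuned"),
    ("base", "fine_tuned", "fine_tuned"),
    ("base", "fine_tuned", "base"),
    ("base", "fine_tuned", "tie"),
]
rates = win_rates(judgments)
print(rates)
```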

What is a feedback loop in the context of model fine-tuning?

- A feedback loop is the iterative process in which model outputs are evaluated, the evaluations are used to refine the dataset, and the model is fine-tuned again on the refined data, creating a cycle of continuous improvement.
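One step of such a loop can be sketched as a filter that promotes well-rated production outputs into the next training set. The record fields (`prompt`, `output`, `rating`), the 1 to 5 rating scale, and the threshold below are hypothetical choices for illustration, not details from the video.

```python
from typing import Dict, List


def select_for_retraining(
    production_logs: List[Dict],
    training_set: List[Dict],
    min_rating: int = 4,
) -> List[Dict]:
    """Pick highly rated production outputs whose prompts are not yet in the training set."""
    known_prompts = {example["prompt"] for example in training_set}
    fresh_examples = []
    for log in production_logs:
        if log["rating"] >= min_rating and log["prompt"] not in known_prompts:
            fresh_examples.append({"prompt": log["prompt"], "completion": log["output"]})
            known_prompts.add(log["prompt"])  # avoid adding the same prompt twice
    return fresh_examples


# Hypothetical data: one existing training example and three rated production logs.
training_set = [{"prompt": "How many singers are there?", "completion": "SELECT COUNT(*) FROM singer;"}]
production_logs = [
    {"prompt": "How many singers are there?", "output": "SELECT COUNT(*) FROM singer;", "rating": 5},
    {"prompt": "List singer names.", "output": "SELECT name FROM singer;", "rating": 5},
    {"prompt": "Oldest singer?", "output": "SELECT * FROM singer;", "rating": 2},
]
new_examples = select_for_retraining(production_logs, training_set)
print(new_examples)
```

The selected examples would then be appended to the training data for the next fine-tuning run, and the resulting model evaluated again.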

What are some best practices recommended for fine-tuning LLMs?

- Best practices include starting with prompt engineering and few-shot learning, establishing a performance baseline, focusing on high-quality data, and adopting an iterative approach to refine the model progressively.

What was the specific problem the team addressed using the Spider 1.0 benchmark?

- The team aimed to produce syntactically correct SQL queries from natural language questions and corresponding database schemas.
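A quick way to check whether a generated query is well-formed against a given schema is to ask a database engine to compile it without running it. The sketch below does this with Python's built-in `sqlite3` module and an in-memory database. It assumes the SQLite dialect, and the helper name `is_valid_sql` and the toy schema are illustrative. Note that this check is slightly stricter than pure syntax: it also rejects queries that reference tables or columns missing from the schema.

```python
import sqlite3


def is_valid_sql(schema_sql: str, query: str) -> bool:
    """Return True if `query` compiles against `schema_sql` in SQLite."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.executescript(schema_sql)  # create the (empty) tables
        # EXPLAIN makes SQLite compile the statement without executing it,
        # so syntax errors and unknown tables or columns raise an exception.
        conn.execute(f"EXPLAIN {query}")
        return True
    except (sqlite3.Error, sqlite3.Warning):
        return False
    finally:
        conn.close()


# Hypothetical toy schema, for illustration only.
SCHEMA = "CREATE TABLE singer (singer_id INTEGER PRIMARY KEY, name TEXT, age INTEGER);"

print(is_valid_sql(SCHEMA, "SELECT AVG(age) FROM singer"))   # well-formed query
print(is_valid_sql(SCHEMA, "SELEC AVG(age) FROM singer"))    # misspelled keyword
print(is_valid_sql(SCHEMA, "SELECT salary FROM singer"))     # unknown column
```

A compiling query is not necessarily a correct one; checking that it returns the right rows requires comparing its result with that of a reference query on populated tables.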

What was one of the significant findings from the RAG approach used in their experiments?

- Using hypothetical document embeddings (generating a hypothetical SQL query from the question and using that query, rather than the question itself, for the similarity search) resulted in a substantial performance improvement.
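The retrieval idea can be sketched as follows: draft a SQL query for the incoming question, embed the draft, and retrieve the stored examples whose SQL is most similar to it. Everything concrete here is an assumption made to keep the sketch self-contained: `draft_sql` is a hard-coded stand-in for an LLM call, `embed` is a toy bag-of-words vector rather than a real embedding model, and the example store is invented.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy embedding: a bag of lowercase word tokens (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z_]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def draft_sql(question: str) -> str:
    """Hypothetical stub: in practice an LLM would draft this query from the question."""
    return "SELECT AVG(age) FROM singer"


# Invented store of (question, SQL) examples to retrieve from.
EXAMPLES: List[Tuple[str, str]] = [
    ("How many concerts were held?", "SELECT COUNT(*) FROM concert"),
    ("What is the highest singer age?", "SELECT MAX(age) FROM singer"),
    ("List stadium names.", "SELECT name FROM stadium"),
]


def retrieve(question: str, k: int = 1) -> List[Tuple[str, str]]:
    """Rank stored examples by similarity between their SQL and the drafted SQL."""
    query_vector = embed(draft_sql(question))
    ranked = sorted(EXAMPLES, key=lambda ex: cosine(query_vector, embed(ex[1])), reverse=True)
    return ranked[:k]


top = retrieve("What is the average age of singers?")
print(top)
```

The retrieved examples would then be placed in the prompt as few-shot demonstrations. The draft query does not need to be correct; it only needs to resemble the right answer closely enough to pull in relevant examples.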

How did combining fine-tuning with RAG impact the results of the team's model?

- Combining fine-tuning with RAG led to improved performance metrics, getting the model close to state-of-the-art results without requiring complex preprocessing techniques.
