"I want Llama3 to perform 10x with my private knowledge" - Local Agentic RAG w/ llama3

Summary

TLDR: This video tutorial demonstrates how to build an agentic RAG system that runs Llama 3 on a local machine, using LangChain, LangGraph, and Firecrawl. The agent retrieves relevant documents, grades their relevance, and generates answers to user queries. If the retrieved documents are not relevant, or the answer does not address the question, it runs a web search. It also checks the final answer for hallucinations before returning it. The workflow is built on LangGraph's shared state and conditional edges, which trade some speed for accuracy. The project shows how combining a local model with web search can produce reliable agents for complex question-answering tasks.

Takeaways

- 😀 A locally run Llama 3 model can power retrieval-augmented generation (RAG) agents that answer user queries by retrieving relevant documents and generating answers from them.

- 😀 LangChain and LangGraph structure and manage the agent's workflow, including document retrieval, relevance grading, and answer generation.

- 😀 The Llama 3 model is downloaded and run locally, where it handles the relevance-grading and answer-generation steps.

- 😀 Firecrawl crawls documents from a website so they can be indexed into a vector database and retrieved quickly for each user query.

- 😀 A document grader assesses whether the retrieved documents are relevant to the question. If they are not, the agent runs a web search with Tavily, a search engine built for AI agents.

- 😀 If relevant documents are found, Llama 3 (called through LangChain) generates an answer from them. If not, the agent falls back to web search for supplemental information.

- 😀 The agent includes a hallucination checker to ensure that the generated answer is factual and grounded in the relevant documents.

- 😀 A shared workflow state holding the question, retrieved documents, generated answer, and search results is carried across the agent's steps, so each step can read what earlier steps produced.

- 😀 LangGraph defines nodes for document retrieval, relevance grading, and answer generation, with conditional edges routing the workflow based on specific outcomes.

- 😀 The agent follows a multi-step process: document retrieval, relevance checking, answer generation, and a final validation that the answer is accurate and grounded.

- 😀 These checks improve answer quality, but the tradeoff is slower performance, because every validation and search step adds work to each query.
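The node-and-edge structure described above can be sketched without any framework. This is a minimal, hypothetical illustration in plain Python, not LangGraph's API: the node names, the toy corpus, and the routing rule are assumptions, and each node is a trivial stand-in for what would be a Llama 3 or vector-store call in the real agent.

```python
from typing import Callable, Dict, List, Optional, TypedDict


class AgentState(TypedDict, total=False):
    question: str
    documents: List[str]
    generation: str
    web_search: bool


# Hypothetical stand-ins: in the real agent these steps would call Llama 3
# or a vector store. They are deliberately trivial so the control flow is visible.
TOY_CORPUS = {"agents": "Agents plan tasks, call tools, and keep memory."}


def retrieve(state: AgentState) -> AgentState:
    question = state["question"].lower()
    return {"documents": [text for key, text in TOY_CORPUS.items() if key in question]}


def grade_documents(state: AgentState) -> AgentState:
    kept = [d for d in state["documents"] if d.strip()]  # a real grader would ask the LLM
    return {"documents": kept, "web_search": not kept}


def web_search(state: AgentState) -> AgentState:
    return {"documents": state["documents"] + [f"Web result for: {state['question']}"]}


def generate(state: AgentState) -> AgentState:
    return {"generation": f"Answer based on {len(state['documents'])} document(s)."}


def route_after_grading(state: AgentState) -> str:
    """Conditional edge: go to web search only when no relevant document survived."""
    return "web_search" if state.get("web_search") else "generate"


NODES: Dict[str, Callable[[AgentState], AgentState]] = {
    "retrieve": retrieve,
    "grade_documents": grade_documents,
    "web_search": web_search,
    "generate": generate,
}
FIXED_EDGES: Dict[str, Optional[str]] = {
    "retrieve": "grade_documents",
    "web_search": "generate",
    "generate": None,  # None marks the end of the run
}
CONDITIONAL_EDGES: Dict[str, Callable[[AgentState], str]] = {
    "grade_documents": route_after_grading,
}


def run(question: str, entry: str = "retrieve") -> AgentState:
    state: AgentState = {"question": question, "documents": []}
    node: Optional[str] = entry
    while node is not None:
        state.update(NODES[node](state))  # merge the node's partial update into shared state
        node = CONDITIONAL_EDGES[node](state) if node in CONDITIONAL_EDGES else FIXED_EDGES[node]
    return state


print(run("What are agents?")["generation"])  # Answer based on 1 document(s).
```

Keeping fixed edges and conditional edges in separate tables mirrors the distinction the tutorial draws: most steps always hand off to the same successor, and only the grading step needs a router.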

Q & A

What is the main goal of the tutorial?

-The tutorial shows how to build an agentic RAG system with Llama 3 on a local machine, using LangChain to integrate the components for document retrieval, answer generation, and quality checks.

What are the key steps involved in building the agentic RAG system?

-The key steps are setting up Llama 3 on a local machine, building a document retriever from pages crawled with Firecrawl, using the model as a grader to check document relevance, generating answers with LangChain, and adding checks for hallucinations and answer quality.

How does LangChain fit into the process of building the agentic RAG system?

-LangChain, together with its LangGraph library, structures and manages the workflow. It defines the logic and interactions between the steps: document retrieval, relevance checking, answer generation, and quality assessment.

What is the purpose of the Llama model in this tutorial?

-Llama is used to evaluate the relevance of retrieved documents to a user’s question, as well as to generate answers based on those documents. It acts as the decision-making and answer-generation model in the process.
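The summary does not say how the local Llama 3 model is served. The sketch below assumes Ollama on its default port (`http://localhost:11434`) and its `/api/generate` endpoint, and shows one model playing both roles: free-text generation, and JSON-constrained output for grading. The prompt wording in `rag_prompt` is a hypothetical example, not the tutorial's prompt.

```python
import json
import urllib.request
from typing import List

# Assumption: Llama 3 is served by Ollama on its default local port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "llama3", json_mode: bool = False) -> dict:
    """Request body for a single, non-streaming completion."""
    body = {"model": model, "prompt": prompt, "stream": False}
    if json_mode:
        body["format"] = "json"  # constrain output to JSON, handy for yes/no graders
    return body


def call_llama(prompt: str, json_mode: bool = False, timeout: float = 120.0) -> str:
    """Send one prompt to the local model and return the generated text."""
    payload = json.dumps(build_request(prompt, json_mode=json_mode)).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_URL, data=payload, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))["response"]


def rag_prompt(question: str, documents: List[str]) -> str:
    """Hypothetical generation prompt: answer only from the supplied context."""
    context = "\n\n".join(documents)
    return (
        "Use the context below to answer the question. "
        "If the context does not contain the answer, say that you don't know.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    )
```

After `ollama pull llama3` and with the server running, `call_llama(rag_prompt(question, docs))` would return an answer, while a grading prompt would be sent with `json_mode=True` so its reply can be parsed reliably.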

What is Firecrawl, and how does it contribute to the workflow?

-Firecrawl crawls the blog posts on a website to build the knowledge base. The crawled documents are split into smaller chunks and stored with their metadata in a vector database, so relevant chunks can be retrieved later in the process.
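Once the pages are crawled, the splitting and indexing step can be sketched as below. This is a self-contained stand-in, not Firecrawl's or LangChain's API: a fixed-size character splitter with overlap, and a bag-of-words cosine score in place of a real embedding model and vector database. The metadata keys (`source`, `title`, `chunk`) and the default sizes are illustrative assumptions.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Chunk:
    text: str
    metadata: Dict[str, str] = field(default_factory=dict)


def split_text(text: str, chunk_size: int = 250, overlap: int = 50) -> List[str]:
    """Fixed-size character windows; neighbouring chunks share `overlap` characters."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")
    if not text:
        return []
    step = chunk_size - overlap
    return [text[i:i + chunk_size] for i in range(0, max(len(text) - overlap, 1), step)]


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (a real index would use an embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[token] for token, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class InMemoryIndex:
    """Stores chunks with metadata and returns the top-k matches for a query."""

    def __init__(self) -> None:
        self.chunks: List[Chunk] = []

    def add_page(self, url: str, title: str, text: str, chunk_size: int = 250, overlap: int = 50) -> None:
        for n, piece in enumerate(split_text(text, chunk_size, overlap)):
            self.chunks.append(Chunk(piece, {"source": url, "title": title, "chunk": str(n)}))

    def search(self, query: str, k: int = 2) -> List[Chunk]:
        q = embed(query)
        return sorted(self.chunks, key=lambda c: cosine(q, embed(c.text)), reverse=True)[:k]
```

The overlap keeps a sentence that straddles a chunk boundary retrievable from either side, and storing the source URL with each chunk lets the final answer point back to the original post.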

How is document relevance assessed in the tutorial?

-Llama 3 acts as a grader that scores each retrieved document for relevance to the user's question. If the documents are relevant, the process proceeds to answer generation; if not, a web search is triggered.
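A minimal sketch of the grading step, assuming the grader is asked to reply with JSON of the form `{"score": "yes"}` or `{"score": "no"}`. The prompt text is hypothetical, and the rule "search the web only when no document survives" is one reading of the description above; the tutorial may use a different threshold. `llm` is any function that takes a prompt and returns the model's raw text, so the logic can be tested without a model.

```python
import json
from typing import Callable, List, Tuple

# Hypothetical grader prompt; doubled braces survive str.format as literal JSON braces.
GRADER_PROMPT = (
    "You are grading whether a retrieved document is relevant to a user question.\n"
    "Document:\n{document}\n\nQuestion: {question}\n"
    'Reply with JSON only: {{"score": "yes"}} or {{"score": "no"}}.'
)


def parse_binary_score(raw: str) -> bool:
    """True only for a well-formed {"score": "yes"} reply; anything else counts as 'no'."""
    try:
        score = json.loads(raw).get("score", "")
    except (json.JSONDecodeError, AttributeError):
        return False
    return str(score).strip().lower() == "yes"


def grade_documents(
    question: str, documents: List[str], llm: Callable[[str], str]
) -> Tuple[List[str], bool]:
    """Keep the documents the grader accepts and report whether a web search is needed."""
    relevant = [
        doc
        for doc in documents
        if parse_binary_score(llm(GRADER_PROMPT.format(document=doc, question=question)))
    ]
    needs_web_search = not relevant  # assumption: search only when nothing relevant survives
    return relevant, needs_web_search
```

Treating malformed replies as "not relevant" is a conservative choice: a confused grader pushes the agent toward web search instead of letting an unchecked document into the answer.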

What role does Tavily play in the system?

-Tavily is a search engine designed for AI agents. It is called when the retrieved documents are not relevant and fetches web results that may contain the information needed to answer the user's question.
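A minimal sketch of the web-search fallback node. The search tool is injected as a plain function so the node runs without network access; its result shape (a list of dicts with `url` and `content` keys) is an assumption for this sketch, not a documented response format, and the `max_results` cap is likewise illustrative.

```python
from typing import Callable, Dict, List

# Assumed result shape: each hit is a dict with at least "url" and "content".
SearchFn = Callable[[str], List[Dict[str, str]]]


def results_to_documents(results: List[Dict[str, str]], max_results: int = 3) -> List[str]:
    """Turn raw hits into plain-text documents, skipping empty hits and duplicate URLs."""
    docs: List[str] = []
    seen_urls = set()
    for hit in results:
        url = hit.get("url", "")
        content = hit.get("content", "").strip()
        if not content or url in seen_urls:
            continue
        seen_urls.add(url)
        docs.append(f"{content}\n(source: {url})")
        if len(docs) == max_results:
            break
    return docs


def web_search_node(state: Dict, search: SearchFn, max_results: int = 3) -> Dict:
    """Append web results to whatever documents the grader kept."""
    web_docs = results_to_documents(search(state["question"]), max_results)
    return {"documents": list(state.get("documents", [])) + web_docs}
```

Appending rather than replacing means any local documents that passed grading still reach the generator alongside the web results.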

How does the hallucination checker work?

-The hallucination checker verifies that the generated answer is grounded in the retrieved documents or web search results rather than containing false or fabricated information. A separate answer-quality check then confirms that the answer actually addresses the user's question.
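The two post-generation checks can be sketched as a single routing function. The prompts, the route names, and the retry cap are hypothetical; the cap is an added safeguard (not something the summary describes) that stops an answer which keeps failing the grounding check from looping forever. `judge` is any prompt-in, text-out function, such as a JSON-mode call to the local model.

```python
import json
from typing import Callable, List

Judge = Callable[[str], str]  # a prompt in, the model's raw text out

HALLUCINATION_PROMPT = (
    "Facts:\n{documents}\n\nAnswer: {generation}\n"
    'Is the answer fully supported by the facts? Reply JSON: {{"score": "yes"}} or {{"score": "no"}}.'
)
ANSWER_PROMPT = (
    "Question: {question}\nAnswer: {generation}\n"
    'Does the answer resolve the question? Reply JSON: {{"score": "yes"}} or {{"score": "no"}}.'
)


def is_yes(raw: str) -> bool:
    """Parse a {"score": ...} reply; malformed output counts as 'no'."""
    try:
        return str(json.loads(raw).get("score", "")).strip().lower() == "yes"
    except (json.JSONDecodeError, AttributeError):
        return False


def route_after_generation(
    question: str,
    documents: List[str],
    generation: str,
    judge: Judge,
    attempts: int,
    max_attempts: int = 3,
) -> str:
    """Return the next step: 'end', 'regenerate', 'web_search', or 'give_up'."""
    facts = "\n\n".join(documents)
    grounded = is_yes(judge(HALLUCINATION_PROMPT.format(documents=facts, generation=generation)))
    if not grounded:
        # Retry cap (an assumption) keeps a stubborn hallucination from looping forever.
        return "regenerate" if attempts < max_attempts else "give_up"
    useful = is_yes(judge(ANSWER_PROMPT.format(question=question, generation=generation)))
    return "end" if useful else "web_search"
```

The grounding check runs first because an ungrounded answer is not worth grading for usefulness; only a grounded but unhelpful answer sends the agent back out to search.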

What are the benefits and trade-offs of this agentic RAG setup?

-The main benefit is that every step is checked, which yields more relevant, better-grounded answers. The trade-off is speed: each grading, validation, or search step adds another model call or network request, so the agent is slower than a simple RAG pipeline that skips these checks.

What is the LangGraph state and how does it fit into the overall workflow?

-LangGraph state stores values that are shared across different steps of the workflow, such as the user’s question, the answer, and retrieved documents. This helps to maintain consistency and facilitate decision-making throughout the process.
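In LangGraph the state is typically declared as a typed dictionary, and each node returns only the keys it changes, which are then merged into the shared state. The sketch below mimics that merge behavior in plain Python so it runs without the library. The field names are illustrative, and the per-key reducer that makes `log` accumulate instead of overwrite is an assumption added to show why such rules are useful.

```python
import operator
from typing import Callable, Dict, List, TypedDict


class GraphState(TypedDict, total=False):
    """Values shared by every step (field names are illustrative)."""
    question: str
    generation: str
    web_search: bool
    documents: List[str]
    log: List[str]


# Per-key merge rules: by default a node's value overwrites the old one;
# 'log' accumulates instead (an assumed convention for this sketch).
REDUCERS: Dict[str, Callable] = {"log": operator.add}


def apply_update(state: GraphState, update: Dict) -> GraphState:
    """Return a new state with a node's partial update merged in."""
    merged: GraphState = dict(state)  # type: ignore[assignment]
    for key, value in update.items():
        if key in REDUCERS and key in merged:
            merged[key] = REDUCERS[key](merged[key], value)
        else:
            merged[key] = value
    return merged
```

Because nodes only return partial updates, a step such as the grader can replace `documents` without knowing anything about `generation`, which keeps each step small and independent.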

Outlines

このセクションは有料ユーザー限定です。 アクセスするには、アップグレードをお願いします。

今すぐアップグレードMindmap

このセクションは有料ユーザー限定です。 アクセスするには、アップグレードをお願いします。

今すぐアップグレードKeywords

このセクションは有料ユーザー限定です。 アクセスするには、アップグレードをお願いします。

今すぐアップグレードHighlights

このセクションは有料ユーザー限定です。 アクセスするには、アップグレードをお願いします。

今すぐアップグレードTranscripts

このセクションは有料ユーザー限定です。 アクセスするには、アップグレードをお願いします。

今すぐアップグレード関連動画をさらに表示

Build Anything with Llama 3 Agents, Here’s How

LangGraph: Agent Executor

How to Chat with YouTube Videos Using LlamaIndex, Llama2, OpenAI's Whisper & Python

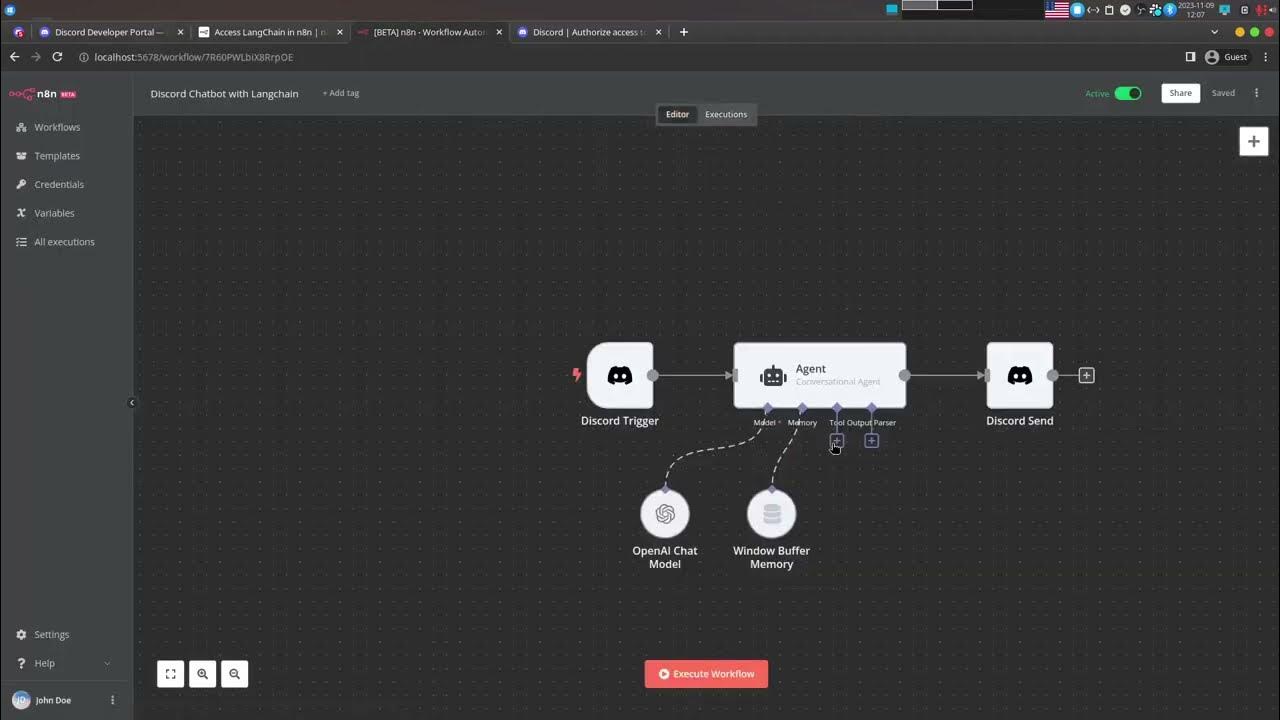

Create a No-code Discord Chatbot powered by Open AI using n8n and LangChain

Crew AI Build AI Agents Team With Local LLMs For Content Creation

Google Cloud Agent Builder - Full Walkthrough (Tutorial)

5.0 / 5 (0 votes)