S6E10 | Intuition and How Recurrent Neural Networks (RNN) Work | Deep Learning Basic

Summary

TLDR: In this session, Wira explains the fundamentals of Recurrent Neural Networks (RNNs), focusing on how they handle sequential data such as time series, audio signals, and text. Unlike traditional feedforward networks, RNNs carry a memory (the hidden state) from one step to the next, which lets them process sequences of varying lengths and preserve context across steps. The presentation shows how an RNN processes a sequence one element at a time, updating its hidden state at each step and using it to make predictions. The session also introduces Backpropagation Through Time (BPTT) for training RNNs and discusses practical applications such as text prediction.

Takeaways

- 😀 The presenter introduces RNNs (Recurrent Neural Networks) and contrasts them with CNNs (Convolutional Neural Networks), another deep learning architecture, highlighting how the two differ in handling sequential data.

- 😀 The main problem RNNs address is that traditional neural networks cannot naturally handle sequential or time-series data, where the order of inputs matters.

- 😀 Examples of sequential data include time series (like company revenue over time) and audio signals, which also have an inherent sequence.

- 😀 RNNs are particularly useful for handling data in the form of sequences, such as text, where the order of words impacts the meaning of the sentence.

- 😀 One key feature of RNNs is the ability to handle variable-length sequences, unlike traditional models that require fixed-length inputs.

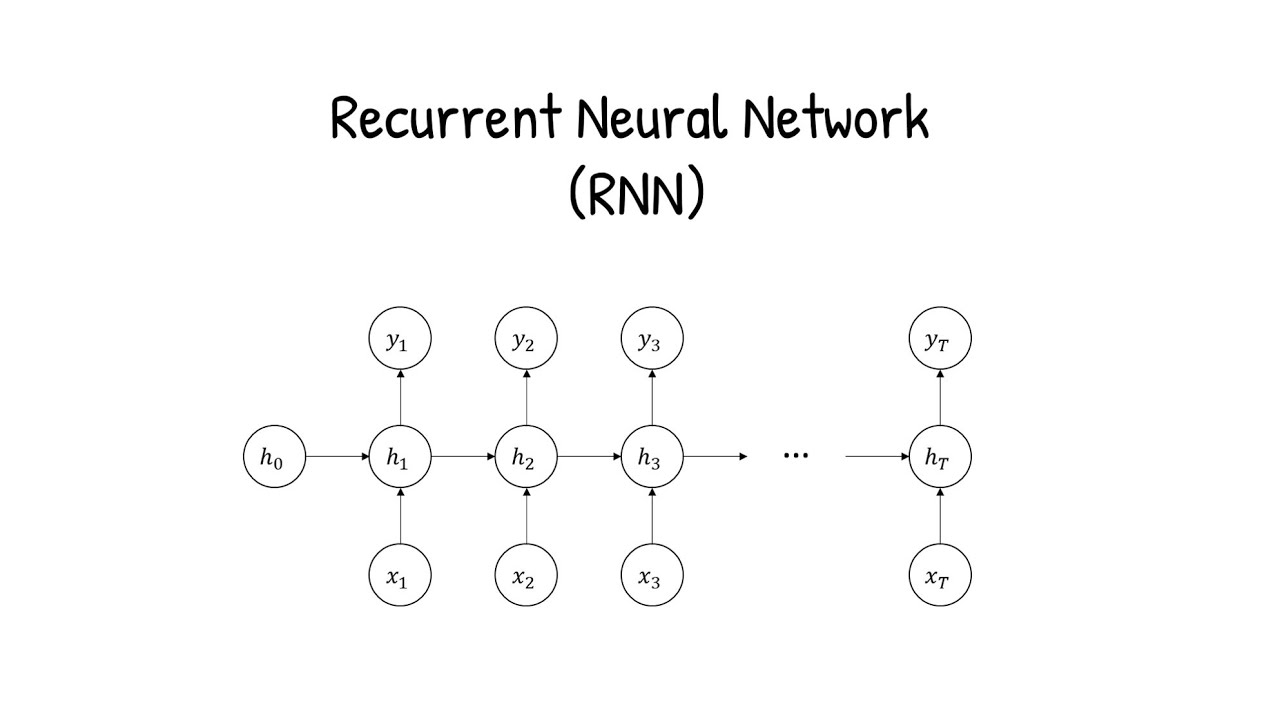

- 😀 The hidden state (memory) is central to RNNs: it carries context from earlier steps of the sequence forward so that it can influence later predictions.

- 😀 An RNN applies the same network, with the same weights, at every step of a sequence; each step combines the new input with the previous hidden state to produce an updated hidden state (memory) that influences the predictions that follow.

- 😀 The ability to propagate memory across multiple steps is essential in handling long-range dependencies in sequential data, which standard feedforward networks cannot manage.

- 😀 RNNs are trained with Backpropagation Through Time (BPTT): the network is unrolled across the time steps of the sequence, and because the same weights are used at every step, their gradients are accumulated over all steps instead of being computed for a single forward pass as in standard backpropagation.

- 😀 The basic (vanilla) RNN is simple: each step takes a linear combination of the current input and the previous hidden state and passes it through a nonlinearity (typically tanh). More advanced variants such as LSTMs (Long Short-Term Memory networks) improve on this basic model.

Q & A

What is the main focus of this session?

-The session focuses on explaining the concept and application of Recurrent Neural Networks (RNN), particularly how they differ from other deep learning algorithms like Convolutional Neural Networks (CNN), and their ability to handle sequential data, such as time series or text.

What is the key difference between CNN and RNN?

-The key difference is that CNN is typically used for processing spatial data, like images, while RNN is designed to handle sequential data, where the order of data matters, such as in time series or text.

What kind of data can RNNs handle, and why is this important?

-RNNs can handle sequential data, such as time series (e.g., stock prices, weather data) or text. This is important because sequential data has dependencies between elements, and RNNs are designed to capture these dependencies over time, unlike other neural networks that might treat data independently.
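To illustrate why order matters, here is a minimal sketch (not from the session) of a vanilla RNN with a one-dimensional hidden state and hand-picked weights `W_XH`, `W_HH`, `B_H` (illustrative assumptions, not trained values). It compares the RNN against an order-blind baseline that simply sums its inputs: both sequences contain the same values, but only the RNN's final hidden state depends on where the signal appears.

```python
import math

# Toy vanilla RNN with a 1-dimensional hidden state.
# The weights are hand-picked for illustration (assumptions, not trained values).
W_XH = 1.0   # input -> hidden weight
W_HH = 0.5   # hidden -> hidden (recurrent) weight
B_H = 0.0    # hidden bias

def rnn_final_state(seq):
    """Feed seq through the RNN one element at a time; return the last hidden state."""
    h = 0.0  # the memory starts empty
    for x in seq:
        h = math.tanh(W_XH * x + W_HH * h + B_H)
    return h

def order_blind(seq):
    """A baseline that ignores order entirely: it just sums the inputs."""
    return sum(seq)

early = [1.0, 0.0, 0.0]  # the signal arrives first
late = [0.0, 0.0, 1.0]   # the same signal arrives last

print(order_blind(early), order_blind(late))          # 1.0 1.0
print(rnn_final_state(early), rnn_final_state(late))  # two different values
```

With `late`, the signal arrives at the final step, so the last hidden state is exactly `tanh(1.0)`; with `early`, the signal has been passed through the recurrent weight twice more and has faded.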

What does the term 'memory' or 'hidden state' refer to in RNNs?

-'Memory' or 'hidden state' in RNNs refers to the information that is passed from one time step to the next in the sequence, which helps the network retain context and make predictions based on previous inputs.

How does an RNN process sequential data?

-An RNN processes sequential data by feeding it into the same network one element at a time. At each step, the network combines the current input with the hidden state (memory) from the previous step to produce a new hidden state. Because the same weights are reused at every step, the network can learn dependencies across time steps.
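The step-by-step processing described above can be sketched as an unrolled forward pass. This is a minimal NumPy illustration under assumed sizes (`input_dim=3`, `hidden_dim=5`) and random, untrained weights; the function name `rnn_forward` is hypothetical. The key point is that one set of weights is applied at every step, so the same function works for a 4-step and an 11-step sequence.

```python
import numpy as np

def rnn_forward(xs, W_xh, W_hh, b_h, h0=None):
    """Unrolled vanilla RNN forward pass (hypothetical helper).

    xs: array of shape (T, input_dim); T may differ between calls.
    Returns the hidden state after every step, shape (T, hidden_dim).
    The same W_xh, W_hh, b_h are reused at every time step.
    """
    hidden_dim = W_hh.shape[0]
    h = np.zeros(hidden_dim) if h0 is None else h0
    states = []
    for x_t in xs:  # one element of the sequence at a time
        # new memory = nonlinearity(current input + previous memory)
        h = np.tanh(W_xh @ x_t + W_hh @ h + b_h)
        states.append(h)
    return np.stack(states)

# Assumed sizes and random (untrained) weights, for illustration only.
rng = np.random.default_rng(42)
input_dim, hidden_dim = 3, 5
W_xh = rng.normal(scale=0.1, size=(hidden_dim, input_dim))
W_hh = rng.normal(scale=0.1, size=(hidden_dim, hidden_dim))
b_h = np.zeros(hidden_dim)

short_seq = rng.normal(size=(4, input_dim))   # 4 time steps
long_seq = rng.normal(size=(11, input_dim))   # 11 time steps

print(rnn_forward(short_seq, W_xh, W_hh, b_h).shape)  # (4, 5)
print(rnn_forward(long_seq, W_xh, W_hh, b_h).shape)   # (11, 5)
```

The number of parameters does not depend on the sequence length; only the number of loop iterations does, which is how RNNs accommodate sequences of different lengths.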

What is the role of 'context' in RNNs?

-The context in RNNs refers to the memory or hidden state that is passed from one step to another. The context helps the network retain important information from previous inputs, allowing it to make more accurate predictions based on the sequence as a whole.

How does RNN handle sequences of different lengths?

-RNNs handle sequences of different lengths by processing one element at a time, applying the same network structure at each time step and updating the hidden state after every element. The number of steps simply matches the length of the sequence, so no fixed input length is required.

What is the Backpropagation Through Time (BPTT) algorithm?

-Backpropagation Through Time (BPTT) is an extension of the standard backpropagation algorithm used to train RNNs. The network is unrolled over the sequence, and the error is propagated backward from the last time step to the first. Because the weights are shared across steps, the gradient contributions from every step are summed before the weights are updated.
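BPTT can be made concrete with a scalar RNN small enough to differentiate by hand. The sketch below is illustrative: the model `h_t = tanh(w_x * x_t + w_h * h_{t-1})`, the loss on the final hidden state only, and all numbers are assumptions chosen for the example. `bptt_grads` walks the unrolled steps from last to first and accumulates each step's contribution to the shared weights; `numeric_grads` checks the result with central finite differences.

```python
import math

def forward(xs, w_x, w_h):
    """Scalar vanilla RNN: h_t = tanh(w_x * x_t + w_h * h_{t-1}), with h_0 = 0."""
    hs = [0.0]
    for x in xs:
        hs.append(math.tanh(w_x * x + w_h * hs[-1]))
    return hs  # hs[t] is h_t, for t = 0..T

def loss(xs, y, w_x, w_h):
    """Squared error on the final hidden state only (an illustrative choice)."""
    return 0.5 * (forward(xs, w_x, w_h)[-1] - y) ** 2

def bptt_grads(xs, y, w_x, w_h):
    """Backpropagation through time over the unrolled sequence."""
    hs = forward(xs, w_x, w_h)
    grad_wx = grad_wh = 0.0
    dh = hs[-1] - y                    # dL/dh_T
    for t in range(len(xs), 0, -1):    # t = T, T-1, ..., 1
        da = dh * (1.0 - hs[t] ** 2)   # back through tanh: dL/da_t
        grad_wx += da * xs[t - 1]      # w_x is shared by every step, so accumulate
        grad_wh += da * hs[t - 1]      # w_h is shared by every step, so accumulate
        dh = da * w_h                  # pass the error back to h_{t-1}
    return grad_wx, grad_wh

def numeric_grads(xs, y, w_x, w_h, eps=1e-6):
    """Central finite differences, used only to check the BPTT result."""
    gx = (loss(xs, y, w_x + eps, w_h) - loss(xs, y, w_x - eps, w_h)) / (2 * eps)
    gh = (loss(xs, y, w_x, w_h + eps) - loss(xs, y, w_x, w_h - eps)) / (2 * eps)
    return gx, gh

xs, y = [0.5, -1.0, 0.8, 0.3], 0.2
w_x, w_h = 0.7, 0.9
print(bptt_grads(xs, y, w_x, w_h))
print(numeric_grads(xs, y, w_x, w_h))  # should agree to several decimal places
```

Real frameworks compute the same thing with automatic differentiation; the hand-written loop just makes the "accumulate over time steps" part visible.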

What is a 'vanilla RNN'?

-A 'vanilla RNN' refers to the most basic form of a recurrent neural network: the same network structure is used at every time step, and each step computes a linear combination of the previous hidden state (memory) and the current input, then passes it through a nonlinear activation such as tanh.
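For reference, the vanilla RNN is commonly written as below. The notation is a standard textbook convention rather than the session's own: $x_t$ is the input at step $t$, $h_t$ the hidden state, $y_t$ the output, $W_{xh}$, $W_{hh}$, $W_{hy}$ the weight matrices shared across all steps, $b_h$, $b_y$ the biases, and $h_0$ is usually initialized to zeros. The hidden-state update is a linear combination followed by a nonlinearity; the output layer shown here is linear and may be followed by a softmax for classification tasks.

$$
\begin{aligned}
h_t &= \tanh\left(W_{xh}\,x_t + W_{hh}\,h_{t-1} + b_h\right)\\
y_t &= W_{hy}\,h_t + b_y
\end{aligned}
$$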

What are some applications of RNNs in real-world scenarios?

-RNNs are widely used in applications involving sequential data, such as natural language processing (e.g., text prediction or sentiment analysis), speech recognition, time series forecasting, and even video analysis, where the temporal order of the data is crucial.
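As a sketch of the text-prediction use case, the example below runs a character-level vanilla RNN over a prefix and returns a probability distribution over the next character. Everything here is an assumption for illustration: the toy vocabulary built from `"hello"`, the hidden size of 8, and the random untrained weights (so the probabilities are close to uniform and carry no meaning). Training would adjust the weights, for example with BPTT, so that the correct next character receives high probability.

```python
import numpy as np

# Hypothetical character vocabulary built from a toy text.
text = "hello"
vocab = sorted(set(text))  # ['e', 'h', 'l', 'o']
char_to_idx = {c: i for i, c in enumerate(vocab)}

def one_hot(c):
    """Encode a character as a one-hot vector over the vocabulary."""
    v = np.zeros(len(vocab))
    v[char_to_idx[c]] = 1.0
    return v

def softmax(z):
    e = np.exp(z - z.max())  # shift for numerical stability
    return e / e.sum()

rng = np.random.default_rng(0)
V, H = len(vocab), 8  # vocabulary size, hidden size (assumed)
W_xh = rng.normal(scale=0.1, size=(H, V))
W_hh = rng.normal(scale=0.1, size=(H, H))
W_hy = rng.normal(scale=0.1, size=(V, H))
b_h, b_y = np.zeros(H), np.zeros(V)

def next_char_probs(prefix):
    """Read the prefix one character at a time, then score every possible next character."""
    h = np.zeros(H)
    for c in prefix:
        h = np.tanh(W_xh @ one_hot(c) + W_hh @ h + b_h)
    return softmax(W_hy @ h + b_y)

probs = next_char_probs("hell")
for c, p in zip(vocab, probs):
    print(c, round(float(p), 3))
```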
