Kafka Tutorial - Schema Evolution Part 2

Summary

TLDR: This Kafka tutorial walks through installing and configuring the open-source Confluent Platform and then demonstrates schema evolution in action. It sets up producers and consumers that use Avro schemas, modifies a schema while keeping it compatible with existing clients, and updates the code to handle the schema change. The tutorial shows how old and new versions of schemas, producers, and consumers can coexist in the same Kafka system. It stresses the importance of understanding the schema compatibility rules and gives practical examples for evolving schemas safely in real-world applications.

Takeaways

- 😀 Learn how to install and configure the open-source version of the Confluent Platform for Apache Kafka.

- 😀 Understand the concept of schema evolution in Apache Kafka and why it’s important for maintaining compatibility between different versions of data.

- 😀 Follow a step-by-step guide to set up the ZooKeeper, Kafka, and Schema Registry services on your machine.

- 😀 Get hands-on experience creating a producer and consumer that use Avro schemas for serializing and deserializing messages.

- 😀 Discover how to modify an existing Avro schema to create a new version that stays compatible with old producers and consumers.

- 😀 Understand the rules and best practices for evolving Avro schemas, ensuring compatibility between different schema versions.

- 😀 Learn how to create and compile a new producer that sends messages based on an evolved schema.

- 😀 Explore how to test and verify that both old and new producers and consumers can work together without breaking the system.

- 😀 Understand the importance of ensuring that an old consumer can still read messages from a new producer with an evolved schema.

- 😀 Discover how to implement a new consumer that can handle both old and new schema versions, reading messages without errors or exceptions.

- 😀 Gain insight into using Avro and Schema Registry to build a flexible and scalable streaming system where multiple schema versions coexist and function together.

Q & A

What is the primary goal of this tutorial?

-The primary goal of this tutorial is to demonstrate how schema evolution works in Kafka using Avro and the Schema Registry. It shows how old and new versions of schemas can coexist, allowing producers and consumers to work with different schema versions in the same system.

What is schema evolution, and why is it important in Kafka?

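-Schema evolution is the process of changing a data schema's structure over time. It matters in Kafka because messages written with one schema version are often read by applications built against another. Producers and consumers keep working as long as each change preserves backward compatibility (consumers on the new schema can read data written with the old one), forward compatibility (consumers on the old schema can read data written with the new one), or both.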
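
To make the two directions concrete, here is a minimal Python sketch of Avro-style schema resolution for record fields. It is a simplification that assumes records are already decoded into dicts; real Avro resolves while decoding binary data and also handles type promotion and unions. The schema and field names (`Click`, `session_id`, `channel`) are hypothetical. Fields the reader knows but the writer never wrote take the reader's default, and fields the reader does not know are dropped.

```python
# Simplified model of Avro schema resolution for record fields.
# Assumption: records are decoded dicts; real Avro resolves during decoding.

_MISSING = object()  # sentinel, so a default of None (JSON null) still counts


def resolve(record: dict, writer_schema: dict, reader_schema: dict) -> dict:
    """Project a record written with writer_schema onto reader_schema."""
    writer_fields = {f["name"] for f in writer_schema["fields"]}
    result = {}
    for field in reader_schema["fields"]:
        name = field["name"]
        if name in writer_fields:
            # Field exists in both schemas: keep the written value.
            result[name] = record[name]
        elif field.get("default", _MISSING) is not _MISSING:
            # Reader expects a field the writer never wrote: use its default.
            result[name] = field["default"]
        else:
            raise ValueError(f"no value or default for field {name!r}")
    # Fields written but unknown to the reader are silently dropped.
    return result


# Hypothetical schema versions for illustration.
V1 = {"type": "record", "name": "Click", "fields": [
    {"name": "session_id", "type": "string"},
    {"name": "referrer", "type": ["null", "string"], "default": None},
]}
V2 = {"type": "record", "name": "Click", "fields": [
    {"name": "session_id", "type": "string"},
    {"name": "channel", "type": "string", "default": "unknown"},
]}

old_msg = {"session_id": "s1", "referrer": "example.com"}
new_msg = {"session_id": "s2", "channel": "email"}

# Backward: a new reader (V2) reads old data (V1).
print(resolve(old_msg, V1, V2))  # {'session_id': 's1', 'channel': 'unknown'}
# Forward: an old reader (V1) reads new data (V2).
print(resolve(new_msg, V2, V1))  # {'session_id': 's2', 'referrer': None}
```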

How does the Confluent Platform relate to Apache Kafka?

-The Confluent Platform is a distribution of Apache Kafka that bundles additional tools and services, such as the Schema Registry, Kafka Connect, and KSQL, which extend Kafka's functionality and make it easier to use as a streaming platform.

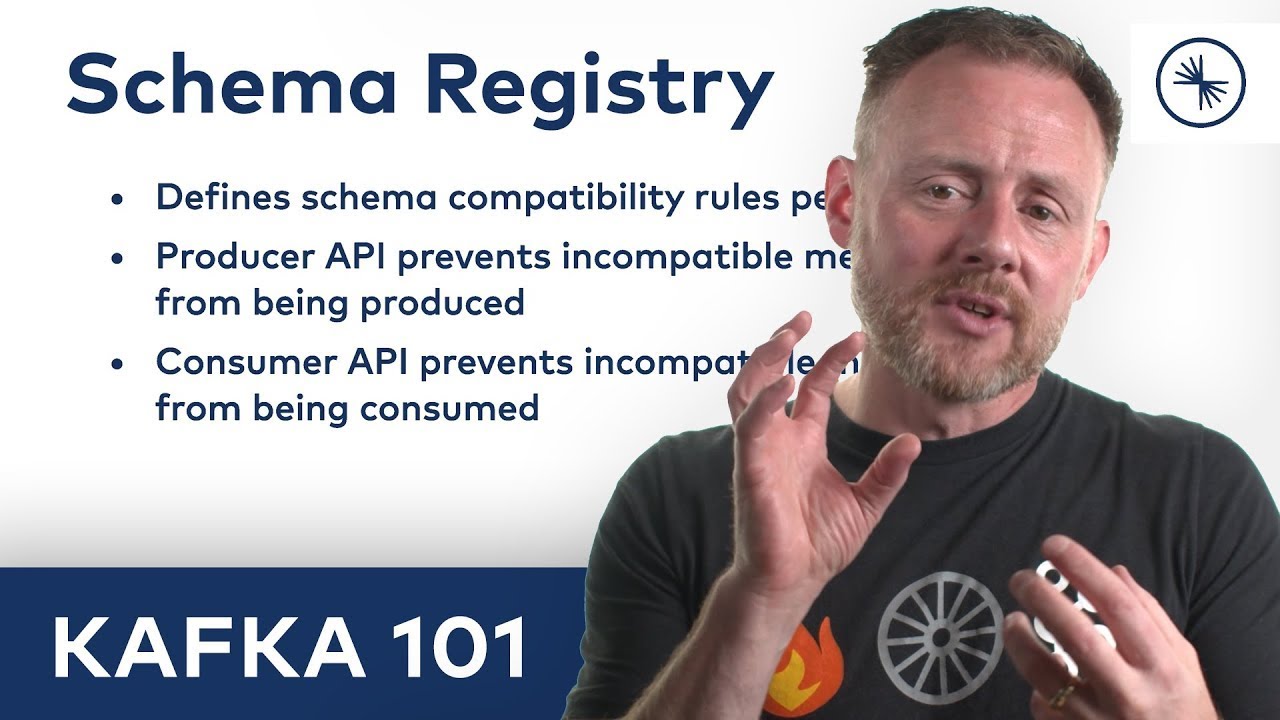

What role does the Schema Registry play in Kafka?

-The Schema Registry stores the Avro schemas used with Kafka as numbered versions under a subject and assigns each schema a unique ID. When a new version is registered, the registry checks it against the configured compatibility rules and rejects it if they are violated, so producers and consumers keep exchanging messages with compatible schemas while the schema evolves.
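
Here is a sketch of how a client talks to the Schema Registry over its REST API, in Python with only the standard library. It builds, but does not send, two requests: one that registers a new version under a subject, and one that tests a schema against the latest registered version. The local URL, the subject name `clicks-value` (topic `clicks` under the default topic-name strategy), and the sample schema are assumptions for illustration.

```python
import json
import urllib.request

# Assumption: a local Schema Registry on its default port.
REGISTRY_URL = "http://localhost:8081"
CONTENT_TYPE = "application/vnd.schemaregistry.v1+json"


def _schema_request(path: str, schema: dict) -> urllib.request.Request:
    # The registry expects the Avro schema as a JSON *string* inside the body.
    body = json.dumps({"schema": json.dumps(schema)}).encode("utf-8")
    return urllib.request.Request(
        REGISTRY_URL + path,
        data=body,
        headers={"Content-Type": CONTENT_TYPE},
        method="POST",
    )


def register_request(subject: str, schema: dict) -> urllib.request.Request:
    """Register a new version; the response body looks like {"id": <schema id>}."""
    return _schema_request(f"/subjects/{subject}/versions", schema)


def compatibility_request(subject: str, schema: dict) -> urllib.request.Request:
    """Test a candidate; the response body contains {"is_compatible": true|false}."""
    return _schema_request(f"/compatibility/subjects/{subject}/versions/latest", schema)


# Hypothetical subject and schema for illustration.
schema = {"type": "record", "name": "Click",
          "fields": [{"name": "session_id", "type": "string"}]}
req = compatibility_request("clicks-value", schema)
print(req.get_method(), req.full_url)

# With a registry running, the request could be sent like this:
# with urllib.request.urlopen(req) as resp:
#     print(json.load(resp))
```

Checking compatibility before registering lets a build pipeline reject an incompatible schema change before any producer starts using it.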

What are the necessary steps to set up the Confluent Platform in this tutorial?

-The setup consists of installing the Confluent public key, creating a repository file, and installing the Confluent Platform with the `yum` package manager. After installation, the ZooKeeper server, the Kafka broker, and the Schema Registry must be started in that order, because each service depends on the one before it.
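
After the three services are started, a TCP connection check is a quick way to confirm they are listening. This sketch assumes everything runs on `localhost` with the default ports (2181 for ZooKeeper, 9092 for Kafka, 8081 for Schema Registry). It only shows that something accepts connections on each port. It does not show that the service is healthy.

```python
import socket

# Assumption: all services run locally on their default ports.
SERVICES = {
    "ZooKeeper": 2181,
    "Kafka broker": 9092,
    "Schema Registry": 8081,
}


def is_listening(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    # Report in start order: ZooKeeper, then Kafka, then Schema Registry.
    for name, port in SERVICES.items():
        state = "up" if is_listening("localhost", port) else "DOWN"
        print(f"{name:16} port {port}: {state}")
```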

What is the significance of Avro in this tutorial?

-Avro is the serialization format for messages in this tutorial. It encodes data in a compact binary form, and its integration with the Schema Registry lets the schema evolve over time while old and new versions remain compatible.
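
The Python sketch below shows why Avro output is compact and why a reader always needs the writer's schema. It implements Avro's binary encoding for a few primitive types, following the Avro specification. `int` and `long` values are zig-zag encoded and written as variable-length base-128 integers. A `string` is its byte length followed by the UTF-8 bytes. A record is simply its fields' encodings concatenated in schema order, with no field names. Only `string`, `int`, and `long` fields are supported, and the `Click` schema is hypothetical.

```python
def encode_long(n: int) -> bytes:
    """Avro int/long: zig-zag encode, then emit 7 bits per byte, low bits first."""
    z = 2 * n if n >= 0 else -2 * n - 1  # zig-zag: 0,-1,1,-2,... -> 0,1,2,3,...
    out = bytearray()
    while z >= 0x80:
        out.append((z & 0x7F) | 0x80)  # high bit set: more bytes follow
        z >>= 7
    out.append(z)
    return bytes(out)


def encode_string(s: str) -> bytes:
    """Avro string: byte length (as a long) followed by the UTF-8 bytes."""
    data = s.encode("utf-8")
    return encode_long(len(data)) + data


def encode_record(schema: dict, record: dict) -> bytes:
    """Avro record: field encodings concatenated in schema order, no names."""
    encoders = {"string": encode_string, "int": encode_long, "long": encode_long}
    return b"".join(encoders[f["type"]](record[f["name"]]) for f in schema["fields"])


# Hypothetical schema for illustration.
CLICK = {"type": "record", "name": "Click", "fields": [
    {"name": "session_id", "type": "string"},
    {"name": "page_views", "type": "int"},
]}
print(encode_record(CLICK, {"session_id": "s1", "page_views": 3}))  # b'\x04s1\x06'
```

The four output bytes hold only values. A reader that did not know the writer's field order and types could not decode them, which is why the writer's schema must stay available to consumers.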

What changes are made to the schema in this tutorial, and why?

-In the tutorial, the schema is modified by removing the 'referrer' field and adding three new attributes. These changes simulate schema evolution and demonstrate how a system can handle changes without breaking the ability to read and write messages in both old and new formats.
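
The summary does not name the three new attributes, so the sketch below uses placeholder names (`new_field_1` to `new_field_3`). Every field other than `referrer` is hypothetical, and so is the record name `ClickRecord`. The sketch writes the two schema versions as Avro JSON, expressed as Python dicts, and adds a small helper that lists removed and added fields. Readers on either version can resolve data written with the other because the removed field and the new fields all carry defaults.

```python
import json

# Hypothetical version 1: only "referrer" is named in the tutorial summary;
# the other field names are placeholders.
CLICK_V1 = {
    "type": "record",
    "name": "ClickRecord",
    "fields": [
        {"name": "session_id", "type": "string"},
        {"name": "channel", "type": "string"},
        {"name": "referrer", "type": ["null", "string"], "default": None},
    ],
}

# Hypothetical version 2: "referrer" removed and three placeholder fields
# added, each with a default so readers on either version can resolve.
CLICK_V2 = {
    "type": "record",
    "name": "ClickRecord",
    "fields": [
        {"name": "session_id", "type": "string"},
        {"name": "channel", "type": "string"},
        {"name": "new_field_1", "type": ["null", "string"], "default": None},
        {"name": "new_field_2", "type": ["null", "string"], "default": None},
        {"name": "new_field_3", "type": ["null", "string"], "default": None},
    ],
}


def field_diff(old: dict, new: dict) -> tuple:
    """Return (removed, added) field names between two record schemas."""
    old_names = [f["name"] for f in old["fields"]]
    new_names = [f["name"] for f in new["fields"]]
    removed = [n for n in old_names if n not in new_names]
    added = [n for n in new_names if n not in old_names]
    return removed, added


print(json.dumps(CLICK_V2, indent=2))  # the schema as Avro JSON text
print(field_diff(CLICK_V1, CLICK_V2))
# (['referrer'], ['new_field_1', 'new_field_2', 'new_field_3'])
```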

How do producers and consumers work with the evolved schema?

-Each side is adapted separately. A new producer sends messages using the updated schema, while the old consumer, still built against the previous schema, continues to read them. The tutorial also builds a new consumer that can read messages in both the old and the new format.

What are the compatibility guidelines for schema evolution in Kafka?

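-Schema evolution follows the schema resolution rules in the Avro specification, and the Schema Registry enforces a configurable compatibility level (BACKWARD by default, with FORWARD and FULL among the alternatives) whenever a new schema version is registered. Together these rules keep schema changes from breaking existing producers and consumers. In this tutorial, for example, the schema is modified in a way that preserves compatibility, so the old consumer can still read messages sent by the new producer.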
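
The core rule for record fields fits in a simplified checker, sketched in Python below. It models the Schema Registry's `BACKWARD` (new consumers read old data), `FORWARD` (old consumers read new data), and `FULL` (both) levels by looking only at field presence and defaults. It ignores type changes and the `_TRANSITIVE` variants, which check against all earlier versions instead of only the latest. The schemas are hypothetical.

```python
_NO_DEFAULT = object()  # sentinel, so a default of None still counts


def _fields(schema: dict) -> dict:
    return {f["name"]: f for f in schema["fields"]}


def can_read(reader: dict, writer: dict) -> bool:
    """Simplified: every reader field must be written or have a default."""
    written = _fields(writer)
    return all(
        name in written or f.get("default", _NO_DEFAULT) is not _NO_DEFAULT
        for name, f in _fields(reader).items()
    )


def is_compatible(old: dict, new: dict, level: str = "BACKWARD") -> bool:
    if level == "BACKWARD":  # consumers on the new schema read old data
        return can_read(reader=new, writer=old)
    if level == "FORWARD":   # consumers on the old schema read new data
        return can_read(reader=old, writer=new)
    if level == "FULL":      # both directions
        return can_read(reader=new, writer=old) and can_read(reader=old, writer=new)
    raise ValueError(f"unsupported level {level!r}")


# Hypothetical schema versions.
OLD = {"fields": [{"name": "session_id", "type": "string"},
                  {"name": "referrer", "type": ["null", "string"], "default": None}]}
NEW_BAD = {"fields": [{"name": "session_id", "type": "string"},
                      {"name": "channel", "type": "string"}]}
NEW_OK = {"fields": [{"name": "session_id", "type": "string"},
                     {"name": "channel", "type": "string", "default": "unknown"}]}

print(is_compatible(OLD, NEW_BAD, "BACKWARD"))  # False: "channel" has no default
print(is_compatible(OLD, NEW_BAD, "FORWARD"))   # True: old readers default "referrer"
print(is_compatible(OLD, NEW_OK, "FULL"))       # True
```

Giving every added or removed field a default is the simple habit that keeps a change compatible in both directions.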

Can both old and new versions of schemas coexist in the same Kafka system?

-Yes. The Schema Registry stores every version of a schema under its subject and gives each version its own ID, which is embedded in every message. Consumers use that ID to fetch the exact schema a message was written with, so producers and consumers on different schema versions can interoperate, provided each new version passed the registry's compatibility check.
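
Coexistence works because each message records which schema wrote it. Confluent's Avro serializer prefixes every payload with a magic byte (0) and the 4-byte big-endian schema ID assigned by the registry. A consumer reads the ID, fetches that schema, and resolves it against its own. The Python sketch below frames and unframes messages in that layout. The schema IDs and payload bytes are placeholders, not real Avro data.

```python
import struct

MAGIC_BYTE = 0
HEADER = struct.Struct(">bI")  # 1-byte magic + 4-byte big-endian schema ID


def frame(schema_id: int, avro_payload: bytes) -> bytes:
    """Producer side: prepend the wire-format header to an Avro payload."""
    return HEADER.pack(MAGIC_BYTE, schema_id) + avro_payload


def unframe(message: bytes) -> tuple:
    """Consumer side: split a message into (schema_id, avro_payload)."""
    if len(message) < HEADER.size:
        raise ValueError("message shorter than the 5-byte header")
    magic, schema_id = HEADER.unpack_from(message)
    if magic != MAGIC_BYTE:
        raise ValueError(f"unexpected magic byte {magic}")
    return schema_id, message[HEADER.size:]


# Placeholder IDs: 1 from an old producer, 2 from a new one, on the same topic.
for msg in (frame(1, b"old"), frame(2, b"new")):
    schema_id, payload = unframe(msg)
    # A real consumer would fetch schema `schema_id` and resolve it here.
    print(schema_id, payload)
```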
