The OpenAI (Python) API | Introduction & Example Code

Summary

TLDR: In this tutorial, the basics and advanced features of OpenAI’s Python API are explored, offering a beginner-friendly guide for creating chatbots and working with language models. The video explains APIs in simple terms, walks through setting up an OpenAI account, and demonstrates how to make API calls to generate responses using GPT-3.5. Advanced topics include customizing system messages, adjusting parameters like temperature and token limits, and using the API for creative tasks like lyric completion. The video concludes by introducing Hugging Face’s Transformers library as an open-source alternative to avoid API costs.

Takeaways

- 😀 APIs are interfaces that allow different software applications to communicate with each other. In the context of OpenAI’s Python API, it lets you interact programmatically with OpenAI's language models.

- 😀 OpenAI’s Python API provides more flexibility compared to using the web interface. You can customize the chatbot’s behavior, manage the number of responses, adjust randomness, and process multiple input types.

- 😀 The cost of using OpenAI’s API is based on tokens, which are chunks of text (whole words or pieces of words). Both the input and output tokens count toward the total cost.

- 😀 Setting up an OpenAI account involves creating an account, adding a payment method, setting usage limits, and obtaining a secret API key for authentication.

- 😀 The temperature parameter controls the randomness of responses. A temperature of 0 makes responses deterministic, while higher values (up to 2) introduce more randomness.

- 😀 You can adjust input parameters like the maximum response length (max tokens) and the number of responses (n) to better suit your needs when making API calls.

- 😀 The OpenAI API supports multiple models, some of which can be fine-tuned for specific tasks, and it also covers tasks beyond text generation, such as transcription and translation.

- 😀 Example code demonstrates how to make an API call to OpenAI’s language models, including how to handle responses, extract the relevant content, and iterate for more advanced use cases like chatbot development.

- 😀 A system message in the OpenAI API can set the context or behavior of your chatbot, like specifying that it is a lyric completion assistant or defining the chatbot’s role.

- 😀 By tweaking parameters like 'temperature', you can trade structured output for more creative output. For instance, the assistant can continue song lyrics predictably at a low temperature, while higher temperatures make its continuations more varied and less predictable.

- 😀 If cost is a concern, OpenAI's API might not scale well for large applications. Open-source alternatives like Hugging Face’s Transformers library offer free solutions to download and run pre-trained models locally, saving costs on API calls.
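As a rough illustration of the last takeaway, here is a minimal sketch of running a pre-trained model locally with Hugging Face's Transformers library. It assumes the `transformers` package and a backend such as PyTorch are installed. The model name `gpt2` is only an illustrative choice, and the helper names `load_local_generator` and `generate_locally` are hypothetical, not taken from the video.

```python
from typing import Callable


def load_local_generator(model_name: str = "gpt2"):
    """Build a local text-generation pipeline.

    Assumption: `transformers` and a backend (e.g. PyTorch) are installed;
    "gpt2" is only an illustrative small model.
    """
    # Imported lazily because it is a heavy, optional dependency.
    from transformers import pipeline

    return pipeline("text-generation", model=model_name)


def generate_locally(generator: Callable, prompt: str, max_new_tokens: int = 40) -> str:
    """Run one prompt through a text-generation pipeline and return the text."""
    outputs = generator(prompt, max_new_tokens=max_new_tokens)
    # A text-generation pipeline returns a list of dicts keyed by "generated_text".
    return outputs[0]["generated_text"]
```

A call might look like `generate_locally(load_local_generator(), "Once upon a time")`. The first run downloads the model weights, after which generation happens on your own machine with no per-call API charge.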

Q & A

What is an API, and why is it important for working with OpenAI's models?

-An API (Application Programming Interface) is a set of tools and protocols that allows different software programs to communicate with each other. In the case of OpenAI, the API enables users to send requests to the model (like GPT-3.5) and receive responses. This communication allows for various applications, such as building chatbots, generating text, and more.

How do you set up the OpenAI Python API?

-To set up the OpenAI Python API, you need to create an account on OpenAI, navigate to the API section, and obtain an API key. Then, install the OpenAI Python client library using the command `pip install openai` in your terminal. Finally, you need to set the API key in your Python script using `openai.api_key = 'your-api-key'`.
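The setup step can be sketched as follows. Rather than pasting the key into the script, this version reads it from the `OPENAI_API_KEY` environment variable, which is an assumption about how you store the key. The helper names `load_api_key` and `configure_openai` are hypothetical.

```python
import os
from typing import Mapping, Optional


def load_api_key(env: Optional[Mapping[str, str]] = None) -> str:
    """Return the API key from the environment, failing loudly if it is missing."""
    env = os.environ if env is None else env
    key = env.get("OPENAI_API_KEY", "").strip()
    if not key:
        raise RuntimeError("Set the OPENAI_API_KEY environment variable first.")
    return key


def configure_openai(env: Optional[Mapping[str, str]] = None):
    """Set the key on the `openai` module, as described in the answer above."""
    # Imported lazily so this file still loads if the package is not installed.
    import openai

    openai.api_key = load_api_key(env)
    return openai
```

Keeping the key out of the source file makes it harder to leak the secret by accident, for example when sharing a notebook or committing code.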

What are some key parameters you can adjust when making a request to OpenAI's API?

-Key parameters include 'temperature', which controls the randomness of responses; 'max tokens', which sets the maximum length of the generated response; and 'n', which specifies the number of response variations to generate. These parameters help fine-tune the behavior and output of the model.
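To make these parameters concrete, the sketch below assembles the keyword arguments for a chat request. The model name `gpt-3.5-turbo` is assumed from the tutorial's mention of GPT-3.5, and `build_chat_request` is a hypothetical helper, not part of the OpenAI library. It only builds a dictionary and does not contact the API.

```python
def build_chat_request(prompt, model="gpt-3.5-turbo", temperature=1.0,
                       max_tokens=100, n=1):
    """Assemble keyword arguments for a chat completion request."""
    if not 0 <= temperature <= 2:
        raise ValueError("temperature must be between 0 and 2")
    if max_tokens < 1 or n < 1:
        raise ValueError("max_tokens and n must be positive")
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,  # randomness of the output
        "max_tokens": max_tokens,    # upper bound on the response length
        "n": n,                      # number of alternative responses
    }
```

Depending on the library version you have installed, the resulting dictionary would be unpacked into the chat completion call, for example `client.chat.completions.create(**request)` in recent releases of the `openai` package.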

What does the 'temperature' parameter do, and how does it affect the response?

-'Temperature' controls the level of randomness in the model's responses. A low temperature (close to 0) makes the output more deterministic and focused, while a higher temperature (the API accepts values up to 2) makes the responses more creative and varied. Adjusting this parameter allows you to control the style of the output.
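The effect can be illustrated with the standard textbook formulation, in which the scores (logits) for candidate tokens are divided by the temperature before a softmax turns them into probabilities. The video does not describe OpenAI's internals, so treat this as a conceptual sketch with made-up logits rather than the service's actual implementation.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature < 0:
        raise ValueError("temperature must be non-negative")
    if temperature == 0:
        # Limiting case: all probability goes to the highest-scoring token.
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]
```

With the same logits, a lower temperature concentrates probability on the top candidate, while a higher temperature spreads it more evenly across the alternatives, which is why high settings produce more surprising text.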

What is an example of using the OpenAI API to create a chatbot?

-An example would be creating a lyric-completion chatbot. You could send a message like 'I know there's something in the wake of your smile', and the chatbot, based on the system message, would complete it with 'I get a notion from the look in your eyes'. This setup uses a system message to define the chatbot’s role, and the assistant provides contextually accurate responses based on that.
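A minimal sketch of such a lyric-completion call is shown below. The wording of the system message is hypothetical, and the `client` argument is assumed to expose `chat.completions.create` in the style of recent versions of the `openai` package. Older versions of the library use a different call, so adapt it to whatever you have installed.

```python
# Hypothetical system message; the exact wording used in the video is not given here.
SYSTEM_MESSAGE = (
    "You are a lyric completion assistant. "
    "Given a line from a song, reply with the next line."
)


def build_lyric_messages(first_line):
    """Pair the role-defining system message with the user's lyric line."""
    return [
        {"role": "system", "content": SYSTEM_MESSAGE},
        {"role": "user", "content": first_line},
    ]


def complete_lyric(client, first_line, model="gpt-3.5-turbo"):
    """Ask the model for the next line and return its text."""
    response = client.chat.completions.create(
        model=model,
        messages=build_lyric_messages(first_line),
        temperature=0,   # keep the continuation as predictable as possible
        max_tokens=30,   # one lyric line does not need a long response
    )
    return response.choices[0].message.content
```

Passing the client in as an argument keeps the function easy to try with a stand-in object before spending any API credit.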

How can you use the OpenAI API to generate text based on a series of prompts?

-To generate text based on prompts, you can create a list of 'messages' that includes both the user's inputs and the assistant's previous responses. These messages are sent in an API call, which then generates a new response based on the entire conversation history. This allows the model to maintain context over the course of the interaction.
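The idea of resending the whole conversation can be sketched with a small helper class. The `Conversation` name is hypothetical, and the `client` is again assumed to follow the `chat.completions.create` interface of recent `openai` releases.

```python
class Conversation:
    """Keep the running message history and resend it on every turn."""

    def __init__(self, system_message):
        self.messages = [{"role": "system", "content": system_message}]

    def ask(self, client, user_text, model="gpt-3.5-turbo"):
        # Record the user's turn, then send the full history so far.
        self.messages.append({"role": "user", "content": user_text})
        response = client.chat.completions.create(
            model=model,
            messages=list(self.messages),  # copy, so the sent list is not mutated later
        )
        reply = response.choices[0].message.content
        # Store the assistant's reply so the next turn has the full context.
        self.messages.append({"role": "assistant", "content": reply})
        return reply
```

Because the entire history is sent each time, the input token count grows with every turn, which is worth keeping in mind given that input tokens are billed too.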

What is the purpose of setting 'n' in the OpenAI API request?

-The 'n' parameter defines how many responses the model should generate for a single prompt. By setting this to a higher number, you can get multiple variations of a response, which can be useful when you want to explore different outputs or pick the best one for a particular use case.
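When `n` is greater than 1, the response carries one entry per variation. The sketch below collects them into a list. It assumes a client with the `chat.completions.create` interface of recent `openai` releases, and `request_variations` is a hypothetical helper name.

```python
def request_variations(client, prompt, n=3, model="gpt-3.5-turbo", temperature=1.0):
    """Request n alternative responses to one prompt and return their texts."""
    response = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": prompt}],
        n=n,
        temperature=temperature,  # above 0, so the variations can actually differ
    )
    # Each variation arrives as a separate entry in response.choices.
    return [choice.message.content for choice in response.choices]
```

Note that every variation consumes output tokens, so asking for more responses raises the cost of the call accordingly.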

What happens when you set the temperature to 0?

-When you set the temperature to 0, the model's responses become highly predictable and deterministic, producing the most likely completion for a given prompt. This results in responses that are more consistent and less creative, which can be useful when you want factual or concise answers.

Can the OpenAI API generate creative or nonsensical outputs?

-Yes, depending on the settings (e.g., higher temperature values), the OpenAI API can generate more creative or nonsensical outputs. For instance, in the demo, when the temperature was increased, the model produced abstract and somewhat nonsensical responses, demonstrating the model's flexibility to generate both coherent and random text.

What are some potential drawbacks of using OpenAI's API for large-scale projects?

-One major drawback is the cost of API usage, especially if you are making many requests in a large-scale project. Each API call incurs a cost, which can quickly add up if you have thousands or millions of users. Additionally, scaling to meet high demand may require significant infrastructure planning to handle the load effectively.
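A back-of-the-envelope estimate shows how quickly token-based billing adds up. The prices used in the usage note are placeholders, not OpenAI's actual rates, so substitute the current figures from the pricing page. Both function names are hypothetical.

```python
def estimate_cost(prompt_tokens, completion_tokens,
                  input_price_per_1k, output_price_per_1k):
    """Cost of one call: input and output tokens are billed separately."""
    return (prompt_tokens / 1000 * input_price_per_1k
            + completion_tokens / 1000 * output_price_per_1k)


def estimate_monthly_cost(requests_per_day, prompt_tokens, completion_tokens,
                          input_price_per_1k, output_price_per_1k, days=30):
    """Scale the per-call cost up to a month of steady traffic."""
    per_call = estimate_cost(prompt_tokens, completion_tokens,
                             input_price_per_1k, output_price_per_1k)
    return per_call * requests_per_day * days
```

For example, with placeholder prices of 0.001 and 0.002 per 1,000 input and output tokens, `estimate_monthly_cost(1000, 1000, 500, 0.001, 0.002)` models 1,000 requests a day that each use 1,000 input tokens and 500 output tokens.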

Outlines

このセクションは有料ユーザー限定です。 アクセスするには、アップグレードをお願いします。

今すぐアップグレードMindmap

このセクションは有料ユーザー限定です。 アクセスするには、アップグレードをお願いします。

今すぐアップグレードKeywords

このセクションは有料ユーザー限定です。 アクセスするには、アップグレードをお願いします。

今すぐアップグレードHighlights

このセクションは有料ユーザー限定です。 アクセスするには、アップグレードをお願いします。

今すぐアップグレードTranscripts

このセクションは有料ユーザー限定です。 アクセスするには、アップグレードをお願いします。

今すぐアップグレード関連動画をさらに表示

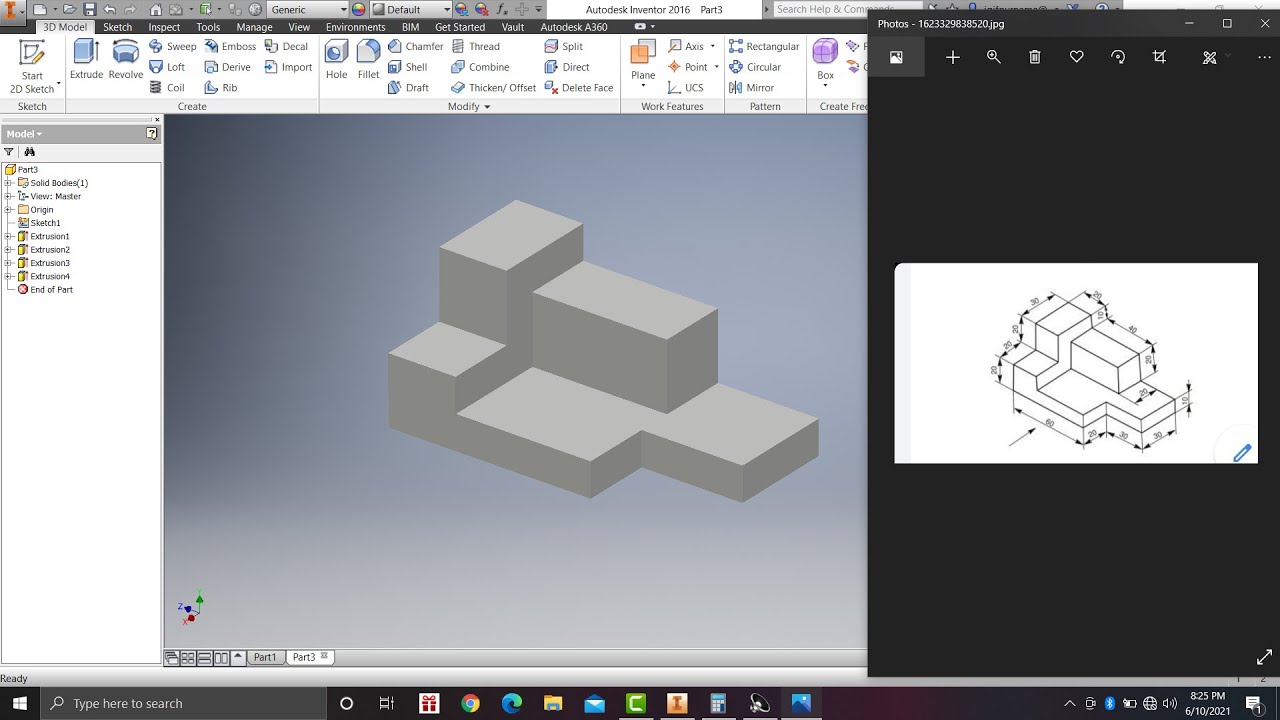

Learn autodesk inventor 3dimensional # 13 mechanical engineering

Konsep Membuat Website Dari 0 Untuk Pemula

LEGO MINDSTORMS Robot Inventor Guide – How to program in Python

#0. Overview | Imaginary E-Commerce Application | Django | In Hindi 🔥😳

Microsoft Access Tutorial - Beginners Level 1 (Quick Start)

Python - Variables - W3Schools.com

5.0 / 5 (0 votes)