Azure EmbodiedAI Sample Lip Sync Visemes

Summary

TL;DR: In this video, Alex demonstrates how to integrate lip sync visemes into an Embodied AI Unity application using the Azure Speech SDK. The tutorial covers modifying models in Maya to create blend shapes for different mouth movements, mapping those blend shapes to visemes, and driving them in Unity from a sample manager class. The process involves capturing viseme events, timing their playback, and smoothly transitioning between blend shapes for a realistic lip-sync effect, a practical approach to animating character speech in real time.

Takeaways

- 📘 The video is a tutorial on implementing lip sync visemes in a Unity application using the Azure Speech SDK.

- 🎨 The project's GitHub repository was updated to support lip sync visemes, including design files: an initial Blender file and a Maya file, each with its associated FBX.

- 🔍 The tutorial uses Maya's Shape Editor to create blend shapes for different mouth variations; each variation corresponds to a viseme, and each viseme covers one or more phonemes.

- 📐 When creating targets in the Shape Editor, individual vertex positions must be adjusted; simply scaling the mouth mesh is not enough.

- 🗣️ A 'mouth neutral' target, identical to the original mouth shape, is introduced to map viseme ID 0 from the Azure Speech SDK.

- 🕒 The Azure Speech SDK's SpeechSynthesizer class raises viseme events that provide viseme IDs and audio offsets for precise lip syncing.

- 📊 The video explains the timing precision involved in lip syncing, comparing frame rates with audio offsets to determine the best approach.

- 🔧 The implementation involves setting up variables in the sample manager class, including a visemes dictionary, a list for collected visemes, and transition durations.

- 🔄 A coroutine animates the lip sync visemes, calculating timings and activating and deactivating visemes.

- 👄 The last viseme received corresponds to silence (viseme ID zero), allowing the mouth to return to a neutral shape after the last utterance.

- 🛠️ The video concludes with a demonstration in Unity, showing the blend shapes played in sequence for a given utterance.
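Because the final event is silence (ID 0), the neutral shape is what remains on screen once the utterance ends. Below is a minimal Python sketch of that end-of-utterance handling, assuming events are kept as (viseme ID, start time in seconds) pairs; both helper names are hypothetical, and this is not the sample's Unity code.

```python
from typing import List, Tuple

SILENCE_ID = 0  # Azure reports viseme ID 0 for silence

# (viseme_id, start_time_in_seconds) pairs, sorted by time.
Event = Tuple[int, float]


def ensure_trailing_silence(events: List[Event], end_s: float) -> List[Event]:
    """Return a copy of events that ends on silence.

    Hypothetical helper: the video relies on the SDK sending ID 0 last, so this
    only appends a silence event at end_s (e.g. the audio length) if one is missing.
    """
    if events and events[-1][0] == SILENCE_ID:
        return list(events)
    return list(events) + [(SILENCE_ID, end_s)]


def neutral_reached_at(events: List[Event], transition_s: float) -> float:
    """Time at which the final (silence) viseme has fully blended in."""
    if not events:
        raise ValueError("no viseme events")
    return events[-1][1] + transition_s


events = ensure_trailing_silence([(21, 0.25), (2, 0.5)], end_s=1.0)
print(events)                            # [(21, 0.25), (2, 0.5), (0, 1.0)]
print(neutral_reached_at(events, 0.25))  # 1.25
```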

Q & A

What is the main topic of the video?

-The video discusses how to implement simple and robust lip sync visemes in an Embodied AI Unity application using the Azure Speech SDK.

What modifications were made to enable lip sync visemes in the project?

-Additional modifications were made to the GitHub repository, including the upload of design files such as an initial Blender file with its associated FBX and a Maya file with the associated FBX.

Which software was used to create the mouth shapes for the visemes?

-Maya was used to create the mouth shapes, specifically its Shape Editor, which adds blend shapes for the different variations of the mouth.

What is the purpose of the initial mesh in the character's mouth?

-The initial mesh represents the character's mouth in its default position and is placed in the scene as a starting point for introducing multiple variations or targets.

Why is simple scaling not sufficient when creating targets for the mouth shape?

-Simple scaling is not sufficient because it does not account for the specific positioning adjustments of vertices needed to represent different visemes accurately.
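Blend shapes are commonly evaluated as the base mesh plus a weighted sum of per-vertex offsets toward each target, v = base + Σ wᵢ (targetᵢ − base). Because every vertex carries its own offset, a target can move the lower lip alone, which a single scale factor cannot express. The minimal Python sketch below shows that standard formulation on a hypothetical three-vertex "mouth"; it is an illustration, not the Maya or Unity internals.

```python
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


def apply_blend_shapes(base: List[Vec3],
                       targets: Dict[str, List[Vec3]],
                       weights: Dict[str, float]) -> List[Vec3]:
    """Evaluate base + sum_i w_i * (target_i - base) for every vertex.

    Weights are in 0..1 here; targets must have the same vertex count as base.
    """
    result: List[Vec3] = []
    for idx, (bx, by, bz) in enumerate(base):
        x, y, z = bx, by, bz
        for name, w in weights.items():
            tx, ty, tz = targets[name][idx]
            # Each target adds its own per-vertex offset from the base, scaled by w.
            x += w * (tx - bx)
            y += w * (ty - by)
            z += w * (tz - bz)
        result.append((x, y, z))
    return result


# Hypothetical 3-vertex "mouth": upper lip, lower lip, mouth corner.
base = [(0.0, 1.0, 0.0), (0.0, -1.0, 0.0), (1.0, 0.0, 0.0)]
# This target drops only the lower lip; a uniform scale would move every vertex.
targets = {"mouth_open": [(0.0, 1.0, 0.0), (0.0, -2.0, 0.0), (1.0, 0.0, 0.0)]}

print(apply_blend_shapes(base, targets, {"mouth_open": 0.5}))
# [(0.0, 1.0, 0.0), (0.0, -1.5, 0.0), (1.0, 0.0, 0.0)]
```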

What is the significance of the 'mouth neutral' position in the viseme implementation?

-The 'mouth neutral' position is the same as the original position and is used when mapping viseme number zero from Azure Speech SDK, providing a default state for the character's mouth.
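The Azure Speech SDK reports visemes as integer IDs; for en-US, the documentation lists IDs 0 through 21, with 0 meaning silence. Below is a minimal sketch of a dictionary that maps each ID to a blend shape name, with ID 0 routed to the neutral shape. The blend shape names and the grouping of IDs are hypothetical, untuned placeholders, not the ones in the video's Maya file.

```python
from typing import Dict, List

NEUTRAL_SHAPE = "mouth_neutral"  # hypothetical name of the neutral target

# Hypothetical, untuned grouping of the en-US viseme IDs (0-21) into a few
# authored mouth shapes. Only "ID 0 = silence" comes from the Azure docs.
SHAPE_GROUPS: Dict[str, List[int]] = {
    NEUTRAL_SHAPE: [0],
    "mouth_open": [1, 2, 3, 9, 11],
    "mouth_round": [4, 7, 8, 10],
    "mouth_wide": [5, 6, 12, 13, 14, 15, 16, 17, 19, 20],
    "mouth_closed": [18, 21],
}


def build_viseme_map(groups: Dict[str, List[int]]) -> Dict[int, str]:
    """Invert shape -> IDs into viseme ID -> blend shape name, rejecting duplicates."""
    mapping: Dict[int, str] = {}
    for shape, ids in groups.items():
        for viseme_id in ids:
            if viseme_id in mapping:
                raise ValueError(f"viseme {viseme_id} mapped twice")
            mapping[viseme_id] = shape
    return mapping


VISEME_MAP = build_viseme_map(SHAPE_GROUPS)


def shape_for(viseme_id: int) -> str:
    # Unknown IDs fall back to the neutral shape instead of failing mid-animation.
    return VISEME_MAP.get(viseme_id, NEUTRAL_SHAPE)
```

Grouping several IDs onto one authored shape keeps the number of Maya targets small; the trade-off is less distinct mouth movement.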

How does the Azure Speech SDK's SpeechSynthesizer class help in lip sync viseme implementation?

-The SpeechSynthesizer class raises a viseme received event for each viseme; each event carries a viseme ID and an audio offset, which are used to time and synchronize the lip movements.
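In the Python flavor of the Speech SDK, this event is exposed as `SpeechSynthesizer.viseme_received`, and handlers receive an event object with `viseme_id` and `audio_offset`, where the offset is in ticks of 100 nanoseconds (the C# equivalents are `VisemeReceived`, `VisemeId` and `AudioOffset`). The sketch below shows a collector that converts each offset to seconds and stores it for later playback. It avoids importing the SDK so it runs standalone; `VisemeCollector` is hypothetical, and the commented wiring assumes an already configured synthesizer.

```python
import threading
from dataclasses import dataclass
from typing import List

TICKS_PER_SECOND = 10_000_000  # audio offsets are reported in 100-ns ticks


@dataclass(frozen=True)
class VisemeEvent:
    viseme_id: int
    time_s: float  # audio offset converted to seconds


class VisemeCollector:
    """Hypothetical collector for viseme events.

    SDK callbacks may fire on a worker thread, so access is guarded by a lock.
    """

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._events: List[VisemeEvent] = []

    def on_viseme(self, evt) -> None:
        # evt is expected to expose .viseme_id and .audio_offset (in ticks).
        self.add(evt.viseme_id, evt.audio_offset)

    def add(self, viseme_id: int, audio_offset_ticks: int) -> None:
        event = VisemeEvent(viseme_id, audio_offset_ticks / TICKS_PER_SECOND)
        with self._lock:
            self._events.append(event)

    def snapshot(self) -> List[VisemeEvent]:
        # Return a time-sorted copy so the animator never sees a list being mutated.
        with self._lock:
            return sorted(self._events, key=lambda e: e.time_s)


# Wiring sketch (assumes azure-cognitiveservices-speech and a configured synthesizer):
# collector = VisemeCollector()
# synthesizer.viseme_received.connect(collector.on_viseme)
# synthesizer.speak_text_async("Hello").get()
```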

What is the importance of timing in implementing visemes?

-Timing determines how precisely each viseme lines up with the audio, which keeps the lip movements synchronized with the speech.
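Audio offsets have 100-ns resolution, while a game loop only updates once per frame (about 16.7 ms at 60 fps). Each viseme therefore effectively starts on the first frame at or after its offset, and visemes closer together than one frame can collide. A short sketch of that quantization follows, assuming 60 fps; the function names and sample offsets are hypothetical.

```python
import math
from typing import List

EPSILON = 1e-9  # absorbs float rounding so an exact frame boundary is not pushed to the next frame


def frames_for_offsets(offsets_s: List[float], fps: float = 60.0) -> List[int]:
    """First frame index whose start time is at or after each viseme offset."""
    return [math.ceil(t * fps - EPSILON) for t in offsets_s]


def frame_collisions(offsets_s: List[float], fps: float = 60.0) -> List[int]:
    """Indices of visemes that land on the same frame as the previous viseme."""
    frames = frames_for_offsets(offsets_s, fps)
    return [i for i in range(1, len(frames)) if frames[i] == frames[i - 1]]


offsets = [0.0, 0.05, 0.06, 0.062]  # hypothetical viseme times in seconds
print(frames_for_offsets(offsets))  # [0, 3, 4, 4]
print(frame_collisions(offsets))    # [3]
```

When two visemes share a frame, only one can be shown on that frame, so an implementation has to choose whether to skip the earlier one or let the later one override it.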

How does the video describe the process of implementing visemes in Unity?

-The video describes setting up variables in the sample manager class, using a coroutine for the animation, and activating and deactivating visemes based on the received events and their timing.
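The sample drives this with a Unity coroutine; outside Unity, a Python generator is a close analogue. The sketch below uses hypothetical names and turns time-sorted (viseme ID, start time) pairs into wait, activate and deactivate steps.

```python
from typing import Iterator, List, Optional, Tuple

# (viseme_id, start_time_in_seconds), sorted by time
Event = Tuple[int, float]
# (seconds to wait, viseme to activate, viseme to deactivate or None)
Step = Tuple[float, int, Optional[int]]


def viseme_steps(events: List[Event]) -> Iterator[Step]:
    """Generator loosely mirroring a Unity coroutine.

    Each yield says how long to wait before activating the next viseme and
    which previously active viseme to deactivate at the same moment.
    """
    previous: Optional[int] = None
    clock = 0.0
    for viseme_id, start_s in events:
        wait = max(0.0, start_s - clock)  # never wait a negative amount
        clock = max(clock, start_s)
        yield wait, viseme_id, previous
        previous = viseme_id


for step in viseme_steps([(0, 0.0), (21, 0.25), (0, 1.0)]):
    print(step)
# (0.0, 0, None)
# (0.25, 21, 0)
# (0.75, 0, 21)
```

Summing waits like this can drift from the audio if frames run late; comparing the audio source's current playback time with the next start time on every frame is a common alternative.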

What role does the 'transition duration' play in the lip sync process?

-The 'transition duration' determines the time it takes to transition from one viseme to another, allowing for smooth changes in the character's mouth shape.
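A transition duration is typically applied by converting elapsed time into a normalized progress value on every frame. Below is a minimal sketch; the function name and the 0.1 s duration are assumptions for illustration.

```python
def transition_progress(now_s: float, start_s: float, duration_s: float) -> float:
    """Normalized progress of a transition: 0.0 at start_s, 1.0 once duration_s has elapsed."""
    if duration_s <= 0.0:
        # A zero-length transition snaps straight to its end state.
        return 1.0 if now_s >= start_s else 0.0
    return min(1.0, max(0.0, (now_s - start_s) / duration_s))


# With a hypothetical 0.1 s transition, sampled once per frame at 60 fps:
frame_s = 1.0 / 60.0
print([round(transition_progress(i * frame_s, 0.0, 0.1), 3) for i in range(8)])
# [0.0, 0.167, 0.333, 0.5, 0.667, 0.833, 1.0, 1.0]
```

At 60 fps, a 0.1 s transition therefore spans about six frames.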

How is the blend shape weight used in the activation and deactivation of visemes?

-The blend shape weight is interpolated over time: from zero to the maximum weight when a viseme is activated, and from its current weight back to zero when it is deactivated, which produces a smooth transition.
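Starting deactivation from the current weight rather than from the maximum avoids a visible jump when a viseme is replaced before it has fully blended in. Below is a minimal sketch that assumes Unity's typical 0 to 100 weight range; in Unity, the resulting value would be passed to `SkinnedMeshRenderer.SetBlendShapeWeight` each frame. The helper names are hypothetical.

```python
MAX_WEIGHT = 100.0  # assumption: Unity's usual 0-100 blend shape weight range


def lerp(a: float, b: float, t: float) -> float:
    """Linear interpolation with t clamped to [0, 1], like Unity's Mathf.Lerp."""
    t = min(1.0, max(0.0, t))
    return a + (b - a) * t


def activation_weight(t: float, max_weight: float = MAX_WEIGHT) -> float:
    """Weight of a viseme being activated, from 0 up to max_weight."""
    return lerp(0.0, max_weight, t)


def deactivation_weight(start_weight: float, t: float) -> float:
    """Weight of a viseme being deactivated, from its weight when deactivation began down to 0."""
    return lerp(start_weight, 0.0, t)


# Crossfade a quarter of the way through: the outgoing shape had only reached 80
# (its activation was cut short), and the incoming one starts from 0.
t = 0.25
print(deactivation_weight(80.0, t), activation_weight(t))  # 60.0 25.0
```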

Outlines

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantMindmap

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantKeywords

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantHighlights

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantTranscripts

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantVoir Plus de Vidéos Connexes

Create an ANIMATION 🔥 in 3 Steps using 3 Canva AI Tools - 5 min

Lip Sync - React Three Fiber Tutorial

Cashfree Flutter Integration

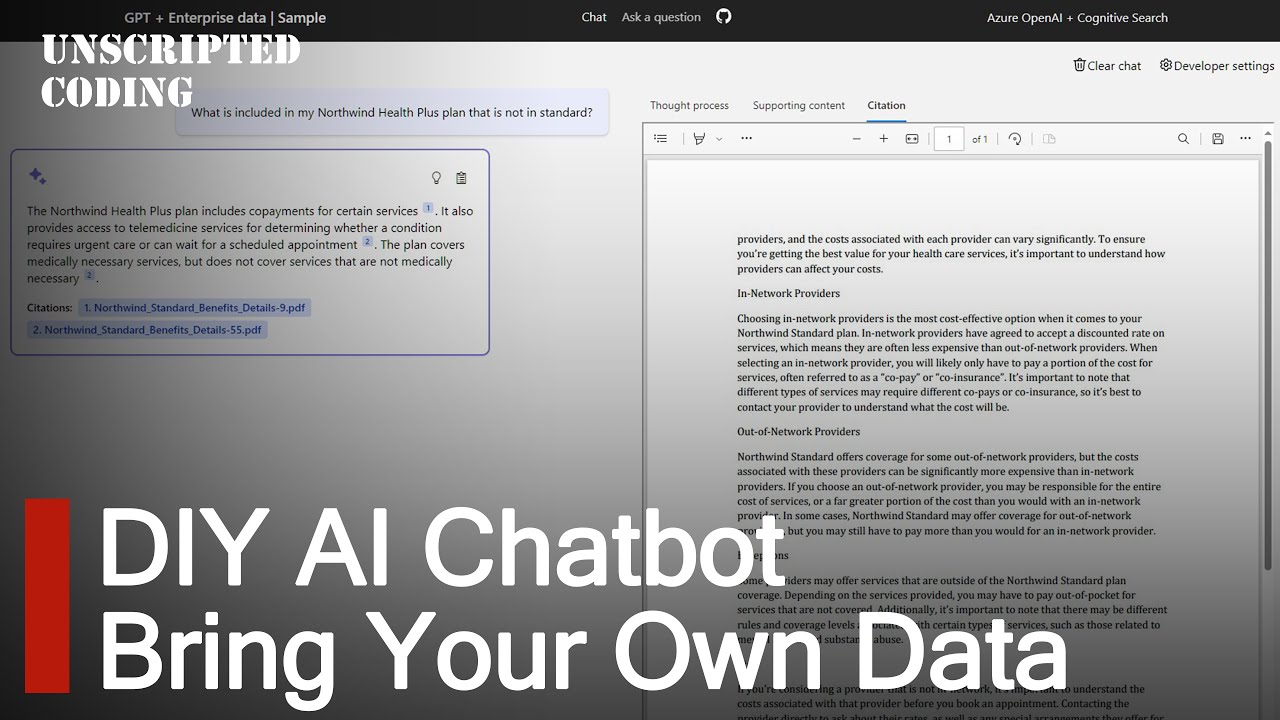

Azure Search OpenAI Demo - DIY Microsoft AI chatbot with bring-your-own-data | Unscripted Coding

What is Azure File Sync & how to configure it?

How to Make UGC Ads with AI For FREE

5.0 / 5 (0 votes)