Representing Numbers and Letters with Binary: Crash Course Computer Science #4

Summary

TLDR: This Crash Course Computer Science episode delves into how computers store and represent numerical data, introducing binary and its application in computing. It explains the concept of bits and bytes, the significance of 8-bit and 64-bit computing, and how binary is used to represent both positive and negative numbers. The video also covers floating-point numbers, the ASCII and Unicode systems for encoding text, and the importance of binary in various file formats. It sets the stage for understanding computation and the manipulation of binary data.

Takeaways

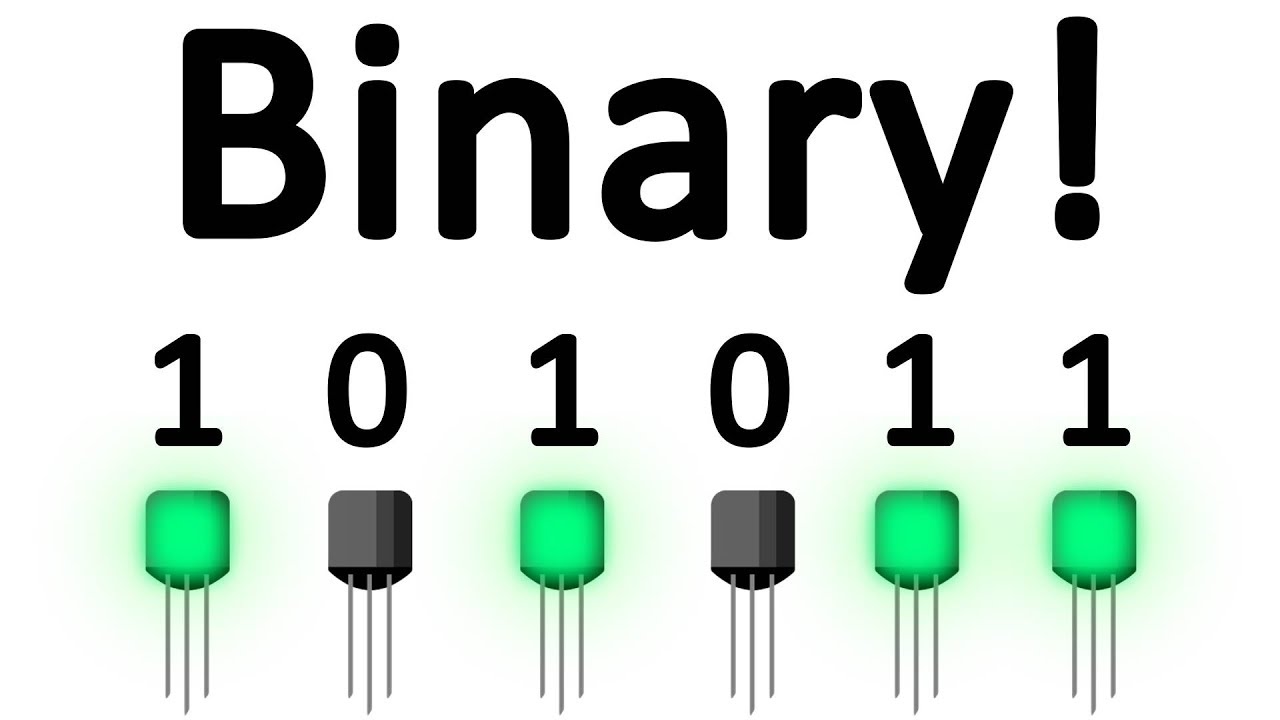

- 😲 Computers use binary digits (bits) to represent data, with two states: 1 and 0, similar to true and false in boolean algebra.

- 📚 Binary numbers work on the base-2 system, where each digit represents a power of 2, allowing for the representation of larger numbers with more digits.

- 🔢 The script explains how to convert binary numbers to decimal and vice versa, demonstrating basic binary addition.

- 💾 A byte is defined as 8 bits. Larger units like kilobytes, megabytes, and gigabytes scale by 1000 in the decimal convention or by 1024 (2^10) in the binary convention, so a kilobyte can mean either 1000 or 1024 bytes depending on context.

- 🔄 In signed integers, the first bit indicates the sign of the number, with 0 for positive and 1 for negative, so the same bit pattern can cover both positive and negative values.

- 🌐 32-bit and 64-bit computers operate in chunks of 32 or 64 bits, respectively, which significantly increases the range of numbers they can represent.

- 🌈 Computers use 32-bit color graphics to display a wide range of colors, which is why images can be so smooth and detailed.

- 📈 Floating point numbers, like 12.7 or 3.14, are represented using methods such as the IEEE 754 standard, which is similar to scientific notation.

- 🔤 ASCII is a 7-bit code that can represent 128 different values, including letters, digits, and symbols, enabling basic text representation in computing.

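- 🌍 Unicode was created in 1992 to provide a universal encoding scheme for text in any language. Its code space holds over a million code points; 16 bits alone cover only 65,536 values, so encodings such as UTF-16 use one or two 16-bit units per character.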

- 🎥 All digital media, including text, images, audio, and video, are ultimately composed of long sequences of binary 1s and 0s.
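
The 32-bit color takeaway can be made concrete with a small sketch. It assumes a pixel is stored as four 8-bit channels in red, green, blue, alpha order; that ordering and the function names `pack_rgba` and `unpack_rgba` are illustrative choices, not something the episode specifies.

```python
def pack_rgba(red: int, green: int, blue: int, alpha: int = 255) -> int:
    """Pack four 8-bit channels into one 32-bit integer (RGBA order is assumed)."""
    for channel in (red, green, blue, alpha):
        if not 0 <= channel <= 255:
            raise ValueError("each channel must fit in 8 bits (0-255)")
    # Shift each channel into its own byte, then combine with bitwise OR.
    return (red << 24) | (green << 16) | (blue << 8) | alpha


def unpack_rgba(pixel: int) -> tuple:
    """Split a 32-bit pixel back into its four 8-bit channels."""
    return (
        (pixel >> 24) & 0xFF,
        (pixel >> 16) & 0xFF,
        (pixel >> 8) & 0xFF,
        pixel & 0xFF,
    )


orange = pack_rgba(255, 128, 0)
print(f"{orange:032b}")      # the pixel as 32 binary digits
print(unpack_rgba(orange))   # the four channels recovered from the pixel
```

Each channel has 256 possible levels, so the three color channels together give 256 × 256 × 256, about 16.7 million, distinct colors.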

Q & A

How do computers store and represent numerical data?

-Computers store and represent numerical data using binary digits, or bits, which can be either 1 or 0. They use a base-two system, similar to how the decimal system uses base-ten, but with powers of two instead of ten.

What is a binary value and how is it useful?

-A binary value is a single digit in the binary number system, which can either be 1 or 0. It is useful because it allows computers to represent numbers and information in a simple form that can be easily manipulated by electronic components.

How does the binary number system represent larger numbers than just 1 and 0?

-To represent larger numbers, binary uses multiple binary digits. Each digit represents a power of two, with each position to the left representing a larger power, similar to how the decimal system uses powers of ten.
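
As a rough illustration of the positional rule above, the sketch below converts between binary strings and decimal integers by hand. The function names are hypothetical; Python's built-in `int(text, 2)` and `format(value, "b")` do the same job.

```python
def binary_to_decimal(bits: str) -> int:
    """Sum digit * 2**position, counting positions from the right."""
    total = 0
    for position, digit in enumerate(reversed(bits)):
        if digit not in ("0", "1"):
            raise ValueError(f"not a binary digit: {digit!r}")
        total += int(digit) * 2 ** position
    return total


def decimal_to_binary(value: int) -> str:
    """Repeatedly divide by two; the remainders are the bits, lowest first."""
    if value < 0:
        raise ValueError("this sketch handles non-negative integers only")
    if value == 0:
        return "0"
    digits = []
    while value > 0:
        digits.append(str(value % 2))
        value //= 2
    return "".join(reversed(digits))


print(binary_to_decimal("101"))   # 1*4 + 0*2 + 1*1
print(decimal_to_binary(183))
```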

What is the significance of the number 256 in the context of binary numbers?

-The number 256 is significant because it is the total number of different values that can be represented using 8 bits, which is 2 to the power of 8 (2^8). Since a byte is 8 bits, a single byte can hold 256 distinct values, from 0 to 255.

What is a bit and how does it relate to bytes and kilobytes?

-A bit is a binary digit, which can be either 1 or 0. A byte is 8 bits, and a kilobyte is 1000 bytes or, in the binary convention, 1024 bytes, which is 2 to the power of 10 (2^10).
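
The arithmetic behind these units is small enough to check directly. The sketch below is only illustrative; the constant and function names are assumptions made for the example.

```python
BITS_PER_BYTE = 8
KILOBYTE_DECIMAL = 10 ** 3   # 1000 bytes, the decimal convention
KILOBYTE_BINARY = 2 ** 10    # 1024 bytes, the binary convention


def distinct_values(bit_count: int) -> int:
    """Each extra bit doubles the number of patterns, giving 2**bit_count."""
    return 2 ** bit_count


def bytes_to_bits(byte_count: int) -> int:
    """Convert a byte count to a bit count."""
    return byte_count * BITS_PER_BYTE


print(distinct_values(BITS_PER_BYTE))     # patterns one byte can hold
print(bytes_to_bits(KILOBYTE_DECIMAL))    # bits in a 1000-byte kilobyte
print(bytes_to_bits(KILOBYTE_BINARY))     # bits in a 1024-byte kilobyte
```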

How do computers represent positive and negative numbers?

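-The simplest scheme uses the first bit for the sign of the number, with 1 representing negative and 0 representing positive, and the remaining bits for the magnitude. Most computers actually use the two's complement system, in which the first bit still indicates the sign but carries a negative weight, so the remaining bits are not a plain magnitude.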
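
A sign bit followed by a magnitude and two's complement are related but distinct encodings. The sketch below contrasts them for an 8-bit width; the function names and the default width are assumptions made for illustration.

```python
def sign_magnitude(value: int, width: int = 8) -> str:
    """First bit is the sign; the remaining bits hold the absolute value."""
    limit = 2 ** (width - 1) - 1
    if not -limit <= value <= limit:
        raise ValueError("value does not fit in the given width")
    sign = "1" if value < 0 else "0"
    return sign + format(abs(value), f"0{width - 1}b")


def twos_complement(value: int, width: int = 8) -> str:
    """Negative values wrap around modulo 2**width."""
    if not -(2 ** (width - 1)) <= value < 2 ** (width - 1):
        raise ValueError("value does not fit in the given width")
    return format(value % (2 ** width), f"0{width}b")


def from_twos_complement(bits: str) -> int:
    """Read the bits as unsigned, then subtract 2**width if the top bit is set."""
    raw = int(bits, 2)
    if bits[0] == "1":
        raw -= 2 ** len(bits)
    return raw


print(sign_magnitude(-5))    # sign bit, then the magnitude of 5
print(twos_complement(-5))   # 256 - 5 written in binary
print(from_twos_complement(twos_complement(-5)))
```

One practical difference: sign-magnitude has two patterns for zero (`00000000` and `10000000`), while two's complement has only one.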

What is the range of numbers that can be represented with 32-bit and 64-bit systems?

-With 32 bits, the largest unsigned number that can be represented is just under 4.3 billion; when one bit is reserved for the sign, the range is roughly plus or minus 2.1 billion. A 64-bit signed number can reach around 9.2 quintillion in either direction.
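
These limits follow directly from the bit width. The sketch below computes them, assuming two's complement for the signed case; the function names are hypothetical.

```python
def unsigned_range(bits: int) -> tuple:
    """All bits hold magnitude: 0 up to 2**bits - 1."""
    return 0, 2 ** bits - 1


def signed_range(bits: int) -> tuple:
    """Two's complement (assumed): one bit is spent on the sign."""
    return -(2 ** (bits - 1)), 2 ** (bits - 1) - 1


for width in (8, 32, 64):
    print(width, unsigned_range(width), signed_range(width))
```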

What is the IEEE 754 standard and why is it used?

-The IEEE 754 standard is a method used to represent floating-point numbers in computers. It stores decimal values in a format similar to scientific notation, with a sign bit, exponent, and significand (or mantissa), allowing for efficient representation of both very large and very small numbers.
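
To see the three fields, the sketch below uses Python's standard `struct` module to obtain the 32-bit single-precision pattern of a number and slice it into 1 sign bit, 8 exponent bits, and 23 fraction bits. The helper names are assumptions, and `rebuild_float32` covers only normal numbers, not zeros, subnormals, infinities, or NaN.

```python
import struct


def float32_fields(value: float) -> tuple:
    """Return (sign, biased exponent, fraction) of the float32 encoding."""
    (raw,) = struct.unpack(">I", struct.pack(">f", value))
    sign = raw >> 31
    exponent = (raw >> 23) & 0xFF
    fraction = raw & 0x7FFFFF
    return sign, exponent, fraction


def rebuild_float32(sign: int, exponent: int, fraction: int) -> float:
    """Recombine the fields of a normal number (biased exponent 1 to 254)."""
    if not 1 <= exponent <= 254:
        raise ValueError("this sketch handles normal numbers only")
    significand = 1 + fraction / 2 ** 23        # implicit leading 1
    return (-1) ** sign * significand * 2.0 ** (exponent - 127)


fields = float32_fields(625.9)
print(fields)
print(rebuild_float32(*fields))   # close to 625.9, limited by 32-bit precision
```

The stored exponent is biased by 127, so a field value of 127 means a real exponent of 0.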

How does ASCII represent text?

-ASCII (American Standard Code for Information Interchange) represents text by assigning a unique 7-bit binary number to each character, including letters, digits, and symbols. This allows for the encoding of 128 different values.
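
A short sketch of that mapping, using Python's built-in `ascii` codec; the helper names are hypothetical.

```python
def to_ascii_bits(text: str) -> list:
    """Encode each character as its 7-bit ASCII pattern."""
    codes = text.encode("ascii")   # raises UnicodeEncodeError outside ASCII
    return [format(code, "07b") for code in codes]


def from_ascii_bits(chunks: list) -> str:
    """Decode a list of 7-bit patterns back into text."""
    return "".join(chr(int(chunk, 2)) for chunk in chunks)


print(ord("a"), ord("A"))      # the numeric codes for the two letters
print(to_ascii_bits("Hi!"))
print(from_ascii_bits(to_ascii_bits("Hi!")))
```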

What is the purpose of Unicode and how does it differ from ASCII?

-Unicode was devised to create a universal encoding scheme that could represent characters from all languages. Unlike ASCII, which is limited to 128 characters, Unicode has room for over a million code points, covering characters from every written language along with symbols and emojis. A single 16-bit unit covers only 65,536 of them, so encodings such as UTF-16 use one or two 16-bit units per character.
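
The sketch below shows how a few characters map to Unicode code points and how many storage units they need in two common encodings, UTF-8 and UTF-16. The sample characters and the `describe` helper are illustrative choices.

```python
def describe(char: str) -> tuple:
    """Return (code point, UTF-8 byte count, UTF-16 16-bit unit count)."""
    code_point = ord(char)
    utf8_bytes = len(char.encode("utf-8"))
    utf16_units = len(char.encode("utf-16-be")) // 2
    return code_point, utf8_bytes, utf16_units


samples = ["A", "\u00e9", "\u4e2d", "\U0001F600"]   # A, e-acute, a CJK character, an emoji
for char in samples:
    code_point, utf8_bytes, utf16_units = describe(char)
    print(f"U+{code_point:04X}  utf-8 bytes: {utf8_bytes}  utf-16 units: {utf16_units}")
```

The emoji lies above U+FFFF, so it does not fit in one 16-bit unit and UTF-16 stores it as a pair.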

How do computers manipulate binary sequences for computation?

-Computers manipulate binary sequences through logic gates and arithmetic operations, such as addition, subtraction, multiplication, and division. These operations are performed on bits, which are the fundamental units of data in computing.
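
As one hedged example of arithmetic built from logic operations, the sketch below adds two binary strings with a ripple-carry scheme, using only XOR, AND, and OR on single bits. The function names are assumptions; real hardware wires these gates together rather than looping.

```python
def full_adder(a: int, b: int, carry_in: int) -> tuple:
    """Add three single bits; return (sum bit, carry out)."""
    partial = a ^ b
    total = partial ^ carry_in
    carry_out = (a & b) | (partial & carry_in)
    return total, carry_out


def add_bits(left: str, right: str) -> str:
    """Add two binary strings column by column, right to left."""
    width = max(len(left), len(right))
    left, right = left.zfill(width), right.zfill(width)
    carry = 0
    result = []
    for a, b in zip(reversed(left), reversed(right)):
        total, carry = full_adder(int(a), int(b), carry)
        result.append(str(total))
    if carry:
        result.append("1")   # a final carry adds one more digit
    return "".join(reversed(result))


print(add_bits("10110111", "10011"))   # 183 + 19 in binary
```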
