EDA - part 1

Summary

TLDRIn this Python class lecture, the focus is on a practical application of pandas for data manipulation and visualization. The lecturer guides through a real-life case study using a house prices dataset. Key topics include data cleaning, exploratory data analysis, and creating various graphs using matplotlib and seaborn libraries. The session covers handling missing values, analyzing the impact of different features on sale prices, and introduces basic plotting techniques.

Takeaways

- 📊 This lecture focuses on practical case studies using pandas for data manipulation and visualization with a real estate dataset.

- 🏠 The dataset explores various factors affecting house prices, emphasizing the importance of data cleaning and exploratory data analysis (EDA).

- 📈 Visualization is a key component, teaching how to create different types of graphs to represent data insights.

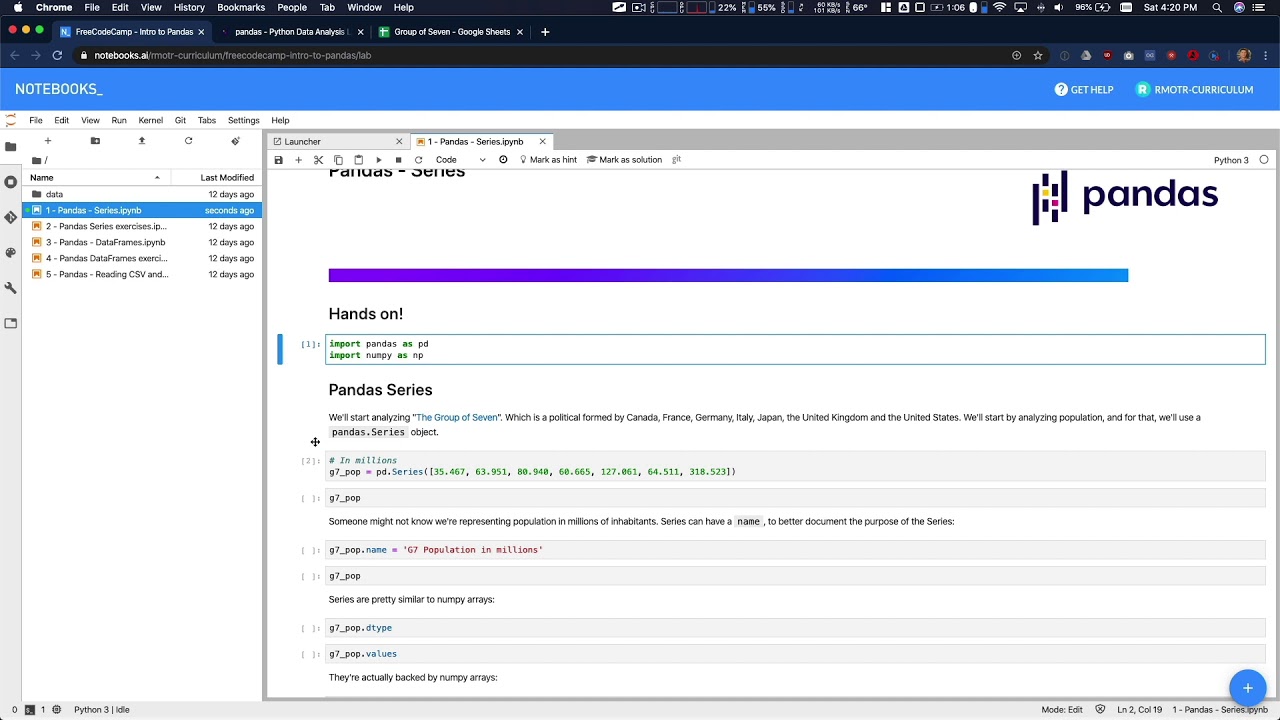

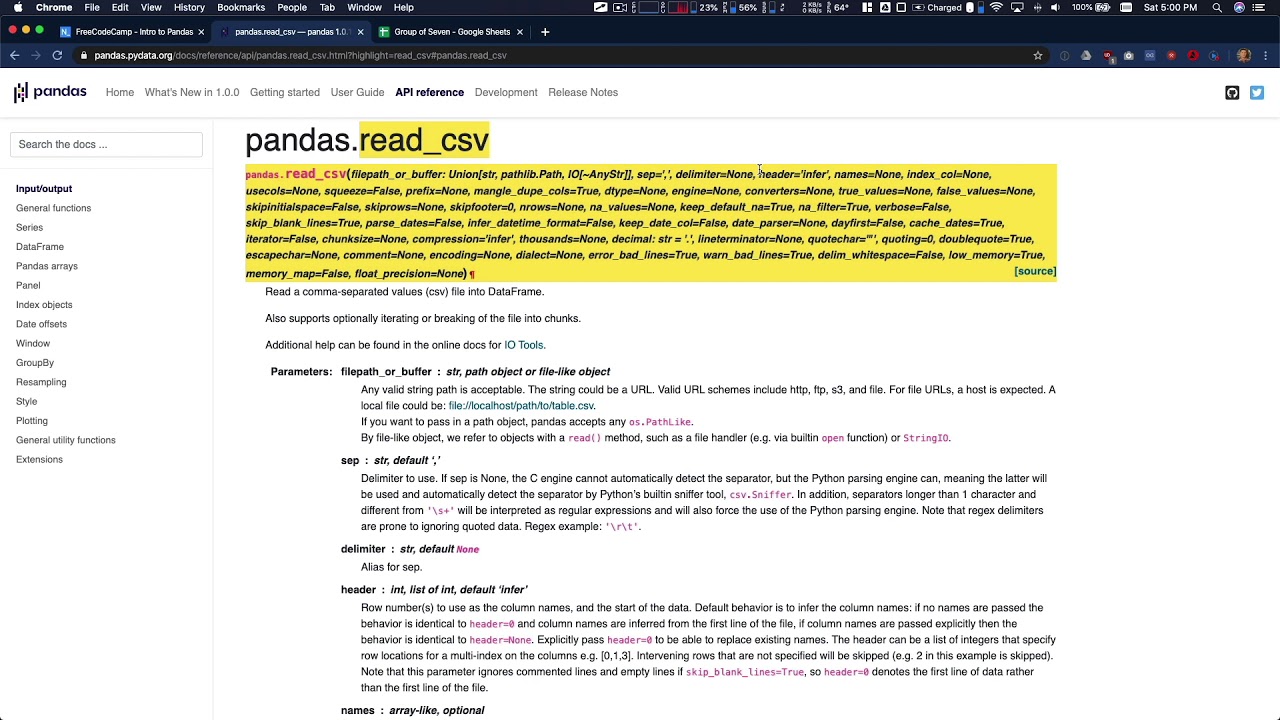

- 📂 The lecture demonstrates how to import data from a CSV file, emphasizing the use of relative paths for file locations.

- 🔍 Data exploration techniques such as `head()`, `tail()`, and `shape` are covered to understand the dataset's structure and contents.

- 🧹 The importance of data cleaning is highlighted, including checking for and handling duplicates using `drop_duplicates()`.

- 📊 `describe()` function is used to get an overview of the dataset's statistics, helping to understand data distribution and identify outliers.

- 🕵️♂️ The script discusses checking for missing values using `isnull()` and `sum()`, which is crucial for accurate data analysis.

- 📉 A demonstration on plotting missing values using matplotlib to create bar graphs, showing which features have missing data.

- 🏡 The lecture explores how to fill missing values, using techniques like filling missing alley types with 'No Alley' as an example.

- 📊 Grouping data by categories (like Alley, Fence, or Bedroom) and calculating statistics (like mean or median) to find patterns or relationships with the sale price.

Q & A

What is the main focus of the lecture series on Python classes?

-The main focus of the lecture series is to explore practical cases with Python, specifically using the pandas library for data manipulation and visualization.

What dataset is used in the lecture for practical case studies?

-The dataset used in the lecture is about house prices, which depends on a variety of factors, and is intended for data cleaning and exploratory data analysis.

How can one obtain the house price dataset mentioned in the lecture?

-The house price dataset can be obtained from sources like Kaggle or Google Dataset. The lecturer saved a CSV file from the web for the lecture.

What is exploratory data analysis and why is it important?

-Exploratory data analysis (EDA) is the process of using statistics and visualizations to discover patterns in data. It's important for understanding the characteristics of a dataset and informing further analysis or modeling.

How does one check for duplicates in a pandas DataFrame?

-One can check for duplicates in a pandas DataFrame using the `drop_duplicates()` method. This method removes duplicate rows, and if no duplicates are found, the shape of the DataFrame remains the same.

What is the significance of checking for null values in a dataset?

-Checking for null values is significant because it helps identify missing data which can affect the accuracy of statistical analysis. It's a part of data cleaning to ensure the quality of the dataset.

How can one visualize the count of missing values in different columns of a DataFrame?

-One can visualize the count of missing values using a bar plot with matplotlib. The columns can be on the x-axis and the count of missing values on the y-axis.

What does the term 'normalize' mean in the context of value counts?

-In the context of value counts, 'normalize' means to scale the counts to represent proportions rather than absolute numbers, providing a percentage distribution of the unique values.

How can one analyze the impact of different parameters on the sale price in the dataset?

-One can analyze the impact of different parameters on the sale price by using group by operations to calculate statistics like mean or median within categories defined by those parameters.

What libraries are mentioned for data visualization in Python?

-The libraries mentioned for data visualization in Python are matplotlib, seaborn, and plotly.

What is the role of seaborn in data visualization compared to matplotlib?

-Seaborn is built on top of matplotlib and is designed to provide more attractive and informative statistical graphics. It is easier to use for creating complex visualizations and is well adapted for working with pandas DataFrames.

Outlines

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantMindmap

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantKeywords

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantHighlights

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantTranscripts

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenant5.0 / 5 (0 votes)