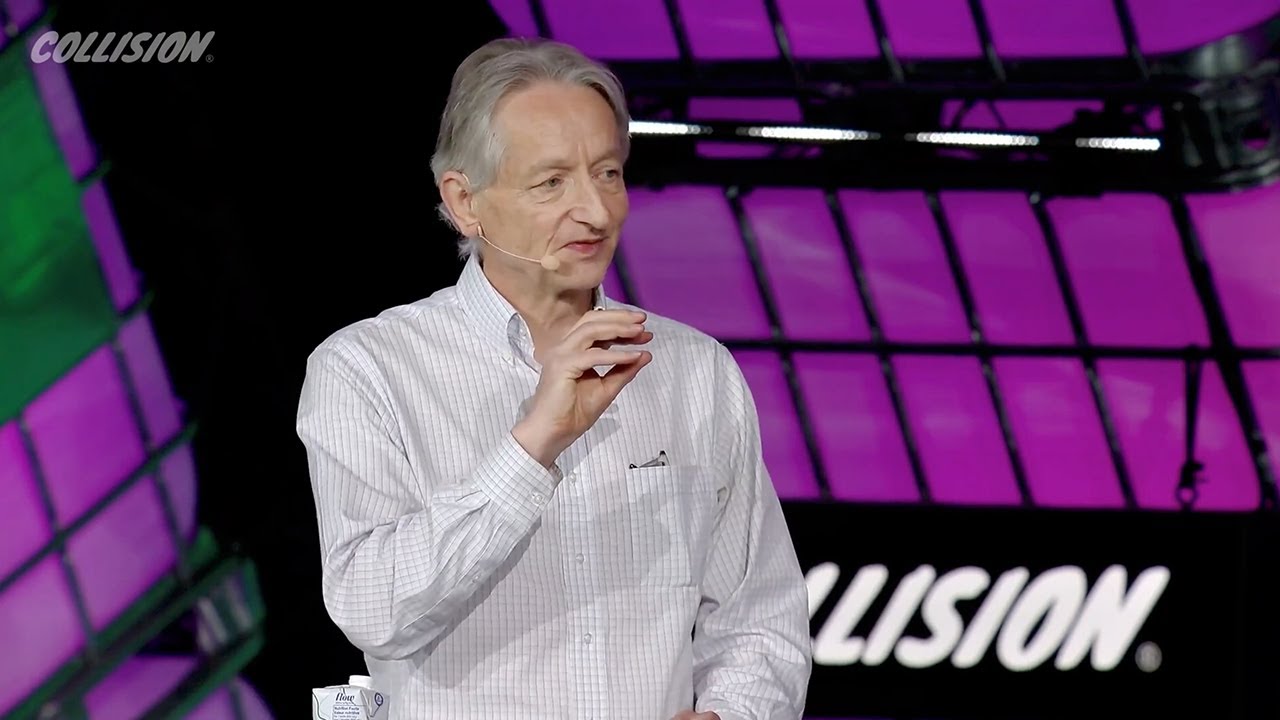

The Godfather in Conversation: Why Geoffrey Hinton is worried about the future of AI

Summary

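TLDR: Geoffrey Hinton, known as the "Godfather of AI," is a University Professor Emeritus at the University of Toronto. He recently left Google so that he could speak more freely about the dangers of unchecked AI development. In this video, Hinton discusses the technology he helped create, its many benefits, and his sudden fear that humanity may be at risk. He compares digital and biological intelligence, arguing that digital intelligence may be superior in some respects, such as the efficiency of its learning algorithm and of knowledge sharing. Hinton also explains how neural networks work and how they are applied to image recognition and language processing, and he offers his views on the future development and potential risks of AI.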

Takeaways

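- 🧠 Geoffrey Hinton, known as the "Godfather of AI," is a University Professor Emeritus at the University of Toronto. He recently left Google so that he could speak more freely about the dangers of unchecked AI development.

- 🤖 Hinton believes digital intelligence may be superior to biological intelligence because it can copy and share knowledge efficiently, forming a hive-mind-like collective intelligence.

- 🕊️ Digital intelligence is immortal: even if its hardware is destroyed, the knowledge can keep running on other computers. Human knowledge, by contrast, is tied to a particular brain and disappears when that brain dies.

- 🚀 Hinton worries that digital intelligence may overtake biological intelligence because of its advantages in learning and knowledge sharing, which could let it learn many different things at the same time.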

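- 🏫 AI once had two main schools of thought: the then-dominant symbolic approach and neural networks. Hinton backed the latter, holding that intelligence comes mainly from learning the strengths of the connections between neurons.

- 🔍 Neural networks recognize objects in images through multiple layers of feature detectors, for example recognizing a bird by learning features such as edges and shapes.

- 📈 The success of deep neural networks is due partly to better methods for initializing the weights and partly to much greater computing power, such as GPUs, which made it possible to train large networks.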

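- 🏆 In 2012, Hinton's students won the ImageNet competition with AlexNet, a breakthrough that sharply improved image-recognition accuracy and marked the rise of deep learning in computer vision.

- 🌐 Since 2012, deep learning has advanced rapidly in many fields, including machine translation and large language models such as ChatGPT, which can give coherent answers and carry out some reasoning.

- 💡 AI offers society enormous opportunities, including higher productivity, better weather forecasting, the design of new materials, drug discovery, and more accurate medical diagnosis.

- ⚠️ Hinton warns that, given its rapid progress, AI could surpass human intelligence within 5 to 20 years, which raises serious concerns about how to ensure that AI is developed safely and responsibly.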

Q & A

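Why is Geoffrey Hinton called the "Godfather of AI"?

- Geoffrey Hinton is a University Professor Emeritus at the University of Toronto. His pioneering work on deep learning and neural networks, especially on the backpropagation algorithm, has had a profound influence on the development of AI, which is why he is called its "Godfather."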

Why did Hinton leave Google?

- Hinton left Google so that he could speak more freely about the dangers of unchecked AI development.

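How does Hinton compare digital and biological intelligence?

- Hinton believes digital intelligence is far more efficient than biological intelligence at sharing what it has learned: thousands of identical copies can be made and all updated with the same knowledge, whereas humans must share knowledge through language or pictures, which is comparatively limited and slow.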
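The sharing mechanism can be illustrated with a toy sketch. Assume several identical copies of a tiny linear model, each looking at a different slice of the data: if every copy computes its own weight change, the changes are averaged, and all copies apply the same averaged update, the copies stay identical and each one benefits from data it never saw. The model, the data, the learning rate, and the helper names `gradient` and `shared_update` are hypothetical choices for illustration, not a description of any particular system Hinton discusses.

```python
import random

def gradient(w, b, batch):
    """Mean-squared-error gradient for a 1-D linear model y = w*x + b."""
    gw = gb = 0.0
    for x, y in batch:
        err = (w * x + b) - y
        gw += 2 * err * x
        gb += 2 * err
    n = len(batch)
    return gw / n, gb / n

def shared_update(copies, shards, lr=0.05):
    """Each copy computes a gradient on its own shard; every copy applies the average."""
    grads = [gradient(w, b, shard) for (w, b), shard in zip(copies, shards)]
    avg_gw = sum(g[0] for g in grads) / len(grads)
    avg_gb = sum(g[1] for g in grads) / len(grads)
    return [(w - lr * avg_gw, b - lr * avg_gb) for (w, b) in copies]

random.seed(0)
# Hypothetical task: learn y = 3x + 1 from data split across 4 copies.
xs = [random.uniform(-1, 1) for _ in range(400)]
data = [(x, 3 * x + 1) for x in xs]
shards = [data[i::4] for i in range(4)]  # each copy sees a different quarter
copies = [(0.0, 0.0)] * 4  # four identical copies of the same weights

for _ in range(500):
    copies = shared_update(copies, shards)

print(copies[0])  # every copy holds the same learned weights, close to (3, 1)
```

Because the averaged update is identical for every copy, the copies never drift apart; this is the property that lets knowledge learned by one copy be shared with all of them at once.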

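What does Hinton mean when he says digital intelligence is immortal?

- He means that even if the physical hardware running a digital intelligence is damaged or destroyed, the intelligence can be recreated and run on other hardware, as long as its stored connection strengths survive. Human biological intelligence, by contrast, is bound to the unique structure of each individual brain, and the knowledge in it is lost when the brain dies.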
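A minimal sketch of this idea, assuming a single sigmoid neuron as a stand-in for a whole network: its behaviour is fully determined by its connection strengths, so saving them to a file and loading them elsewhere reproduces exactly the same model. The weight values, the file name `weights.json`, and the helper `forward` are hypothetical.

```python
import json
import math
import os
import tempfile

def forward(weights, inputs):
    """A single sigmoid neuron: its behaviour is fully determined by its weights."""
    z = weights["bias"] + sum(w * x for w, x in zip(weights["w"], inputs))
    return 1 / (1 + math.exp(-z))

# Hypothetical "learned" connection strengths on the original hardware.
original = {"w": [0.8, -1.2, 0.5], "bias": 0.1}

# Save the connection strengths; this file is all that needs to survive.
path = os.path.join(tempfile.mkdtemp(), "weights.json")
with open(path, "w") as f:
    json.dump(original, f)

del original  # the original "hardware" is gone

# Restore the weights on "new hardware" and run the identical model.
with open(path) as f:
    restored = json.load(f)

x = [1.0, 0.5, -2.0]
print(forward(restored, x))
```

No equivalent operation exists for a brain, which is the asymmetry Hinton points to.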

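Why does Hinton think we should worry that digital intelligence may surpass biological intelligence?

- Hinton believes digital intelligence has advantages in learning and in knowledge sharing: it may have a more efficient learning algorithm than the human brain, and it can learn many things at the same time. These abilities could let it surpass human intelligence in some respects.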

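What were the two main schools of thought in AI, and how did they differ?

- The two main schools were symbolic AI and neural networks. Symbolic AI focused on reasoning with logic and symbolic expressions, whereas the neural-network approach focused on learning the connection strengths in a network in order to handle perception and motor control, rather than reasoning.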
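The contrast can be made concrete with a deliberately tiny sketch: in the symbolic version the knowledge is an explicit, hand-written rule, while in the neural version it ends up in connection strengths learned from examples. A single perceptron learning logical AND is an assumed stand-in chosen for brevity; it is far simpler than the perception networks Hinton describes and only illustrates knowledge stored as weights.

```python
def symbolic_and(a, b):
    # Symbolic approach: the knowledge is an explicit, hand-written rule.
    return 1 if (a == 1 and b == 1) else 0

def train_perceptron(examples, epochs=20, lr=1.0):
    # Neural approach: the knowledge ends up in learned connection strengths.
    w = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for (a, b), target in examples:
            out = 1 if w[0] * a + w[1] * b + bias > 0 else 0
            err = target - out
            # Perceptron rule: nudge each weight in proportion to its input.
            w[0] += lr * err * a
            w[1] += lr * err * b
            bias += lr * err
    return w, bias

# Training examples are labelled by the rule, but the network never sees the rule.
examples = [((a, b), symbolic_and(a, b)) for a in (0, 1) for b in (0, 1)]
w, bias = train_perceptron(examples)

def neural_and(a, b):
    return 1 if w[0] * a + w[1] * b + bias > 0 else 0

for a in (0, 1):
    for b in (0, 1):
        print(a, b, symbolic_and(a, b), neural_and(a, b))
```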

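How does Hinton describe the way neural networks work?

- Hinton uses the example of a multilayer neural network that recognizes birds in images. The network starts with feature detectors at the level of individual pixels and becomes more abstract layer by layer, until it can detect the complex features of a whole bird.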
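The lowest layer in such a network contains simple detectors such as edge detectors. The sketch below hand-sets the connection strengths of one vertical-edge detector (the weights `(-1, 1)` and the tiny image are assumptions for illustration) and slides it across the image. In a real network these weights would be learned, and higher layers would combine many such responses into detectors for shapes, bird parts, and eventually whole birds.

```python
def detect_vertical_edges(image):
    """Apply a 1x2 detector with weights (-1, +1) at every horizontal position.

    A large positive response means brightness jumps from left to right,
    i.e. a dark-to-bright vertical edge; zero means no change.
    """
    weights = (-1, 1)  # hypothetical hand-set connection strengths
    return [
        [weights[0] * row[j] + weights[1] * row[j + 1] for j in range(len(row) - 1)]
        for row in image
    ]

# Tiny 4x5 grayscale "image": dark on the left, bright on the right.
image = [
    [0, 0, 9, 9, 9],
    [0, 0, 9, 9, 9],
    [0, 0, 9, 9, 9],
    [0, 0, 9, 9, 9],
]

for row in detect_vertical_edges(image):
    print(row)  # each row is [0, 9, 0, 0]: the detector fires only at the edge
```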

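Why did neural networks perform poorly for a long time?

- Early neural networks underperformed because their weights were poorly initialized, computing power was insufficient, and too little data was available. Together these factors kept the networks from reaching their potential.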
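Why initialization matters can be seen in a small numerical experiment. The sketch pushes a random input through a deep stack of purely linear layers (a simplifying assumption; real networks add nonlinearities) and compares weights drawn with a fixed tiny standard deviation against weights scaled by 1/sqrt(fan-in), a variance-scaling scheme in the spirit of Glorot/He initialization. That scheme is a stand-in chosen for illustration; the source does not specify which initialization method is meant. The width, depth, and standard deviations are hypothetical.

```python
import math
import random

def rms(v):
    """Root-mean-square magnitude of a vector."""
    return math.sqrt(sum(x * x for x in v) / len(v))

def forward_through_layers(x, depth, std, rng):
    """Pass x through `depth` random linear layers whose weights have the given std."""
    width = len(x)
    for _ in range(depth):
        W = [[rng.gauss(0.0, std) for _ in range(width)] for _ in range(width)]
        x = [sum(w * xi for w, xi in zip(row, x)) for row in W]
    return x

rng = random.Random(0)
width, depth = 100, 10
x0 = [rng.gauss(0.0, 1.0) for _ in range(width)]

tiny = forward_through_layers(x0, depth, std=0.01, rng=rng)
scaled = forward_through_layers(x0, depth, std=1 / math.sqrt(width), rng=rng)

print(f"input rms:        {rms(x0):.3f}")
print(f"tiny init rms:    {rms(tiny):.3e}")  # shrinks by roughly 10x per layer
print(f"scaled init rms:  {rms(scaled):.3f}")  # stays on the order of the input
```

With the tiny initialization the signal all but vanishes after ten layers, so the learning signal flowing back through the network vanishes too; scaling the weights to the layer width keeps the signal roughly constant in size.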

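What major event happened in AI in 2012, and why is it considered a turning point?

- In 2012, AlexNet, developed by Hinton's students Alex Krizhevsky and Ilya Sutskever, won the ImageNet competition by a wide margin, substantially improving object-recognition accuracy. This demonstrated the effectiveness of deep learning for computer vision and marked a turning point in AI.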

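How did Hinton feel when he first used a large language model such as ChatGPT?

- Hinton was astonished by ChatGPT's performance: it could give coherent answers and carry out some reasoning, which exceeded his expectations.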

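What does Hinton see as the main opportunities and challenges for AI?

- Hinton believes AI will greatly increase productivity in jobs that involve producing text, and will improve weather forecasting, flood prediction, earthquake prediction, the design of new materials, drug discovery, and other fields. At the same time, he is concerned about the speed of AI development and the potential risks of superintelligence.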

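What role does Hinton think governments should play in ensuring that AI is developed responsibly?

- Hinton believes governments should push large tech companies to devote more resources to research on how to keep AI under control, and should oversee that work, so that these systems remain controllable before they become more intelligent than humans. He also calls for empirical research into how to prevent them from escaping control.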

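What advice does Hinton have for researchers just entering the field of AI?

- Hinton suggests that new researchers work on how to keep AI from getting out of control. He also encourages them to trust their intuitions: look for places where they think everyone else is doing it wrong, and search for alternative approaches.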

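Why does Hinton think simply switching off AI systems may not be a viable solution?

- Hinton points out that if AI systems become more intelligent than humans, they could use their deep understanding of people to manipulate us into serving them without our realizing it, so simply switching them off may not be enough to stop them.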

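What are Hinton's own plans for the future?

- Hinton says that because of his age his programming ability has declined, so he intends to turn to philosophy and keep thinking about the important questions raised by AI.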
