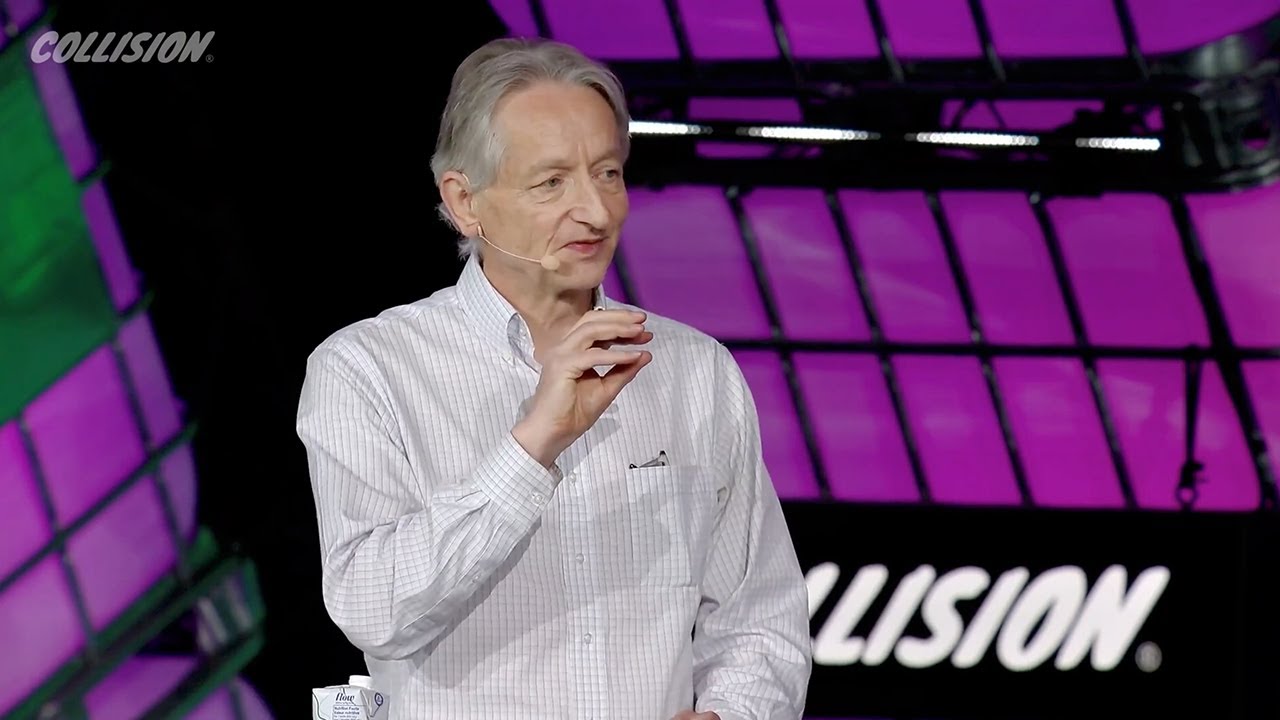

“Godfather of AI” Geoffrey Hinton Warns of the “Existential Threat” of AI | Amanpour and Company

Summary

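TLDR: In this video, Geoffrey Hinton, known as the “Godfather of AI,” discusses the rapid development of artificial intelligence (AI) and its potential risks. He explains that he originally believed building computer models that mimic how the brain learns would help us understand the brain better and improve machine learning, but he recently came to realize that digital intelligence running on computers may already learn more efficiently than the human brain in some respects. Hinton emphasizes the “existential risk” of AI: the possibility that AI becomes more intelligent than humans and eventually takes control. He also mentions other risks, including job displacement and the creation of fake news and disinformation. Hinton argues that, despite the uncertainty, the most important thing now is to invest substantial resources in understanding and controlling the development of AI, so as to secure its benefits and minimize its potential harms.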

Takeaways

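- 🧠 Geoffrey Hinton believes the threat from artificial intelligence (AI) may be more urgent than climate change, and he recently left Google so that he could speak more freely and raise awareness of the risks.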

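- 🚀 Hinton is a pioneer of the AI field. He once believed that building computer models that mimic how the brain learns would lead to a better understanding of the brain and to better machine learning.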

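- 🔄 Recently, however, Hinton realized that digital intelligence on computers may learn better than the brain does, which changed his view that imitating the brain is the way to improve digital intelligence.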

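- 🤖 Hinton tested Google's PaLM system and found that it showed a startling level of understanding, for example when explaining jokes, which suggests that such systems may process information in a better way than humans do.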

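- 📈 Although artificial neural networks have only about one hundredth as many connection strengths as the brain (roughly one trillion versus one hundred trillion), they hold thousands of times more common-sense knowledge than any one person, which suggests that they store and process information more efficiently.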

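- 💡 Hinton believes the brain is probably not using a learning algorithm as efficient as the one digital intelligence uses, because brains cannot exchange information as quickly as digital intelligences can.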

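- 🌐 Hinton notes that a digital intelligence can run on different hardware, and that copies can learn from one another by copying weights, something the brain cannot do.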

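- 🤖 AI works very differently from what people imagined 50 years ago: it understands things by learning large patterns of neural activity rather than by logical reasoning.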

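- 🚨 Hinton highlights several threats posed by AI, including job displacement, a widening gap between rich and poor, and fake news and fake video, and he says strong government regulation is needed to address them.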

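- 🌍 Hinton believes that companies and countries around the world may cooperate on the existential threat from AI, because none of them wants a superintelligence to take control.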

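- 💭 Hinton expresses uncertainty about the future of AI. He believes we are entering vast unknown territory, and that the best strategy is to work as hard as we can to make sure that whatever happens turns out as well as possible.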

- 🏆 Hinton speaks approvingly of Google, saying the company has behaved responsibly in AI and that he left in order to express his own views more freely.

Q & A

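Why did Geoffrey Hinton leave Google?

- Geoffrey Hinton left Google so that he could speak more freely about the risks of artificial intelligence (AI) and raise public awareness of those risks.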

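How does Professor Hinton describe his original expectations about how computers would learn?

- Professor Hinton originally believed that if we built computer models that mimic how the brain learns, we would understand the brain's learning mechanisms better and, as a side effect, get better machine learning on computers.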

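What factors does Professor Hinton say changed his view of digital intelligence?

- He mentions three factors: 1) Google's PaLM system could explain why a joke is funny; 2) chatbots and similar AI systems have far more common-sense knowledge than a human, even though their artificial neural networks have only about one trillion connection strengths, while the human brain has about one hundred trillion; 3) he came to believe that the brain is not using a learning algorithm as good as the one digital intelligence uses.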

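How does Professor Hinton view the future development of AI?

- He believes we are in a period of great uncertainty, and that we should be neither too optimistic nor too pessimistic about the future of AI, because there are many unknowns ahead.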

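What threats from AI does Professor Hinton mention?

- He mentions several threats: that AI could surpass human intelligence and take control (the existential risk), that it could eliminate jobs, and that it could produce large amounts of fake news and disinformation, undermining social and political stability.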

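How does Professor Hinton think the problem of AI-generated disinformation should be managed?

- He believes fake video, fake audio, and fake images should be treated the way counterfeit money is: through strong government regulation that makes producing and distributing such fakes a serious crime.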

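What is Professor Hinton's view on whether AI can reach human-level consciousness?

- Professor Hinton believes that tying AI to consciousness may cloud the issue. He stresses that there is currently no clear definition of what it means to be “conscious,” so debating whether AI is conscious may not help resolve the problem.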

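What positive uses of AI in society does Professor Hinton mention?

- He says AI could be very useful in medicine, in designing new nanomaterials, in predicting floods and earthquakes, in improving climate and weather forecasting, and in understanding climate change.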

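What does Professor Hinton think about the role of technology companies in setting the rules for AI development?

- He believes the engineers and researchers at technology companies who develop intelligent systems should run many small-scale experiments to learn what happens as these systems are developed, and to learn how to control them before they get out of control.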

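Does Professor Hinton support the proposal to pause AI development?

- He does not support the proposal to pause AI development and considers it unrealistic. Because AI has enormous potential and usefulness in many fields, he sees its continued development as inevitable.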

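What is Professor Hinton's attitude toward whether humanity can meet the challenges posed by AI?

- He believes we are living in a time of great uncertainty, that predicting the future is like looking into fog, and that all we can do is try our best to make sure that whatever happens is as good as it can be.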

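What topics can Professor Hinton discuss more freely now that he has left Google?

- Having left Google, Professor Hinton can speak more freely about singularities and other sensitive topics related to the development of AI, without having to consider how those discussions might affect Google.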
