AWS re:Invent 2020: Understand ML model predictions & biases with Amazon SageMaker Clarify

Summary

TLDR: In this re:Invent presentation, Pinar Yilmaz and Michael Sun explore Amazon SageMaker Clarify, a tool designed to demystify machine learning predictions and biases. They define bias in ML, introduce SageMaker Clarify, discuss its application at Prudential, and demonstrate how it detects bias and improves explainability across the ML lifecycle. Its integration with various SageMaker components and its support for meeting regulatory requirements make it especially useful in the financial sector.

Takeaways

- 📚 Amazon SageMaker Clarify is a tool designed to help users understand machine learning predictions and detect biases within models.

- 🔍 Bias in machine learning is defined as an imbalance in prediction accuracy across different groups. It is crucial to identify and mitigate it throughout the ML lifecycle.

- 🛠️ SageMaker Clarify offers a suite of APIs and core libraries that integrate with various SageMaker components, aiding in bias detection, mitigation, and model explainability.

- 📈 The tool is used in practical applications such as Bundesliga Match Facts, where it helps explain the machine learning model's decisions in real time for a better fan experience.

- 🏢 Prudential Financial uses SageMaker Clarify to build transparency and trust with regulators, internal stakeholders, and customers by explaining AI decisions and detecting biases.

- 🔑 SageMaker Clarify is particularly important for regulated industries like insurance, where explainability is key for compliance with laws and maintaining customer trust.

- 📊 The tool generates bias reports and visualizes metrics, helping users identify class imbalances and understand feature importance within models.

- 🔄 SageMaker Clarify can be used to monitor models in production, detecting drift in bias and explainability metrics over time, signaling the need for potential model retraining.

- 🛑 There's a trade-off between model accuracy and interpretability; simple models may be more interpretable but less accurate, while complex models may be more accurate but harder to understand.

- 🌐 Various explainability techniques exist, including perturbation-based algorithms, gradient-based algorithms, and rule extraction. The right one can be selected according to the use case.

- 🔬 SageMaker Clarify is being considered for inclusion in Prudential's future AI/ML platform governance, ensuring model explainability is a standard part of their AI practice.
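The takeaways frame bias as an imbalance in prediction accuracy across groups. As a minimal sketch of that idea, the plain-Python functions below compute an accuracy difference between two groups, AD = ACC_a - ACC_d, modeled on the post-training Accuracy Difference metric described in the Clarify documentation. This is not Clarify's implementation; the function names and the sample labels, predictions, and groups are hypothetical.

```python
from typing import List, Sequence


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of predictions that match the true label."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and equal in length")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def accuracy_difference(
    y_true: Sequence[int],
    y_pred: Sequence[int],
    facet: Sequence[str],
    facet_value: str,
) -> float:
    """AD = ACC_a - ACC_d.

    Rows whose facet equals facet_value form group d; all other rows form group a.
    A positive AD means the model is less accurate for group d.
    """
    a_true: List[int] = []
    a_pred: List[int] = []
    d_true: List[int] = []
    d_pred: List[int] = []
    for t, p, f in zip(y_true, y_pred, facet):
        if f == facet_value:
            d_true.append(t)
            d_pred.append(p)
        else:
            a_true.append(t)
            a_pred.append(p)
    return accuracy(a_true, a_pred) - accuracy(d_true, d_pred)


# Hypothetical labels, predictions, and group memberships.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 1, 1, 0, 1, 0]
groups = ["a", "a", "a", "a", "d", "d", "d", "d"]
print(accuracy_difference(y_true, y_pred, groups, "d"))  # 0.75
```

Here the model is right on all four group-a rows but only one of four group-d rows, so the accuracy gap is 1.0 - 0.25 = 0.75.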

Q & A

Who is Pinar Yilmaz and what is her role in the AWS Deep Engine team?

-Pinar Yilmaz is a senior software engineer in the AWS Deep Engine team. In this session, she presents Amazon SageMaker Clarify, a tool that helps users understand machine learning predictions and biases.

What is Amazon SageMaker Clarify and what does it aim to address?

-Amazon SageMaker Clarify is a tool designed to help users understand the predictions and biases in machine learning models. It provides insights into potential imbalances in the accuracy of predictions across different groups and offers methods to detect and mitigate these biases.

What are the three main reasons for addressing bias in the machine learning lifecycle?

-The three main reasons for addressing bias in the machine learning lifecycle are: 1) During the data science phase to understand inherent biases in the dataset or model, 2) When operationalizing models to provide explanations to stakeholders, and 3) For regulatory purposes to comply with laws and regulations around algorithm behavior and the right to explanations.

How does SageMaker Clarify help in the data science phase of a machine learning project?

-SageMaker Clarify helps in the data science phase by allowing users to run a bias report to understand the bias metrics in the dataset before training begins. This helps in identifying any inherent or embedded biases early in the process.
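A pre-training bias report looks only at the dataset's labels and facets, before any model exists. The sketch below computes two such metrics in plain Python: Class Imbalance, CI = (n_a - n_d) / (n_a + n_d), and Difference in Proportions of Labels, DPL = q_a - q_d, following their definitions in the Clarify documentation as we understand them. Here d is the facet value under scrutiny and a is every other row. The helper names, the `age_group` facet, and the labels are made up for illustration.

```python
from typing import List, Sequence, Tuple


def split_by_facet(
    labels: Sequence[int], facet: Sequence[str], facet_value: str
) -> Tuple[List[int], List[int]]:
    """Return (labels of group a, labels of group d); d is where facet == facet_value."""
    a = [y for y, f in zip(labels, facet) if f != facet_value]
    d = [y for y, f in zip(labels, facet) if f == facet_value]
    return a, d


def class_imbalance(facet: Sequence[str], facet_value: str) -> float:
    """CI = (n_a - n_d) / (n_a + n_d); ranges from -1 to 1, 0 means equal group sizes."""
    n_d = sum(1 for f in facet if f == facet_value)
    n_a = len(facet) - n_d
    return (n_a - n_d) / (n_a + n_d)


def diff_in_label_proportions(
    labels: Sequence[int], facet: Sequence[str], facet_value: str, positive: int = 1
) -> float:
    """DPL = q_a - q_d, where q is the share of positive labels in each group."""
    a, d = split_by_facet(labels, facet, facet_value)
    if not a or not d:
        raise ValueError("both groups need at least one row")
    q_a = sum(1 for y in a if y == positive) / len(a)
    q_d = sum(1 for y in d if y == positive) / len(d)
    return q_a - q_d


# Hypothetical dataset: 6 rows aged 25+, 2 rows under 25.
labels = [1, 1, 0, 1, 1, 0, 1, 0]
age_group = ["25_plus"] * 6 + ["under_25"] * 2
print(class_imbalance(age_group, "under_25"))  # 0.5
print(round(diff_in_label_proportions(labels, age_group, "under_25"), 3))  # 0.167
```

A CI of 0.5 says the under-25 group is heavily underrepresented, and a positive DPL says it also receives positive labels less often, both of which are worth knowing before training begins.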

What is the trade-off between accuracy and interpretability in machine learning models?

-The trade-off between accuracy and interpretability in machine learning models is that simple models, which are easy to understand and interpret by humans, may not provide the desired accuracy. Conversely, complex models like deep learning, which offer high accuracy, can be difficult for humans to understand and interpret, essentially becoming a 'closed box'.

Can you explain the concept of 'xGoals' as mentioned in the Bundesliga example?

-xGoals, as mentioned in the Bundesliga example, refers to expected goals statistics. It uses a machine learning model trained on Amazon SageMaker to estimate goal-scoring chances in real time based on 16 different factors. With the help of SageMaker Clarify, Bundesliga can explain the key underlying components that influence a given xGoals value.
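Explaining an xGoals value means attributing a single prediction to its input features. The Clarify documentation describes its feature attributions as based on Shapley values (computed with Kernel SHAP). As a simplified stand-in, the sketch below computes exact Shapley values by brute force, replacing "absent" features with a baseline. It is only feasible for a handful of features because it evaluates the model on every subset. The three-feature `toy_xgoals` model and its baseline shot are purely hypothetical and are not the Bundesliga model with its 16 factors.

```python
import math
from itertools import combinations
from typing import Callable, List, Sequence, Set


def shapley_values(
    model: Callable[[List[float]], float],
    x: Sequence[float],
    baseline: Sequence[float],
) -> List[float]:
    """Exact Shapley values; features outside a coalition take their baseline value."""
    n = len(x)

    def value(coalition: Set[int]) -> float:
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):  # coalition sizes 0 .. n-1 drawn from the other features
            weight = math.factorial(size) * math.factorial(n - size - 1) / math.factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def toy_xgoals(features: List[float]) -> float:
    """Hypothetical shot model: distance (m), angle (deg), defenders in the way."""
    distance, angle, defenders = features
    logit = 1.0 - 0.15 * distance + 0.04 * angle - 0.5 * defenders
    return 1.0 / (1.0 + math.exp(-logit))


shot = [12.0, 30.0, 1.0]
average_shot = [20.0, 20.0, 2.0]  # hypothetical baseline
phi = shapley_values(toy_xgoals, shot, average_shot)
for name, contribution in zip(["distance", "angle", "defenders"], phi):
    print(f"{name}: {contribution:+.3f}")
# Efficiency property: contributions add up to f(shot) - f(baseline).
print(math.isclose(sum(phi), toy_xgoals(shot) - toy_xgoals(average_shot)))  # True
```

The efficiency check is what makes Shapley-style attributions easy to communicate: each feature's share is a piece of the gap between this shot's probability and the baseline shot's probability.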

How does SageMaker Clarify integrate with other SageMaker components?

-SageMaker Clarify integrates with other SageMaker components such as Studio, Data Wrangler, Debugger, Experiments, Model Monitor, and Pipelines. It offers APIs and core libraries that are used for bias detection, mitigation, and explainability, and are optimized to run on AWS.

What is the importance of explainability for a company like Prudential Financial?

-For Prudential Financial, explainability is crucial because it helps build trust with customers and regulators by providing transparency into how data is collected, how features are generated, which algorithms are used, and how AI systems make decisions. It supports an open and honest dialogue, which is fundamental to the company's relationship with its customers.

How does SageMaker Clarify help Prudential Financial address the challenges of explaining AI models?

-SageMaker Clarify gives Prudential Financial multiple algorithmic choices that can be easily combined, providing flexibility. It also optimizes and parallelizes the algorithms, so the company gets results more quickly. This helps in explaining AI models to various stakeholders, including regulators and customers.

What are the next steps for Prudential Financial in terms of using SageMaker Clarify?

-Prudential Financial's next steps include scaling up by taking on multiple new use cases and larger datasets and algorithms. The company is also actively considering SageMaker Clarify as part of its future governance, so that every model on Prudential's AI/ML platform incorporates explainability.

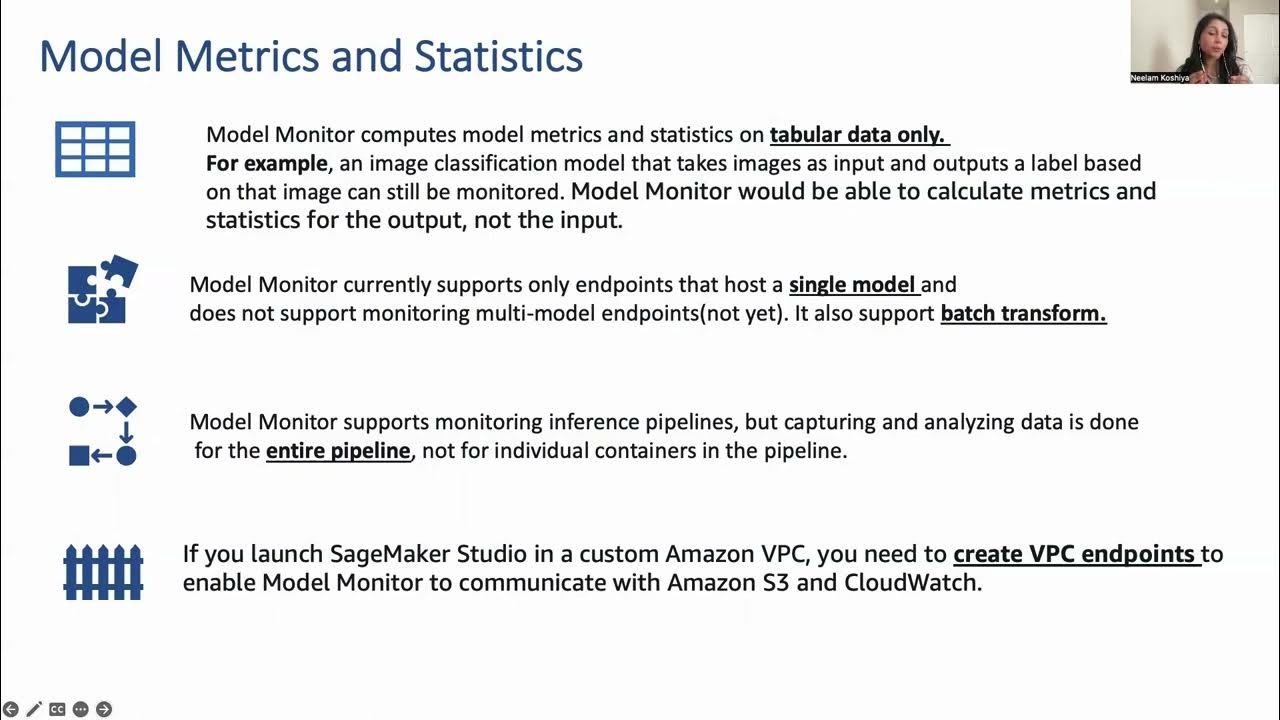

How can bias and explainability metrics be monitored over time using SageMaker Model Monitor?

-Bias and explainability metrics can be monitored over time using SageMaker Model Monitor by deploying an endpoint with data capture enabled and creating a model monitoring schedule. This allows for the visualization and understanding of how these metrics change, ensuring that they remain stable and indicating when it might be necessary to collect more data or retrain the model.
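Model Monitor handles the scheduling and data capture; the comparison it enables can be illustrated with a much smaller piece of logic. The sketch below is a hypothetical helper, not a Model Monitor API. It compares bias and explainability metrics computed on successive windows of captured traffic against baseline values and reports any that move beyond a per-metric tolerance, the kind of signal that might prompt collecting more data or retraining. DPPL (Difference in Positive Proportions in Predicted Labels) is one of Clarify's post-training bias metrics; the `distance_attr` attribution, window labels, values, and tolerances are all made-up assumptions.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class DriftAlert:
    window: str
    metric: str
    baseline: float
    observed: float


def check_metric_drift(
    baseline: Dict[str, float],
    windows: Dict[str, Dict[str, float]],
    tolerance: Dict[str, float],
    default_tolerance: float = 0.1,
) -> List[DriftAlert]:
    """Flag every (window, metric) whose value moved more than its tolerance from the baseline."""
    alerts: List[DriftAlert] = []
    for window, metrics in windows.items():
        for name, observed in metrics.items():
            if name not in baseline:
                continue  # no baseline value to compare against
            limit = tolerance.get(name, default_tolerance)
            if abs(observed - baseline[name]) > limit:
                alerts.append(DriftAlert(window, name, baseline[name], observed))
    return alerts


# Hypothetical values: DPPL is a bias metric, "distance_attr" a feature attribution.
baseline = {"DPPL": 0.05, "distance_attr": 0.30}
windows = {
    "week_1": {"DPPL": 0.06, "distance_attr": 0.28},
    "week_2": {"DPPL": 0.21, "distance_attr": 0.12},
}
tolerance = {"DPPL": 0.1, "distance_attr": 0.1}
for alert in check_metric_drift(baseline, windows, tolerance):
    print(alert)
```

In this made-up run, week 1 stays within tolerance while week 2 drifts on both the bias metric and the attribution, which is the pattern that would warrant a closer look.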
