Fine Tuning Microsoft DialoGPT for building custom Chatbot || Step-by-step guide

Summary

TLDR: This video demonstrates how to fine-tune a conversational chatbot using the DialoGPT-medium model and a multi-turn dialogue dataset. It walks through the process in a Google Colab environment, covering library installation, data preprocessing, and model setup with Hugging Face's Transformers library. The video explains how to load the model, tokenize inputs, and handle conversation history for coherent responses. It also shows how to set up a training pipeline with an 80-20 data split and use the Trainer module to fine-tune the chatbot. Results before and after fine-tuning are compared.

Takeaways

- 💻 Fine-tuning a conversational chatbot requires a conversational dataset and a pre-trained model such as Microsoft's DialoGPT-medium.

- 🛠 The necessary libraries for fine-tuning include datasets, torch, torchvision, torchaudio, and transformers.

- 🚀 The tutorial demonstrates how to fine-tune a DialoGPT model on a multi-turn dialogue dataset in a Google Colab environment.

- 🧑‍💻 Padding is crucial during fine-tuning: because the tokenizer has no pad token by default, the EOS token is used as the pad token for batched inputs.

- 🤖 DialoGPT is a causal language model, so it is fine-tuned with a causal language modeling (next-token prediction) objective.

- 📊 The dataset must be pre-processed, and input features are created by concatenating context and response for conversation continuity.

- 📄 The dataset object is created using the datasets library, which makes it compatible with the trainer module in the Transformers library.

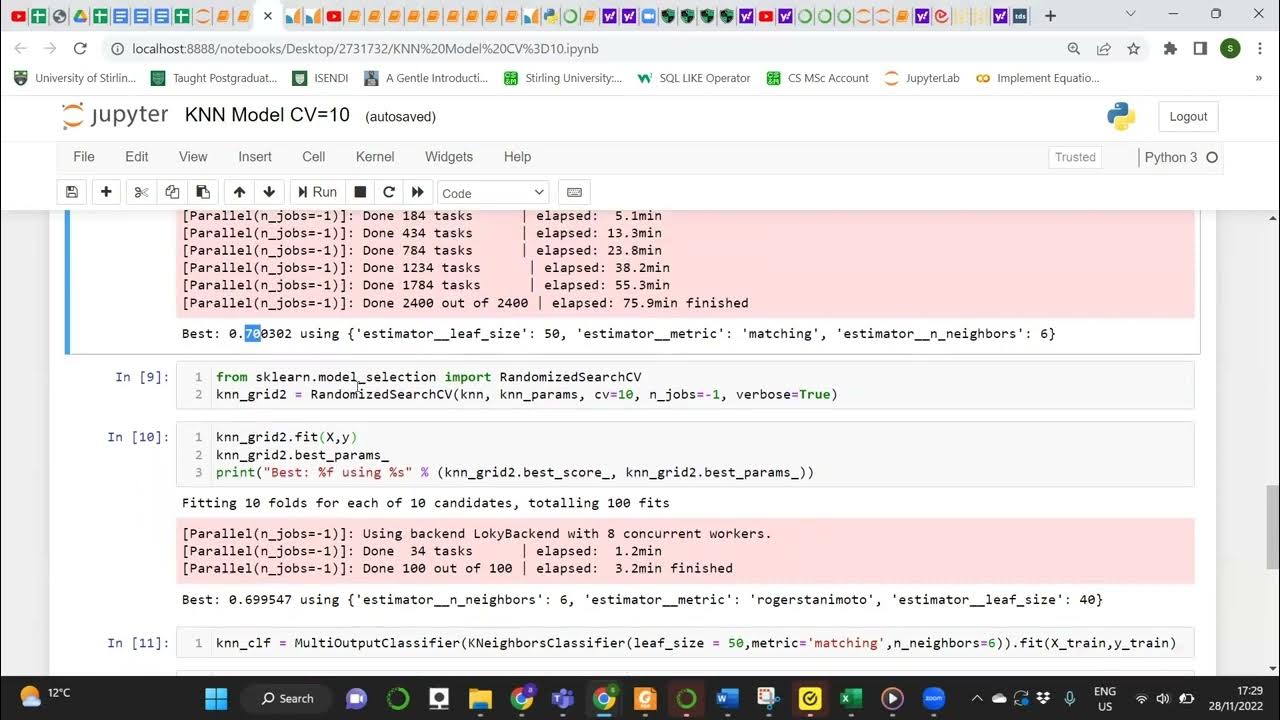

- 📈 The training pipeline involves splitting the dataset into train and test sets (80-20) and setting up training arguments, including batch size and learning rate.

- 🔍 The fine-tuning process is evaluated before and after training, showing a significant improvement in loss, from 7.86 to 1.02 after 10 epochs.

- 🎯 The overall approach provides a detailed method to fine-tune a DialoGPT model, enabling custom conversational chatbot development.

Q & A

What is the purpose of the video?

-The video explains how to fine-tune a conversational chatbot on a conversational dataset. It demonstrates fine-tuning the DialoGPT-medium model on a multi-turn dialogue dataset to create a custom chatbot.

Which model is being used for fine-tuning in this video?

-The model being fine-tuned is Microsoft's DialoGPT-medium (`microsoft/DialoGPT-medium`) from the Hugging Face Hub.

Why are the Transformers Auto classes used instead of pipelines?

-Auto classes are used instead of pipelines because the fine-tuning process requires more control over how the model is loaded and used. Pipelines are generally more straightforward but less flexible when it comes to fine-tuning tasks.

Why do we need to check if the pad token is missing, and what happens if it is?

-Many GPT-based models, including Microsoft's DialoGPT, lack a pad token by default. A pad token is needed for batch processing, so if it is missing, the end-of-sequence (EOS) token is assigned as the pad token to allow batching during fine-tuning.
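The pad-token check described above can be sketched as follows. The helper names `ensure_pad_token` and `load_dialogpt` are hypothetical; the video may simply perform the check inline. `AutoTokenizer` and `AutoModelForCausalLM` are the Transformers Auto classes for causal language models.

```python
def ensure_pad_token(tokenizer):
    """Reuse the EOS token as the pad token when the tokenizer has none."""
    if tokenizer.pad_token is None:
        tokenizer.pad_token = tokenizer.eos_token
    return tokenizer


def load_dialogpt(model_name="microsoft/DialoGPT-medium"):
    """Load the tokenizer and causal LM via the Auto classes (downloads weights)."""
    # Imported lazily so ensure_pad_token can be used without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = ensure_pad_token(AutoTokenizer.from_pretrained(model_name))
    model = AutoModelForCausalLM.from_pretrained(model_name)
    return tokenizer, model
```

Calling `load_dialogpt()` in a Colab cell would return a tokenizer whose `pad_token` equals its `eos_token`, ready for batched inputs.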

What dataset is used for fine-tuning, and how is it structured?

-The dataset used is a multi-turn dialogue dataset. It is structured to maintain the continuity of a conversation, with the context being concatenated with the response to create input features for fine-tuning the model.

How is the data pre-processed before fine-tuning?

-The data is pre-processed by concatenating context and response to create a single input variable. The dataset is then converted from a pandas DataFrame into a dataset object using the `datasets` library for easier handling during fine-tuning.
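A minimal sketch of the concatenation step, assuming turns are joined with DialoGPT's EOS token `<|endoftext|>` (the separator the model was trained with; the summary does not state which separator the video uses). The function names, the `text` column, and the `max_context` window are hypothetical.

```python
EOS_TOKEN = "<|endoftext|>"  # DialoGPT / GPT-2 end-of-sequence token


def build_training_text(context_turns, response, eos_token=EOS_TOKEN):
    """Join the context turns and the response into one EOS-separated string."""
    turns = list(context_turns) + [response]
    return eos_token.join(turn.strip() for turn in turns) + eos_token


def build_rows(conversation, max_context=3, eos_token=EOS_TOKEN):
    """Create one training row per reply, each with up to max_context prior turns."""
    rows = []
    for i in range(1, len(conversation)):
        context = conversation[max(0, i - max_context):i]
        rows.append({"text": build_training_text(context, conversation[i], eos_token)})
    return rows
```

Rows like these can be collected in a pandas DataFrame and converted with `datasets.Dataset.from_pandas(df)`, which matches the conversion the answer describes.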

How does the model handle multi-turn conversations during inference?

-The model uses a conversation history mechanism. Each new input is appended to the history of input IDs from previous turns, and this concatenated input is passed to the model to generate a response, maintaining the flow of the conversation.
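The history bookkeeping can be illustrated with plain Python lists standing in for tensors of input IDs. This is a sketch under assumptions: `generate_fn` is a hypothetical stand-in for the model's generation call that returns only the newly generated token IDs, and the `max_history` truncation is an addition not mentioned in the summary.

```python
def chat_turn(history_ids, user_ids, eos_id, generate_fn, max_history=1000):
    """Run one conversation turn and return (reply_ids, updated_history)."""
    # Append the new user turn, terminated by EOS, to the running history.
    prompt = history_ids + user_ids + [eos_id]
    # Keep only the most recent tokens so the prompt fits the context window.
    prompt = prompt[-max_history:]
    # The model sees the whole concatenated history and produces the reply.
    reply_ids = generate_fn(prompt)
    # The reply becomes part of the history for the next turn.
    new_history = prompt + reply_ids + [eos_id]
    return reply_ids, new_history
```

With a real model, the same pattern is usually written with `torch.cat` on tensors of input IDs and `model.generate`, slicing off the prompt to recover the reply.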

What tokenization and padding methods are used during the fine-tuning process?

-The inputs are tokenized with padding set to `max_length` and a maximum length of 40 tokens. Padding ensures that all inputs in a batch have the same length, which batched supervised fine-tuning requires.
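To show what fixed-length padding produces, here is a plain-Python imitation of a tokenizer call with `padding="max_length"`, `truncation=True`, and `max_length=40`. The function name is hypothetical. Setting padded label positions to -100, the index the loss ignores, is an assumption added here; the summary does not say whether the video masks them.

```python
def pad_to_max_length(token_ids, pad_id, max_length=40):
    """Truncate or right-pad token IDs to max_length and build mask and labels."""
    ids = list(token_ids[:max_length])
    n_pad = max_length - len(ids)
    input_ids = ids + [pad_id] * n_pad
    # 1 marks real tokens, 0 marks padding.
    attention_mask = [1] * len(ids) + [0] * n_pad
    # For causal LM fine-tuning the labels are the inputs themselves;
    # padded positions get -100 so they do not contribute to the loss.
    labels = [tok if m == 1 else -100 for tok, m in zip(input_ids, attention_mask)]
    return {"input_ids": input_ids, "attention_mask": attention_mask, "labels": labels}
```

Masking matters here because the pad token is the EOS token: without it, the model would also be trained to predict long runs of EOS after every example.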

How is the dataset split for training and evaluation?

-The dataset is split into an 80/20 ratio, with 80% of the data used for training and 20% for evaluation. This split ensures that the model is trained on most of the data while reserving a portion for testing its performance.
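With a `datasets.Dataset` object, a split like this is typically done with `dataset.train_test_split(test_size=0.2)`. The plain-Python sketch below shows the same idea; the function name and the fixed seed are assumptions, not details from the video.

```python
import random


def split_80_20(rows, test_size=0.2, seed=42):
    """Shuffle rows reproducibly and return (train_rows, test_rows)."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = int(round(len(shuffled) * test_size))
    return shuffled[n_test:], shuffled[:n_test]
```

Fixing the seed keeps the evaluation set identical across runs, so losses measured before and after fine-tuning refer to the same examples.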

What are the key arguments passed to the trainer module during fine-tuning?

-Key arguments include the output directory, number of training epochs, batch sizes for training and evaluation, learning rate, weight decay, and precision (FP16 is used for faster training in this example). These parameters control the fine-tuning process.
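The arguments listed above map onto keyword arguments of `transformers.TrainingArguments`. In the sketch below only the epoch count (10) and the use of FP16 come from the summary; the output path, batch sizes, learning rate, and weight decay are illustrative placeholders, and `build_training_arguments` is a hypothetical helper.

```python
training_kwargs = {
    "output_dir": "./dialogpt-finetuned",  # hypothetical path
    "num_train_epochs": 10,                # stated in the summary
    "per_device_train_batch_size": 8,      # illustrative value
    "per_device_eval_batch_size": 8,       # illustrative value
    "learning_rate": 5e-5,                 # illustrative value
    "weight_decay": 0.01,                  # illustrative value
    "fp16": True,                          # mixed precision; needs a CUDA GPU
}


def build_training_arguments(kwargs=training_kwargs):
    """Turn the settings into a TrainingArguments object for the Trainer."""
    # Imported lazily so the settings can be inspected without transformers.
    from transformers import TrainingArguments

    return TrainingArguments(**kwargs)
```

The resulting object is passed to `Trainer` together with the model and the train and evaluation datasets.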

What was the evaluation loss before and after fine-tuning?

-The evaluation loss before fine-tuning was 7.86, and after fine-tuning for 10 epochs, the evaluation loss dropped to 1.02, indicating a significant improvement in the model's performance.
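If the reported evaluation loss is the mean per-token cross-entropy in nats (the usual case for causal language models trained with the Trainer, though the summary does not say so explicitly), it can be converted to perplexity to make the improvement easier to interpret. A small sketch under that assumption:

```python
import math


def perplexity(eval_loss):
    """Convert a mean per-token cross-entropy loss (in nats) to perplexity."""
    return math.exp(eval_loss)


before = perplexity(7.86)  # loss reported before fine-tuning
after = perplexity(1.02)   # loss reported after 10 epochs
print(f"perplexity before: {before:.1f}, after: {after:.2f}")
```

Under this reading, perplexity falls from the thousands to below 3. A loss this low on a small dataset can also indicate that the model fits the data very closely, so trying the chatbot on unseen prompts remains worthwhile.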
