Concurrency in Go

Summary

TLDR: This video delves into concurrency in Go, explaining how it differs from parallelism and why it's a key feature of the language. The speaker demonstrates goroutines, channels, wait groups, and worker pools to illustrate how Go handles concurrent tasks. Key concepts include blocking operations, synchronization, and efficient CPU usage across multiple cores. The video also introduces advanced constructs like buffered channels and select statements, showing how to manage concurrent tasks effectively. It's a practical guide for Go programmers to write efficient, concurrent code.

Takeaways

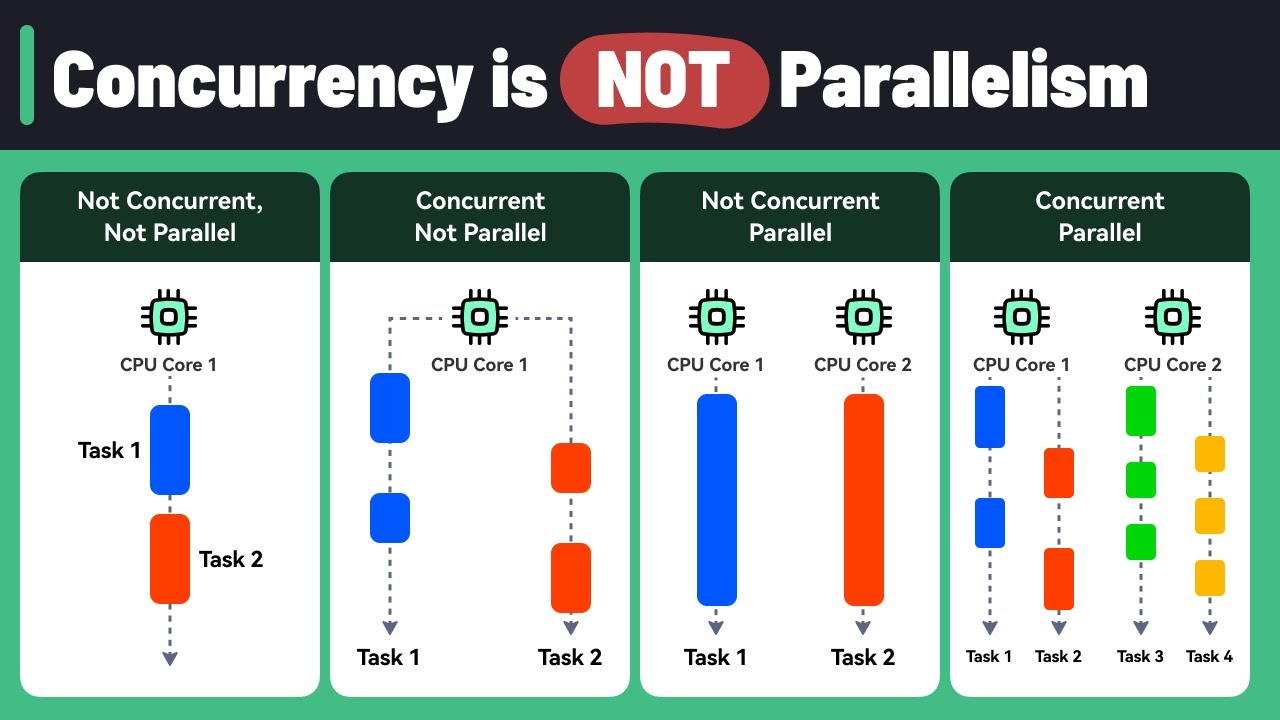

- 🖥️ Go supports concurrency, which allows programs to run tasks independently, but it’s not the same as parallelism.

- 🔄 Concurrency involves breaking a program into tasks that can potentially run simultaneously, but parallelism specifically refers to running multiple tasks at the exact same time on different CPU cores.

- 🐑 Placing `go` before a function call starts a goroutine: the call runs concurrently with the rest of the program, and the caller does not wait for it to finish.

- ⏳ When the main goroutine finishes, the entire program terminates, even if other goroutines are still running, so you need to handle this to avoid premature termination.

- ⌛ A WaitGroup can be used to wait for multiple goroutines to finish before the main program continues, making it a practical way to synchronize concurrent processes.

- 🛠️ Channels in Go provide a way for goroutines to communicate with each other, acting as a pipeline for sending and receiving messages between them.

- 🚫 Sending and receiving on an unbuffered channel are blocking operations: the sender waits until a receiver is ready and vice versa, which can cause deadlocks if not handled properly.

- 🔀 The `select` statement lets a goroutine wait on multiple channels at once and proceed with whichever is ready first, so a fast channel is not held up behind a slower one.

- 👷 Worker pools, a common concurrency pattern in Go, allow multiple workers to handle tasks from a job queue concurrently, improving program efficiency.

- ⚙️ Buffered channels can hold a fixed number of values without blocking the sender, which decouples sender and receiver; once the buffer is full, a send blocks, and it deadlocks if no goroutine ever receives.

Q & A

What is the difference between concurrency and parallelism in Go?

-Concurrency is the ability to break a program into independently executing tasks that can run simultaneously, but it doesn't guarantee that they will. Parallelism, on the other hand, means running multiple tasks at exactly the same time on multiple CPU cores. Go focuses on concurrency by letting you write programs that could run in parallel if the system supports it.
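Whether concurrent tasks actually run in parallel depends on how many cores the runtime may use. As a small sketch, the standard `runtime` package can report this; the helper name `parallelismLimits` is an assumption made for this example.

```go
package main

import (
	"fmt"
	"runtime"
)

// parallelismLimits is a hypothetical helper. It returns the number of
// logical CPUs and the current GOMAXPROCS setting, which caps how many
// goroutines can execute Go code at the same instant.
func parallelismLimits() (cpus, procs int) {
	// Passing 0 queries GOMAXPROCS without changing it.
	return runtime.NumCPU(), runtime.GOMAXPROCS(0)
}

func main() {
	cpus, procs := parallelismLimits()
	fmt.Println("logical CPUs:", cpus, "GOMAXPROCS:", procs)
}
```

With `GOMAXPROCS` set to 1, goroutines are still concurrent, but they take turns rather than running in parallel.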

What is a goroutine, and how do you create one?

-A goroutine is a lightweight thread managed by Go’s runtime. It allows functions to run concurrently. To create a goroutine, you place the `go` keyword before a function call; the call then runs concurrently in the background while the caller continues.
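A minimal sketch of starting a goroutine might look like the following. The names `greet` and `startGreeting` are made up for illustration, and a channel is used here only so the caller can wait for the result.

```go
package main

import "fmt"

// greet is a hypothetical function used only for illustration.
// It sends a greeting on the out channel.
func greet(name string, out chan<- string) {
	out <- "hello, " + name
}

// startGreeting launches greet as a goroutine and waits for its message.
func startGreeting(name string) string {
	out := make(chan string)
	go greet(name, out) // returns immediately; greet runs concurrently
	return <-out        // block until the goroutine sends its result
}

func main() {
	fmt.Println(startGreeting("gopher"))
}
```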

What happens if the main goroutine finishes before other goroutines?

-If the main goroutine finishes, the program terminates, regardless of whether other goroutines are still running. To prevent this, you can use a synchronization mechanism like a `WaitGroup` to wait for all goroutines to finish before exiting the program.

How does a `WaitGroup` work in Go?

-A `WaitGroup` in Go is used to wait for a collection of goroutines to finish executing. You call `wg.Add()` to increment the counter, normally just before launching each goroutine, and each goroutine calls `wg.Done()` to decrement it when it finishes. The `wg.Wait()` method blocks the calling goroutine (typically main) until the counter reaches zero.
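The `Add`/`Done`/`Wait` cycle can be sketched as below. The function `squareAll` is a hypothetical example, not something taken from the video; it starts one goroutine per input and waits for all of them.

```go
package main

import (
	"fmt"
	"sync"
)

// squareAll is a hypothetical helper that squares each input in its own
// goroutine and returns once every goroutine has finished.
func squareAll(nums []int) []int {
	results := make([]int, len(nums))
	var wg sync.WaitGroup
	for i, n := range nums {
		wg.Add(1) // increment before the goroutine starts
		go func(i, n int) {
			defer wg.Done()    // decrement when this goroutine returns
			results[i] = n * n // each goroutine writes only its own index
		}(i, n)
	}
	wg.Wait() // block until the counter is back to zero
	return results
}

func main() {
	fmt.Println(squareAll([]int{1, 2, 3}))
}
```

Calling `wg.Add(1)` in the launching goroutine, rather than inside the new one, avoids a race in which `wg.Wait()` runs before the counter has been incremented.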

What is a channel in Go, and how is it used?

-A channel in Go is a communication mechanism that allows goroutines to send and receive values. Channels have a type, and you send or receive values of that type using the arrow operator (`<-`). Unbuffered channels, the default, are blocking: the sender waits until a receiver is ready and vice versa.
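As an illustration of typed channels and the arrow operator, here is a small sketch with two unbuffered channels. The names `double` and `doubleViaChannels` are assumptions for the example.

```go
package main

import "fmt"

// double is a hypothetical worker: it receives one int, doubles it,
// and sends the result back on a second channel.
func double(in <-chan int, out chan<- int) {
	v := <-in    // receive blocks until a value is sent
	out <- v * 2 // send blocks until someone receives
}

// doubleViaChannels passes x through the worker goroutine.
func doubleViaChannels(x int) int {
	in := make(chan int)  // unbuffered channel of int
	out := make(chan int) // unbuffered channel of int
	go double(in, out)
	in <- x      // hands x directly to the waiting goroutine
	return <-out // waits for the doubled value
}

func main() {
	fmt.Println(doubleViaChannels(21))
}
```

The directional types `<-chan int` and `chan<- int` are optional, but they let the compiler check that each side only receives or only sends.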

What does closing a channel do in Go?

-Closing a channel signals that no more values will be sent on it. This is useful for preventing deadlocks when receivers are waiting for values. Receivers can check whether a channel is closed by receiving a second value (a boolean) from the channel.
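A sketch of closing a channel, assuming a hypothetical producer `generate` and consumer `sum`: the `range` loop ends when the channel is closed, and the two-value receive reports `false` once a closed channel has been drained.

```go
package main

import "fmt"

// generate is a hypothetical producer that sends 1..n and then closes
// the channel to signal that no more values will follow.
func generate(n int) <-chan int {
	ch := make(chan int)
	go func() {
		defer close(ch)
		for i := 1; i <= n; i++ {
			ch <- i
		}
	}()
	return ch
}

// sum receives until the channel is closed; range stops at that point.
func sum(ch <-chan int) int {
	total := 0
	for v := range ch {
		total += v
	}
	return total
}

func main() {
	fmt.Println(sum(generate(4)))

	// The second value is false when the channel is closed and empty.
	v, ok := <-generate(0)
	fmt.Println(v, ok)
}
```

Only the sender should close a channel; sending on a closed channel panics.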

What causes a deadlock in Go, and how can it be avoided?

-A deadlock occurs when a goroutine is waiting for something that will never happen, like trying to receive from an empty channel that has no sender. Deadlocks can be avoided by closing channels once no more values will be sent, or by ensuring that every send operation has a corresponding receive.
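To make the failure mode concrete, the sketch below keeps the deadlocking line as a comment and shows one possible fix: moving the send into its own goroutine so that a receiver can exist. The name `sendThenReceive` is hypothetical.

```go
package main

import "fmt"

// sendThenReceive is a hypothetical helper showing a send paired with
// a receive on an unbuffered channel.
func sendThenReceive(v int) int {
	ch := make(chan int)

	// Deadlock if uncommented: the send would block this goroutine
	// forever because nothing else can ever receive. When every
	// goroutine is blocked, the runtime aborts with
	// "fatal error: all goroutines are asleep - deadlock!".
	// ch <- v

	// Fix: send from a separate goroutine so the receive below can
	// pair with it.
	go func() { ch <- v }()
	return <-ch
}

func main() {
	fmt.Println(sendThenReceive(7))
}
```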

What is the role of buffered channels in Go?

-Buffered channels allow sending a specified number of values without blocking, even if no receiver is ready. The send operation only blocks when the buffer is full. This is useful for situations where a producer needs to send multiple values before a consumer is ready.
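A small sketch of this behaviour, assuming a hypothetical helper `bufferedRoundTrip`: every value is sent before any receiver runs, which works only because the buffer is large enough to hold them all.

```go
package main

import "fmt"

// bufferedRoundTrip is a hypothetical helper. It sends every value
// into a buffered channel from a single goroutine and only afterwards
// receives them back, in the same order.
func bufferedRoundTrip(vals []string) []string {
	ch := make(chan string, len(vals)) // capacity equals number of sends
	for _, v := range vals {
		ch <- v // does not block: the buffer still has room
	}
	close(ch)

	out := make([]string, 0, len(vals))
	for v := range ch { // buffered values remain readable after close
		out = append(out, v)
	}
	return out
}

func main() {
	fmt.Println(bufferedRoundTrip([]string{"a", "b", "c"}))
}
```

With an unbuffered channel, or a buffer smaller than the number of sends, the first send that finds no room would block and this single-goroutine version would deadlock.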

What is a select statement in Go, and how is it used?

-A `select` statement in Go lets you wait on multiple channel operations. It blocks until one of its cases can proceed. This allows you to receive from whichever channel is ready first, making it useful when handling multiple concurrent goroutines communicating via channels.
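As a sketch, a `select` over two channels could be written like this. The helper `firstReady`, the channel names, and the sleep durations are illustrative assumptions.

```go
package main

import (
	"fmt"
	"time"
)

// firstReady is a hypothetical helper that returns the first message
// available on either channel.
func firstReady(a, b <-chan string) string {
	select { // blocks until one of the cases can proceed
	case msg := <-a:
		return msg
	case msg := <-b:
		return msg
	}
}

func main() {
	// Buffered with capacity 1 so the slower sender is not left
	// blocked after firstReady has already returned.
	fast := make(chan string, 1)
	slow := make(chan string, 1)

	go func() {
		time.Sleep(10 * time.Millisecond)
		fast <- "fast"
	}()
	go func() {
		time.Sleep(200 * time.Millisecond)
		slow <- "slow"
	}()

	fmt.Println(firstReady(fast, slow))
}
```

If several cases are ready at the same moment, `select` picks one of them at random, so code should not rely on case order.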

What is a worker pool in Go, and how does it function?

-A worker pool in Go is a design pattern where multiple goroutines (workers) are used to perform tasks from a job queue concurrently. Each worker pulls a job from the queue, processes it, and then sends the result to a results channel. This can improve throughput by letting the runtime spread work across multiple CPU cores.
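One way to sketch the pattern, under the assumption that a "job" is just an integer to be squared: `worker` and `runPool` are hypothetical names, and both channels are buffered to the number of jobs so that no send blocks.

```go
package main

import (
	"fmt"
	"sync"
)

// worker is a hypothetical worker: it pulls jobs until the jobs
// channel is closed and sends each squared value to results.
func worker(jobs <-chan int, results chan<- int, wg *sync.WaitGroup) {
	defer wg.Done()
	for n := range jobs {
		results <- n * n
	}
}

// runPool starts numWorkers workers, feeds them the inputs, and
// returns the sum of all results.
func runPool(numWorkers int, inputs []int) int {
	jobs := make(chan int, len(inputs))
	results := make(chan int, len(inputs))

	var wg sync.WaitGroup
	for w := 0; w < numWorkers; w++ {
		wg.Add(1)
		go worker(jobs, results, &wg)
	}

	for _, n := range inputs {
		jobs <- n
	}
	close(jobs) // lets each worker's range loop finish

	wg.Wait()      // all workers are done sending
	close(results) // safe to close now; lets the loop below finish

	total := 0
	for r := range results {
		total += r
	}
	return total
}

func main() {
	fmt.Println(runPool(3, []int{1, 2, 3, 4}))
}
```

Results arrive in no particular order, which is why this sketch combines them with a sum rather than relying on their position.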
