CONCURRENCY IS NOT WHAT YOU THINK

Summary

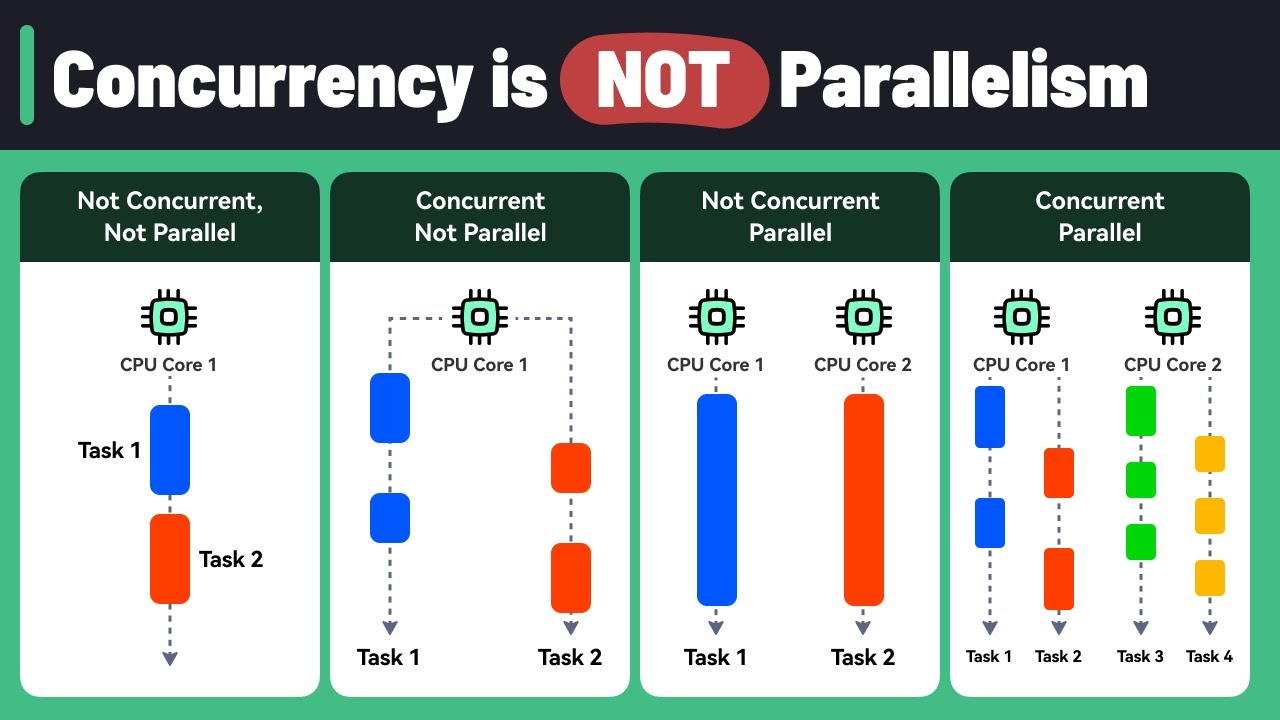

TLDR: This video script explores concurrency in computing, a technique that lets multiple processes share a single CPU by interleaving their execution so that they appear to run simultaneously. It traces the evolution from mainframes to personal computers, explaining how early operating systems enabled multitasking. The script delves into CPU operation, detailing how processes are managed through queues and scheduling. It contrasts cooperative and preemptive scheduling, highlighting the importance of hardware support for effective multitasking. The video concludes by discussing the role of multi-core systems in enhancing parallelism and the future of complex computing concepts.

Takeaways

- 💡 Multitasking has been possible since the era of single-core CPUs, challenging the misconception that it's solely a feature of multicore systems.

- 🏛️ Early mainframe computers were massive, expensive, and limited in number, leading to the development of multitasking to maximize their usage.

- 🔄 Running a program on early computers involved multiple manual steps, including loading compilers and assemblers, which was time-consuming and inefficient.

- 👥 The advent of time-sharing operating systems like Multics allowed multiple users to connect to a single computer, improving resource utilization.

- 🔄 The concept of concurrency was pivotal in allowing the execution of multiple processes by interleaving them, giving the illusion of simultaneous execution.

- 🖥️ Personal computers initially could only run one program at a time, but with the influence of systems like Unix, they evolved to support multitasking.

- 🤖 CPUs handle instructions in a cycle of fetch, decode, and execute, with jump instructions allowing for non-linear execution paths.

- 🕒 Operating systems manage process execution through a scheduler, which assigns CPU time to processes in a queue, ensuring fairness in resource allocation.

- ⏰ Preemptive scheduling, enabled by hardware timers, prevents any single process from monopolizing CPU resources, enhancing system security and responsiveness.

- 🔩 Multi-core systems enhance processing power by allowing true parallelism, but concurrency remains essential for efficient resource management when there are more processes than cores.

Q & A

What is the main focus of the video script?

-The main focus of the video script is to explain the fundamentals of concurrency, a technique that lets a computer make progress on multiple processes over the same period, even with a single CPU, by interleaving their execution.

How did early computers manage to run multiple programs despite having a single processor?

-Early computers managed to run multiple programs by breaking them down into smaller segments and interleaving those segments, so that the CPU alternated between programs and created the illusion of multitasking.
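
The interleaving described above can be sketched in a few lines of Python. This is only an illustration, not how any real operating system is written: the `program` and `interleave` names are hypothetical, and each generator `yield` stands in for the end of one program segment.

```python
def program(name, steps):
    """A hypothetical 'program' split into segments; each yield ends one segment."""
    for i in range(1, steps + 1):
        yield f"{name}: step {i}"


def interleave(programs):
    """Run one segment of each program in turn until every program has finished."""
    trace = []
    active = list(programs)
    while active:
        still_running = []
        for p in active:
            try:
                trace.append(next(p))      # execute the next segment of this program
                still_running.append(p)    # it may have more segments left
            except StopIteration:
                pass                       # this program has finished
        active = still_running
    return trace


trace = interleave([program("A", 3), program("B", 2)])
for line in trace:
    print(line)
```

Only one segment runs at any instant, yet both programs make progress over the same period, which is the illusion of simultaneity the answer refers to.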

What is the significance of the invention of transistors in the context of the script?

-The invention of transistors allowed mainframes to become smaller and more affordable, which eventually led to the development of personal computers and the need for multitasking capabilities.

Why were early computers designed to be used by one user at a time?

-Early computers were designed for single-user use due to their high cost and limited availability. They were massive and expensive, making it impractical for multiple users to access them simultaneously.

What is the role of an operating system in managing multiple processes on a single CPU?

-An operating system manages multiple processes by using a scheduler to allocate CPU time to each process in a queue, so that each process gets a fair share of processing time and the CPU stays busy whenever there is work to do.
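
As a rough sketch of the queue-based scheduling described above, the following simulates a round-robin policy. Round-robin is chosen here as an assumption for illustration (the summary does not say which policy the video uses), and the process names, burst times, and `quantum` parameter are all made up.

```python
from collections import deque


def round_robin(burst_times, quantum):
    """Simulate a round-robin scheduler.

    burst_times: dict mapping process name -> CPU time units it still needs.
    quantum: maximum time units a process may run before going back in the queue.
    Returns (timeline, finished), where timeline lists (name, units_run) slices.
    """
    ready = deque(burst_times.items())   # the ready queue
    timeline = []
    finished = []
    while ready:
        name, remaining = ready.popleft()        # pick the process at the front
        run = min(quantum, remaining)
        timeline.append((name, run))
        remaining -= run
        if remaining > 0:
            ready.append((name, remaining))      # not done: back of the queue
        else:
            finished.append(name)
    return timeline, finished


timeline, finished = round_robin({"editor": 3, "compiler": 5, "player": 2}, quantum=2)
print(timeline)
print(finished)
```

Every process gets a turn before any process gets a second one, which is one simple way to read "a fair share of processing time".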

What is the difference between cooperative scheduling and preemptive scheduling as explained in the script?

-Cooperative scheduling relies on processes voluntarily giving up control of the CPU, whereas preemptive scheduling uses hardware timers to forcibly take control of the CPU from processes that do not relinquish it in a timely manner.
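
The contrast can be made concrete with a small simulation. This is a sketch under stated assumptions: the `simulate` function, the "greedy" and "polite" processes, and the idea of a fixed `voluntary_slice` are hypothetical simplifications, not taken from the video.

```python
from collections import deque


def simulate(processes, quantum=None):
    """Simulate scheduling of (name, total_work, voluntary_slice) processes.

    voluntary_slice: units a process runs before yielding on its own;
                     None means it never yields voluntarily.
    quantum: None models cooperative scheduling; an int models preemptive
             scheduling, where a timer ends the slice after that many units.
    Returns a timeline of (name, units_run) slices.
    """
    ready = deque(processes)
    timeline = []
    while ready:
        name, remaining, voluntary_slice = ready.popleft()
        run = remaining if voluntary_slice is None else min(voluntary_slice, remaining)
        if quantum is not None:
            run = min(run, quantum)              # the timer cuts the slice short
        timeline.append((name, run))
        remaining -= run
        if remaining > 0:
            ready.append((name, remaining, voluntary_slice))
    return timeline


procs = [("greedy", 6, None), ("polite", 2, 1)]
cooperative = simulate(procs)
preemptive = simulate(procs, quantum=2)
print(cooperative)
print(preemptive)
```

Under the cooperative run, the process that never yields holds the CPU until it finishes; under the preemptive run, the other process gets its first turn after at most one quantum.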

Why was the development of multi-core systems significant for multitasking?

-Multi-core systems allow for true parallelism and simultaneous execution of multiple processes, as each core can handle a separate process, reducing the need for context switching and improving overall performance.

How does the CPU handle instructions as described in the script?

-The CPU handles instructions through a cycle of fetch, decode, and execute. It uses special registers like the instruction register and the address register to manage the flow of instructions.
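
A toy interpreter can illustrate the fetch, decode, and execute cycle and the effect of a jump instruction. The four-instruction set below (`LOAD`, `ADD`, `JUMP_IF_LESS`, `HALT`) is invented for this sketch and does not correspond to any real CPU; the variable names simply mirror the registers mentioned in the answer.

```python
def run(memory):
    """Execute a toy program and return the final accumulator value."""
    address_register = 0      # address of the next instruction to fetch
    accumulator = 0
    while True:
        # Fetch: copy the instruction at the current address into the instruction register.
        instruction_register = memory[address_register]
        address_register += 1
        # Decode: split the instruction into an opcode and its operands.
        opcode, *operands = instruction_register
        # Execute: carry out the operation.
        if opcode == "LOAD":
            accumulator = operands[0]
        elif opcode == "ADD":
            accumulator += operands[0]
        elif opcode == "JUMP_IF_LESS":
            limit, target = operands
            if accumulator < limit:
                address_register = target    # non-linear flow: overwrite the next address
        elif opcode == "HALT":
            return accumulator
        else:
            raise ValueError(f"unknown opcode: {opcode}")


program = [
    ("LOAD", 0),                # address 0
    ("ADD", 3),                 # address 1
    ("JUMP_IF_LESS", 10, 1),    # address 2: loop back to address 1 while accumulator < 10
    ("HALT",),                  # address 3
]
result = run(program)
print(result)
```

The jump works only by changing the address register, so the next fetch picks up an instruction somewhere other than the following line.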

What is the purpose of a hardware timer in the context of process scheduling?

-A hardware timer is used to implement preemptive scheduling by setting a time limit for a process's CPU usage. Once the timer expires, it triggers an interrupt, forcing the process to relinquish control of the CPU.
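
The mechanism can be approximated in software as follows. This is a simplified model, not real interrupt handling: the `Timer` class, the one-tick-per-instruction assumption, and the `saved_pc` table are all hypothetical stand-ins for what hardware and the operating system do together.

```python
class Timer:
    """Hypothetical countdown timer: armed with a tick count, fires when it reaches zero."""

    def __init__(self):
        self.remaining = 0

    def arm(self, ticks):
        self.remaining = ticks

    def tick(self):
        self.remaining -= 1
        return self.remaining == 0    # True means "raise the timer interrupt"


def run_with_timer(programs, slice_ticks):
    """Run each program until it finishes or the timer fires, saving where it stopped."""
    saved_pc = {name: 0 for name in programs}   # saved position of each process
    order = list(programs)
    timer = Timer()
    log = []
    while order:
        name = order.pop(0)
        pc = saved_pc[name]                     # restore where this process left off
        timer.arm(slice_ticks)
        while pc < len(programs[name]):
            log.append(f"{name} executes {programs[name][pc]}")
            pc += 1
            if timer.tick():                    # assume one timer tick per instruction
                break
        if pc < len(programs[name]):
            saved_pc[name] = pc                 # save state so the process can resume later
            order.append(name)
            log.append(f"timer interrupt: {name} suspended at instruction {pc}")
    return log


log = run_with_timer({"A": ["a0", "a1", "a2"], "B": ["b0"]}, slice_ticks=2)
for entry in log:
    print(entry)
```

The running process never chooses to stop; the timer decides for it, and the saved position is what lets the process resume later as if nothing had happened.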

What is the role of interrupts in the interaction between user programs and the operating system?

-Interrupts serve as signals to the CPU. They allow user programs to request services from the operating system, such as file operations or memory allocation, and they allow the operating system to regain control of the CPU when necessary.

Why did personal computers initially struggle with multitasking?

-Personal computers initially struggled with multitasking because they were designed for single-tasking and lacked the hardware and software mechanisms to efficiently manage multiple processes simultaneously.
