Use the OpenAI API to call Mistral, Llama, and other LLMs (works with local AND serverless models)

Summary

TLDR: This video shows how to swap between different large language models (LLMs) while still sending OpenAI-style requests, whether the model is hosted on a serverless platform or runs locally. The speaker demonstrates how to adjust the API key, base URL, and model name to toggle between a serverless platform like Together and local models served by Ollama. They also explore LangChain integration for error handling and flexible model interactions. Despite some limitations, such as the lack of function calling in certain models, the video highlights the potential for cost-effective LLM usage with easy integration and model swapping.

Takeaways

- 😀 OpenAI-compatible endpoints make it easy to switch between OpenAI, local models, and serverless models with just a few overrides.

- 😀 The process of swapping between models is seamless, as both local models and serverless models use the same OpenAI API structure.

- 😀 Ollama has recently introduced OpenAI compatibility, but function calling is not yet supported in its implementation.

- 😀 LangChain can integrate with serverless models, allowing for easy toggling between different models in the same framework.

- 😀 You can make API requests using the same setup, whether to OpenAI, Ollama, or a serverless platform like Together, with only minor adjustments.

- 😀 Some serverless models support streaming responses, which can be activated with a simple 'stream' parameter in requests.

- 😀 Not all models support tool calling out of the box; only a select few serverless models currently support this feature.

- 😀 Llama 2 and Mistral models can be run locally, but they may take longer to respond than serverless models.

- 😀 Using a serverless platform like Together can be much faster than running models locally, especially for resource-intensive tasks.

- 😀 OpenAI models are considered the gold standard, but serverless options offer lower costs, providing an affordable alternative for running models.

- 😀 Serverless platforms are pushing competition in the model hosting space, often offering lower prices to attract users, though tool calling support remains limited on some of them.

Q & A

What is the main goal of the video?

-The main goal of the video is to demonstrate how to easily swap between local models and serverless models while maintaining compatibility with OpenAI's API, allowing seamless integration and use of different models in an application.

What models are discussed in the video?

-The video discusses local models such as Llama 2 and Mistral, run through Ollama, as well as models hosted on serverless platforms such as Together. Both are reachable through an OpenAI-compatible API.

How do you swap between different models?

-You swap between different models by overriding the base URL, API key, and model parameters in the API request. This allows you to toggle between local or serverless models without changing the overall request structure.
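
The override described above can be sketched in Python roughly as follows. This is a minimal illustration, not the code from the video: the `Provider` table, the environment variable names, and the model names are assumptions chosen for the example, and `ask` needs the `openai` package (v1 or later) plus a reachable endpoint.

```python
import os
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Provider:
    base_url: Optional[str]     # None keeps the client's default (OpenAI)
    api_key_env: Optional[str]  # env var holding the key; None for a local server
    model: str                  # illustrative model name, adjust to what you run


# Hypothetical provider table; only these three values change between backends.
PROVIDERS = {
    "openai": Provider(None, "OPENAI_API_KEY", "gpt-3.5-turbo"),
    "together": Provider(
        "https://api.together.xyz/v1",
        "TOGETHER_API_KEY",
        "mistralai/Mixtral-8x7B-Instruct-v0.1",
    ),
    "ollama": Provider("http://localhost:11434/v1", None, "llama2"),
}


def client_kwargs(name: str) -> dict:
    """Build the keyword arguments for the OpenAI client for one provider."""
    provider = PROVIDERS[name]
    if provider.api_key_env is None:
        # Ollama does not check the key, but the client wants a non-empty string.
        api_key = "ollama"
    else:
        api_key = os.environ[provider.api_key_env]  # raises KeyError if unset
    kwargs = {"api_key": api_key}
    if provider.base_url is not None:
        kwargs["base_url"] = provider.base_url
    return kwargs


def ask(name: str, prompt: str) -> str:
    """Send the same chat request to whichever provider is selected."""
    from openai import OpenAI  # imported lazily so the config part runs without it

    client = OpenAI(**client_kwargs(name))
    response = client.chat.completions.create(
        model=PROVIDERS[name].model,
        messages=[{"role": "user", "content": prompt}],
    )
    return response.choices[0].message.content
```

With this layout, `ask("ollama", "Hello")` and `ask("together", "Hello")` differ only in the table entry they read; the request itself stays the same.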

What is the role of LangChain in this setup?

-LangChain acts as a framework that simplifies interaction with LLMs. It allows users to integrate different models (like OpenAI or local models) with just a few changes to the setup, such as overriding the API key and model parameters.
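
In LangChain the same overrides can be passed to the chat model class. The sketch below assumes the `langchain-openai` package and a local Ollama server; the helper names `chat_model_kwargs` and `make_chat_model` are made up for this example and are not part of LangChain.

```python
from typing import Optional


def chat_model_kwargs(
    model: str,
    base_url: Optional[str] = None,
    api_key: Optional[str] = None,
) -> dict:
    """Collect only the overrides that were actually supplied."""
    kwargs = {"model": model}
    if base_url is not None:
        kwargs["base_url"] = base_url
    if api_key is not None:
        kwargs["api_key"] = api_key
    return kwargs


def make_chat_model(**overrides):
    """Create a LangChain chat model pointed at any OpenAI-compatible endpoint."""
    from langchain_openai import ChatOpenAI  # requires `pip install langchain-openai`

    return ChatOpenAI(**overrides)


# Example overrides for a local Ollama server (model name is illustrative).
LOCAL_OVERRIDES = chat_model_kwargs(
    "llama2", base_url="http://localhost:11434/v1", api_key="ollama"
)
```

Calling `make_chat_model(**LOCAL_OVERRIDES).invoke("Hello").content` would then return the reply text, and swapping in a different set of overrides leaves the rest of the chain untouched.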

Are there any limitations to using serverless models?

-Yes, the alternatives to OpenAI have limitations. For example, Ollama's OpenAI-compatible endpoint does not yet support function calling, although this may be added in the future, and only a few serverless models support it. Serverless models may also not match the performance or feature set of OpenAI's models.

How does the streaming feature work?

-The streaming feature works by passing the 'stream' parameter as 'True' in the request, allowing the response to be received in real-time. This functionality works across both local and serverless models, provided they support it.
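
A streamed response arrives as a sequence of chunks, each carrying a small piece of text. The following sketch shows one way to consume them with the `openai` Python client; `iter_text`, `stream_reply`, and `fake_chunk` are hypothetical helpers, and `fake_chunk` only imitates the chunk shape so the logic can be tried offline.

```python
from types import SimpleNamespace
from typing import Iterable, Iterator


def iter_text(chunks: Iterable) -> Iterator[str]:
    """Yield the text fragments from a stream of chat completion chunks."""
    for chunk in chunks:
        if not chunk.choices:
            continue  # some chunks carry no choices
        text = chunk.choices[0].delta.content
        if text:  # the delta content can be None or empty
            yield text


def stream_reply(client, model: str, prompt: str) -> str:
    """Print a reply as it arrives and return the full text at the end."""
    stream = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": prompt}],
        stream=True,  # the only change compared with a normal request
    )
    parts = []
    for text in iter_text(stream):
        print(text, end="", flush=True)
        parts.append(text)
    print()
    return "".join(parts)


def fake_chunk(text):
    """Stand-in for a streamed chunk, for trying iter_text without a server."""
    delta = SimpleNamespace(content=text)
    return SimpleNamespace(choices=[SimpleNamespace(delta=delta)])
```

Here `client` is any OpenAI client instance, so the same function works whether its base URL points at OpenAI, a serverless platform, or a local server, as long as the backend supports streaming.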

What is the significance of overriding the model parameters?

-Overriding the model parameter changes which model handles the API request. This lets you swap between models such as Llama 2, Mistral, and OpenAI's models without altering the core functionality of the application.

What are some of the challenges when using local models?

-One challenge with local models is that they may take longer to respond compared to serverless models. Local models also require more configuration and resources, such as running them on a personal server or machine.

Which serverless models support function calling?

-Currently, only a select few models on serverless platforms such as Together support function calling. Ollama, and local models run through it such as Llama 2, do not support this feature out of the box, though it may be supported in future updates.
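
Because support varies, one cautious pattern is to attach tool definitions only when the chosen backend is known to accept them. The sketch below uses the OpenAI `tools` request format; the `get_weather` tool and the `supports_tools` flag are invented for illustration, and whether a given model honors the tools is something to check with the provider.

```python
# Hypothetical tool definition in the OpenAI function-calling schema.
WEATHER_TOOL = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Look up the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}


def build_request(model: str, prompt: str, supports_tools: bool) -> dict:
    """Build chat completion arguments, adding tools only where supported."""
    request = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    if supports_tools:
        request["tools"] = [WEATHER_TOOL]
    return request
```

The result can be passed as `client.chat.completions.create(**build_request(...))`; when the model decides to call a tool, the call appears in `response.choices[0].message.tool_calls` instead of in the message content.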

What is the potential benefit of using serverless models over local models?

-Serverless models tend to be faster and more cost-effective, especially when using publicly hosted models. They are also more convenient because you don't have to manage the infrastructure or server, but you may need to consider privacy and model limitations.


LM Studio Tutorial: Run Large Language Models (LLM) on Your Laptop

Ollama-Run large language models Locally-Run Llama 2, Code Llama, and other models

Private & Uncensored Local LLMs in 5 minutes (DeepSeek and Dolphin)

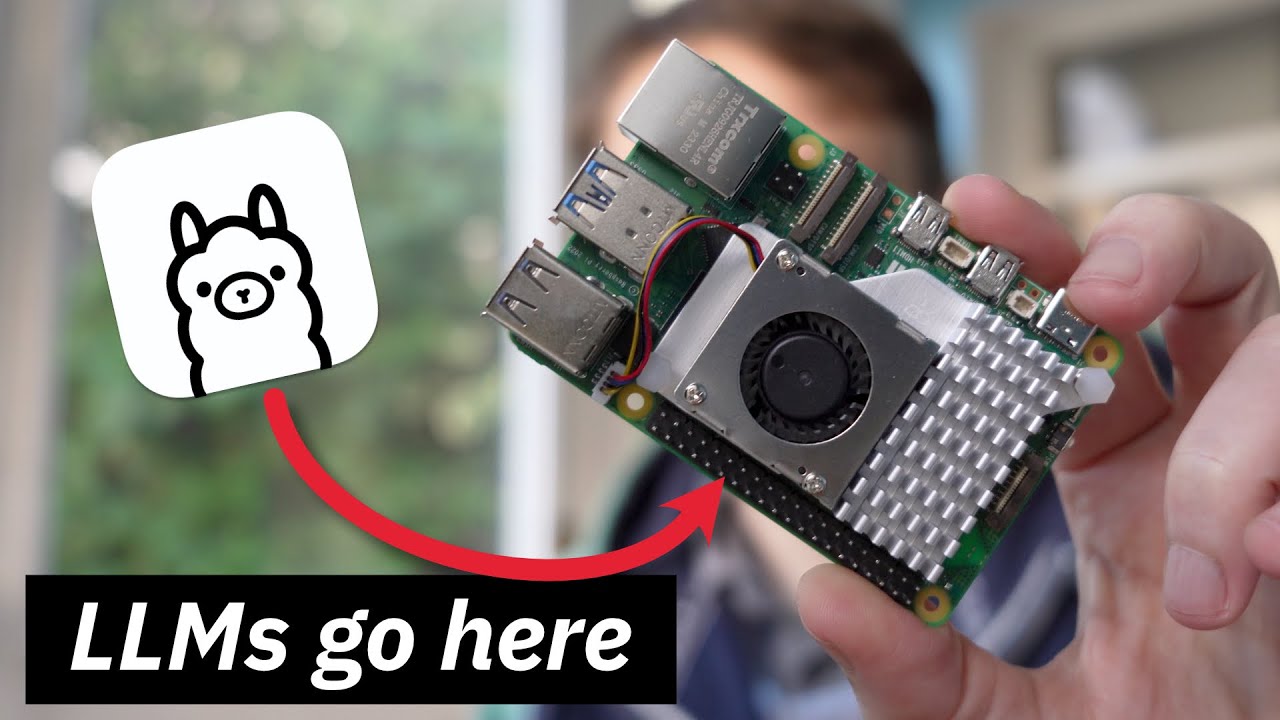

Using Ollama to Run Local LLMs on the Raspberry Pi 5

Python RAG Tutorial (with Local LLMs): AI For Your PDFs

Large Language Models explained briefly

5.0 / 5 (0 votes)