Watching Neural Networks Learn

Summary

TLDRThis video explores the concept of function approximation in neural networks, emphasizing their role as universal function approximators. It delves into how neural networks learn by adjusting weights and biases to fit data points, using examples like curve fitting and image recognition. The video also discusses the challenges of high-dimensional problems and the 'curse of dimensionality,' comparing neural networks to other mathematical tools like Taylor and Fourier series for function approximation. It concludes by highlighting the potential of these methods for real-world applications and poses a challenge to the audience to improve the approximation of the Mandelbrot set.

Takeaways

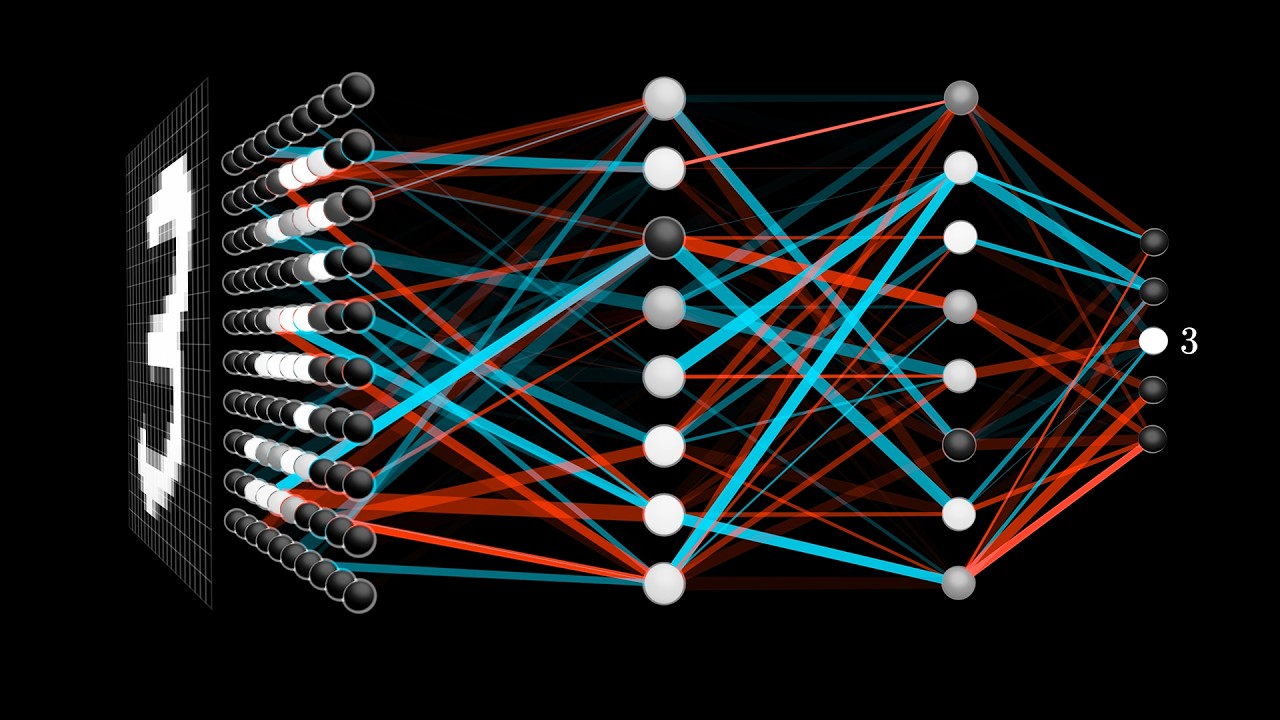

- 🧠 Neural networks are universal function approximators, capable of learning and modeling complex relationships in data.

- 🌐 Functions are fundamental to describing the world, with everything from sound to light being represented through mathematical functions.

- 📊 The goal of artificial intelligence is to create programs that can understand, model, and predict the world, often through self-learning functions.

- 🔍 Neural networks learn by adjusting weights and biases to minimize error between predicted and actual outputs, a process known as backpropagation.

- 📉 Activation functions like ReLU and leaky ReLU play a crucial role in shaping the output of neurons within neural networks.

- 🔢 Neural networks can handle both low-dimensional and high-dimensional data, although the complexity of learning increases with dimensionality.

- 📊 The Fourier series and Taylor series are mathematical tools that can be used to approximate functions and can be integrated into neural networks to improve performance.

- 🌀 The Mandelbrot set demonstrates the infinite complexity that can be captured even within low-dimensional functions, challenging neural networks to approximate it accurately.

- 📈 Normalizing data and reducing the learning rate are practical techniques for optimizing neural network training and improving approximation quality.

- 🚀 Despite the theoretical ability of neural networks to learn any function, practical limitations and the curse of dimensionality can affect their effectiveness in higher dimensions.

Q & A

Why are functions important in describing the world?

-Functions are important because they describe the world by representing relationships between numbers. Everything can be fundamentally described with numbers and the relationships between them, which we call functions. This allows us to understand, model, and predict the world around us.

What is the goal of artificial intelligence in the context of function approximation?

-The goal of artificial intelligence in function approximation is to create programs that can understand, model, and predict the world, or even have them write themselves. This involves building their own functions that can fit data points and accurately predict outputs for inputs not in the data set.

How does a neural network function as a universal function approximator?

-A neural network functions as a universal function approximator by adjusting its weights and biases through a training process to minimize error. It can fit any data set by bending its output to match the given inputs and outputs, effectively constructing any function.

What is the role of the activation function in a neural network?

-The activation function in a neural network defines the mathematical shape of a neuron. It determines how the neuron responds to different inputs by introducing non-linearity into the network, allowing it to learn and represent complex functions.

Why is backpropagation a crucial algorithm for training neural networks?

-Backpropagation is crucial for training neural networks because it efficiently computes the gradient of the loss function with respect to the weights, allowing the network to update its weights in a way that minimizes the loss, thus improving its predictions over time.

How does normalizing inputs improve the performance of a neural network?

-Normalizing inputs improves the performance of a neural network by scaling the values to a range that is easier for the network to deal with, such as -1 to 1. This makes the optimization process more stable and efficient, as the inputs are smaller and centered at zero.

What is the curse of dimensionality and how does it affect function approximation?

-The curse of dimensionality refers to the phenomenon where the volume of the input space increases so fast that the available data becomes sparse. This makes function approximation and machine learning tasks computationally impractical or impossible for higher-dimensional problems, as the number of computations needed grows exponentially with the dimensionality of the inputs.

How do Fourier features enhance the performance of a neural network in function approximation?

-Fourier features enhance the performance of a neural network by providing additional mathematical building blocks in the form of sine and cosine terms. These terms allow the network to approximate functions more effectively, especially in low-dimensional problems, by capturing the wave-like nature of the data.

What is the difference between a Taylor series and a Fourier series in the context of function approximation?

-In the context of function approximation, a Taylor series is an infinite sum of polynomial functions that approximate a function around a specific point, while a Fourier series is an infinite sum of sine and cosine functions that approximate a function within a given range of points. Both can be used to enhance neural networks by providing additional input features.

Why might using Fourier features in high-dimensional problems lead to overfitting?

-Using Fourier features in high-dimensional problems might lead to overfitting because the network can become too tailored to the specific data points, capturing noise and irregularities rather than the underlying function. This happens when the network has too many parameters relative to the amount of data, leading to a poor generalization to new, unseen data.

Outlines

Dieser Bereich ist nur für Premium-Benutzer verfügbar. Bitte führen Sie ein Upgrade durch, um auf diesen Abschnitt zuzugreifen.

Upgrade durchführenMindmap

Dieser Bereich ist nur für Premium-Benutzer verfügbar. Bitte führen Sie ein Upgrade durch, um auf diesen Abschnitt zuzugreifen.

Upgrade durchführenKeywords

Dieser Bereich ist nur für Premium-Benutzer verfügbar. Bitte führen Sie ein Upgrade durch, um auf diesen Abschnitt zuzugreifen.

Upgrade durchführenHighlights

Dieser Bereich ist nur für Premium-Benutzer verfügbar. Bitte führen Sie ein Upgrade durch, um auf diesen Abschnitt zuzugreifen.

Upgrade durchführenTranscripts

Dieser Bereich ist nur für Premium-Benutzer verfügbar. Bitte führen Sie ein Upgrade durch, um auf diesen Abschnitt zuzugreifen.

Upgrade durchführenWeitere ähnliche Videos ansehen

Why Neural Networks can learn (almost) anything

O que é Rede Neural Artificial e como funciona | Pesquisador de IA explica | IA Descomplicada

What is Neural Network? (In Tamil) Neurons and Neural Networks

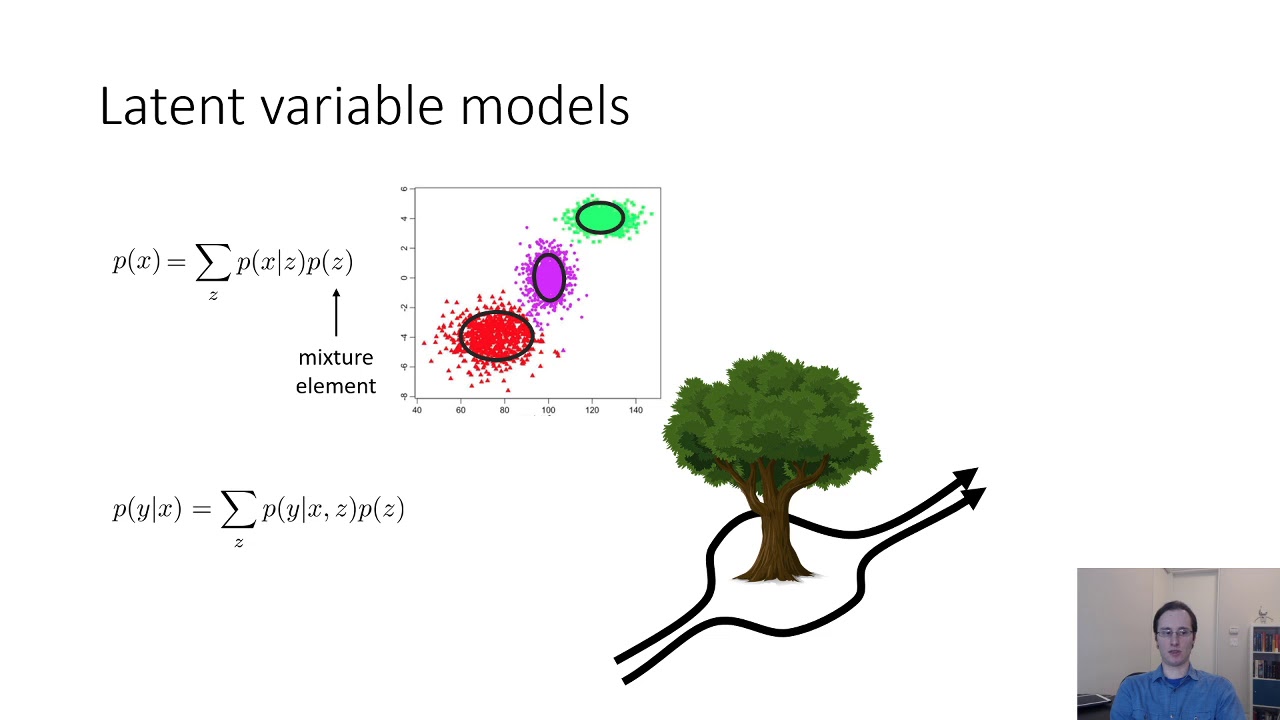

CS 285: Lecture 18, Variational Inference, Part 1

Week 5 -- Capsule 2 -- Training Neural Networks

But what is a neural network? | Chapter 1, Deep learning

5.0 / 5 (0 votes)