Backpropagation Part 1: Updating Hidden Layer Weights | Machine Learning 101 | Ep. 15

Summary

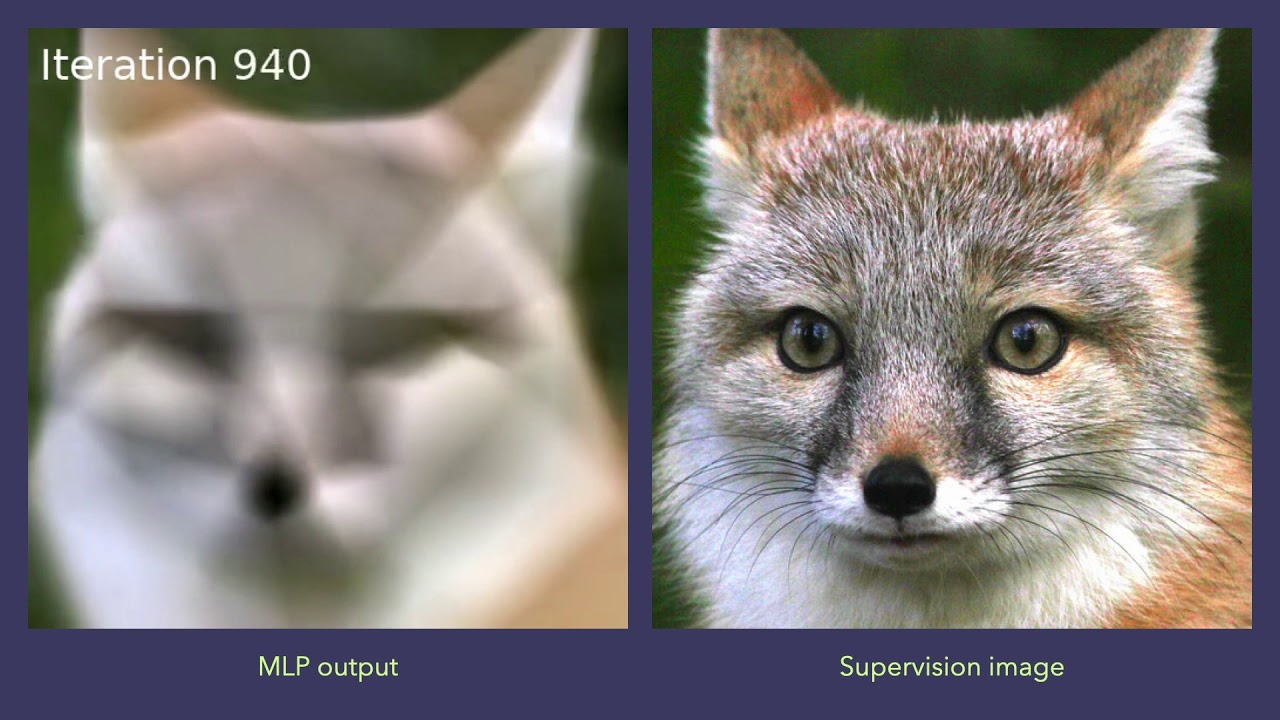

TLDR

In this video, the speaker gives an in-depth explanation of the backpropagation algorithm used to train multilayer perceptrons (MLPs), focusing on how weights are updated during training to minimize error. Starting with the feedforward process, the speaker shows how inputs are transformed into outputs layer by layer and how the error at the output is calculated. Using matrix operations, that error is then propagated backward to adjust the weights in each layer. The process is explained step by step, with emphasis on the linear algebra and matrix operations needed to implement backpropagation from scratch.

Takeaways

- 😀 Backpropagation is a key process in training multilayer perceptrons (MLPs), helping adjust weights between layers to minimize errors.

- 😀 The error at the output layer is used to update the hidden-to-output weights; it is then propagated back through the hidden layers so that every weight in the network can be updated.

- 😀 The core of backpropagation is calculating the delta weights (the change applied to each weight), which depend on the learning rate, the error, and the layer activations.

- 😀 Matrix operations (such as matrix multiplication and transposition) play a crucial role in updating the weights efficiently.

- 😀 The delta weight formula comes from calculating how much each weight contributes to the final error; each weight is then adjusted in proportion to that contribution.

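- 😀 To compute the new weight, the basic formula is: new weight = old weight + (learning rate * error * input). For a neuron with a sigmoid activation, the chain rule also multiplies in the activation's derivative, output * (1 - output).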

- 😀 The weight update depends on both the error at a layer's output and the inputs feeding into that layer.

- 😀 Understanding backpropagation step by step is essential for implementing an MLP from scratch without relying on external libraries like TensorFlow or Keras.

- 😀 The video also takes an intuitive approach to backpropagation, helping learners grasp the mathematical reasoning behind weight updates.

- 😀 Learning matrix algebra, including vector and matrix multiplication, is important for understanding how errors propagate and influence weight updates in MLPs.
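The takeaways above can be collected into one set of matrix equations. This is a sketch under assumptions the summary does not state: sigmoid activations σ, a squared-error loss, no bias terms, and symbol names chosen here for illustration (X input, H hidden activations, O output, T target, η learning rate, W_ih and W_ho the input-to-hidden and hidden-to-output weight matrices, ⊙ element-wise multiplication). Each weight matrix is then updated as W ← W + ΔW.

```latex
\begin{aligned}
\mathbf{H} &= \sigma(W_{ih}\,\mathbf{X}), \qquad \mathbf{O} = \sigma(W_{ho}\,\mathbf{H}) \\
\mathbf{E}_o &= \mathbf{T} - \mathbf{O} \\
\boldsymbol{\delta}_o &= \mathbf{E}_o \odot \mathbf{O} \odot (1 - \mathbf{O}) \\
\Delta W_{ho} &= \eta\, \boldsymbol{\delta}_o\, \mathbf{H}^{\top} \\
\mathbf{E}_h &= W_{ho}^{\top}\, \boldsymbol{\delta}_o \\
\boldsymbol{\delta}_h &= \mathbf{E}_h \odot \mathbf{H} \odot (1 - \mathbf{H}) \\
\Delta W_{ih} &= \eta\, \boldsymbol{\delta}_h\, \mathbf{X}^{\top}
\end{aligned}
```

Some intuitive presentations propagate E_o rather than δ_o through W_ho^T; the form above is what the chain rule gives under these assumptions.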

Q & A

What is the primary focus of this video in the Machine Learning 101 series?

-The video focuses on explaining the backpropagation algorithm in a multilayer perceptron (MLP) neural network, specifically how weights are updated through the process of backpropagation using matrix operations and error propagation.

What is feedforward propagation in the context of multilayer perceptrons?

-Feedforward propagation refers to the process of passing the input data through the network, from the input layer to the hidden layers, and finally to the output layer, with each layer applying a weighted sum followed by an activation function.
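A minimal feedforward pass can be sketched in plain Python (no NumPy). The sigmoid activation, the 2-2-1 layer sizes, the hand-picked weights, and the omission of biases are all assumptions for illustration; `layer_forward` and `feedforward` are hypothetical helper names.

```python
import math

def sigmoid(x):
    """Logistic activation: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def layer_forward(weights, inputs):
    """One layer: weighted sum (matrix-vector product) followed by sigmoid.

    weights is a list of rows, one row per neuron in this layer;
    each row holds one weight per input.
    """
    return [sigmoid(sum(w * x for w, x in zip(row, inputs))) for row in weights]

def feedforward(w_ih, w_ho, x):
    """Pass input x through the hidden layer, then the output layer."""
    hidden = layer_forward(w_ih, x)
    output = layer_forward(w_ho, hidden)
    return hidden, output

# Hypothetical 2-2-1 network with hand-picked weights (no biases).
w_ih = [[0.5, -0.5],
        [0.3,  0.8]]
w_ho = [[1.0, -1.0]]
hidden, output = feedforward(w_ih, w_ho, [1.0, 0.0])
print(hidden, output)
```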

What is backpropagation and how does it work in training neural networks?

-Backpropagation is the process of adjusting the weights in a neural network by calculating the error at the output layer and propagating this error backward through the hidden layers. This allows the network to learn from its mistakes and update its weights accordingly to minimize the error.
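The whole cycle (feedforward, output error, backward propagation, weight update) can be sketched as a single training step, again assuming sigmoid activations, a squared-error loss, and no biases. `train_step` is a hypothetical name, and the random 2-3-1 network and single training example are made up for illustration. Repeating the step on the same example should drive the squared error down.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def matvec(m, v):
    """Matrix (list of rows) times column vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def train_step(w_ih, w_ho, x, target, lr):
    """One feedforward + backpropagation step; updates the weights in place.

    Assumes sigmoid activations, squared-error loss and no biases.
    Returns the squared error measured before the update.
    """
    # Feedforward
    hidden = [sigmoid(z) for z in matvec(w_ih, x)]
    output = [sigmoid(z) for z in matvec(w_ho, hidden)]

    # Output layer: error, then gradient through the sigmoid
    err_o = [t - o for t, o in zip(target, output)]
    delta_o = [e * o * (1 - o) for e, o in zip(err_o, output)]

    # Hidden layer: push delta_o back through W_ho transposed
    # (done before W_ho is modified)
    err_h = [sum(w_ho[k][j] * delta_o[k] for k in range(len(delta_o)))
             for j in range(len(hidden))]
    delta_h = [e * h * (1 - h) for e, h in zip(err_h, hidden)]

    # Weight updates: W += lr * delta * (layer input)^T
    for k, d in enumerate(delta_o):
        for j, h in enumerate(hidden):
            w_ho[k][j] += lr * d * h
    for j, d in enumerate(delta_h):
        for i, xi in enumerate(x):
            w_ih[j][i] += lr * d * xi

    return sum(e * e for e in err_o)

random.seed(0)
w_ih = [[random.uniform(-1, 1) for _ in range(2)] for _ in range(3)]  # 3 hidden x 2 inputs
w_ho = [[random.uniform(-1, 1) for _ in range(3)] for _ in range(1)]  # 1 output x 3 hidden
x, target = [1.0, 0.5], [0.9]

first_error = train_step(w_ih, w_ho, x, target, lr=0.5)
for _ in range(200):
    last_error = train_step(w_ih, w_ho, x, target, lr=0.5)
print(first_error, last_error)
```

The hidden-layer error is computed from W_ho before W_ho is changed; updating W_ho first would propagate the error through the wrong weights.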

How is error calculated in the backpropagation process?

-Error is calculated as the difference between the target value and the network's output. This error is then used to adjust the weights by applying the backpropagation algorithm.
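Computing the error takes one subtraction per output neuron. The sketch keeps the sign convention from the text (target minus output); the mean squared error shown alongside is an assumed scalar summary for monitoring training, not necessarily the one used in the video. The values are chosen to be exact in floating point.

```python
def output_errors(targets, outputs):
    """Per-neuron error, defined as target minus output."""
    return [t - o for t, o in zip(targets, outputs)]

def mean_squared_error(targets, outputs):
    """Average of the squared per-neuron errors (a common scalar summary)."""
    errs = output_errors(targets, outputs)
    return sum(e * e for e in errs) / len(errs)

targets = [1.0, 0.0]
outputs = [0.75, 0.25]
print(output_errors(targets, outputs))       # [0.25, -0.25]
print(mean_squared_error(targets, outputs))  # 0.0625
```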

What role do matrices play in the backpropagation process?

-Matrices are used to represent the weights, inputs, and activations in each layer of the network. Matrix operations, such as dot products and transpositions, allow for efficient computation of the weight updates during backpropagation.
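The two matrix operations mentioned here can be written in a few lines of plain Python. The sketch uses hypothetical helpers `transpose` and `matmul` on matrices stored as lists of rows, and shows why transposition matters: multiplying a column of per-neuron error terms by the transposed column of layer inputs yields a matrix with the same shape as the weight matrix, one entry per weight.

```python
def transpose(m):
    """Swap rows and columns of a matrix stored as a list of rows."""
    return [list(col) for col in zip(*m)]

def matmul(a, b):
    """Matrix product a @ b for lists of rows; inner dimensions must match."""
    if len(a[0]) != len(b):
        raise ValueError("inner dimensions do not match")
    b_t = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in b_t] for row in a]

print(transpose([[1, 2, 3], [4, 5, 6]]))  # [[1, 4], [2, 5], [3, 6]]

# A column vector is a matrix with a single column.
delta = [[1], [2]]               # 2 x 1: one entry per neuron in the layer
layer_input = [[3], [4], [5]]    # 3 x 1: one entry per input to the layer

# delta @ input^T is 2 x 3, the same shape as that layer's weight matrix.
print(matmul(delta, transpose(layer_input)))  # [[3, 4, 5], [6, 8, 10]]
```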

What is the formula used to update the weights during backpropagation?

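-The basic formula to update a weight is: `new weight = old weight + (learning rate × error × input)`. This adjusts each weight based on the error, the input value, and the learning rate. For a neuron with a sigmoid activation, the chain rule also multiplies in the activation's derivative, `output × (1 − output)`.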
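A worked example of the formula, with made-up numbers chosen to be exact in floating point. The second function goes beyond the basic formula as stated: it adds the sigmoid derivative output × (1 − output), which is what the chain rule contributes when the neuron uses a sigmoid activation (an assumption about the network, not confirmed by the summary).

```python
def delta_rule_update(w, lr, error, x):
    """new weight = old weight + lr * error * input (the basic formula)."""
    return w + lr * error * x

def sigmoid_update(w, lr, error, output, x):
    """Same update with the sigmoid derivative output * (1 - output) included.

    error is assumed to be target - output for this neuron.
    """
    return w + lr * error * output * (1 - output) * x

print(delta_rule_update(0.5, lr=0.25, error=0.5, x=2.0))           # 0.75
print(sigmoid_update(0.5, lr=0.25, error=0.5, output=0.5, x=2.0))  # 0.5625
```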

How does the learning rate influence the weight update process?

-The learning rate determines the size of the adjustment made to the weights. A higher learning rate leads to larger updates, which may speed up learning but can also cause overshooting, while a smaller learning rate leads to smaller, more gradual updates.
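The effect of the learning rate is easiest to see on a toy one-parameter problem rather than a full MLP. The sketch below is a swapped-in illustration, not taken from the video: it runs gradient descent on f(w) = (w − 3)², whose minimum is at w = 3, with three learning rates. `descend` is a hypothetical helper.

```python
def descend(lr, steps=20, w=0.0):
    """Gradient descent on the toy loss f(w) = (w - 3)**2, gradient 2*(w - 3)."""
    history = [w]
    for _ in range(steps):
        w -= lr * 2 * (w - 3)
        history.append(w)
    return history

for lr in (0.1, 0.9, 1.1):
    h = descend(lr)
    print(f"lr={lr}: after 1 step w={h[1]:.2f}, after 20 steps w={h[-1]:.4f}")
```

With 0.1 the weight creeps toward 3; with 0.9 the first step overshoots past 3 but the iterates still oscillate inward; with 1.1 each step overshoots by more than the last, and the weight diverges.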

What is the significance of the error propagation from the output layer to the hidden layers?

-Error propagation from the output layer to the hidden layers is crucial because it allows the network to adjust the weights in the hidden layers, which influence the final output only indirectly, through the layers that follow them. Without this step, only the last layer's weights could be updated, and the hidden layers could not learn from the network's mistakes.
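The backward step itself is a matrix-vector product with the transposed weight matrix. In this sketch the weights, hidden activations, and output-layer terms are made-up values chosen to be exact in floating point, and `delta_o` stands for the output error already multiplied by the sigmoid derivative (an assumption matching the chain-rule form).

```python
def transpose(m):
    """Swap rows and columns of a matrix stored as a list of rows."""
    return [list(col) for col in zip(*m)]

def matvec(m, v):
    """Matrix (list of rows) times column vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

# Hypothetical W_ho: 2 output neurons x 3 hidden neurons.
w_ho = [[0.5, -1.0, 2.0],
        [1.0,  0.5, 0.0]]
delta_o = [0.25, -0.5]      # output error times sigmoid derivative
hidden = [0.5, 0.5, 0.5]    # hidden activations from the forward pass

# Each hidden neuron collects the output terms, weighted by the connections
# it feeds: E_h = W_ho^T @ delta_o.
err_h = matvec(transpose(w_ho), delta_o)
# Then pass through the hidden layer's own sigmoid derivative.
delta_h = [e * h * (1 - h) for e, h in zip(err_h, hidden)]

print(err_h)    # [-0.375, -0.5, 0.5]
print(delta_h)  # [-0.09375, -0.125, 0.125]
```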

How does the chain rule apply to backpropagation?

-The chain rule is applied to backpropagation to compute the gradients of the error with respect to each weight in the network. By using the chain rule, the error is propagated backward through the network, allowing the weight updates to be calculated layer by layer.
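A quick way to confirm a chain-rule gradient is to compare it with a finite-difference estimate. The sketch uses a single sigmoid neuron with squared-error loss 0.5·(t − o)² (assumed for illustration) and multiplies the three local derivatives exactly as the chain rule prescribes. The helper names are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def loss(w, x, t):
    """Squared-error loss of one sigmoid neuron: 0.5 * (t - sigmoid(w*x))**2."""
    return 0.5 * (t - sigmoid(w * x)) ** 2

def chain_rule_grad(w, x, t):
    """dL/dw = dL/do * do/dz * dz/dw, with z = w*x and o = sigmoid(z)."""
    o = sigmoid(w * x)
    dL_do = -(t - o)      # derivative of 0.5*(t - o)**2 with respect to o
    do_dz = o * (1 - o)   # derivative of the sigmoid
    dz_dw = x             # derivative of w*x with respect to w
    return dL_do * do_dz * dz_dw

def numeric_grad(w, x, t, eps=1e-6):
    """Central finite-difference estimate, used only as a sanity check."""
    return (loss(w + eps, x, t) - loss(w - eps, x, t)) / (2 * eps)

w, x, t = 0.7, 1.5, 1.0
print(chain_rule_grad(w, x, t), numeric_grad(w, x, t))
```

Since dL/dw = −(t − o)·o·(1 − o)·x, a gradient-descent step w ← w − η·dL/dw is the same as w ← w + η·(t − o)·o·(1 − o)·x, the weight update formula with the sigmoid derivative included.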

What is the difference between the error at the output layer and the error at the hidden layer?

-The error at the output layer is the direct difference between the target value and the predicted output, and it is used to adjust the weights between the hidden and output layers. The error at the hidden layer has no target to compare against, so it is derived from the output-layer errors by propagating them backward through the hidden-to-output weights (multiplying by the transposed weight matrix). It is then used to adjust the weights between the input and hidden layers.
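The split of responsibilities can be checked by shape: each weight update is an outer product of a layer's error term with that layer's input. In this hypothetical 3-4-2 network (made-up values, learning-rate factor omitted), the output-layer term pairs with the hidden activations and matches the shape of W_ho, while the hidden-layer term pairs with the network inputs and matches W_ih.

```python
def outer(col, row):
    """Outer product col @ row^T: len(col) rows and len(row) columns."""
    return [[c * r for r in row] for c in col]

def shape(m):
    return (len(m), len(m[0]))

# Hypothetical 3-4-2 network: 3 inputs, 4 hidden neurons, 2 outputs.
x = [1.0, 0.0, 1.0]                   # input to W_ih
hidden = [0.5, 0.5, 0.5, 0.5]         # input to W_ho
delta_o = [0.25, -0.25]               # derived from the output error
delta_h = [0.125, 0.0, -0.125, 0.25]  # derived from the hidden error

w_ih_shape = (4, 3)  # hidden x input
w_ho_shape = (2, 4)  # output x hidden

# Output-layer term x hidden activations -> update for W_ho.
dw_ho = outer(delta_o, hidden)
# Hidden-layer term x network inputs -> update for W_ih.
dw_ih = outer(delta_h, x)
print(shape(dw_ho), shape(dw_ih))  # (2, 4) (4, 3)
```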
