Introduction to Deep Learning - Part 2

Summary

TLDR: This video is the second part of a series on deep learning, continuing from a previous introduction. The presenter, Avia, explains key concepts such as multilayer perceptrons, neural networks, and backpropagation, focusing on how complex data requires deep architectures with multiple hidden layers. The video highlights challenges like vanishing gradients in deep networks and emphasizes the need for more efficient learning algorithms, which is where deep learning comes in. It also mentions notable figures in AI research and suggests further reading, including a 2015 paper from Nature on deep learning.

Takeaways

- 😀 Adding depth (more hidden layers) to a neural network is essential for handling complex data structures.

- 🤖 Multilayer perceptrons (MLPs) require more hidden layers as the complexity of the data increases.

- 📉 Backpropagation is vital for training neural networks, but it faces challenges like vanishing gradients in deep architectures.

- 🔍 Deep learning algorithms are necessary for handling large and complex datasets, offering more sophisticated learning capabilities.

- 💡 The architecture of a neural network should match the complexity of the data for effective training and classification.

- 👩‍🔬 Influential figures in deep learning research, such as Geoffrey Hinton, Yoshua Bengio, and Yann LeCun, have made significant contributions.

- 🧠 Deep learning algorithms help to address the limitations faced by traditional neural networks in complex problem-solving.

- 📚 The 2015 Nature paper on deep learning is a highly recommended resource for understanding foundational concepts in the field.

- 🌐 Deep learning has wide-ranging applications across various industries and research areas, making it a transformative technology.

- ⚙️ Proper selection of neural network architecture and learning algorithms is crucial for effective model performance.

Q & A

What is the focus of the video?

-The video focuses on explaining deep neural networks (DNNs), multilayer perceptrons (MLPs), and the backpropagation algorithm for training neural networks. It also highlights issues like the vanishing gradient problem in deep architectures.

What is the difference between a simple multilayer perceptron (MLP) and a deep neural network (DNN)?

-An MLP has at least one hidden layer, while a DNN has multiple hidden layers, making it suitable for complex data and tasks that require deeper levels of abstraction.
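
To make the distinction concrete, here is a minimal sketch in plain Python. The layer sizes, the random initialization, and the helper names `init_network` and `forward` are assumptions chosen for illustration; they do not come from the video. The only difference between the two networks is the number of hidden layers.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def init_network(layer_sizes, seed=0):
    """Create random weights and zero biases for each pair of consecutive layers."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
        biases = [0.0] * n_out
        layers.append((weights, biases))
    return layers


def forward(layers, inputs):
    """Pass the inputs through every layer, applying a sigmoid after each one."""
    activations = inputs
    for weights, biases in layers:
        activations = [
            sigmoid(sum(w * a for w, a in zip(row, activations)) + b)
            for row, b in zip(weights, biases)
        ]
    return activations


# Hypothetical sizes: 4 inputs, 3 outputs, hidden layers of width 8.
shallow = init_network([4, 8, 3])        # MLP with one hidden layer
deep = init_network([4, 8, 8, 8, 8, 3])  # deeper network with four hidden layers

sample = [5.1, 3.5, 1.4, 0.2]
print("hidden layers:", len(shallow) - 1, "outputs:", forward(shallow, sample))
print("hidden layers:", len(deep) - 1, "outputs:", forward(deep, sample))
```

Both networks use the same forward pass; depth is simply the length of the layer list.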

Why do deep architectures require more hidden layers?

-As data becomes more complex, deeper architectures are needed to capture intricate patterns and relationships within the data, enabling the network to classify or model the data with higher accuracy.

What is the backpropagation algorithm, and how does it work?

-Backpropagation is an algorithm used to train neural networks by updating the weights. It first computes the error by comparing the prediction with the target, then adjusts the weights by propagating the error backward through the network layers.
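
The forward-then-backward procedure described above can be sketched on the smallest possible network: one input, one hidden unit, one output, all with sigmoid activations. The squared-error loss, the starting weights, and the learning rate are assumptions made for this illustration, not details from the video.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def train_step(w1, w2, x, target, lr=0.5):
    """One backpropagation step on the chain x -> hidden -> output."""
    # Forward pass: compute the prediction and the error.
    h = sigmoid(w1 * x)
    y = sigmoid(w2 * h)
    loss = 0.5 * (y - target) ** 2

    # Backward pass: propagate the error from the output toward the input.
    delta_out = (y - target) * y * (1.0 - y)       # error signal at the output unit
    grad_w2 = delta_out * h
    delta_hidden = delta_out * w2 * h * (1.0 - h)  # error signal at the hidden unit
    grad_w1 = delta_hidden * x

    # Gradient descent update of both weights.
    return w1 - lr * grad_w1, w2 - lr * grad_w2, loss


w1, w2 = 0.5, -0.5  # arbitrary starting weights
losses = []
for _ in range(200):
    w1, w2, loss = train_step(w1, w2, x=1.0, target=1.0)
    losses.append(loss)

print("first loss:", losses[0], "last loss:", losses[-1])
```

Note that `delta_hidden` is built from `delta_out`: each layer's error signal is derived from the one after it, which is why the algorithm runs backward.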

What problem arises when using backpropagation in deep neural networks?

-A common problem is the vanishing gradient, where the error signal shrinks as it is propagated backward through the layers, especially when using saturating activation functions like the sigmoid, whose derivative never exceeds 0.25. This causes the learning process to slow down or stall.
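
A rough way to see the effect: the sigmoid's derivative peaks at 0.25, and backpropagation multiplies one such factor per layer. The sketch below deliberately ignores the weights, which also enter the product in a real network, so treat it as a simplified illustration; the function name `max_gradient_scale` is hypothetical.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_derivative(x):
    s = sigmoid(x)
    return s * (1.0 - s)


def max_gradient_scale(num_layers):
    """Best-case product of sigmoid derivatives across num_layers (weights ignored)."""
    return sigmoid_derivative(0.0) ** num_layers  # the derivative is largest at x = 0


for depth in (1, 5, 10, 20):
    print(depth, "layers ->", max_gradient_scale(depth))
```

Even in this best case the factor drops below one in a million by ten layers.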

How does the vanishing gradient affect the learning process in deep neural networks?

-The vanishing gradient reduces the magnitude of weight updates, slowing down learning or even causing the network to stop learning altogether. Because the error signal shrinks at every step backward, the layers closest to the input are affected most and learn very slowly or not at all.

What is a suggested solution for the vanishing gradient problem?

-One solution is to use activation functions that mitigate the vanishing gradient issue, such as rectified linear units (ReLUs), along with alternative learning algorithms and architectures designed for deep networks.
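
As a small comparison under simplifying assumptions (weights ignored, every unit given the same positive pre-activation), the sketch below multiplies the activation derivatives across ten layers for a sigmoid and for a ReLU. The helper `chained_derivative` and the chosen values are illustrative only.

```python
import math


def sigmoid_derivative(x):
    s = 1.0 / (1.0 + math.exp(-x))
    return s * (1.0 - s)


def relu_derivative(x):
    # ReLU is max(0, x): its slope is 1 for positive inputs and 0 otherwise.
    return 1.0 if x > 0 else 0.0


def chained_derivative(derivative, pre_activations):
    """Multiply the activation derivatives along a path through the layers."""
    product = 1.0
    for z in pre_activations:
        product *= derivative(z)
    return product


pre_activations = [0.5] * 10  # assumed: ten layers, each unit receiving 0.5

sigmoid_product = chained_derivative(sigmoid_derivative, pre_activations)
relu_product = chained_derivative(relu_derivative, pre_activations)
print("sigmoid:", sigmoid_product)
print("relu:   ", relu_product)
```

For active units the ReLU factor stays at 1, so the signal is not shrunk by the activation itself. The trade-off is that a unit with a negative input passes no gradient at all.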

Why is deep learning particularly suitable for complex and large datasets?

-Deep learning is ideal for complex and large datasets because deep architectures can extract and learn hierarchical features from the data, capturing high-level abstractions that simpler models cannot.

Why would using deep learning on simpler datasets be inefficient?

-For simple datasets like Iris, simpler models or MLPs with fewer layers can already achieve good results. Using deep learning would be overkill and unnecessarily increase computational costs without adding significant value.

What are some key figures in the development of deep learning mentioned in the video?

-Key figures include Geoffrey Hinton, Yoshua Bengio, and Ian Goodfellow. They have made significant contributions to the development of deep learning algorithms and architectures, such as generative adversarial networks (GANs).

What is the significance of the 2015 paper on deep learning mentioned in the video?

-The 2015 paper on deep learning, published in *Nature*, is significant because it introduced deep learning to a broader scientific audience and emphasized its potential for solving complex problems across various fields. It marked a turning point for the widespread adoption of the technology.

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

СКРЫТЫЙ ЗЛОДЕЙ!?😱 Разбор 70 Часть 2🔥 Все СЕКРЕТЫ и Теории

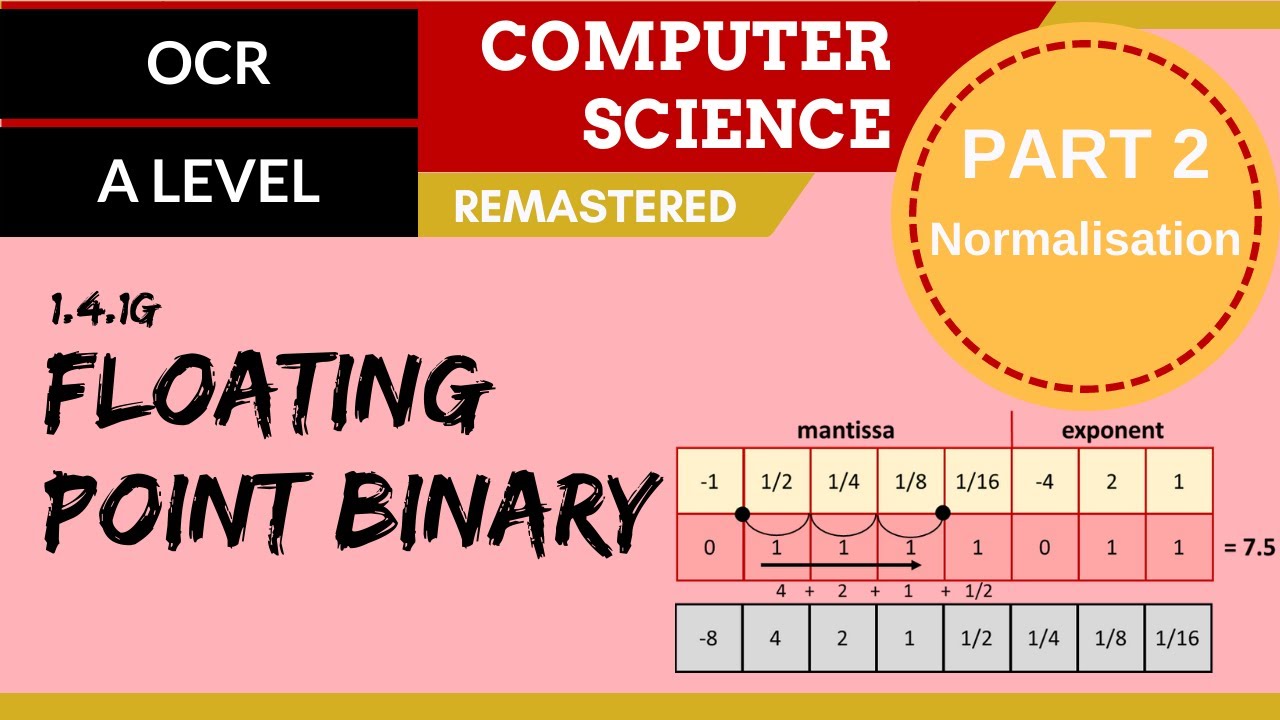

80. OCR A Level (H046-H446) SLR13 - 1.4 Floating point binary part 2 - Normalisation

【期間限定再公開】本編#100 “阪本の推し”モーニング娘。'23 野中美希が登場!【マユリカのうなげろりん!!】

How to Write an Essay: Conclusion Paragraph (with Worksheet)

Interpretation of the Urinalysis (Part 2) - The Dipstick

Konsep Cepat Memahami Deep Learning

5.0 / 5 (0 votes)