GCP Data Engineer Mock interview

Summary

TL;DR: The speaker shares four years of experience with Google Cloud Platform (GCP), focusing on BigQuery for data warehousing. They describe BigQuery's architecture, highlighting its columnar storage and its separation of storage and compute. The discussion covers performance optimization through partitioning, clustering, and more selective SQL queries. Cost-saving practices are also explored, including purchasing slots in advance and archiving unused datasets in Cloud Storage. The conversation extends to managing multiple datasets across projects, using materialized views for repetitive queries, and scheduling queries in the BigQuery console. The speaker also addresses team access management, building data pipelines with Airflow, and the difference between tasks and workflows (DAGs). They conclude with a hypothetical scenario of transferring data from an FTP server to GCS and then to BigQuery.

Takeaways

- 💡 The speaker has four years of experience, primarily with Google Cloud Platform (GCP) and BigQuery for data warehousing.

- 🔍 BigQuery is a columnar storage data warehousing tool that separates storage and compute, optimizing analytical functions.

- 💻 The speaker is proficient in SQL and in data structures and algorithms (DSA) in Python, and is learning Apache Spark on their own.

- 📈 To optimize performance in BigQuery, the speaker discusses partitioning, clustering, and filtering with the WHERE clause so that less data is scanned.

- 💼 For cost optimization in BigQuery, the speaker suggests purchasing slots in advance for known workloads to potentially get a discount.

- 🗄️ Unused tables in BigQuery can be archived in Cloud Storage to save on storage costs; the speaker considers using `INFORMATION_SCHEMA` views or cloud logs to identify them.

- 🔑 The speaker discusses managing multiple BigQuery datasets across different projects with appropriate permissions, highlighting a read-only role for limited access (the speaker calls it the BigQuery reader role; the predefined IAM role for this is BigQuery Data Viewer).

- 📊 Materialized views in BigQuery can save on costs by precomputing and storing query results, reducing the need to re-execute queries over unchanged data.

- ⏰ Scheduling queries in BigQuery can be done through the console, with the speaker outlining the process for setting up schedules based on specific parameters.

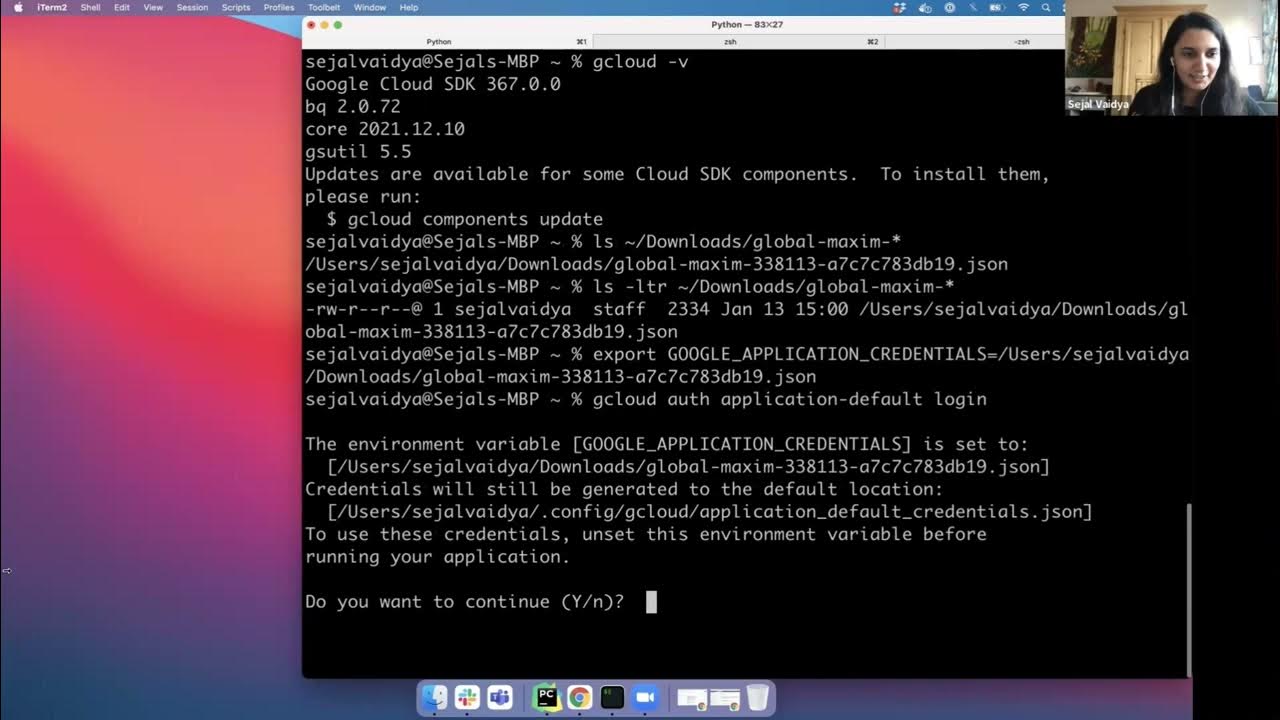

- 🛠️ The speaker has experience integrating BigQuery with other GCP services like Cloud Storage and Apache Airflow for data pipelines, but hasn't set up streaming pipelines.

- 📝 When creating a data pipeline with Airflow, the difference between a DAG (Directed Acyclic Graph) and tasks is highlighted, with tasks being part of the larger DAG workflow.

Q & A

What is the primary function of BigQuery as described in the transcript?

- BigQuery is a data warehousing tool used for analytical purposes, offering a columnar storage system that separates storage and compute, allowing for efficient data analysis.

How does BigQuery's columnar storage differ from traditional row-based storage?

- BigQuery's columnar storage allows for faster analytical processing by accessing only the specific columns needed for a query, rather than scanning entire rows as in traditional row-based storage.
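A rough way to see the difference is to count the bytes each layout would have to read for a single-column aggregate. The sketch below uses a made-up table (the column names, byte widths, and row count are assumptions, not figures from the interview) and ignores compression and encoding, which a real columnar engine also applies.

```python
# Hypothetical table: 1,000,000 rows, four columns with assumed average widths in bytes.
COLUMN_BYTES = {"order_id": 8, "customer_id": 8, "amount": 8, "notes": 200}
ROW_COUNT = 1_000_000


def bytes_read_row_store(selected_columns):
    """A row-oriented layout reads whole rows, whatever columns the query selects."""
    return ROW_COUNT * sum(COLUMN_BYTES.values())


def bytes_read_column_store(selected_columns):
    """A column-oriented layout reads only the columns the query references."""
    return ROW_COUNT * sum(COLUMN_BYTES[name] for name in selected_columns)


# Equivalent of: SELECT SUM(amount) FROM orders
query_columns = ["amount"]
row_bytes = bytes_read_row_store(query_columns)
column_bytes = bytes_read_column_store(query_columns)
print(f"row layout: {row_bytes:,} bytes, columnar layout: {column_bytes:,} bytes")
```

Under these assumed widths, summing `amount` touches 8,000,000 bytes in the columnar layout versus 224,000,000 bytes in the row layout, which is why selecting only the needed columns matters so much in BigQuery.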

What are some methods mentioned to optimize performance in BigQuery?

- Performance in BigQuery can be optimized through partitioning, clustering, and using the WHERE clause effectively to filter data, thus reducing the amount of data scanned.
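As an illustration of how these three techniques fit together, the sketch below builds BigQuery SQL strings for a table partitioned on a date column and clustered on two other columns, plus a query whose WHERE clause filters on the partitioning column. The table name, schema, and helper function names are hypothetical; only the `PARTITION BY` and `CLUSTER BY` clauses are standard BigQuery DDL.

```python
def create_table_ddl(table, partition_column, cluster_columns):
    """Build DDL for a date-partitioned, clustered table (illustrative schema)."""
    clustering = ", ".join(cluster_columns)
    return (
        f"CREATE TABLE `{table}` (\n"
        "  event_date DATE,\n"
        "  customer_id STRING,\n"
        "  region STRING,\n"
        "  amount NUMERIC\n"
        ")\n"
        f"PARTITION BY {partition_column}\n"
        f"CLUSTER BY {clustering}"
    )


def pruned_query(table, partition_column, day):
    """Select only the needed columns and filter on the partitioning column,
    so that only the matching partition has to be scanned."""
    return (
        "SELECT customer_id, SUM(amount) AS total_amount\n"
        f"FROM `{table}`\n"
        f"WHERE {partition_column} = DATE '{day}'\n"
        "GROUP BY customer_id"
    )


# Hypothetical fully qualified table name.
TABLE = "example-project.sales.orders"
print(create_table_ddl(TABLE, "event_date", ["customer_id", "region"]))
print(pruned_query(TABLE, "event_date", "2024-01-01"))
```

The generated statements can be pasted into the BigQuery console. Clustering on `customer_id` additionally helps queries that filter or aggregate on that column within a partition.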

How can one save costs when using BigQuery, as discussed in the transcript?

- Costs in BigQuery can be saved by purchasing slots in advance for known workloads, implementing partitioning and clustering to reduce data scanned, and archiving unused datasets to cheaper storage options like Cloud Storage.
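Whether buying slots in advance pays off depends on how much data the workload scans. The sketch below compares a pay-per-bytes-scanned bill with a fixed monthly capacity cost. Both prices are placeholder arguments, not actual BigQuery rates, and the function names are hypothetical.

```python
TIB = 2 ** 40  # bytes in one tebibyte


def on_demand_cost(bytes_scanned, price_per_tib):
    """Cost of a workload billed by the amount of data scanned."""
    return bytes_scanned / TIB * price_per_tib


def break_even_tib(monthly_capacity_cost, price_per_tib):
    """Monthly scan volume (in TiB) above which a fixed capacity
    commitment becomes cheaper than paying per byte scanned."""
    return monthly_capacity_cost / price_per_tib


# Placeholder numbers for illustration only.
PRICE_PER_TIB = 5.0
MONTHLY_CAPACITY_COST = 2000.0

print(on_demand_cost(2 * TIB, PRICE_PER_TIB))
print(break_even_tib(MONTHLY_CAPACITY_COST, PRICE_PER_TIB))
```

The same arithmetic shows why partitioning and clustering reduce cost under per-byte billing: halving the bytes scanned halves the `on_demand_cost` result.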

What is the role of the 'information schema' in managing BigQuery datasets?

- The `INFORMATION_SCHEMA` views can be used to work out when tables were last queried (for example, from job metadata), which helps identify unused tables that could be archived to save costs.
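One possible way to do this, sketched below, is to query the `INFORMATION_SCHEMA.JOBS` view and take the latest job creation time per referenced table. The region qualifier and the look-back window are assumptions to adjust, and the helper name is hypothetical. Tables that do not appear in the result were not referenced by any job in that window (job history is only retained for a limited period, so this is a lower bound on inactivity, not proof).

```python
def last_referenced_sql(region="region-us", lookback_days=90):
    """Build a query listing each table referenced by recent jobs
    together with the time it was last referenced."""
    return (
        "SELECT\n"
        "  ref.project_id,\n"
        "  ref.dataset_id,\n"
        "  ref.table_id,\n"
        "  MAX(j.creation_time) AS last_referenced\n"
        f"FROM `{region}`.INFORMATION_SCHEMA.JOBS AS j,\n"
        "  UNNEST(j.referenced_tables) AS ref\n"
        "WHERE j.creation_time >= "
        f"TIMESTAMP_SUB(CURRENT_TIMESTAMP(), INTERVAL {int(lookback_days)} DAY)\n"
        "GROUP BY ref.project_id, ref.dataset_id, ref.table_id\n"
        "ORDER BY last_referenced"
    )


print(last_referenced_sql(lookback_days=30))
```

Comparing this result against the full table list for a dataset gives candidate tables for archiving to Cloud Storage.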

How can one manage multiple BigQuery datasets across different projects?

- Managing multiple BigQuery datasets across projects can be done through proper permission settings, allowing users with the required permissions to access and query data across different environments like development, staging, and production.

What is a materialized view in BigQuery and how can it help in cost optimization?

- A materialized view in BigQuery is a pre-computed, saved result of a query that can be accessed quickly without re-executing the query. It can help in cost optimization by reducing computation for frequently run queries with unchanged data.
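For a concrete picture, the sketch below builds a `CREATE MATERIALIZED VIEW` statement for a daily aggregate that a dashboard might otherwise recompute on every load. The view name, base table, and columns are hypothetical, and the helper function is only a convenience for printing the SQL.

```python
def materialized_view_ddl(view, base_table):
    """Build DDL for a materialized view that pre-aggregates amounts per day."""
    return (
        f"CREATE MATERIALIZED VIEW `{view}` AS\n"
        "SELECT\n"
        "  event_date,\n"
        "  SUM(amount) AS total_amount,\n"
        "  COUNT(*) AS order_count\n"
        f"FROM `{base_table}`\n"
        "GROUP BY event_date"
    )


# Hypothetical names for illustration.
print(
    materialized_view_ddl(
        "example-project.sales.daily_totals_mv",
        "example-project.sales.orders",
    )
)
```

Queries that read from the view then use the stored aggregate instead of rescanning the base table each time.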

How can one schedule queries in BigQuery?

- Queries in BigQuery can be scheduled through the BigQuery console by specifying the query and the schedule parameters, such as frequency (daily, monthly), to automate the execution.

What is the difference between a task and a workflow (DAG) in Apache Airflow?

- In Apache Airflow, a task is an individual unit of work, while a workflow (DAG) is a collection of tasks arranged in a directed acyclic graph, where tasks can be dependent on each other.
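The distinction can be shown without Airflow at all. In the sketch below, each dictionary key is a task and the whole dictionary is the workflow; Python's standard `graphlib` module (3.9+) resolves an execution order from the dependencies and rejects cycles. The task names are illustrative, and this is a toy model of the idea rather than how Airflow schedules work internally.

```python
from graphlib import CycleError, TopologicalSorter

# The workflow (DAG): each key is a task, each value is the set of
# tasks that must finish before it can start.
workflow = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
}


def execution_order(dag):
    """Return one valid order in which the tasks can run."""
    return list(TopologicalSorter(dag).static_order())


def is_acyclic(dag):
    """A workflow is only a valid DAG if its dependencies contain no cycle."""
    try:
        execution_order(dag)
        return True
    except CycleError:
        return False


print(execution_order(workflow))
print(is_acyclic({"a": {"b"}, "b": {"a"}}))
```

A single task such as `transform` knows nothing about scheduling on its own; the DAG is what records that it must wait for `extract` and run before `load`.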

Can you provide a basic example of how to write an Airflow DAG for transferring data from an FTP server to a GCS bucket and then to BigQuery?

- An Airflow DAG for this scenario would involve defining two tasks: one using the PythonOperator to fetch data from the FTP server and store it in a GCS bucket, and another using a transfer-specific operator to load the data from GCS into BigQuery.
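A minimal sketch of such a DAG follows, under several assumptions: Airflow 2.4 or later with the `apache-airflow-providers-google` package, the `google-cloud-storage` client library, an FTP server that allows anonymous login, and a CSV file with a header row. The host, path, bucket, and table names are hypothetical placeholders, and `GCSToBigQueryOperator` is one reasonable choice for the "transfer-specific operator"; the interview does not name a specific one. Third-party imports are deferred into the functions so the helper logic can be read and tested without Airflow installed.

```python
import ftplib
import io
from datetime import datetime

# Hypothetical placeholders; replace with real values.
FTP_HOST = "ftp.example.com"
FTP_PATH = "exports/orders.csv"
GCS_BUCKET = "example-landing-bucket"
BQ_TABLE = "example-project.sales.orders"


def gcs_object_name(ftp_path, run_date):
    """Store each run's file under a dated prefix so reruns overwrite cleanly."""
    filename = ftp_path.rsplit("/", 1)[-1]
    return f"ftp_landing/{run_date}/{filename}"


def ftp_to_gcs(ds, **_):
    """Task 1 callable: download one file over FTP and upload it to GCS.
    Airflow passes `ds` (the run date as YYYY-MM-DD) from the task context."""
    from google.cloud import storage  # requires google-cloud-storage

    buffer = io.BytesIO()
    with ftplib.FTP(FTP_HOST) as ftp:
        ftp.login()  # anonymous login (assumption); use stored credentials in practice
        ftp.retrbinary(f"RETR {FTP_PATH}", buffer.write)
    buffer.seek(0)
    name = gcs_object_name(FTP_PATH, ds)
    storage.Client().bucket(GCS_BUCKET).blob(name).upload_from_file(buffer)
    return name


def build_dag():
    """Wire the two tasks into a daily DAG: FTP -> GCS -> BigQuery."""
    from airflow import DAG
    from airflow.operators.python import PythonOperator
    from airflow.providers.google.cloud.transfers.gcs_to_bigquery import (
        GCSToBigQueryOperator,
    )

    with DAG(
        dag_id="ftp_to_gcs_to_bigquery",
        start_date=datetime(2024, 1, 1),
        schedule="@daily",  # older Airflow 2 releases use schedule_interval
        catchup=False,
    ) as dag:
        fetch = PythonOperator(task_id="ftp_to_gcs", python_callable=ftp_to_gcs)
        load = GCSToBigQueryOperator(
            task_id="gcs_to_bigquery",
            bucket=GCS_BUCKET,
            # "{{ ds }}" is rendered by Airflow at run time.
            source_objects=[gcs_object_name(FTP_PATH, "{{ ds }}")],
            destination_project_dataset_table=BQ_TABLE,
            source_format="CSV",
            skip_leading_rows=1,
            autodetect=True,
            write_disposition="WRITE_TRUNCATE",
        )
        fetch >> load  # load runs only after the file has landed in GCS
    return dag
```

In an actual DAG file, `build_dag()` would be called at module level (for example `dag = build_dag()`) so that the scheduler discovers the DAG.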

What is the approximate size of the tables the speaker has worked with in their current BigQuery project?

- The speaker has worked with tables ranging from 2 GB to 1 TB in size, with around 70 to 80 tables in total in their current project.
