StatQuest: K-nearest neighbors, Clearly Explained

Summary

TLDR: In this StatQuest episode, the host introduces the K-nearest neighbors algorithm, a straightforward method for classifying data. Starting from a dataset of known cell types from an intestinal tumor, the process involves clustering the data, adding a cell of unknown type, and classifying it by the categories of its nearest known neighbors. The choice of 'K' matters: small values are sensitive to noise and outliers, while large values smooth the results but can let small categories be outvoted by larger ones. The episode also touches on machine learning terminology and explains how to pick a value for 'K' by trying several and validating each against data whose categories are already known.

Takeaways

- 📚 The script introduces the K nearest neighbors algorithm, a method used for classifying data.

- 🔍 It starts with a dataset of known categories, using an example of different cell types from an intestinal tumor.

- 📈 The data is clustered, in this case, using PCA (Principal Component Analysis).

- 📊 A new cell with an unknown category is added to the plot for classification.

- 🔑 The classification of the new cell is based on its nearest neighbors among the known categories.

- 👉 If K=1, the nearest neighbor's category defines the new cell's category.

- 📝 For K>1, the category with the majority vote among the K nearest neighbors is assigned to the new cell.

- 🎯 The script demonstrates the algorithm with examples, including scenarios where the new cell is between categories.

- 🗳️ The concept of 'voting' is used to decide the category when the new cell is close to multiple categories.

- 🌡️ The script also explains the application of the algorithm to heat maps, using hierarchical clustering as an example.

- 🔢 The choice of K is subjective and may require testing different values to find the most effective one.

- 🔧 Low values of K can be sensitive to noise and outliers, while high values may smooth over details but risk overshadowing smaller categories.
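
The steps in the list above can be sketched in a few lines of Python. This is a minimal illustration, not code from the video: the function name `knn_classify`, the use of Euclidean distance, and the toy 2-D coordinates and labels are all assumptions made for the example.

```python
import math
from collections import Counter

def knn_classify(training, new_point, k):
    """Return the majority label among the k training points nearest to new_point.

    training: list of (coordinates, label) pairs with known categories.
    Distance is Euclidean, an assumption made for this sketch.
    """
    # Sort the known points by distance to the new point and keep the k closest.
    nearest = sorted(training, key=lambda item: math.dist(item[0], new_point))[:k]
    # Each neighbor casts one vote for its own category.
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

# Hypothetical 2-D coordinates (for example, positions on a PCA plot) with made-up labels.
training = [
    ((1.0, 1.0), "red"), ((1.2, 0.8), "red"), ((0.9, 1.3), "red"),
    ((4.0, 4.0), "green"), ((4.2, 3.9), "green"), ((3.8, 4.3), "green"),
]

print(knn_classify(training, (1.1, 1.1), k=1))  # red
print(knn_classify(training, (3.5, 3.5), k=3))  # green
```

With K=1 the single closest point decides the category; with K=3 the three closest points vote. This sketch does nothing special about tied votes: `Counter.most_common` simply returns whichever tied label it counted first.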

Q & A

What is the main topic discussed in the StatQuest video?

-The main topic discussed in the StatQuest video is the K nearest neighbors (KNN) algorithm, a method used for classifying data.

What is the purpose of using the K nearest neighbors algorithm?

-The purpose of using the K nearest neighbors algorithm is to classify new data points based on their similarity to known categories in a dataset.

What is the first step in using the KNN algorithm as described in the video?

-The first step is to start with a dataset that has known categories, such as different cell types from an intestinal tumor.

How is the data prepared before adding a new cell with an unknown category in the KNN algorithm?

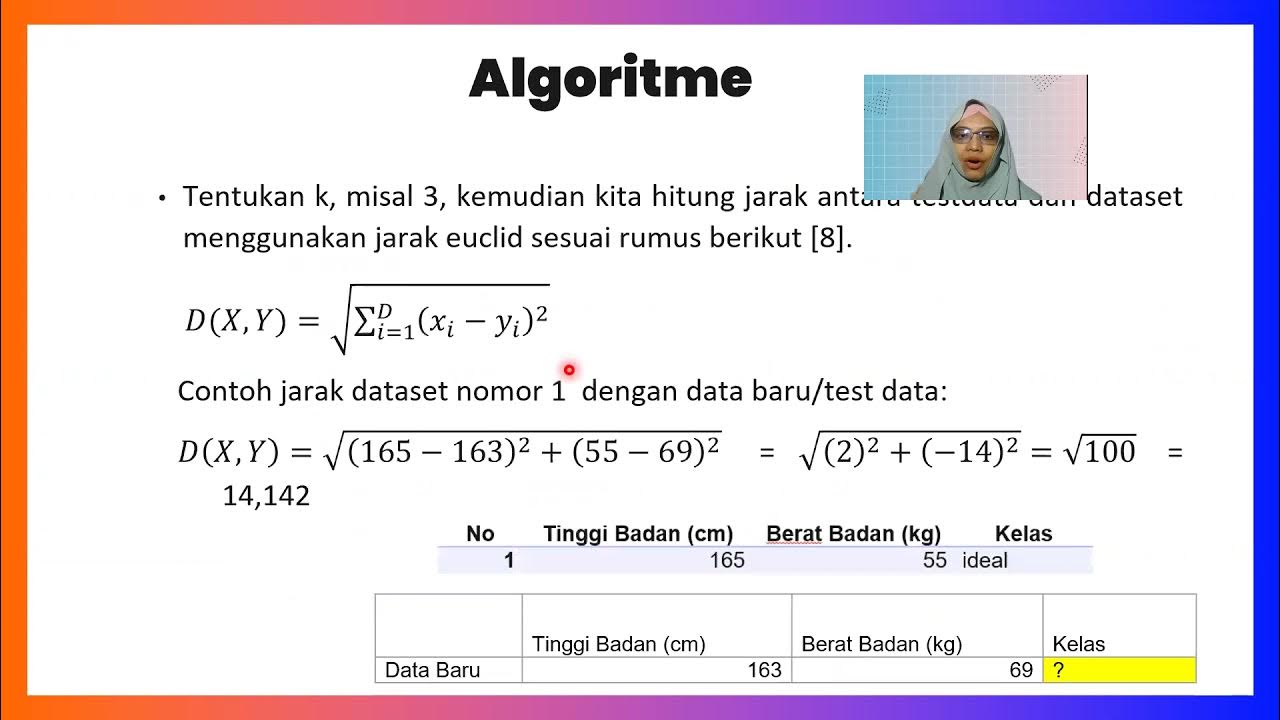

-The data is clustered, for example using PCA (Principal Component Analysis), to reduce dimensions and visualize the data effectively.

What is the process of classifying a new cell with an unknown category in the KNN algorithm?

-The new cell is classified by looking at the nearest annotated cells, or nearest neighbors, and assigning the category that gets the most votes among these neighbors.

What does 'K' represent in the K nearest neighbors algorithm?

-In the K nearest neighbors algorithm, 'K' represents the number of closest neighbors to consider when classifying a new data point.

How does the KNN algorithm handle a situation where the new cell is between two or more categories?

-If the new cell is between two or more categories, the algorithm takes a vote among the K nearest neighbors, and assigns the category that gets the most votes.

What is a potential issue with using a very low value for K in the KNN algorithm?

-Using a very low value for K, like 1 or 2, can be noisy and subject to the effects of outliers, potentially leading to inaccurate classifications.

What is a potential issue with using a very high value for K in the KNN algorithm?

-Using a very high value for K can smooth over the data too much, potentially causing a category with only a few samples to always be outvoted by larger categories.
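
Both failure modes can be reproduced on a small made-up dataset. The sketch below is illustrative only: the points, the labels "A" and "B", the helper name `knn_classify`, and the choice of Euclidean distance are assumptions, not taken from the video.

```python
import math
from collections import Counter

def knn_classify(training, new_point, k):
    """Majority vote among the k nearest training points (Euclidean distance)."""
    nearest = sorted(training, key=lambda item: math.dist(item[0], new_point))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]

# Hypothetical data: a large category "A" (7 points), a small category "B"
# (3 points), plus one stray "B" point sitting inside the "A" cluster.
training = [
    ((0.0, 0.0), "A"), ((0.0, 1.0), "A"), ((1.0, 0.0), "A"), ((1.0, 1.0), "A"),
    ((0.5, 0.5), "A"), ((0.2, 0.8), "A"), ((0.8, 0.2), "A"),
    ((5.0, 5.0), "B"), ((5.2, 5.1), "B"), ((4.9, 5.3), "B"),
    ((0.45, 0.55), "B"),  # the outlier
]

inside_a = (0.45, 0.6)  # in the middle of the "A" cluster, right next to the outlier
beside_b = (5.0, 5.1)   # in the middle of the small "B" cluster

results = {
    "inside_a, K=1": knn_classify(training, inside_a, 1),    # outlier decides alone
    "inside_a, K=5": knn_classify(training, inside_a, 5),    # outlier is outvoted
    "beside_b, K=3": knn_classify(training, beside_b, 3),    # local "B" points win
    "beside_b, K=11": knn_classify(training, beside_b, 11),  # every point votes
}
for case, label in results.items():
    print(case, "->", label)
```

With K=1 the point inside the "A" cluster is labeled "B" because its single nearest neighbor is the outlier, while K=5 gives "A". The point beside the small cluster is labeled "B" with K=3, but with K=11 all eleven points vote and the seven "A" points outvote the four "B" points.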

In machine learning terminology, what is the data with known categories used for the initial clustering called?

-In machine learning, the data with known categories used for the initial clustering is called 'training data'.

How can one determine the best value for K in the KNN algorithm?

-There is no physical or biological way to determine the best value for K; one may have to try out a few values and assess how well the new categories match the known ones by pretending part of the training data is unknown.
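
One way to "pretend part of the training data is unknown" is to hide one known point at a time, classify it from the remaining points, and count how often the predicted category matches the real one. The sketch below does this for a few values of K. The leave-one-out scheme, the function names, and the toy data are assumptions chosen for illustration; the video does not prescribe a specific validation procedure.

```python
import math
from collections import Counter

def knn_classify(training, new_point, k):
    """Majority vote among the k nearest training points (Euclidean distance)."""
    nearest = sorted(training, key=lambda item: math.dist(item[0], new_point))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]

def leave_one_out_accuracy(data, k):
    """Hide each point in turn, classify it from the rest, return the fraction correct."""
    correct = 0
    for i, (point, true_label) in enumerate(data):
        rest = data[:i] + data[i + 1:]  # treat point i as if its category were unknown
        if knn_classify(rest, point, k) == true_label:
            correct += 1
    return correct / len(data)

# Hypothetical training data: two well-separated categories of four points each.
data = [
    ((1.0, 1.0), "red"), ((1.2, 0.8), "red"), ((0.9, 1.3), "red"), ((1.1, 1.2), "red"),
    ((4.0, 4.0), "green"), ((4.2, 3.9), "green"), ((3.8, 4.3), "green"), ((4.1, 4.2), "green"),
]

accuracy_by_k = {k: leave_one_out_accuracy(data, k) for k in (1, 3, 5, 7)}
for k, accuracy in accuracy_by_k.items():
    print(f"K={k}: accuracy {accuracy:.2f}")
```

On this toy data, K = 1, 3, and 5 recover every hidden label, while K = 7 gets every one wrong: once a point is hidden, its three remaining same-category neighbors are always outvoted by the four points of the other category.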
