RagTime: Your Digital Second Brain, by Phil Mui

Summary

TLDR: This video introduces an AI-powered knowledge extraction tool that acts as a 'second brain,' processing documents and URLs to provide up-to-date, accurate answers. It demonstrates the system's ability to chunk and embed text, retrieve context, and utilize corrective retrieval augmented generation (CRAG) for enhanced responses. The live demo showcases the tool's efficiency in handling large texts, like a financial statement PDF, and the architecture behind it, highlighting the potential for improved decision-making and a competitive edge.

Takeaways

- 🧠 The project aims to create an AI-powered knowledge extraction tool to augment human intelligence and memory.

- 🔍 It introduces a live system designed to accumulate knowledge without the need for users to first understand the material they are about to read.

- 📚 The system can process documents from URLs or uploads, using techniques like chunking, embedding, and context retrieval.

- 🔑 The script highlights 'Corrective Retrieval Augmented Generation' (CRAG), a technique gaining attention for enhancing AI responses.

- 💡 The CRAG-backed system is presented as a 'second brain' that can provide up-to-date and accurate answers to queries, potentially offering a competitive advantage.

- 🌐 The system allows users to input URLs or upload files, which are then quickly processed for knowledge extraction.

- 📈 The backend processes documents by chunking and embedding, which can be interesting for developers to observe and understand.

- 🔎 The system includes an evaluator that uses CRAG to assess retrieved chunks and can invoke additional tools if more information is needed.

- 🛠️ The architecture diagram presented shows the workflow from query input to response, involving a query router, document processor, and various tools.

- 📈 The system uses a combination of technologies including embeddings, a Qdrant vector database, web crawler, document processor, and AI response construction.

- 🤖 The response construction is handled by OpenAI, and the entire process is prototyped on Chainlit, indicating potential for further development and customization.

Q & A

What is the main purpose of the project discussed in the transcript?

-The project aims to develop an AI-powered knowledge extraction tool that can process information from URLs or document uploads, augmenting human intelligence and memory by providing quick and accurate answers to queries.

What is the acronym 'CRAG' mentioned in the transcript, and what does it stand for?

-CRAG stands for 'Corrective Retrieval Augmented Generation', a technique that has gained attention for improving the accuracy and relevance of AI-generated responses by incorporating additional information.

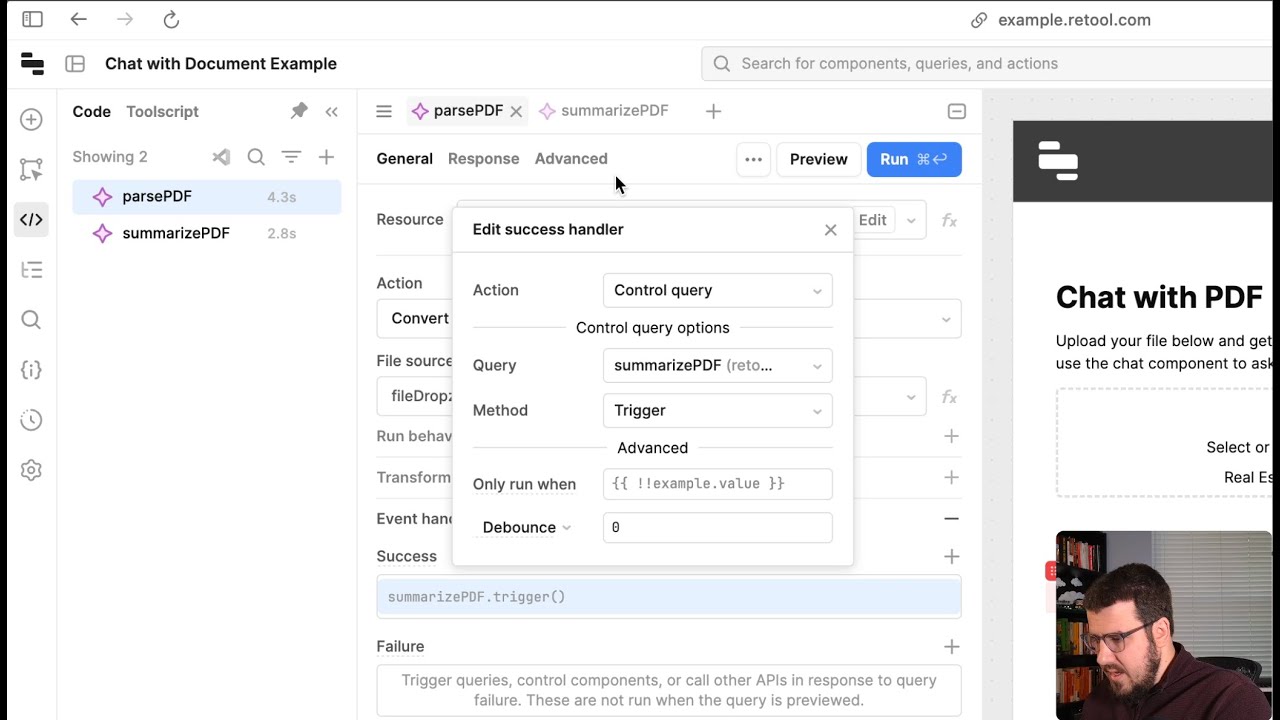

How does the system handle the processing of a document?

-The system processes a document by chunking the text, embedding it, and storing it in a database. It then uses this information to retrieve and evaluate contextually relevant chunks to answer user queries.
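
The embed, store, and retrieve steps described in this answer can be sketched roughly as follows. This is a minimal illustration, not the project's code: `embed` is a toy bag-of-words stand-in for a real embedding model, and `InMemoryStore` is a hypothetical stand-in for the vector database.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class InMemoryStore:
    """Hypothetical stand-in for the vector database."""

    def __init__(self):
        self.rows = []  # list of (chunk_text, vector) pairs

    def add(self, chunks):
        """Embed each chunk and keep it alongside its vector."""
        for chunk in chunks:
            self.rows.append((chunk, embed(chunk)))

    def search(self, query, k=2):
        """Return the k chunks most similar to the query."""
        query_vec = embed(query)
        ranked = sorted(self.rows, key=lambda row: cosine(query_vec, row[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


store = InMemoryStore()
store.add([
    "Revenue grew 12 percent year over year.",
    "The company opened two new offices.",
    "Operating expenses were flat.",
])
top = store.search("How much did revenue grow?", k=1)
```

In a real deployment the count vectors would be replaced by dense embeddings from a model, and the linear scan by the database's nearest-neighbor search.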

What is the role of the 'query router' in the system described?

-The query router determines the appropriate action for a given query, such as whether it requires web crawling or document processing, and directs the query to the correct component of the system.
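
A query router of this kind could look something like the sketch below. The route names, the file-extension list, and the routing rules are all assumptions made for illustration; the summary does not describe how the actual router decides.

```python
from urllib.parse import urlparse

# Assumed set of uploadable document types (not taken from the video).
DOCUMENT_EXTENSIONS = (".pdf", ".docx", ".txt")


def route(user_input: str) -> str:
    """Pick a hypothetical downstream component for the given input."""
    text = user_input.strip()
    parsed = urlparse(text)
    # A well-formed http(s) URL goes to the web crawler.
    if parsed.scheme in ("http", "https") and parsed.netloc:
        return "web_crawler"
    # Something that looks like a file name goes to the document processor.
    if text.lower().endswith(DOCUMENT_EXTENSIONS):
        return "document_processor"
    # Anything else is treated as a question for retrieval.
    return "retrieval"
```

For example, `route("https://example.com/report")` selects the crawler, `route("statement.pdf")` selects the document processor, and a plain question falls through to retrieval.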

What additional tools does the system use to enhance the answers provided by the AI?

-The system uses three additional tools to enhance answers: You.com, Google, and news sources. These tools help provide additional information when the retrieved chunks are deemed insufficient.
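
The corrective step, where an evaluator grades retrieved chunks and falls back to external tools when they are insufficient, might be sketched as below. The `grade` function is a toy term-overlap score standing in for whatever evaluator the project uses, the `threshold` value is arbitrary, and `fake_search` is a hypothetical placeholder for a real search tool.

```python
import re
from typing import Callable, List


def grade(query: str, chunk: str) -> float:
    """Toy relevance score: fraction of query terms that appear in the chunk."""
    query_terms = set(re.findall(r"[a-z0-9]+", query.lower()))
    chunk_terms = set(re.findall(r"[a-z0-9]+", chunk.lower()))
    return len(query_terms & chunk_terms) / len(query_terms) if query_terms else 0.0


def corrective_context(
    query: str,
    chunks: List[str],
    fallback_tools: List[Callable[[str], List[str]]],
    threshold: float = 0.5,  # arbitrary cutoff chosen for this sketch
) -> List[str]:
    """Keep chunks the evaluator accepts; otherwise ask the fallback tools."""
    kept = [c for c in chunks if grade(query, c) >= threshold]
    if kept:
        return kept
    # Nothing passed the evaluator, so gather context from external tools.
    extra: List[str] = []
    for tool in fallback_tools:
        extra.extend(tool(query))
    return extra


def fake_search(query: str) -> List[str]:
    """Hypothetical search tool used only to make the sketch runnable."""
    return [f"search result for: {query}"]
```

Calling `corrective_context("revenue growth", ["offices opened"], [fake_search])` returns the fallback result, because no retrieved chunk passes the threshold.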

How does the system handle the input of a URL or a document?

-The user can enter a URL or upload a document, and the system will quickly process the content, chunk it, and embed it into the 'second brain' for knowledge accumulation and retrieval.

What is the 'second brain' mentioned in the transcript?

-The 'second brain' refers to the system's internal database where processed and chunked information from various documents and URLs is stored for quick retrieval and understanding.

What is the significance of the 'chunking' process in the context of this project?

-Chunking is the process of breaking down the text into smaller, manageable pieces that can be more easily processed, embedded, and retrieved for answering queries.
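
As a rough illustration of chunking, the sketch below splits text into fixed-size word windows with some overlap between neighbors. The word-based splitting and the default `size` and `overlap` values are assumptions; the summary does not say which chunking strategy the project uses.

```python
from typing import List


def chunk_text(text: str, size: int = 200, overlap: int = 50) -> List[str]:
    """Split text into chunks of up to `size` words, sharing `overlap` words."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("size must be positive and overlap must be in [0, size)")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        # Stop once a chunk reaches the end, to avoid a redundant tail chunk.
        if start + size >= len(words):
            break
    return chunks
```

The overlap means a sentence that straddles a chunk boundary still appears whole in at least one chunk, at the cost of storing some words twice.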

What is the technical stack used in the project as described in the transcript?

-The technical stack includes text embeddings (e.g., text-embedding-3), a Qdrant database for storage, a web crawler from Spider.Cloud, a document processor like LlamaParse, and an evaluator using Llama Orchestrator. The responses are constructed using OpenAI.

What are some of the benefits of using this AI-powered knowledge extraction tool?

-The benefits include quickly finding accurate and up-to-date information, saving time and effort, and providing better decision-making support. It can also serve as a competitive advantage in professional settings.

What lessons were learned from the project, as mentioned in the transcript?

-Lessons learned include the potential of CRAG to add interesting and useful information, the need for better selection of tools for enhanced performance, and the limitations of using a free version of a service like Qdrant Cloud.
