K-nearest Neighbors (KNN) in 3 min

Summary

TLDRThis video explains the K-Nearest Neighbors (KNN) algorithm, an intuitive machine learning method for classification and regression. It starts with a simple concept: classifying a new point based on the labels of its nearest neighbors. The video covers key aspects like the importance of choosing the right K value, different distance metrics (e.g., Euclidean, Manhattan), and the challenges of high-dimensional data. While KNN is easy to understand and doesn't require a model to be built beforehand, it has limitations such as slow performance with large datasets and issues with dimensionality. It's an excellent starting point for beginners to grasp machine learning basics.

Takeaways

- 😀 Machine learning models have evolved from simple algorithms to complex systems like large language models, but understanding the basics is crucial for beginners.

- 😀 K-Nearest Neighbors (K-NN) is a simple yet powerful algorithm for classification that makes decisions based on the closest neighbors of a point.

- 😀 K-NN works by selecting a value for K (number of neighbors), finding the nearest points, and classifying the new point based on the most common label among them.

- 😀 Euclidean distance is the most common metric for measuring distance between points in K-NN, but other metrics like Manhattan or Minkowski distance may be more suitable depending on the data.

- 😀 K-NN is considered a 'lazy learner' because it does not build a model in advance but instead makes predictions based on stored data when needed.

- 😀 The choice of K value is critical for K-NN's accuracy; too large or too small a K can affect the model's performance.

- 😀 K-NN is highly intuitive and great for understanding classification concepts but has limitations, such as being slow with large datasets and being sensitive to the choice of K.

- 😀 The 'curse of dimensionality' occurs when the number of features increases, making the concept of distance less meaningful and affecting K-NN’s performance.

- 😀 Techniques like dimensionality reduction can help improve K-NN’s performance when dealing with high-dimensional data.

- 😀 Despite its limitations, K-NN remains a great starting point for beginners in machine learning because of its simplicity and intuitive decision-making process.

- 😀 The simplest approach to classification often involves looking at a point's nearest neighbors to make an informed decision, demonstrating that sometimes the basics are the most effective.

Q & A

What is the main concept behind the K-Nearest Neighbors (KNN) algorithm?

-KNN is an intuitive machine learning algorithm that classifies a new data point based on the majority label of its nearest neighbors. It makes decisions by examining the closest points to the new data point and assigning it the most common label among them.

How does KNN determine the nearest neighbors?

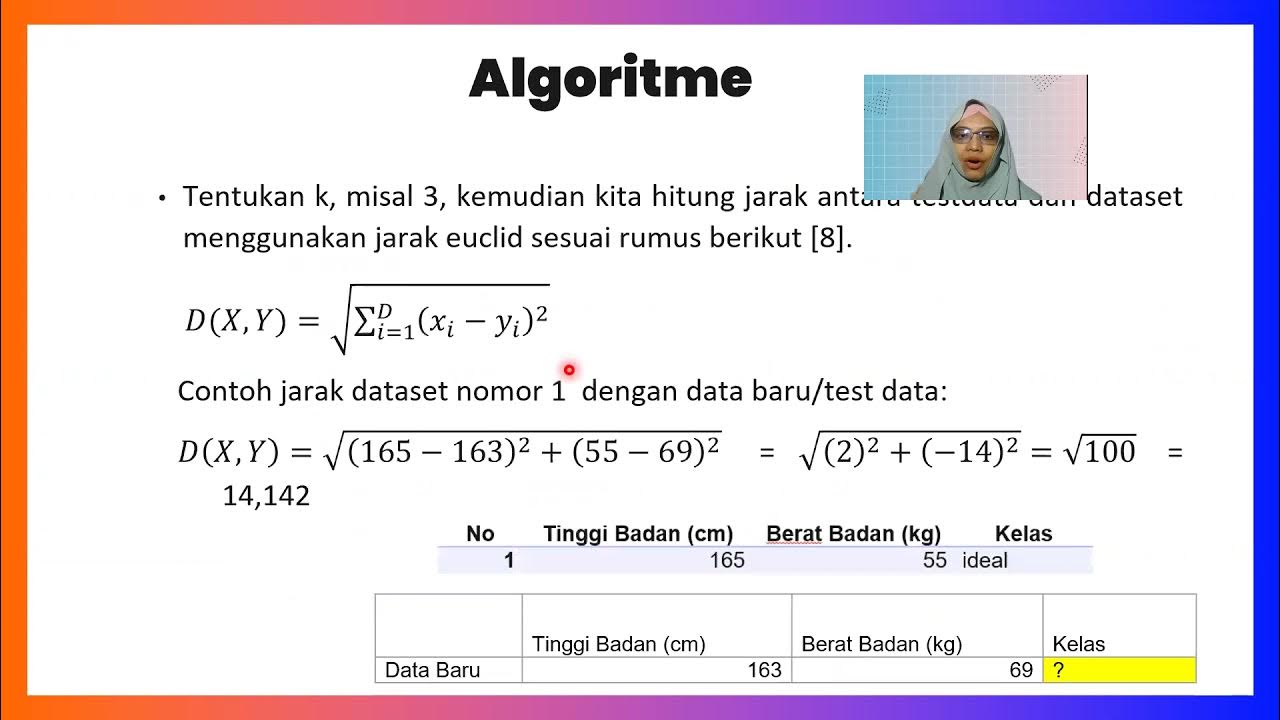

-KNN determines the nearest neighbors by measuring the distance between the new data point and all other points in the dataset using a distance metric, like Euclidean distance. The K closest points are selected, and the most frequent label among these neighbors is assigned to the new point.

What is the significance of the 'K' value in KNN?

-The 'K' value represents the number of nearest neighbors to consider when classifying a new point. Choosing an appropriate K is crucial for model accuracy, as a small K may lead to overfitting, and a large K may smooth out important distinctions in the data.

What are some distance metrics used in KNN?

-The most commonly used distance metric in KNN is the Euclidean distance, which calculates the straight-line distance between points. Other metrics include Manhattan distance and Minkowski distance, which can be more appropriate depending on the data.

Why is KNN considered a lazy learner?

-KNN is a lazy learner because it does not build a model in advance. Instead, it stores the entire dataset and makes predictions only when a query is made, which can be computationally expensive for large datasets.

Can KNN be used for regression problems?

-Yes, KNN can also be applied to regression problems. In this case, instead of classifying the new point based on the majority label, the algorithm predicts a continuous value based on the average of the labels of the nearest neighbors.

What is the curse of dimensionality in KNN?

-The curse of dimensionality refers to the problem KNN faces as the number of features in the dataset increases. As dimensions grow, the concept of 'nearness' becomes less meaningful, making it harder to find relevant neighbors, which negatively impacts the algorithm’s performance.

How can the curse of dimensionality be mitigated in KNN?

-One common technique to mitigate the curse of dimensionality is dimensionality reduction, which reduces the number of features in the data, making the distance between points more meaningful and improving the performance of KNN.

What are the limitations of KNN?

-KNN has several limitations, including its computational inefficiency with large datasets, the need for careful selection of the K value, and its susceptibility to the curse of dimensionality. It can also be sensitive to irrelevant features, which may impact performance.

Why is KNN considered an effective starting point for beginners in machine learning?

-KNN is considered an effective starting point because of its simplicity and intuitive nature. It allows beginners to easily understand key concepts of classification, such as the idea of decision boundaries and how nearby data points influence the classification of new data.

Outlines

此内容仅限付费用户访问。 请升级后访问。

立即升级Mindmap

此内容仅限付费用户访问。 请升级后访问。

立即升级Keywords

此内容仅限付费用户访问。 请升级后访问。

立即升级Highlights

此内容仅限付费用户访问。 请升级后访问。

立即升级Transcripts

此内容仅限付费用户访问。 请升级后访问。

立即升级浏览更多相关视频

Konsep Algoritma KNN (K-Nearest Neigbors) dan Tips Menentukan Nilai K

K Nearest Neighbors | Intuitive explained | Machine Learning Basics

Lecture 3.4 | KNN Algorithm In Machine Learning | K Nearest Neighbor | Classification | #mlt #knn

K-Nearest Neighbors Classifier_Medhanita Dewi Renanti

All Learning Algorithms Explained in 14 Minutes

Python Exercise on kNN and PCA

5.0 / 5 (0 votes)