Perceptron Rule to design XOR Logic Gate Solved Example ANN Machine Learning by Mahesh Huddar

Summary

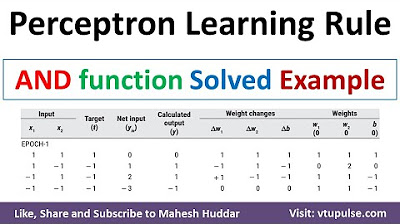

TLDR: In this video, the process of using the perceptron rule to design an XOR gate is explained in detail. The XOR gate is not linearly separable, so a single perceptron cannot implement it. Instead, a two-layer network is used: two intermediary perceptrons (Z1 and Z2) are trained first, and their outputs are combined to compute the final output. The video covers how to initialize the weights, calculate net inputs, and update the weights based on the difference between target and calculated output until the network reproduces the XOR function. Each step is worked through with explicit calculations.

Takeaways

- 😀 The XOR gate is a logical gate where the output is high only when exactly one of the inputs is high.

- 😀 XOR is not linearly separable: no single straight line can separate the inputs with output 1 from those with output 0, so a single perceptron cannot classify them.

- 😀 To handle this non-linearity, the XOR gate is broken into two intermediary perceptrons (Z1 and Z2), each of which is linearly separable and can be trained with the perceptron rule.

- 😀 Z1 represents the operation 'X1 AND NOT X2' and Z2 represents 'NOT X1 AND X2'.

- 😀 After calculating Z1 and Z2, the final XOR output is obtained by combining them with the OR operation.

- 😀 Before training, the hidden-layer weights (W11, W21, W12, W22) are initialized to 1, the threshold is set to 1, and the learning rate is set to 1.5.

- 😀 The perceptron learning rule updates weights based on the difference between the target and the calculated output.

- 😀 Weight updates are performed iteratively for each input combination using the equation `w_ij = w_ij + η * (T - O) * x_i`, where η is the learning rate.

- 😀 A threshold (1 in this example) decides whether a unit fires: the output is 1 (high) if the net input is at least the threshold, and 0 otherwise.

- 😀 The training process continues until the perceptrons correctly classify all input combinations (0 0, 0 1, 1 0, 1 1).

- 😀 After training, the final weights (W11, W21, W12, W22, V1, V2) allow the perceptrons to correctly implement the XOR gate functionality.
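
The training procedure described in these takeaways can be sketched in Python. This is a minimal sketch, not the video's own code. It assumes the hidden units use a binary step activation with a fixed (untrained) threshold of 1, that all weights start at 1 with η = 1.5, and that W_ij denotes the weight from input x_i to hidden unit Z_j (an indexing convention inferred from the update rule). `train_unit` and `step` are hypothetical helper names.

```python
# Perceptron-rule training of the two hidden units Z1 and Z2 (illustrative sketch).
# Assumptions: initial weights 1, fixed threshold 1, learning rate 1.5, 0/1 inputs.

INPUTS = [(0, 0), (0, 1), (1, 0), (1, 1)]
TARGETS_Z1 = [0, 0, 1, 0]  # x1 AND NOT x2
TARGETS_Z2 = [0, 1, 0, 0]  # NOT x1 AND x2

def step(net, theta=1.0):
    """Binary step activation: fire (1) when the net input reaches the threshold."""
    return 1 if net >= theta else 0

def train_unit(targets, w=(1.0, 1.0), eta=1.5, theta=1.0, max_epochs=10):
    """Apply w_i <- w_i + eta * (T - O) * x_i until a whole epoch has no errors."""
    w = list(w)
    for epoch in range(1, max_epochs + 1):
        errors = 0
        for (x1, x2), t in zip(INPUTS, targets):
            o = step(w[0] * x1 + w[1] * x2, theta)
            if o != t:
                errors += 1
                w[0] += eta * (t - o) * x1
                w[1] += eta * (t - o) * x2
        if errors == 0:
            return w, epoch
    raise RuntimeError("did not converge within max_epochs")

(w11, w21), epochs_z1 = train_unit(TARGETS_Z1)
(w12, w22), epochs_z2 = train_unit(TARGETS_Z2)
print(f"Z1: W11={w11}, W21={w21} (error-free at epoch {epochs_z1})")
print(f"Z2: W12={w12}, W22={w22} (error-free at epoch {epochs_z2})")
```

With these settings each unit needs only one weight change (Z1 at input (0, 1), Z2 at input (1, 0)), and the second epoch is error-free, which ends training.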

Q & A

What is the main objective of the video?

-The main objective of the video is to explain how to use the perceptron rule to design an XOR gate.

Why is it not possible to use a simple perceptron rule for XOR gate design?

-A single perceptron cannot implement the XOR gate because the data is not linearly separable: no single straight line can separate the inputs whose output is 1 from those whose output is 0.
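
To see this in action, the sketch below applies the same perceptron rule to XOR with a single unit. It is an illustration, not the video's code. As an assumption, it also learns a bias term b (equivalent to a trainable threshold), which gives the unit more freedom than the fixed threshold used in the video. Even so, no epoch can ever be error-free: if one were, the weights during that epoch (which would not change) would classify all four XOR inputs correctly, which no single linear threshold unit can do.

```python
# Single perceptron trained on XOR with the perceptron rule (illustrative sketch).
# Assumption: a trainable bias b is added; the unit fires when w1*x1 + w2*x2 + b >= 0.

INPUTS = [(0, 0), (0, 1), (1, 0), (1, 1)]
XOR_TARGETS = [0, 1, 1, 0]

def train_single_perceptron(targets, eta=1.5, epochs=100):
    """Return the number of misclassifications in each epoch."""
    w1 = w2 = b = 0.0
    errors_per_epoch = []
    for _ in range(epochs):
        errors = 0
        for (x1, x2), t in zip(INPUTS, targets):
            o = 1 if w1 * x1 + w2 * x2 + b >= 0 else 0
            if o != t:
                errors += 1
                w1 += eta * (t - o) * x1
                w2 += eta * (t - o) * x2
                b += eta * (t - o)
        errors_per_epoch.append(errors)
    return errors_per_epoch

history = train_single_perceptron(XOR_TARGETS)
print("fewest errors in any epoch:", min(history))  # never 0 for XOR
```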

What is the equation used to implement the XOR gate in the video?

-The equation used to implement the XOR gate is $y = x_1\bar{x}_2 + \bar{x}_1 x_2$, i.e. (x1 AND NOT x2) OR (NOT x1 AND x2).

How are Z1 and Z2 related to the perceptron rule in the XOR gate design?

-Z1 and Z2 are intermediary (hidden) perceptrons whose outputs are combined to calculate the final output Y. Z1 computes $x_1\bar{x}_2$ (x1 AND NOT x2), and Z2 computes $\bar{x}_1 x_2$ (NOT x1 AND x2).
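
The decomposition can be checked exhaustively over the four input patterns with plain Boolean arithmetic (no learning involved). This is an illustrative check, not code from the video.

```python
# Verify that Z1 = x1 AND NOT x2, Z2 = NOT x1 AND x2, Y = Z1 OR Z2 reproduces XOR.

rows = []
for x1 in (0, 1):
    for x2 in (0, 1):
        z1 = x1 & (1 - x2)   # x1 AND NOT x2
        z2 = (1 - x1) & x2   # NOT x1 AND x2
        y = z1 | z2          # OR of the two hidden terms
        rows.append((x1, x2, z1, z2, y))
        print(x1, x2, z1, z2, y)

# Y must equal XOR (x1 != x2) on every row of the truth table.
assert all(y == (x1 ^ x2) for x1, x2, _, _, y in rows)
```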

What is the role of the threshold in the perceptron activation function?

-The threshold in the perceptron activation function is used to compare the net input. If the net input is greater than or equal to the threshold, the output is 1; otherwise, the output is 0.
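
A minimal sketch of this step activation, assuming the threshold of 1 used in the example; `step` is a hypothetical helper name. The loop prints Z1's net input for each pattern under the initial weights W11 = W21 = 1.

```python
def step(net: float, theta: float = 1.0) -> int:
    """Binary step activation: 1 if the net input is >= the threshold, else 0."""
    return 1 if net >= theta else 0

# Net input of Z1 for each pattern with the initial weights W11 = W21 = 1.
w11, w21 = 1.0, 1.0
for x1, x2 in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    net = w11 * x1 + w21 * x2
    print((x1, x2), "net =", net, "-> output", step(net))
```

With the initial weights, Z1 fires for (0, 1), (1, 0) and (1, 1), but its target is 1 only for (1, 0), which is why weight updates are needed.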

What are the initial values used for the weights, threshold, and learning rate in the example?

-In the example, the weights for Z1 (W11 and W21) are initialized to 1 (the Z2 weights W12 and W22 also start at 1), the threshold is set to 1, and the learning rate is set to 1.5.

What happens during weight updates when the target output and calculated output do not match?

-When the target output and the calculated output do not match, the weights are updated using the weight update rule: w_ij = w_ij + learning rate * (T - O) * x_i, where T is the target, O is the calculated output, and x_i is the input.
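
As a worked instance of the update rule, using the example's initial values (W11 = W21 = 1, threshold θ = 1, η = 1.5), consider unit Z1 on the input (x1, x2) = (0, 1), whose target is T = 0. This arithmetic is reconstructed from the stated values rather than transcribed from the video:

```latex
\begin{aligned}
\text{net} &= W_{11}x_1 + W_{21}x_2 = 1\cdot 0 + 1\cdot 1 = 1 \ge \theta = 1
  \;\Rightarrow\; O = 1 \neq T = 0 \\
W_{11} &\leftarrow 1 + 1.5\,(0 - 1)\cdot 0 = 1 \\
W_{21} &\leftarrow 1 + 1.5\,(0 - 1)\cdot 1 = -0.5
\end{aligned}
```

Only the weight on the active input changes, because the update is proportional to $x_i$.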

What is the significance of the Z2 perceptron in the XOR gate design?

-Z2 implements the $\bar{x}_1 x_2$ term (NOT x1 AND x2): it outputs 1 only for the input (x1, x2) = (0, 1). Together with Z1, which covers (1, 0), it lets the network classify all four inputs correctly.

How does the OR operation between Z1 and Z2 affect the final output Y?

-The output unit ORs Z1 and Z2, so Y is high (1) whenever either hidden unit is high. Because Z1 fires only for (1, 0) and Z2 only for (0, 1), Y is 1 for exactly those two inputs and 0 for (0, 0) and (1, 1), which is the XOR truth table.

What are the final weight values and their significance in the perceptron model for the XOR gate?

-The final weight values are W11 = 1, W21 = -0.5, W12 = -0.5, W22 = 1 for the hidden units, and V1 = V2 = 1 for the output unit. With a threshold of 1, these values make the network classify all four XOR inputs correctly.
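
A minimal forward-pass check of these final weights, assuming all three units (Z1, Z2, Y) use the same step activation with threshold 1 and that W_ij is the weight from input x_i to hidden unit Z_j; `xor_net` and `step` are hypothetical helper names.

```python
# Forward pass of the trained network with the final weights (illustrative sketch).

def step(net, theta=1.0):
    """Binary step activation shared by every unit."""
    return 1 if net >= theta else 0

W11, W21 = 1.0, -0.5   # x1 -> Z1, x2 -> Z1
W12, W22 = -0.5, 1.0   # x1 -> Z2, x2 -> Z2
V1, V2 = 1.0, 1.0      # Z1 -> Y, Z2 -> Y (an OR unit at threshold 1)

def xor_net(x1, x2):
    z1 = step(W11 * x1 + W21 * x2)
    z2 = step(W12 * x1 + W22 * x2)
    return step(V1 * z1 + V2 * z2)

for x1, x2 in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x1, x2, "->", xor_net(x1, x2))
```

For (1, 1), both hidden net inputs are 0.5, below the threshold, so neither hidden unit fires and Y is 0.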
