Naive Bayes, Clearly Explained!!!

Summary

TLDRIn this video, Josh Starburns introduces the concept of Naive Bayes classification, focusing on the Multinomial Naive Bayes Classifier. He explains its application in spam detection by calculating probabilities based on word frequency in normal and spam messages. Josh also covers key concepts like prior probability, likelihood, and how to overcome common issues like zero probabilities using smoothing techniques. Despite its simplicity, Naive Bayes proves highly effective in classifying messages. The video concludes with a discussion on why Naive Bayes is considered 'naive' and highlights additional resources for further study.

Takeaways

- 🤖 Naive Bayes is a classification technique used to predict outcomes based on word probabilities.

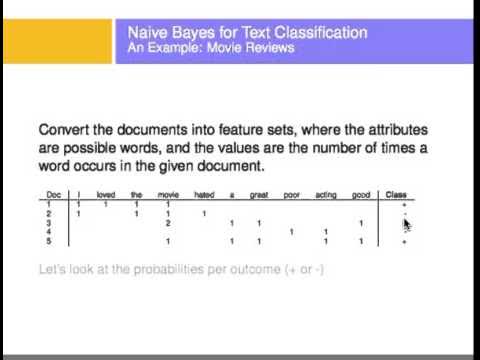

- 📊 A common version of Naive Bayes is the multinomial classifier, which is useful for text classification like spam filtering.

- 📈 The classifier works by creating histograms of words from normal and spam messages to calculate word probabilities.

- 📝 Probabilities of words appearing in normal or spam messages are used to predict if a new message is spam.

- 💡 Likelihoods and probabilities are interchangeable terms in Naive Bayes when dealing with discrete words.

- ⚖️ A prior probability is an initial guess about how likely a message is to be normal or spam, based on training data.

- 🔄 The Naive Bayes classifier treats word order as irrelevant, simplifying the problem but ignoring language structure.

- 🛠 A common problem, zero probability, is resolved by adding a count to each word's occurrences, known as 'smoothing'.

- 🚨 Naive Bayes is considered 'naive' because it assumes all features (words) are independent, ignoring relationships between them.

- 📚 Despite its simplicity, Naive Bayes often performs well in real-world applications, especially for tasks like spam filtering.

Q & A

What is the main focus of the video?

-The video explains the Naive Bayes classifier, with a focus on the multinomial Naive Bayes version. It discusses how to use it for classifying messages as spam or normal.

What is the difference between multinomial Naive Bayes and Gaussian Naive Bayes?

-Multinomial Naive Bayes is commonly used for text classification, while Gaussian Naive Bayes is used for continuous data like weight or height. The video focuses on the former and mentions that Gaussian Naive Bayes will be covered in a follow-up video.

How does Naive Bayes classify messages as normal or spam?

-Naive Bayes calculates the likelihood of each word in a message being present in either normal or spam messages, multiplies these probabilities by initial guesses (priors) for each class, and compares the results to decide whether the message is normal or spam.

What is a 'prior probability' in the context of Naive Bayes?

-A prior probability is the initial guess about the likelihood of a message being normal or spam, regardless of the words it contains. It is typically estimated from the training data.

Why is Naive Bayes considered 'naive'?

-Naive Bayes is considered 'naive' because it assumes that all features (words in this case) are independent of each other. In reality, words often have relationships and specific orders that affect meaning, but Naive Bayes ignores this.

What problem arises when calculating probabilities for words not in the training data, and how is it solved?

-If a word like 'lunch' does not appear in the training data, its probability becomes zero, which leads to incorrect classifications. This problem is solved by adding a small count (known as 'smoothing' or 'Laplace correction') to every word in the histograms.

How does Naive Bayes handle repeated words in a message?

-Naive Bayes considers the frequency of words. For example, if a word like 'money' appears multiple times in a message, it increases the likelihood of the message being spam if 'money' is more common in spam messages.

What are 'likelihoods' in the Naive Bayes algorithm?

-Likelihoods are the calculated probabilities of observing specific words in either normal or spam messages. In this context, likelihoods and probabilities can be used interchangeably.

How does Naive Bayes perform compared to other machine learning algorithms?

-Even though Naive Bayes makes simplistic assumptions, it often performs surprisingly well for tasks like spam detection. It tends to have high bias due to its naivety but low variance, meaning it can be robust in practice.

What is the purpose of the alpha parameter in Naive Bayes?

-The alpha parameter is used for smoothing to prevent zero probabilities when a word doesn't appear in the training data. In this video, alpha is set to 1, meaning one count is added to each word in the histograms.

Outlines

此内容仅限付费用户访问。 请升级后访问。

立即升级Mindmap

此内容仅限付费用户访问。 请升级后访问。

立即升级Keywords

此内容仅限付费用户访问。 请升级后访问。

立即升级Highlights

此内容仅限付费用户访问。 请升级后访问。

立即升级Transcripts

此内容仅限付费用户访问。 请升级后访问。

立即升级浏览更多相关视频

Machine Learning: Multinomial Naive Bayes Classifier with SK-Learn Demonstration

Naive Bayes dengan Python & Google Colabs | Machine Learning untuk Pemula

Naive Bayes classifier: A friendly approach

Tutorial 47- Bayes' Theorem| Conditional Probability- Machine Learning

Text Classification Using Naive Bayes

Klasifikasi dengan Algoritma Naive Bayes Classifier

5.0 / 5 (0 votes)