XGBoost's Most Important Hyperparameters

Summary

TLDR: The video discusses configuring an XGBoost model by tuning hyperparameters that influence tree structure, model weights, and learning rate. It emphasizes keeping individual trees weak so that subsequent trees can correct them, using regularization to prevent overfitting, and handling imbalanced data. The speaker suggests libraries like Hyperopt for efficient hyperparameter tuning and shares a way to deal with overfitting by adjusting parameters incrementally, highlighting the trade-offs in machine learning.

Takeaways

- 🌳 **Tree Structure Control**: Hyperparameters are used to manage the depth of trees and the number of samples per node, creating 'weak trees' that don't go very deep.

- 🔒 **Regularization**: Certain hyperparameters penalize model complexity and make the model rely less on specific columns, which helps prevent overfitting.

- 📊 **Handling Imbalanced Data**: There are hyperparameters that can adjust model or classification weights to deal with imbalanced datasets.

- 🌳 **Number of Trees**: The quantity of trees or estimators in an XGBoost model is a key hyperparameter that influences the model's performance and prediction time.

- 🏌️ **Learning Rate**: As with a golf swing, the learning rate determines the 'power' of each tree's impact on the model, with lower rates often leading to better performance.

- 🔄 **Trade-offs and Balances**: In machine learning, there are always trade-offs to consider, such as model complexity versus prediction speed.

- 🔧 **Model Tuning**: XGBoost tends to overfit slightly out of the box, but with tuning, it can perform better than many other models.

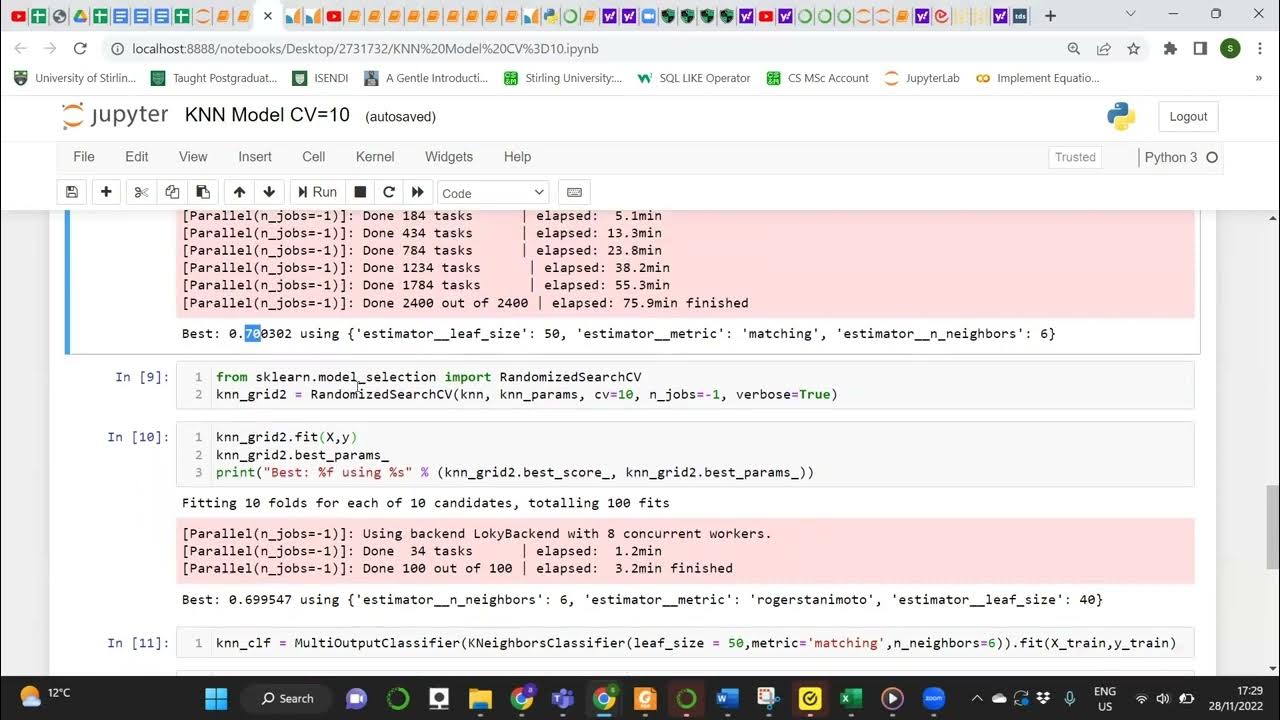

- 🔎 **Hyperparameter Tuning Tools**: Libraries like Hyperopt can be used for efficient hyperparameter tuning, employing Bayesian modeling to find optimal settings.

- 📈 **Stepwise Tuning**: A practical approach to tuning involves adjusting one set of hyperparameters at a time, which can save time and still improve model performance.

- ⏱️ **Time and Resource Considerations**: The choice of tuning method can depend on the resources available, with options ranging from grid search to more sophisticated methods like Hyperopt.

Q & A

What are hyperparameters in the context of configuring an XGBoost model?

- Hyperparameters are adjustable settings, chosen before training, that control the learning process and model behavior. In XGBoost they determine aspects like the tree structure, regularization, model weights, and the number of trees or estimators.

Why are weak trees preferred in XGBoost?

- Weak trees are preferred in XGBoost because they stay shallow, which leaves room for subsequent trees to correct their predictions. This process helps reduce overfitting and improves the model's generalization.

How do you control the depth of trees in an XGBoost model?

- The depth of trees in an XGBoost model is controlled by setting the 'max_depth' hyperparameter, which caps how deep each tree in the model can grow.
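As a rough illustration of the answer above, the sketch below collects tree-structure settings into a dictionary. `max_depth` and `min_child_weight` are real XGBoost parameters; the specific values, the `WEAK_TREE_PARAMS` name, and both helper functions are assumptions made for illustration, not settings recommended in the video.

```python
# Illustrative values only; the video does not prescribe specific numbers.
WEAK_TREE_PARAMS = {
    "max_depth": 3,         # cap on how deep each tree may grow
    "min_child_weight": 5,  # minimum sum of instance weight required in a child node
}


def max_leaves(max_depth: int) -> int:
    """Upper bound on the number of leaves in a binary tree of the given depth."""
    return 2 ** max_depth


def build_weak_tree_model(params=WEAK_TREE_PARAMS):
    """Hypothetical helper; needs the xgboost package to be installed."""
    # Imported lazily so the rest of the sketch runs without xgboost.
    from xgboost import XGBClassifier

    return XGBClassifier(**params)
```

Because a binary tree of depth `d` has at most `2 ** d` leaves, each extra level of depth can double the number of distinct predictions a single tree makes, which is why small values keep trees weak.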

What is the purpose of regularization hyperparameters in XGBoost?

- Regularization hyperparameters in XGBoost are used to prevent overfitting by penalizing complex models. They can also make the model pay less attention to certain columns, which limits how closely it can fit the training data.
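One way to see the penalty at work: in XGBoost's second-order formulation, a leaf's weight is computed from the summed gradients and hessians of the samples in that leaf, and the L2 penalty (`reg_lambda`) and L1 penalty (`reg_alpha`) shrink it toward zero. The sketch below is a simplified stand-alone version of that calculation, not XGBoost's own code; the function name and the numbers are assumptions chosen for illustration.

```python
def leaf_weight(grad_sum, hess_sum, reg_lambda=0.0, reg_alpha=0.0):
    """Leaf weight with L1 soft-thresholding and L2 shrinkage (simplified)."""
    # The L1 penalty pulls the gradient sum toward zero by reg_alpha.
    if grad_sum > reg_alpha:
        shrunk = grad_sum - reg_alpha
    elif grad_sum < -reg_alpha:
        shrunk = grad_sum + reg_alpha
    else:
        return 0.0  # the penalty outweighs the signal, so the leaf contributes nothing
    # The L2 penalty inflates the denominator, shrinking the weight.
    return -shrunk / (hess_sum + reg_lambda)


unregularized = leaf_weight(-10.0, 5.0)
with_l2 = leaf_weight(-10.0, 5.0, reg_lambda=5.0)
with_l1 = leaf_weight(-10.0, 5.0, reg_alpha=2.0)
```

With these made-up inputs, the weight drops from 2.0 to 1.0 once `reg_lambda=5.0` is applied: stronger regularization means each leaf makes a smaller correction.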

How can you handle imbalanced data using XGBoost hyperparameters?

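- Imbalanced data can be handled in XGBoost by setting the 'scale_pos_weight' hyperparameter, which increases the weight of the positive class in binary classification, or by passing per-sample weights through the 'sample_weight' argument of 'fit'. Both let the model weight classes or samples differently, thus addressing the imbalance.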
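For binary classification, a heuristic suggested in the XGBoost documentation is to set `scale_pos_weight` to the ratio of negative to positive samples. The helper below is a minimal sketch of that calculation; its name and the example labels are assumptions for illustration.

```python
def suggested_scale_pos_weight(labels):
    """Ratio of negative (0) to positive (1) labels, a common starting value."""
    positives = sum(1 for y in labels if y == 1)
    negatives = sum(1 for y in labels if y == 0)
    if positives == 0:
        raise ValueError("labels contain no positive samples")
    return negatives / positives


# Made-up data: 90 negatives and 10 positives.
labels = [0] * 90 + [1] * 10
weight = suggested_scale_pos_weight(labels)
```

The result could then be passed as `XGBClassifier(scale_pos_weight=weight)`. It is best treated as a starting point to tune rather than a final value.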

What is the relationship between the number of trees and the learning rate in XGBoost?

- The number of trees, also known as estimators, determines how many boosting rounds the model runs, with each round updating its predictions. The learning rate, on the other hand, controls the step size at each update. A smaller learning rate with more trees can lead to a more accurate model but may require more computation time.
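The interplay between step size and number of rounds can be seen in a deliberately simplified toy model. It assumes each "tree" predicts the remaining error perfectly, so only the learning rate limits progress. This is not how real XGBoost trees behave, and the function name, target, and tolerance are illustrative assumptions.

```python
def rounds_to_converge(target, learning_rate, tol=0.01, max_rounds=10_000):
    """Count boosting rounds until the prediction is within tol of the target."""
    prediction = 0.0
    for n in range(1, max_rounds + 1):
        residual = target - prediction           # what is still left to correct
        prediction += learning_rate * residual   # take a scaled step toward the target
        if abs(target - prediction) <= tol:
            return n
    return max_rounds


full_step = rounds_to_converge(100.0, learning_rate=1.0)
half_step = rounds_to_converge(100.0, learning_rate=0.5)
small_step = rounds_to_converge(100.0, learning_rate=0.1)
```

In this toy setting, shrinking the learning rate always increases the number of rounds needed, which mirrors the trade-off described above: gentler steps call for more trees and therefore more computation.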

How does the learning rate in XGBoost relate to the golfing metaphor mentioned in the script?

- In the golfing metaphor, the learning rate is likened to the force with which one hits the golf ball. A lower learning rate is like hitting the ball more gently and consistently, which can prevent overshooting the target, analogous to avoiding overfitting in a model.

What are the trade-offs involved in increasing the number of trees in an XGBoost model?

- Increasing the number of trees in an XGBoost model can lead to a more accurate model as it allows for more iterations to refine predictions. However, it can also increase the computation time and memory usage, potentially leading to longer prediction times.

Why might XGBoost slightly overfit out of the box, and how can this be addressed?

- XGBoost might slightly overfit out of the box due to its powerful learning capabilities and default hyperparameter settings. This can be addressed by tuning hyperparameters such as 'max_depth', 'learning_rate', and 'n_estimators', or by using techniques like cross-validation and regularization.

What is the role of the hyperopt library in tuning XGBoost hyperparameters?

- The hyperopt library can be used to optimize XGBoost hyperparameters. It employs Bayesian modeling to intelligently select hyperparameter values from specified distributions, balancing exploration and exploitation to find optimal model settings.
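A minimal sketch of what such a search might look like follows. The `hyperopt` calls (`fmin`, `hp.quniform`, `hp.loguniform`, `tpe.suggest`, `Trials`, `STATUS_OK`) are part of that library, but `toy_loss` is a hypothetical stand-in for a real cross-validated XGBoost score, and the search ranges are assumptions rather than values from the video.

```python
import math


def toy_loss(params):
    """Hypothetical stand-in for a validation loss; lowest at max_depth=4, learning_rate=0.1."""
    depth_term = (params["max_depth"] - 4) ** 2
    rate_term = (math.log10(params["learning_rate"]) + 1) ** 2
    return depth_term + rate_term


def run_search(max_evals=50):
    """Search the space with hyperopt's TPE algorithm (needs the hyperopt package)."""
    # Imported lazily so toy_loss can be used without hyperopt installed.
    from hyperopt import STATUS_OK, Trials, fmin, hp, tpe

    space = {
        # quniform yields floats in steps of 1, so the value is cast to int below.
        "max_depth": hp.quniform("max_depth", 1, 8, 1),
        # loguniform takes its bounds in log space.
        "learning_rate": hp.loguniform("learning_rate", math.log(0.001), math.log(1.0)),
    }

    def objective(params):
        params = {**params, "max_depth": int(params["max_depth"])}
        return {"loss": toy_loss(params), "status": STATUS_OK}

    trials = Trials()
    best = fmin(fn=objective, space=space, algo=tpe.suggest,
                max_evals=max_evals, trials=trials)
    return best, trials
```

Calling `run_search()` returns the best sampled values along with the trial history. In practice, `toy_loss` would be replaced by a function that trains an XGBoost model with the sampled parameters and returns a validation loss.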

What is stepwise tuning and how can it be used to optimize XGBoost hyperparameters efficiently?

- Stepwise tuning is a method where hyperparameters are adjusted one group at a time, starting with those that have the most significant impact on the model, such as tree structure parameters. This method can save time by focusing on one aspect of the model at a time, potentially reaching a satisfactory model performance with less computational effort.
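The stepwise idea can be sketched in a few lines: search one group of hyperparameters, freeze the best values, then move on to the next group. In the sketch below, `toy_score`, the group contents, and the starting values are all hypothetical. A real run would score each candidate by training and validating an XGBoost model.

```python
from itertools import product


def stepwise_tune(score, groups, base):
    """Tune one group of hyperparameters at a time, keeping earlier winners fixed."""
    best = dict(base)
    for group in groups:
        names = list(group)
        # Every combination of candidate values within this group only.
        candidates = [dict(zip(names, combo))
                      for combo in product(*(group[name] for name in names))]
        winner = min(candidates, key=lambda cand: score({**best, **cand}))
        best.update(winner)
    return best


def toy_score(p):
    """Hypothetical loss surface standing in for a validation metric (lower is better)."""
    return ((p["max_depth"] - 4) ** 2 + (p["min_child_weight"] - 5) ** 2
            + (p["reg_lambda"] - 1) ** 2 + p["reg_alpha"] ** 2
            + 100 * (p["learning_rate"] - 0.1) ** 2)


BASE = {"max_depth": 6, "min_child_weight": 1, "reg_lambda": 1,
        "reg_alpha": 0, "learning_rate": 0.3}
GROUPS = [
    {"max_depth": [2, 4, 6], "min_child_weight": [1, 5, 10]},  # tree structure first
    {"reg_lambda": [0, 1, 10], "reg_alpha": [0, 1]},           # then regularization
    {"learning_rate": [0.3, 0.1, 0.01]},                       # then learning rate
]

tuned = stepwise_tune(toy_score, GROUPS, BASE)
```

This evaluates 9 + 6 + 3 = 18 candidates, whereas a full grid over the same values would need 9 × 6 × 3 = 162. The catch is that interactions between groups can be missed, so the result may not be the global optimum.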
