What is Isomap (Isometric Mapping) in Machine Learning?

Summary

TLDR

Isomap, a machine learning technique for dimensionality reduction, maps complex high-dimensional data into a lower-dimensional space while preserving its intrinsic geometry. It connects each point to its nearest neighbors, measures geodesic distances as shortest paths through that neighborhood network, and then finds a low-dimensional layout that keeps those distances. The effect is like unfolding a crumpled sheet of paper so that distances measured along its surface become visible. Because it captures nonlinear structure, Isomap is a valuable tool for interpreting complex data in fields such as image processing, bioinformatics, and psychology.

Takeaways

- 🧠 Isomap is a technique in machine learning used for dimensionality reduction, simplifying high-dimensional data sets into lower-dimensional ones.

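- 🎯 The primary goal of Isomap is to preserve geodesic distances (the lengths of the shortest paths along the data's underlying surface, rather than straight through the surrounding space) when projecting data from high to lower dimensions.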

- 📜 Isomap's process is likened to unfolding a crumpled piece of paper: once the paper is flat, the distance between two points measured along its surface becomes a straight-line distance.

- 🌐 It captures the intrinsic geometry of the data by creating a neighborhood network where each point connects to its nearest neighbors.

- 🔢 Isomap computes the shortest path through this network between every pair of points; these path lengths approximate the geodesic distances that drive the dimensionality reduction.

- 📉 It uses these geodesic distances to create a lower-dimensional embedding that maintains the original data's geometric relationships.

- 📈 Isomap is particularly effective at capturing nonlinear structures within the data, unlike linear methods such as principal component analysis.

- 🌟 The ability to reduce dimensions while preserving geometric relationships makes Isomap a powerful tool in fields like image processing, bioinformatics, and psychology.

- 🔑 Its ability to handle complex, high-dimensional data with nonlinear structure makes Isomap valuable across many applications.

- 🔍 The technique helps make sense of complex data by uncovering the simpler, lower-dimensional structure that often underlies it.

- 🌱 Isomap offers a conceptually straightforward way to understand and work with high-dimensional data.
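The shortest-path step described in the takeaways can be sketched in a few lines of NumPy. This is a minimal illustration, assuming the neighborhood network is stored as a dense matrix of edge lengths with `np.inf` for missing edges. It uses the Floyd-Warshall algorithm, which is only one way to compute all-pairs shortest paths (library implementations may use Dijkstra's algorithm instead), and the function name `all_pairs_shortest_paths` is hypothetical.

```python
import numpy as np


def all_pairs_shortest_paths(W):
    """Floyd-Warshall on a dense weight matrix (hypothetical helper).

    W[i, j] is the edge length between points i and j, np.inf where there is
    no edge, and 0 on the diagonal. The result holds shortest-path lengths,
    which Isomap treats as estimates of geodesic distances.
    """
    D = np.array(W, dtype=float)
    n = D.shape[0]
    for k in range(n):
        # Allow paths through node k: D[i, j] = min(D[i, j], D[i, k] + D[k, j]).
        D = np.minimum(D, D[:, [k]] + D[[k], :])
    return D


# A small chain 0 - 1 - 2 - 3 with edge lengths 1, 2 and 3.
inf = np.inf
W = np.array([
    [0, 1, inf, inf],
    [1, 0, 2, inf],
    [inf, 2, 0, 3],
    [inf, inf, 3, 0],
])
D = all_pairs_shortest_paths(W)
print(D[0, 3])  # 6.0: the only route is 0 -> 1 -> 2 -> 3
```

Points 0 and 3 have no direct edge, so their distance is the sum of the hops along the chain, just as a geodesic follows the surface instead of cutting across it.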

Q & A

What is Isomap in the context of machine learning?

-Isomap, short for isometric mapping, is a technique used in machine learning for dimensionality reduction. It simplifies high-dimensional data sets into lower-dimensional ones while preserving the intrinsic geometric relationships of the data.

Why is dimensionality reduction necessary in machine learning?

-Dimensionality reduction is necessary because visualizing and analyzing high-dimensional data can be extremely difficult or impossible. It helps in making the data more manageable and comprehensible, and can also improve the performance of machine learning algorithms.

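How does Isomap preserve geodesic distances when projecting data to a lower-dimensional space?

-Isomap first builds a neighborhood network in which each point is connected to its nearest neighbors, with each edge weighted by the distance between its two points. It then computes the shortest path through this network between every pair of points; these path lengths approximate the geodesic distances along the data's underlying surface. Finally, it finds a lower-dimensional embedding whose pairwise distances match those geodesic distances as closely as possible (in the standard algorithm, using classical multidimensional scaling), so the original data's geometric relationships are maintained.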
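Put together, the three steps in this answer fit in a short sketch. It assumes a k-nearest-neighbor network built with scikit-learn's `kneighbors_graph`, SciPy's `shortest_path` with Dijkstra's method for the geodesic step, and classical MDS for the embedding. The function name `isomap`, the choice of 5 neighbors, and the spiral test data are illustrative assumptions, not details from the video.

```python
import numpy as np
from scipy.sparse.csgraph import shortest_path
from sklearn.neighbors import kneighbors_graph


def isomap(X, n_neighbors=5, n_components=1):
    """Minimal Isomap sketch: k-NN graph -> graph shortest paths -> classical MDS."""
    # Step 1: neighborhood network; edges weighted by Euclidean distance.
    G = kneighbors_graph(X, n_neighbors=n_neighbors, mode="distance")
    # Step 2: shortest paths through the network approximate geodesic distances.
    D = shortest_path(G, method="D", directed=False)
    if np.isinf(D).any():
        raise ValueError("neighborhood graph is disconnected; try a larger n_neighbors")
    # Step 3: classical MDS on the geodesic distance matrix.
    n = D.shape[0]
    H = np.eye(n) - np.ones((n, n)) / n          # centering matrix
    B = -0.5 * H @ (D ** 2) @ H                  # double-centered squared distances
    vals, vecs = np.linalg.eigh(B)               # eigenvalues in ascending order
    top = np.argsort(vals)[::-1][:n_components]  # indices of the largest eigenvalues
    return vecs[:, top] * np.sqrt(np.maximum(vals[top], 0.0))


def rank(a):
    """Rank of each entry, used for a Spearman-style correlation."""
    return np.argsort(np.argsort(a))


# Points along a 2D spiral: neighboring windings are close in the plane
# but far apart when measured along the curve.
t = np.linspace(1.5 * np.pi, 4.5 * np.pi, 300)
X = np.column_stack([t * np.cos(t), t * np.sin(t)])
Y = isomap(X, n_neighbors=5, n_components=1)

# Should be close to +1 or -1 (the sign of an MDS axis is arbitrary).
print(np.corrcoef(rank(Y[:, 0]), rank(t))[0, 1])
```

Because the spiral is a one-dimensional curve, a single output coordinate is enough, and it should order the points by their position along the curve even though points on neighboring windings are close in the plane.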

What is the analogy used in the script to explain the concept of Isomap?

-The script compares Isomap to unfolding a crumpled piece of paper. When the paper is crumpled, the straight-line distance between two points on it can be much shorter than the length of the path between them along the paper's surface. Unfolding the paper reveals that path distance, just as Isomap unfolds high-dimensional data to reveal its true distances in a lower-dimensional space.
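To make the analogy concrete, here is a small numeric sketch that assumes a semicircle of radius 1 as a stand-in for the crumpled sheet. It compares the straight-line distance between the two endpoints with the length of the path along the curve, estimated by summing short hops between neighboring sample points, which is the same idea Isomap uses to estimate geodesic distances.

```python
import math

# Sample points along a semicircle of radius 1 (the "crumpled" surface).
n = 200
points = [
    (math.cos(math.pi * i / (n - 1)), math.sin(math.pi * i / (n - 1)))
    for i in range(n)
]

# Straight-line (Euclidean) distance between the endpoints: cuts across the bend.
straight = math.dist(points[0], points[-1])

# Distance along the surface: sum of short hops between neighboring samples.
along_surface = sum(math.dist(points[i], points[i + 1]) for i in range(n - 1))

print(f"straight-line: {straight:.4f}")       # 2.0000 (the diameter)
print(f"along surface: {along_surface:.4f}")  # 3.1416 (close to pi)
```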

What is the difference between Isomap and linear dimensionality reduction methods like PCA?

-Isomap can capture nonlinear structures within the data, which linear methods such as Principal Component Analysis (PCA) cannot, because PCA can only project the data onto flat subspaces. This makes Isomap particularly effective for datasets with complex, nonlinear geometric structures.
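The difference can be checked with scikit-learn on its built-in swiss roll dataset, a flat sheet rolled up in 3D. This sketch assumes scikit-learn is installed and uses its `PCA`, `Isomap` and `make_swiss_roll`; `n_neighbors=10` and the rank-correlation score are illustrative choices, not settings from the video. The score measures how well the first output axis tracks each point's position `t` along the roll.

```python
import numpy as np
from sklearn.datasets import make_swiss_roll
from sklearn.decomposition import PCA
from sklearn.manifold import Isomap

# A swiss roll: a 2D sheet rolled up in 3D; t is each point's position along the roll.
X, t = make_swiss_roll(n_samples=1000, random_state=0)

X_pca = PCA(n_components=2).fit_transform(X)
X_iso = Isomap(n_neighbors=10, n_components=2).fit_transform(X)


def rank_corr(a, b):
    """Spearman-style rank correlation, computed with NumPy only."""
    ra, rb = np.argsort(np.argsort(a)), np.argsort(np.argsort(b))
    return np.corrcoef(ra, rb)[0, 1]


# How well does the first output axis follow the position along the roll?
print("PCA   :", abs(rank_corr(X_pca[:, 0], t)))
print("Isomap:", abs(rank_corr(X_iso[:, 0], t)))
```

Isomap's score should be close to 1 because it unrolls the sheet. PCA can only project along straight directions, so its first axis cuts across the layers of the roll and we would expect a noticeably lower score; the exact values depend on the random sample.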

In which fields can Isomap be applied effectively?

-Isomap can be applied effectively in various fields, including image processing, bioinformatics, and psychology, among others. Its ability to preserve the original data's geometric relationships makes it a powerful tool for analyzing complex, high-dimensional data.

How does Isomap build the neighborhood network for high-dimensional data?

-Isomap builds the neighborhood network by connecting each point in the high-dimensional space to its nearest neighbors, typically either its k nearest neighbors or all points within a fixed radius ε, and weighting each edge by the distance between the two points. This network represents the local structure of the data and is the basis for calculating the geodesic distances.
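The network-building step can be sketched with NumPy alone. This version assumes the k-nearest-neighbor rule (the ε-radius variant would instead connect every pair of points closer than ε), uses Euclidean distances as edge weights, and symmetrizes the result so that an edge exists if either point counts the other as a neighbor. `knn_graph` is a hypothetical helper name; it computes all pairwise distances at once, so it only suits small datasets.

```python
import numpy as np


def knn_graph(X, k):
    """Dense, symmetric k-nearest-neighbor graph (hypothetical helper).

    W[i, j] holds the Euclidean distance between points i and j if either one
    is among the other's k nearest neighbors, np.inf if they are not connected,
    and 0 on the diagonal.
    """
    X = np.asarray(X, dtype=float)
    n = len(X)
    # All pairwise Euclidean distances, shape (n, n).
    dist = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)
    # Exclude each point from its own neighbor list.
    search = dist.copy()
    np.fill_diagonal(search, np.inf)

    W = np.full((n, n), np.inf)
    np.fill_diagonal(W, 0.0)
    for i in range(n):
        for j in np.argsort(search[i])[:k]:
            # Undirected edge weighted by the straight-line distance.
            W[i, j] = W[j, i] = dist[i, j]
    return W


# Four points on a line at positions 0, 1, 3 and 6, each linked to its nearest neighbor.
W = knn_graph([[0.0], [1.0], [3.0], [6.0]], k=1)
print(W)
```

Here the resulting edges are 0-1, 1-2 and 2-3, so the network is a chain; points 0 and 3 are only connected through it.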

What is the final step in the Isomap process after calculating geodesic distances?

-The final step is to use the calculated geodesic distances to construct a lower-dimensional embedding, usually with classical multidimensional scaling (MDS), which places the points so that their pairwise distances match the geodesic distances as closely as possible. This embedding captures the intrinsic geometry of the data and allows the high-dimensional data to be visualized and analyzed in a reduced space.
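For reference, the classical MDS computation commonly used for this final step can be written as follows. Here $d_G(i,j)$ is the geodesic distance between points $i$ and $j$, $n$ is the number of points, $d$ is the target dimension, and $v_k$ is the $k$-th column of $V$; these symbol names are chosen for illustration.

```latex
\begin{aligned}
S_{ij} &= d_G(i,j)^2, \qquad H = I_n - \tfrac{1}{n}\,\mathbf{1}\mathbf{1}^{\top} \\
B &= -\tfrac{1}{2}\, H S H \\
B &= V \Lambda V^{\top}, \qquad \lambda_1 \ge \lambda_2 \ge \dots \ge \lambda_n \\
Y &= \bigl[\, \sqrt{\lambda_1}\, v_1 \;\; \sqrt{\lambda_2}\, v_2 \;\; \cdots \;\; \sqrt{\lambda_d}\, v_d \,\bigr] \in \mathbb{R}^{n \times d}
\end{aligned}
```

Row $i$ of $Y$ gives the low-dimensional coordinates of point $i$. When the top $d$ eigenvalues are positive, $YY^{\top}$ is the positive semidefinite matrix of rank at most $d$ that is closest to $B$ in the Frobenius norm.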

What is the significance of capturing the intrinsic geometry of data in machine learning?

-Capturing the intrinsic geometry of data is significant because it allows machine learning algorithms to understand and work with the complex structures within the data. This can lead to better performance and more accurate results in tasks such as classification, clustering, and visualization.

How does Isomap handle the challenge of visualizing high-dimensional data?

-Isomap addresses the challenge of visualizing high-dimensional data by reducing it to a lower-dimensional space while preserving the geodesic distances. This makes it possible to visualize and analyze the data in a more comprehensible form.

What is the main advantage of Isomap over other dimensionality reduction techniques?

-The main advantage of Isomap is its ability to capture and preserve the nonlinear structures and intrinsic geometric relationships of high-dimensional data, which linear techniques such as PCA struggle to do.

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

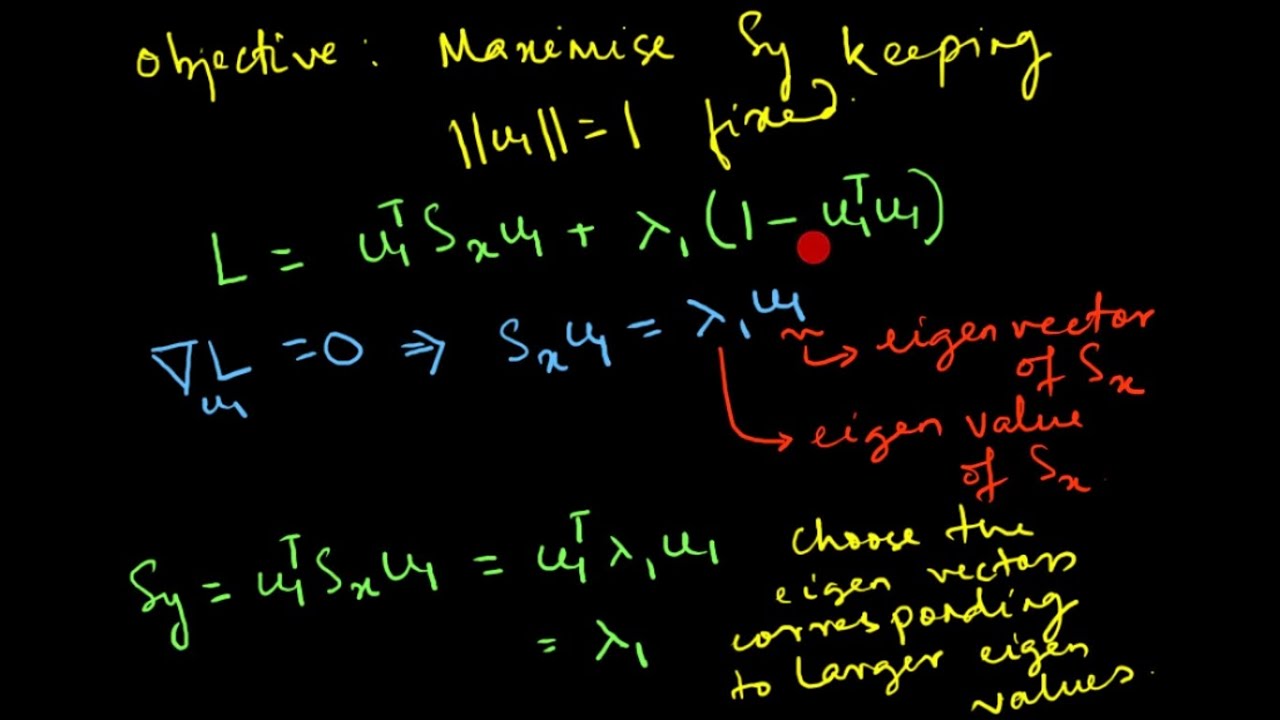

Principal Component Analysis (PCA) : Mathematical Derivation

Practical Intro to NLP 26: Theory - Data Visualization and Dimensionality Reduction

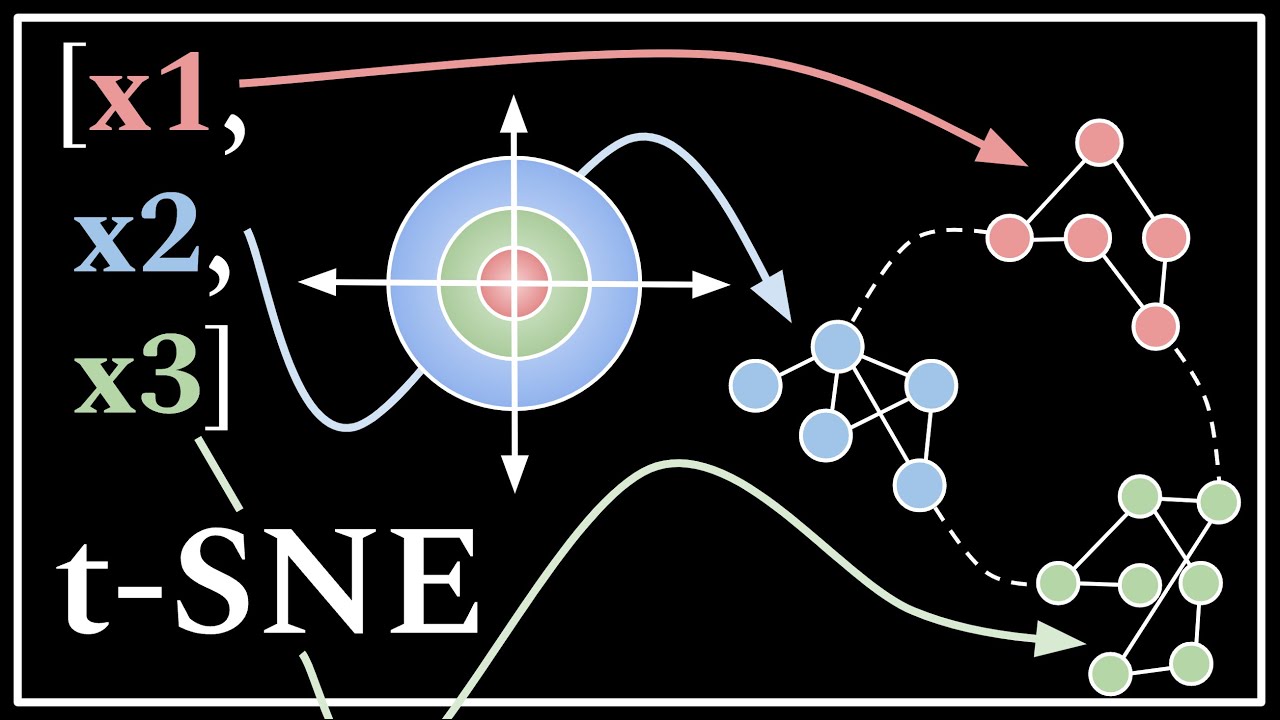

t-SNE Simply Explained

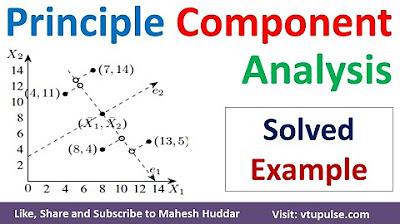

1 Principal Component Analysis | PCA | Dimensionality Reduction in Machine Learning by Mahesh Huddar

#7 Machine Learning Specialization [Course 1, Week 1, Lesson 2]

UMAP: Mathematical Details (clearly explained!!!)

5.0 / 5 (0 votes)