How to Do A/B Testing: 15 Steps for the Perfect Split Test

Summary

TLDR: This video script is a guide to A/B Testing, a tool businesses use to optimize content and improve key performance indicators such as engagement and sales. It walks through the process, from choosing test items and determining sample size to analyzing the data for statistically significant results. The script also covers common mistakes to avoid and stresses testing a single element at a time so that results are reliable. With examples and a mention of HubSpot's free A/B Testing Kit, it offers a practical approach to making data-driven decisions that improve business performance.

Takeaways

- 🔍 A/B Testing is a method to compare two versions of an element to determine which performs better for business goals.

- 📈 It helps optimize content to increase engagement, sales, clickthrough rates, and other key performance indicators.

- 🧪 Think of A/B Testing as a marketing experiment where you split the audience to test different versions of the same element.

- 🎯 Common goals for A/B Testing include increasing website traffic from emails, improving conversion rates, and reducing bounce rates.

- 🛠️ HubSpot offers a free A/B Testing Kit with guides and templates to assist in the testing process.

- 📝 The first step in A/B Testing is choosing the appropriate test items that will impact the identified goal.

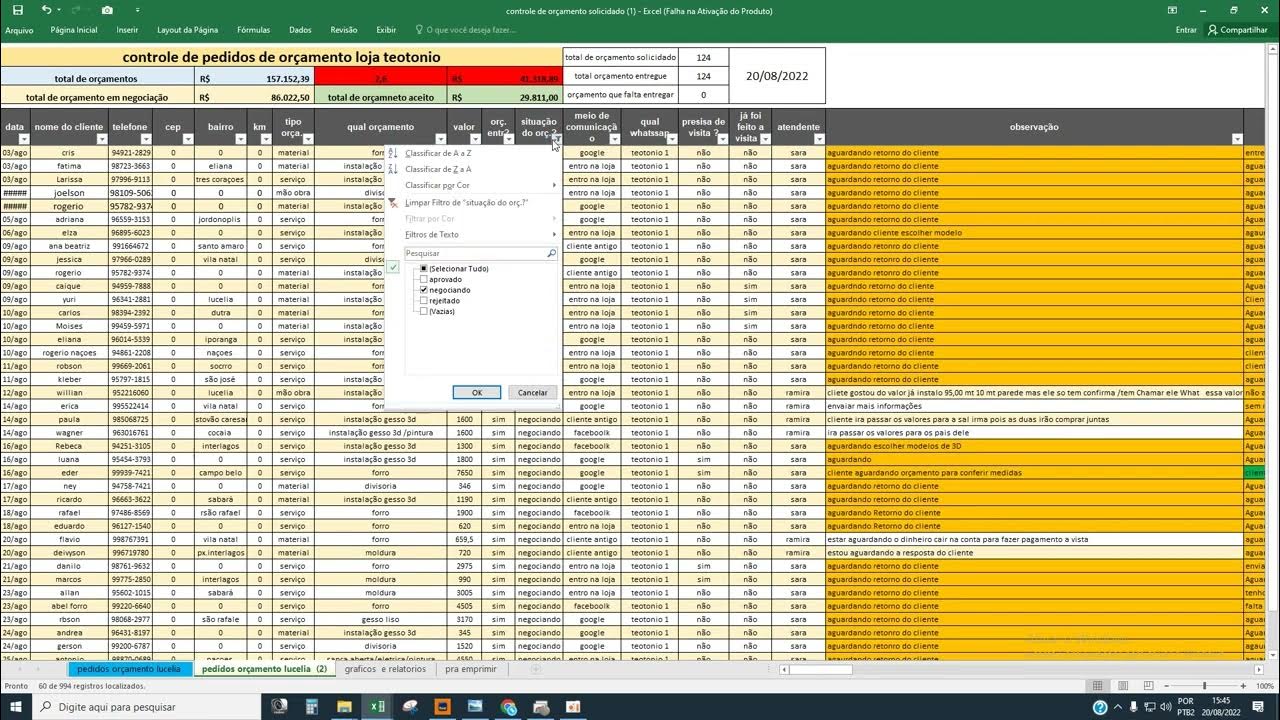

- 📉 Determining a sufficient sample size is crucial so that the results are not skewed and a real difference has enough data to reach statistical significance.

- 🔄 It's important to test only one element at a time to avoid unreliable results and to identify the exact impact of changes.

- 📊 Analyzing the data after the test informs planning and guides changes for future improvements.

- ❗ Common mistakes in A/B Testing include testing more than one variable at once, having a small sample size, and making changes before the test is over.

- 📈 A/B Testing is accessible to businesses of all sizes and offers a data-supported way to find low-cost methods for growth.

Q & A

What is A/B Testing?

-A/B Testing is a marketing experiment where you split your audience to test two different versions of the same element, such as an email subject line, website font, or call to action placement, to determine which version performs better.
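A minimal sketch of how the audience split might work, assuming every user has a stable ID. Testing tools typically handle this split internally; the function `assign_variant` and the experiment name `subject-line-test` are hypothetical and only illustrate the idea of a consistent 50/50 assignment.

```python
import hashlib


def assign_variant(user_id: str, experiment: str = "subject-line-test") -> str:
    """Deterministically place a user in variant "A" or "B" (50/50 split)."""
    # Hashing the experiment name together with the user ID means the same
    # user always sees the same version of this experiment, while a different
    # experiment name produces an independent split.
    key = f"{experiment}:{user_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % 100  # bucket in 0..99
    return "A" if bucket < 50 else "B"


if __name__ == "__main__":
    # Hypothetical audience of 10,000 users.
    users = [f"user-{i}" for i in range(10_000)]
    groups = [assign_variant(u) for u in users]
    print("A:", groups.count("A"), "B:", groups.count("B"))
```

Because the assignment depends only on the hash, no lookup table is needed and a returning visitor keeps seeing the same version for the whole test.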

How does A/B Testing benefit a business?

-A/B Testing benefits a business by helping to better understand customer habits and behaviors, allowing for content optimization that can increase engagement, sales, clickthrough rates, and other key performance indicators.

What is the purpose of testing different elements in A/B Testing?

-The purpose is to determine which version of the tested element performs better at achieving the set goals, such as increasing website traffic or conversion rates, or reducing bounce rates.
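To compare versions on a goal such as conversion rate, each version's rate is computed from its own visitors, and the gap is often expressed as a relative lift. The sketch below assumes a simple conversions-over-visitors definition; the function names and the landing-page numbers are hypothetical.

```python
def conversion_rate(conversions: int, visitors: int) -> float:
    """Share of visitors who completed the goal action (e.g. a sign-up)."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    return conversions / visitors


def relative_lift(rate_a: float, rate_b: float) -> float:
    """Relative change of version B over version A; 0.25 means 25% higher."""
    if rate_a == 0:
        raise ValueError("baseline rate must be non-zero")
    return (rate_b - rate_a) / rate_a


if __name__ == "__main__":
    # Hypothetical results for two landing-page versions.
    rate_a = conversion_rate(200, 5_000)
    rate_b = conversion_rate(250, 5_000)
    print(f"A {rate_a:.2%}, B {rate_b:.2%}, lift {relative_lift(rate_a, rate_b):+.1%}")
```

With these numbers the script prints `A 4.00%, B 5.00%, lift +25.0%`. A higher rate on its own does not make B the winner; the difference still has to be checked for statistical significance.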

What is the importance of sample size in A/B Testing?

-Sample size matters because too few participants can skew the results and leave a real difference unable to reach statistical significance. A larger sample size provides more reliable data for making informed decisions.
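One common way to estimate the required sample size is the normal-approximation formula for comparing two proportions. This is a sketch under stated assumptions: a two-sided test, a significance level of 0.05 and 80% power as defaults, and a hypothetical baseline of 4% that you hope to raise to 5%. It is not necessarily the method HubSpot's calculator uses.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline: float, expected: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Visitors needed in EACH variant to detect baseline -> expected."""
    if not (0 < baseline < 1 and 0 < expected < 1) or baseline == expected:
        raise ValueError("rates must be in (0, 1) and differ from each other")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_beta = NormalDist().inv_cdf(power)           # quantile for desired power
    p_bar = (baseline + expected) / 2              # average of the two rates
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(baseline * (1 - baseline) + expected * (1 - expected))
    ) ** 2
    return math.ceil(numerator / (baseline - expected) ** 2)


if __name__ == "__main__":
    # Hypothetical goal: lift a 4% conversion rate to 5%.
    print(sample_size_per_variant(0.04, 0.05))
```

The formula shows why small improvements are expensive to detect: the required size grows with the inverse square of the difference, so halving the expected gap roughly quadruples the audience you need.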

Why is it important to test only one element at a time in A/B Testing?

-Testing only one element at a time ensures that the results are reliable and that you can accurately determine which specific element affected the outcome. Testing multiple elements at once can yield unclear results, because the effects of the individual changes cannot be separated.

Why does statistical significance matter in A/B Testing?

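-Statistical significance indicates that the observed difference between the versions is unlikely to be due to random chance alone, which gives you confidence that one version is a real winner. The script suggests aiming for a confidence level of 90% or higher; 95% is another common threshold.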
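A standard way to check significance for conversion-style metrics is a two-proportion z-test. In this sketch a confidence level of 90% corresponds to declaring a winner only when the p-value is below 0.10 (0.05 for 95%). The function name and the sample counts are hypothetical, and HubSpot's calculator may use a different method.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Two-sided z-test for a difference in conversion rates; returns (z, p_value)."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Under the null hypothesis both versions share one pooled rate.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # no variation at all (e.g. zero conversions anywhere)
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical results: version A 200/5,000, version B 250/5,000.
    z, p = two_proportion_z_test(200, 5_000, 250, 5_000)
    print(f"z = {z:.2f}, p = {p:.4f}, confidence = {1 - p:.1%}")
```

These hypothetical numbers give a p-value of roughly 0.016, so B would clear both a 90% and a 95% threshold. A significant result still carries some chance of being a false positive, which is one reason to replicate important tests.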

How does A/B Testing apply to an e-commerce business?

-For e-commerce businesses, A/B Testing can help find which product images attract customers or which checkout designs reduce cart abandonment rates, ultimately optimizing the user experience for increased sales.

What is the first step in approaching an A/B Test according to the script?

-The first step is choosing the appropriate test items by listing out elements that will impact the identified goal, such as testing different versions of a landing page for conversion rates.

What is the role of the HubSpot A/B Testing Kit in the A/B Testing process?

-The HubSpot A/B Testing Kit provides a how-to guide and templates for conducting A/B Tests, including tools for calculating sample size and determining statistical significance.

What are some common mistakes to avoid when conducting A/B Tests?

-Common mistakes include testing more than one variable at a time, only testing minor changes, using too small a sample size, making changes before the test is over, and running a test only once without replication.
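Why stopping or changing a test early is risky can be shown with a small simulation. The sketch below runs hypothetical A/A tests, where both versions share the same true conversion rate, so any "winner" is a false positive. It compares checking significance after every batch of users (and stopping at the first significant result) with checking only once at the planned end. All parameters are illustrative assumptions.

```python
import math
import random
from statistics import NormalDist


def p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided two-proportion z-test p-value."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def false_positive_rates(sims=500, n_per_arm=1000, check_every=100,
                         rate=0.10, alpha=0.05, seed=42):
    """Return (rate with repeated peeking, rate with a single final check)."""
    rng = random.Random(seed)
    peeking_hits = 0
    final_hits = 0
    for _ in range(sims):
        conv_a = conv_b = 0
        stopped_early = False
        for i in range(1, n_per_arm + 1):
            # Both versions convert at the same true rate (an A/A test).
            conv_a += int(rng.random() < rate)
            conv_b += int(rng.random() < rate)
            # "Peeking": check after every batch and stop at the first p < alpha.
            if i % check_every == 0 and not stopped_early:
                if p_value(conv_a, i, conv_b, i) < alpha:
                    stopped_early = True
        peeking_hits += stopped_early
        # Disciplined approach: one check once the planned sample is reached.
        if p_value(conv_a, n_per_arm, conv_b, n_per_arm) < alpha:
            final_hits += 1
    return peeking_hits / sims, final_hits / sims


if __name__ == "__main__":
    peek, final = false_positive_rates()
    print(f"false positives with peeking: {peek:.1%}, single final check: {final:.1%}")
```

The single final check stays close to the chosen 5% error rate, while repeated peeking declares noticeably more false winners, even though neither version is actually better.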

How can A/B Testing help in optimizing a business's email strategy?

-A/B Testing can help optimize email strategies by testing different elements such as subject lines, sender names, email formats, layout, and timing to determine what increases open rates and clickthrough rates.

What is the recommended approach for analyzing A/B Test results?

-The recommended approach is to focus on the goal metric, use tools like the HubSpot A/B testing calculator to determine statistical significance, and make decisions based on clear winners.
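One way to turn "decide based on clear winners" into a rule is to compute a confidence interval for the difference in the goal metric and only pick a version when the interval excludes zero. This sketch uses the simple Wald interval for a difference of two proportions; the decision rule, function names, and numbers are illustrative assumptions rather than the method of any specific calculator.

```python
import math
from statistics import NormalDist


def difference_ci(conv_a: int, n_a: int, conv_b: int, n_b: int,
                  confidence: float = 0.95):
    """Wald confidence interval for (rate_b - rate_a)."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    diff = p_b - p_a
    return diff - z * se, diff + z * se


def pick_winner(conv_a: int, n_a: int, conv_b: int, n_b: int,
                confidence: float = 0.95) -> str:
    """Name a winner only when the interval lies entirely on one side of zero."""
    low, high = difference_ci(conv_a, n_a, conv_b, n_b, confidence)
    if low > 0:
        return "B"
    if high < 0:
        return "A"
    return "no clear winner"


if __name__ == "__main__":
    # Hypothetical goal-metric results for two versions.
    print(pick_winner(200, 5_000, 250, 5_000))
    print(pick_winner(200, 5_000, 205, 5_000))
```

Reporting the interval alongside the decision also shows how large the improvement plausibly is, which helps when planning the next test.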
