How Far Can We Scale AI? Gen 3, Claude 3.5 Sonnet and AI Hype

Summary

TLDR: The script discusses the rapid advancements in AI video generation, exemplified by models like Runway Gen 3 and the anticipated Sora from OpenAI. It raises questions about the reliability of AI leaders and the scalability of language models, highlighting Claude 3.5 Sonnet's capabilities and the incremental nature of recent improvements in AI. It mentions the potential for AI in fields like drug discovery, with a cautionary note on separating AI hype from reality and on the unpredictable impact of scaling and new research.

Takeaways

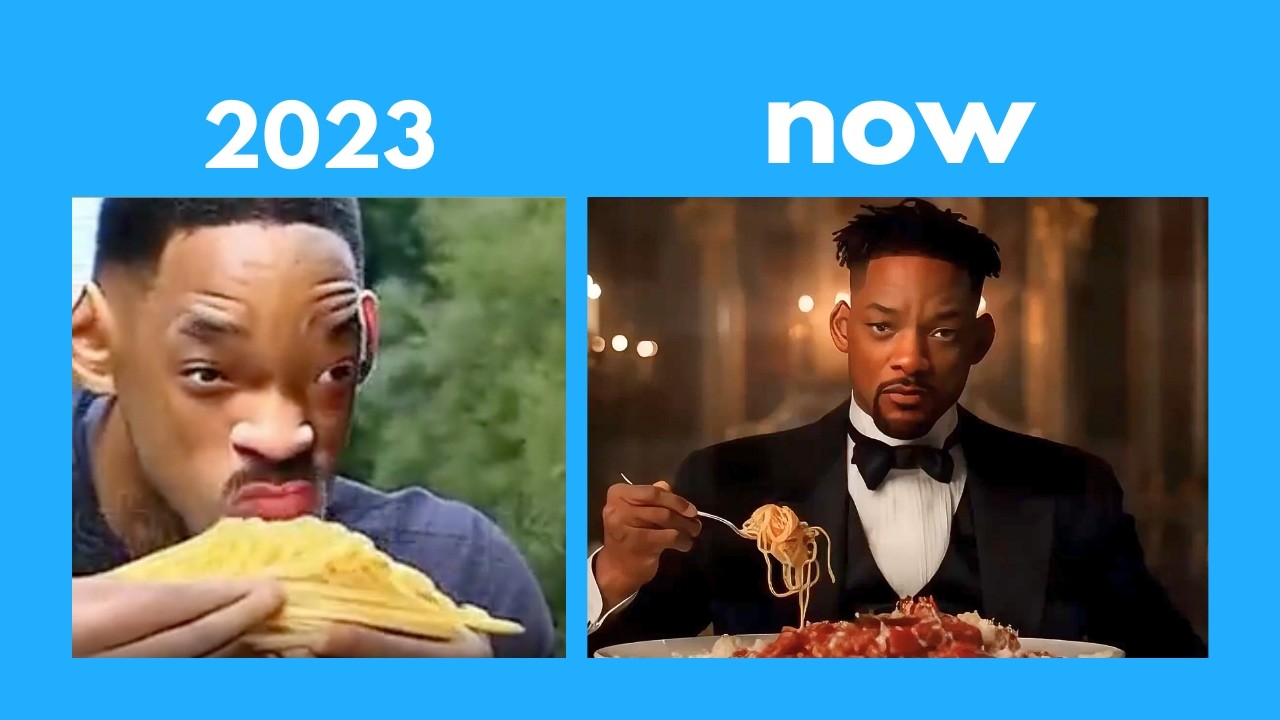

- 🌐 AI video generation is rapidly advancing and becoming more accessible, with models like Runway Gen 3 and Sora promising highly realistic outputs despite training on a small fraction of available video data.

- 🎥 The Luma Dream Machine offers an engaging way to experiment with AI-generated video, allowing users to generate new clips or interpolate between two images.

- 📈 There is skepticism about the continuous scaling of language models, with concerns about whether increased scale will necessarily lead to more accurate or reliable AI.

- 🤖 The release of advanced voice features, such as OpenAI's real-time advanced voice mode, has been delayed to improve the model's ability to detect and refuse certain content.

- 📊 Benchmark results for AI models like Claude 3.5 Sonnet show improvements with increased compute, but the gains are incremental and not proportional to the increase in scale.

- 🧠 The potential of AI in fields like biology and drug discovery is being discussed, with some suggesting AI could accelerate the rate of discoveries, though this is speculative.

- 🔍 There is a call for caution in interpreting benchmark results and a recognition that AI models still struggle with basic tasks, indicating that scale alone may not solve all issues.

- 📚 The script highlights the importance of metacognition in AI development, suggesting that understanding how to think about problems is as crucial as scaling computational power.

- 🤝 Open source AI models are seen as a way to encourage innovation and allow for diverse applications, contrasting with the idea of a single 'true AI'.

- 🚧 There is acknowledgment from AI leaders that the field is moving fast, and there is a need to ensure that understanding keeps pace with the capabilities of AI models.

- 🔮 Predictions about the future of AI are made with caution, acknowledging the many unknowns and the difficulty in forecasting exact outcomes or timelines for AI advancements.

Q & A

What is the current state of AI video generation technology?

-AI video generation technology is rapidly advancing, with models such as Runway Gen 3 becoming more accessible and generating increasingly realistic outputs. However, these models are likely trained on less than 1% of available video data, indicating that future generations could become even more realistic in a relatively short time.

What is the significance of the Luma Dream Machine in AI video generation?

-The Luma Dream Machine is an AI video generation tool that lets users create new clips or interpolate between two real images. It offers a fun, engaging way to experiment with AI-generated visuals while waiting for the release of more advanced models.

What is the status of the video generation model called Sora from OpenAI?

-Sora is a highly anticipated video generation model from OpenAI and is considered the most promising in its field. However, it has not yet been publicly released, and comparisons with models like Runway Gen 3 suggest it may benefit from larger-scale training and more compute.

Why is the release of the real-time advanced voice mode from OpenAI delayed?

-The release of OpenAI's real-time advanced voice mode has been delayed to improve the model's ability to detect and refuse certain content. The delay also reflects concerns about the model occasionally producing inaccurate or unreliable outputs.

What are some of the limitations of scaling AI models?

-While scaling AI models can lead to improvements, it does not necessarily solve all problems. For example, even with more data and compute, models may still struggle with basic tasks or produce hallucinations in language generation. The hope is that scaling will eventually lead to more accurate and reliable AI, but there is skepticism about whether this will fully address current limitations.

What is the current performance of Claude 3.5 Sonnet from Anthropic compared to other language models?

-Claude 3.5 Sonnet is a language model from Anthropic that is free to use, fast, and in certain domains more capable than comparable language models. It shows improvements in basic mathematical ability and general knowledge compared to models like OpenAI's GPT-4o and Google's Gemini 1.5 Pro, but the differences are smaller than the scale of compute might suggest.

What is the concept of 'metacognition' in the context of AI development?

-Metacognition in AI refers to the ability of models to understand how to think about a problem in a broad sense, to assess the importance of an answer, and to use external tools to check their answers. It represents a significant frontier in AI development, moving beyond simple scaling to more human-like thinking processes.

What are some of the challenges in accurately assessing the capabilities of AI models?

-Assessing the capabilities of AI models is challenging due to the limitations and flaws in benchmark tests, which may not accurately reflect real-world performance. Additionally, models may struggle with basic tasks despite high scores on benchmarks, indicating a need for more nuanced evaluation methods.

How do AI lab leaders view the potential of AI in fields like biology and drug discovery?

-Some AI lab leaders, such as those from Anthropic, see the potential for AI to significantly impact fields like biology and drug discovery, possibly leading to new discoveries and even cures for diseases. However, these views are sometimes seen as overly optimistic or 'hype' by others in the industry.

What is the current sentiment regarding the hype around AI capabilities and scaling?

-There is a growing sentiment that the hype around AI capabilities and scaling may have gone too far, with some industry insiders expressing skepticism about the pace of progress and the reliability of benchmark results. The challenge is to separate hype from reality and to manage expectations about what AI can and cannot do.

What are the potential future developments in AI model scaling and algorithmic improvements?

-Future developments in AI are expected to include scaling to models that cost billions of dollars to train, along with continued improvements in algorithms and chip technology. If these advancements continue at their current pace, AI models could, by 2025-2027, surpass human capabilities in many areas.
