Elon Musk CHANGES AGI Deadline..Googles Stunning New AI TOOL, Realistic Text To Video, and More

Summary

TLDR: The video script discusses pivotal developments in AI, highlighting Google DeepMind's advancements in video-to-audio technology, creating realistic soundtracks for silent clips. It also touches on Google's shift from research to AI product development, facing challenges like researcher attrition. The script explores AI's role in content creation with TikTok's Symphony, Meta's open-source models, and Runway's Gen 3 Alpha for photorealistic video generation. It concludes with Hedra's character generation and Elon Musk's vision for Tesla's AI advancements, predicting AGI by 2026.

Takeaways

- 🧠 Google DeepMind's video-to-audio generative technology can add synchronized sound effects to silent video clips, enhancing the viewing experience.

- 🎥 Google showcased the capabilities of their AI model with examples including a wolf howling at the moon and a drummer on stage, demonstrating high-quality audio-video synchronization.

- 🔄 Google's AI advancements have led to a shift from a research lab to an AI product factory, indicating a strategic move towards commercializing their AI technologies.

- 💡 Google is facing internal challenges with a 'brain drain' as talented researchers leave for other companies, seeking faster product development and deployment.

- 📈 TikTok introduces Symphony, an AI suite designed to enhance content creation by blending human creativity with AI efficiency, aiming to streamline the video production process.

- 🌐 Meta contributes to the open-source community by releasing numerous AI models and datasets, fostering innovation and collaboration in the AI field.

- 🔊 Meta's AudioSeal is a new technique for watermarking audio segments, potentially identifying AI-generated speech within longer audio clips.

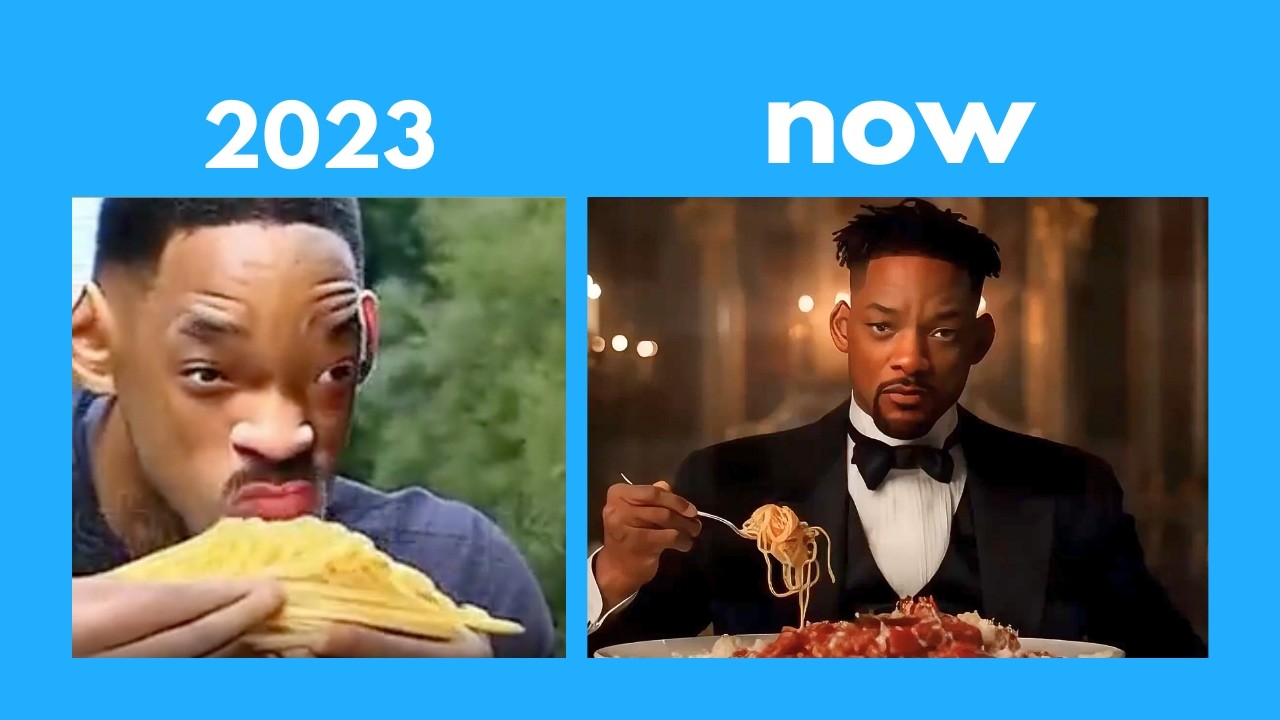

- 🎨 Runway introduces Gen 3 Alpha, a text-to-video model that generates highly realistic videos, including photorealistic humans, pushing the boundaries of AI-generated content.

- 🤖 Hedra Labs' Character One is a foundation model for headshot generation that can create emotionally reactive characters, opening new possibilities for AI in storytelling and acting.

- 🚗 Elon Musk discusses Tesla's future with AI, including the integration of advanced AI systems into vehicles and the potential for AGI (Artificial General Intelligence) by 2026.

- 🤖 The development of AI humanoid robots like Tesla's Optimus is expected to bring a level of abundance in goods and services, potentially transforming various industries.

Q & A

What is the main update from Google DeepMind regarding video-to-audio generative technology?

-Google DeepMind has shared progress on its video-to-audio generative technology, which can add sound effects to silent video clips, matching the acoustics of the scene and accompanying the onscreen action.

Can you provide examples of the audio effects generated by Google's new model?

-Examples include a wolf howling at the moon, a slow mellow harmonica playing as the sun sets on the prairie, jellyfish pulsating underwater, and a drummer on stage at a concert with flashing lights and a cheering crowd.

How does Google's generative audio technology work?

-Google's technology uses video pixels and text prompts to generate rich soundtracks, allowing it to create audio that matches the video content effectively.

What is the significance of the drummer on stage example in Google's demonstration?

-The drummer on stage example is significant because it shows the model's ability to sync the audio hits with the actual onscreen actions, indicating a high level of coordination between visual and audio elements.

What does Google's recent shift from a research lab to an AI product factory involve?

-Google has made a major shift from focusing on research to producing AI products, which reflects a change in strategy from foundational research to commercializing their breakthroughs.

Why have some researchers left Google DeepMind?

-Some researchers have left Google DeepMind due to frustrations with the company's pace of product release and a perceived lack of focus on shipping features to the masses.

What is TikTok's Symphony, and how does it help content creators?

-Symphony is TikTok's new creative AI suite designed to elevate content creation by blending human imagination with AI-powered efficiency, helping users make better videos and analyze trends for effective content ideas.

What is Meta's contribution to the open-source AI community?

-Meta has released a large number of open models and datasets, including multi-token prediction models, Meta Chameleon for image and text reasoning, Meta AudioSeal for audio watermarking, and Prism for diverse geographic and cultural features.

What is Runway's Gen 3 Alpha, and how does it advance video generation?

-Runway's Gen 3 Alpha is a text-to-video model trained on new infrastructure for large-scale multimodal training, offering highly realistic video generation, especially with photorealistic humans.

What is Hedra Labs' Character One, and what does it enable?

-Hedra Labs' Character One is a foundation model that enables reliable headshot generation and storytelling with emotionally reactive characters, allowing next-level content creation.

What are Elon Musk's predictions regarding Tesla's AI advancements?

-Elon Musk predicts that Tesla will achieve AGI, or Artificial General Intelligence, by 2026 at the latest, enabling humanoid robots to perform a wide range of tasks with a high level of intelligence.
