Directional Motion Detection and Tracking using Webcam in TouchDesigner #touchdesigner #tutorial

Summary

TLDR: In this video, Dan demonstrates how to use optical flow with a webcam to detect movement direction, creating CHOP channels that can trigger events based on left, right, up, and down movements. This technique suits interactive installations or controlling visuals without dedicated sensors. Dan guides viewers through the process, from setting up the optical flow to refining the output into a clean direction signal, and suggests adjusting parameters based on lighting conditions. The tutorial also promotes Dan's Patreon for additional resources and components.

Takeaways

- 🎥 Dan demonstrates how to use a webcam with optical flow to track movement and trigger events in TouchDesigner.

- 👐 The process does not require special sensors, making it accessible for those without them.

- 🔍 Optical flow is used to determine the direction of movement for each pixel in the webcam's incoming texture.

- 📏 Left-right movement is isolated by using a reorder node to keep only the red channel.

- ↔️ A threshold node is used to separate the movement into positive (right) and negative (left) directions.

- 🔢 Analyze nodes are utilized to count the number of pixels moving in each direction, which is then summed up.

- 📉 To reduce noise, a trail node is introduced to smooth out the data, making the movement detection more reliable.

- 📊 A Logic CHOP is used to define bounds that determine when the movement is significant enough to trigger an action.

- 🔄 The final direction is determined by comparing the count of positive and negative pixels, with a sign function used to simplify the output to -1 or 1.

- 🛠️ The setup can be adjusted with parameters such as the optical flow threshold and bounds to optimize performance under different lighting conditions.

- 🔄 The same method can be applied to the green channel to detect up and down movements, with the final output being a combination of both directional movements.
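The counting step in the takeaways above can be sketched outside TouchDesigner in plain Python. This is only an illustration under assumptions: the flow channel is represented as a nested list of signed values, and the names `count_directions`, `net_direction`, and the `threshold` parameter are hypothetical, not operators or parameters from the video.

```python
def count_directions(channel, threshold=0.0):
    """Count pixels moving in the positive and negative direction.

    `channel` is a 2D list of signed flow values (for example the red
    channel for left-right motion). Values within +/- threshold are ignored.
    """
    positive = 0
    negative = 0
    for row in channel:
        for value in row:
            if value > threshold:
                positive += 1
            elif value < -threshold:
                negative += 1
    return positive, negative


def net_direction(channel, threshold=0.0):
    """Positive count minus negative count; the sign gives the direction."""
    positive, negative = count_directions(channel, threshold)
    return positive - negative


# Tiny made-up 2x3 "red channel" with mostly rightward motion.
red = [[0.4, 0.2, -0.1],
       [0.3, 0.0, 0.5]]
horizontal = net_direction(red, threshold=0.05)
```

Running the same function on the green channel would give the up-down value, so the two results together form the combined direction output.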

Q & A

What is the main purpose of the component being built in the video?

-The main purpose of the component is to detect movement direction using optical flow from a webcam input and generate CHOP channels that can trigger events based on the movement direction.

What is Optical Flow and how does it help in this context?

-Optical Flow is a method that determines the direction of movement for each pixel of an incoming texture. It helps by providing the direction of movement for the objects within the webcam's view, which can be used to trigger events or control visuals.

How can the optical flow component be accessed in the video?

-The optical flow component can be accessed by going to the tools palette, pressing Ctrl+B, and selecting 'Optical Flow' from the list.

What does the Optical Flow component provide in terms of movement detection?

-The Optical Flow component provides the direction of movement for each pixel, encoded in red and green channels, where red indicates left-right movement and green indicates up-down movement.

How does the video script describe the process of isolating movement direction?

-The script describes isolating the movement direction by using a reorder node to focus on the red channel for left-right movement, applying a threshold to separate negative and positive pixel values indicating direction, and then analyzing the count of these pixels to determine the overall movement direction.

What is the purpose of the threshold node in the script?

-The threshold node is used to filter out pixels based on their movement direction, separating the pixels that indicate movement to the left (negative values) from those indicating movement to the right (positive values).

How are the counts of positive and negative pixels used to determine the overall movement direction?

-The counts of positive and negative pixels are converted to CHOP data, and the negative count is subtracted from the positive count. A positive result suggests rightward movement and a negative result suggests leftward movement.

What is the role of the trail node in the script?

-The trail node is used to smooth out the data and reduce noise, making the movement direction signal more reliable and easier to interpret.
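As a rough illustration of why smoothing helps, the sketch below averages the most recent samples of a noisy signal. A moving average is an assumption chosen for simplicity; it is not necessarily how the node in the video processes the data, and `MovingAverage` and `window` are hypothetical names.

```python
from collections import deque


class MovingAverage:
    """Average of the last `window` samples of a stream."""

    def __init__(self, window=5):
        # deque with maxlen drops the oldest sample automatically
        self.samples = deque(maxlen=window)

    def update(self, value):
        """Add a new sample and return the current average."""
        self.samples.append(value)
        return sum(self.samples) / len(self.samples)


# A single noisy spike is spread out and fades instead of flipping the output.
smoother = MovingAverage(window=3)
smoothed = [smoother.update(v) for v in [3, 0, 0, 0]]
```

A larger window gives a steadier signal at the cost of a slower response to real movement.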

How can the final direction signal be cleaned up for a more reliable output?

-The final direction signal can be cleaned up by using a Logic CHOP to define bounds that filter out noise, and by applying a blur node to smooth the data before processing.

What is the significance of the sign function in determining the final direction?

-The sign function converts the direction signal into a simple -1 or 1 value, where -1 represents leftward movement and 1 represents rightward movement, simplifying the output for use in controlling visuals or triggering events.
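The bounds check and the reduction to -1 or 1 described in the last two answers can be combined in a few lines. This is a minimal sketch, assuming the bounds are symmetric around zero; the function name `direction` and the default `bound` value are made up for the example and should be tuned like the bounds in the video.

```python
def direction(value, bound=2.0):
    """Return -1 (left), 1 (right), or 0 when the signal is inside the bounds.

    Values with magnitude below `bound` are treated as noise.
    """
    if abs(value) < bound:
        return 0
    return 1 if value > 0 else -1
```

For example, `direction(3.5)` reports rightward movement, `direction(-4)` reports leftward movement, and `direction(1.0)` is ignored as noise.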

How can the optical flow threshold be adjusted to improve the component's performance?

-The optical flow threshold can be adjusted to exclude minor movements or to focus on more significant movement, helping to reduce noise and improve the reliability of the direction signal.

What is the suggested method for controlling the circle's movement in the video?

-The circle's movement is controlled by using a Speed CHOP with its range limited to between -0.5 and 0.5, so the direction signal moves the circle left or right based on the detected movement.
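Conceptually, this step accumulates the direction signal over time and clamps the result. The sketch below shows that idea in plain Python; `integrate`, `rate`, and `dt` are hypothetical names, and only the -0.5 to 0.5 range comes from the video summary.

```python
def integrate(position, direction, rate, dt, lower=-0.5, upper=0.5):
    """Advance `position` by direction * rate * dt and clamp it to the range."""
    position += direction * rate * dt
    return max(lower, min(upper, position))


# Move right for ten steps: the position stops at the upper limit.
x = 0.0
for _ in range(10):
    x = integrate(x, direction=1, rate=1.0, dt=0.1)
right_limit = x

# Then move left for twenty steps: the position stops at the lower limit.
for _ in range(20):
    x = integrate(x, direction=-1, rate=1.0, dt=0.1)
left_limit = x
```

Clamping keeps the circle on screen no matter how long movement continues in one direction.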

How can the component's parameters be customized for different lighting conditions?

-The parameters that may need to be adjusted for different lighting conditions include the optical flow threshold, the bounds for noise filtering, and potentially the lambda value in the blur node, although its specific function is not detailed in the script.

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

Playing an Audio File - MetaSound Tutorials for Unreal Engine 5

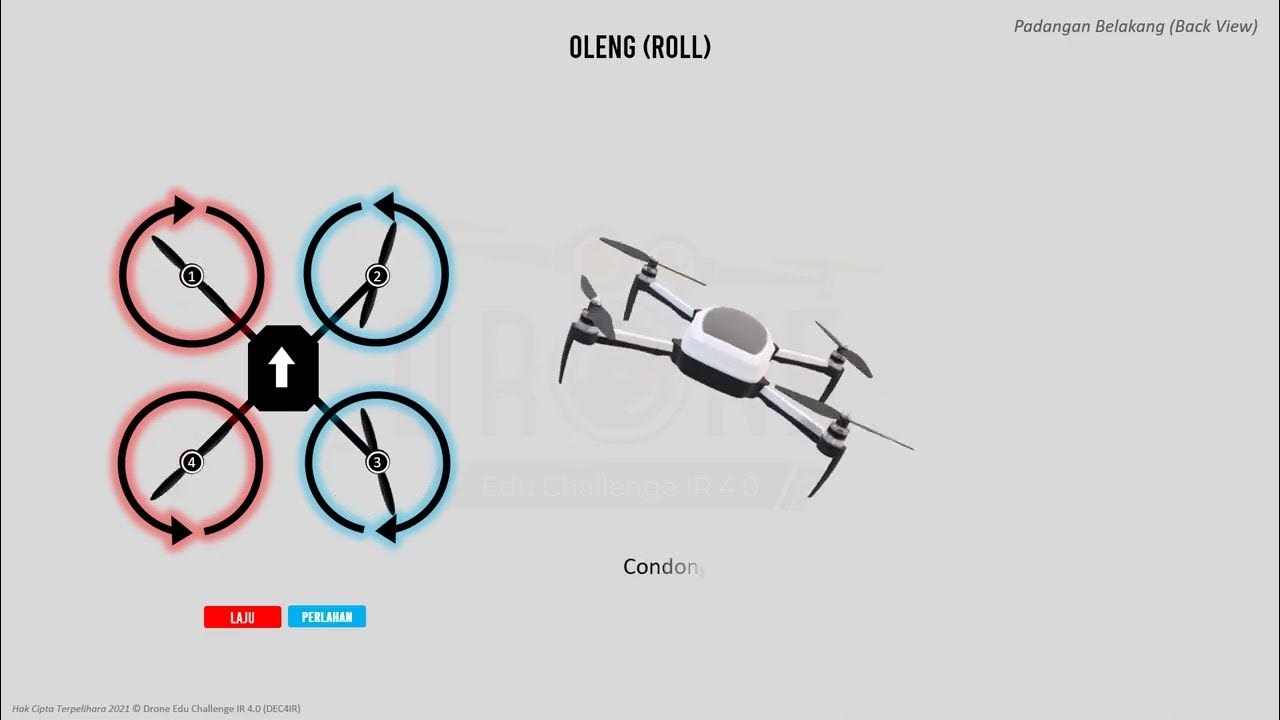

MODUL 03 (SEK. MENENGAH) - PRINSIP PENERBANGAN DRON

10 GERAKAN DASAR TARI BALI YANG HARUS DIKUASAI

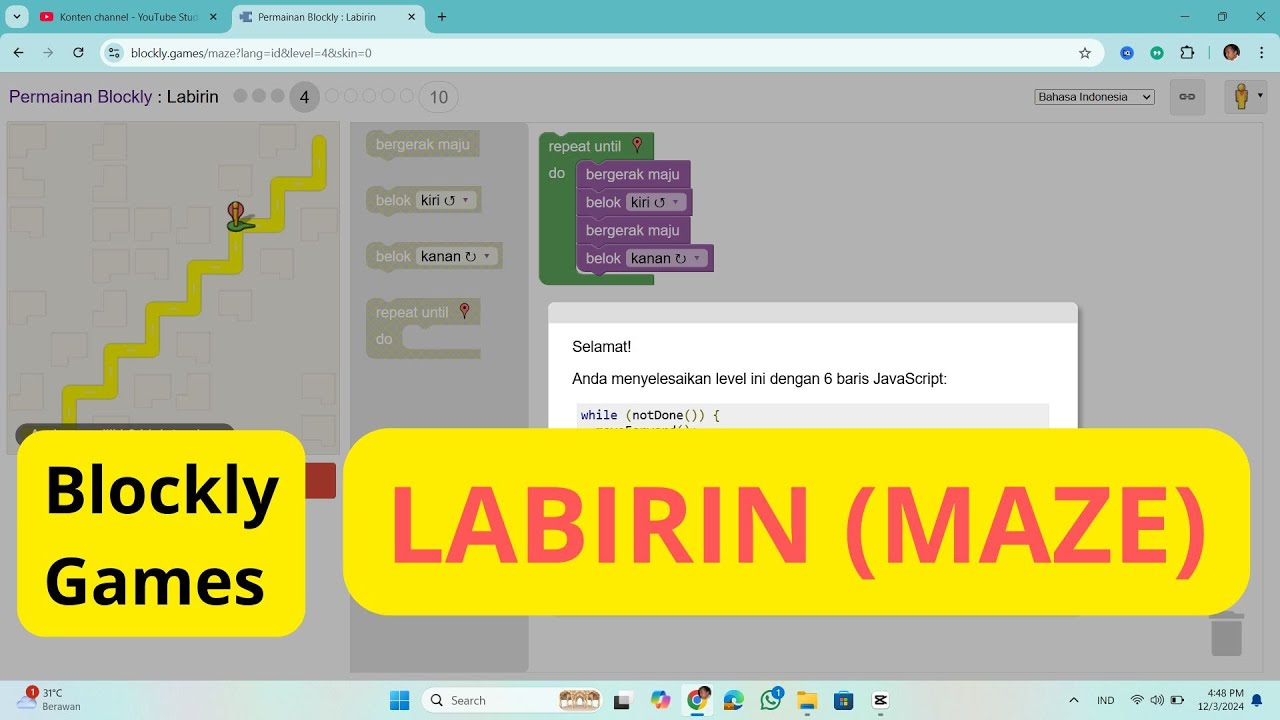

Blockly Games #2 : Belajar Coding untuk Anak SD SMP - Labirin

Time & Price Algorithmic Trading: Order Flow

Simulating A Brute Force Attack & Investigating With Microsoft Sentinel

5.0 / 5 (0 votes)