DQN | Deep Q-Network (DQN) Architecture | DQN Explained

Summary

TLDRDeep Q Networks (DQN) combine Q-learning with deep neural networks, enabling reinforcement learning to tackle complex tasks with high-dimensional state spaces. DQN's key components include neural networks for predicting Q-values, experience replay to reuse past interactions, and target networks for stable learning. With advancements like Double DQN, Dueling DQN, and Prioritized Experience Replay, DQN offers more efficient and stable training. Experience replay boosts sample efficiency and reduces instability, while target networks stabilize training and improve convergence. This combination makes DQN a powerful tool for solving dynamic problems, from gaming to robotics.

Takeaways

- 😀 DQN combines traditional Q-learning with deep neural networks to handle complex environments, such as video games and robot control.

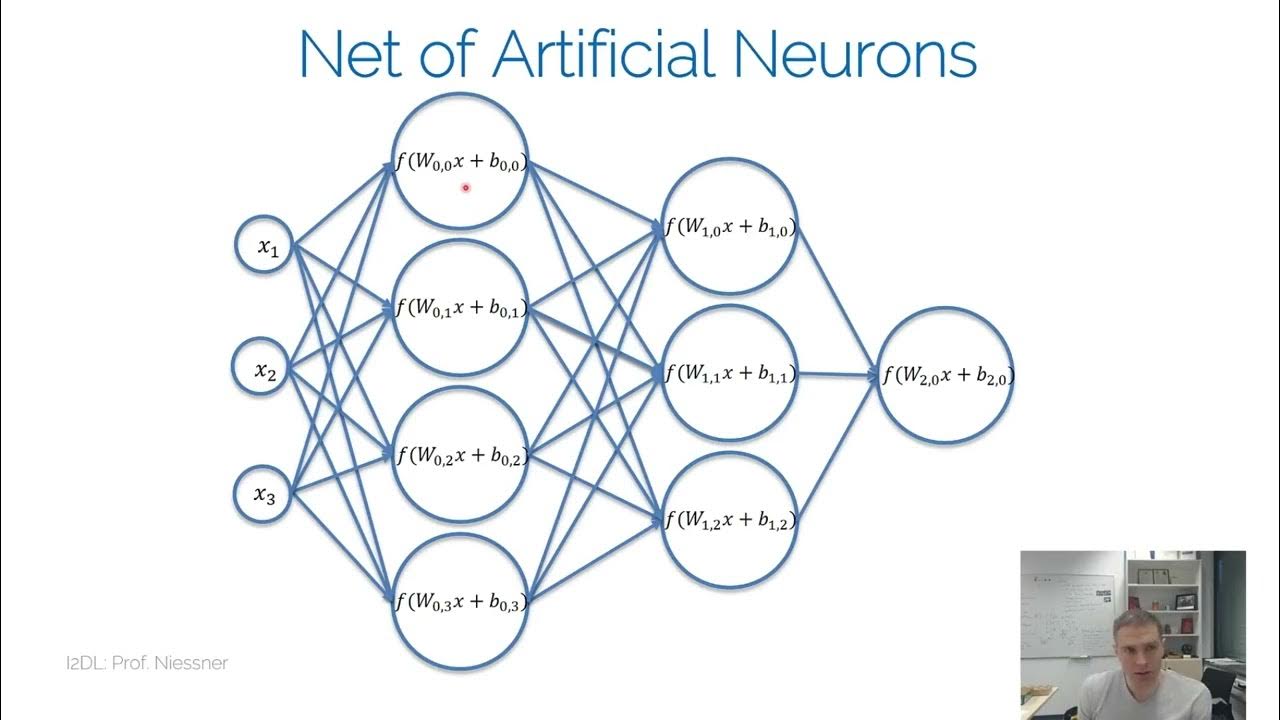

- 😀 The core of DQN is a neural network that outputs Q-values for each possible action based on the current state.

- 😀 Experience replay stores the agent's past experiences (state, action, reward, next state) in a buffer for future learning, enhancing training efficiency.

- 😀 DQN uses a target network to provide stable Q-value targets, updated less frequently to reduce instability during training.

- 😀 The DQN process involves interacting with the environment, storing experiences, training the neural network, and improving the policy over time.

- 😀 One of the key benefits of DQN is its ability to learn directly from raw data (e.g., images) without manual feature extraction.

- 😀 Experience replay increases sample efficiency by allowing the agent to learn from both past and new experiences.

- 😀 Experience replay helps break temporal correlations in data, making the training process more stable and ensuring diverse learning.

- 😀 The target network stabilizes the learning process by decoupling the target value calculation from the Q-network's updates, preventing oscillations.

- 😀 Double DQN, Dueling DQN, and Prioritized Experience Replay are advanced versions of DQN that address overestimation, action value splitting, and prioritize more informative experiences respectively.

- 😀 In summary, DQN integrates deep learning with reinforcement learning techniques to solve complex, high-dimensional tasks more efficiently and stably.

Q & A

What is a Deep Q Network (DQN)?

-A Deep Q Network (DQN) is a reinforcement learning algorithm that combines traditional Q-learning with deep neural networks, enabling it to handle complex, high-dimensional tasks like video games or robot control.

How does DQN process raw data like images?

-DQN processes raw data like images using convolutional layers that extract features and fully connected layers that output Q-values for each possible action.

What role does experience replay play in DQN?

-Experience replay stores past experiences (state-action-reward-next state tuples) in a memory buffer. It allows the agent to reuse these experiences for training, improving efficiency, reducing instability, and breaking temporal correlations.

How does the target network in DQN help stabilize training?

-The target network in DQN is a copy of the main network that is updated less frequently. It provides stable Q-value targets, reducing instability and preventing wild oscillations during training.

What is the epsilon-greedy policy in DQN?

-The epsilon-greedy policy is used to balance exploration and exploitation. With probability epsilon, the agent explores by choosing a random action, and with probability 1-epsilon, it exploits its knowledge by selecting the action with the highest predicted Q-value.

How does DQN minimize the temporal difference error?

-DQN minimizes the temporal difference (TD) error by computing the difference between the predicted Q-value and the target Q-value, which is derived using the Bellman equation. This error is then minimized during training.

What are the main advantages of using experience replay?

-Experience replay improves sample efficiency, breaks temporal correlations, stabilizes learning, mitigates catastrophic forgetting, improves generalization, and facilitates mini-batch updates, making learning faster and more efficient.

How does Double DQN improve upon the original DQN?

-Double DQN addresses the problem of Q-value overestimation by separating action selection from value estimation, ensuring that the selected action is evaluated using a different network, which results in more accurate Q-values.

What is the purpose of the dueling architecture in Dueling DQN?

-In Dueling DQN, the network is split into two parts: one estimates the overall value of a state, and the other estimates the relative value of actions. This helps the agent focus on important actions and improves decision-making efficiency.

What is prioritized experience replay and why is it useful?

-Prioritized experience replay prioritizes experiences based on how much they deviate from current predictions, ensuring that the agent learns more from experiences with higher learning potential, leading to faster and more effective training.

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade Now5.0 / 5 (0 votes)