Topic 3D - Multilayer Neural Networks

Summary

TLDR: This video introduces multi-layer neural networks, building on the basics of perceptrons. These networks consist of an input layer, one or more hidden layers, and an output layer, and inputs pass forward through each layer to compute the result. Unlike in a single perceptron, the desired outputs of the hidden layers are not directly observable, which makes them a 'black box.' The video walks through training with the perceptron learning rule, showing how weights are adjusted based on errors. It also highlights the advantage of solving complex, non-linear problems and the challenge of higher computational demands during training.

Takeaways

- 😀 Multi-layer neural networks consist of multiple layers: input, hidden, and output layers, which are crucial for solving complex tasks.

- 😀 Hidden layers in a multi-layer network act as 'black boxes' because the training data gives no desired outputs for them, so their internal behavior cannot be observed directly.

- 😀 Unlike a perceptron, which can only handle linearly separable problems, multi-layer networks can learn complex, non-linear patterns.

- 😀 A multi-layer neural network can have multiple hidden layers, with commercial networks sometimes utilizing three or more hidden layers.

- 😀 Each layer in a multi-layer network contains computational neurons that receive and process inputs via weighted connections.

- 😀 The training process involves computing the output of every neuron, comparing the output layer's results with the desired outputs, and adjusting the weights through an error-correction process.

- 😀 Training involves adjusting weights based on the difference between the actual and desired output, with a learning rate influencing the magnitude of these adjustments.

- 😀 The worked example in the video shows how input data is processed, how errors are calculated, and how weights are updated over successive iterations to reduce those errors.

- 😀 The main advantage of multi-layer neural networks is their ability to solve non-linearly separable problems, expanding their application potential.

- 😀 One disadvantage of multi-layer networks is the long training time required, as more layers and neurons increase the computational complexity.

- 😀 The higher computational power required by multi-layer networks compared to single-layer perceptrons is a significant challenge in practical applications.

Q & A

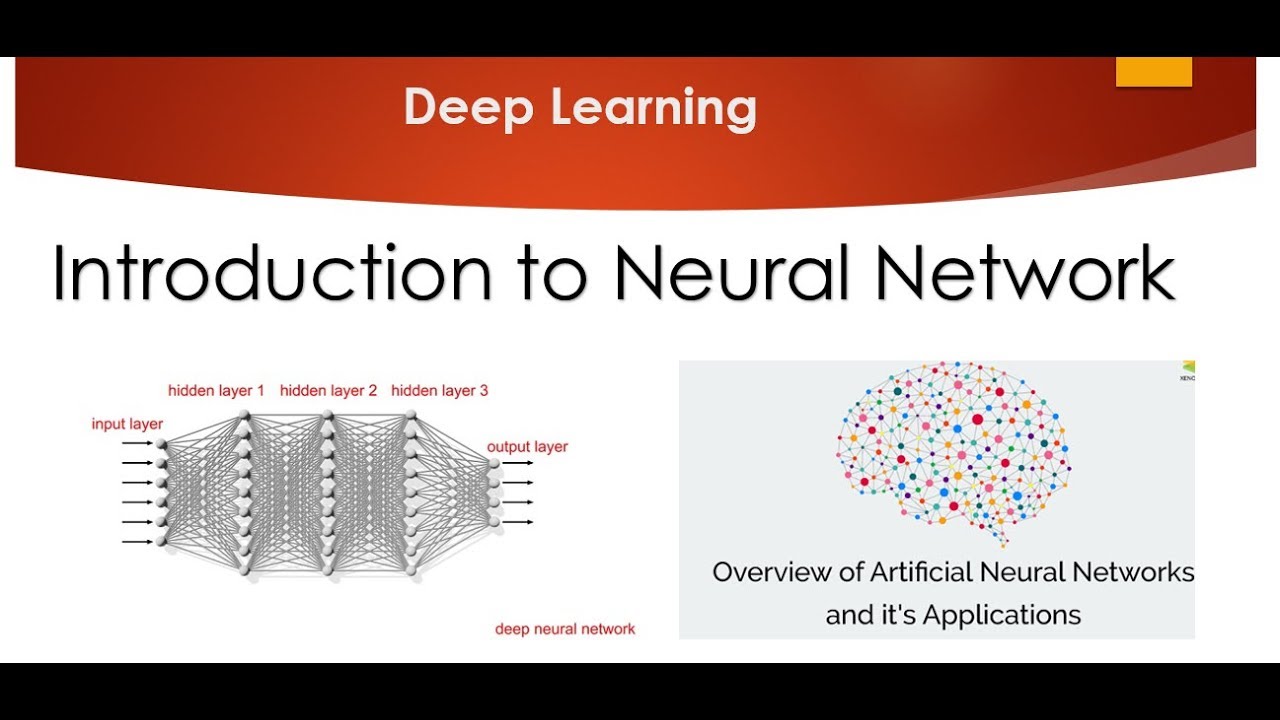

What is a multi-layer neural network?

-A multi-layer neural network consists of an input layer, one or more hidden layers, and an output layer. It extends the perceptron model: information flows forward through the layers, and because the desired outputs of the hidden layers are not visible, those layers are treated as 'black boxes.'
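The forward flow described in this answer can be sketched in Python. This is a minimal sketch, not the video's own code. It assumes sigmoid activations, a threshold subtracted from each neuron's weighted sum, and hypothetical helper names (`layer_forward`, `network_forward`). The weight values are arbitrary placeholders.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic activation, assumed here for every hidden and output neuron."""
    return 1.0 / (1.0 + math.exp(-x))


def layer_forward(inputs: list[float], weights: list[list[float]],
                  thresholds: list[float]) -> list[float]:
    """Compute one layer. weights[j][i] connects input i to neuron j; each neuron
    subtracts its threshold from its weighted sum and applies the sigmoid."""
    return [
        sigmoid(sum(x * w for x, w in zip(inputs, w_row)) - theta)
        for w_row, theta in zip(weights, thresholds)
    ]


def network_forward(inputs: list[float], layers) -> list[float]:
    """Feed the signal forward: each layer's outputs become the next layer's inputs.
    `layers` is a list of (weights, thresholds) pairs ending with the output layer."""
    signal = inputs
    for weights, thresholds in layers:
        signal = layer_forward(signal, weights, thresholds)
    return signal


# Hypothetical 2-2-1 network: two inputs, one hidden layer of two neurons, one output.
layers = [
    ([[0.5, 0.4], [0.9, 1.0]], [0.8, -0.1]),  # hidden layer
    ([[-1.2, 1.1]], [0.3]),                   # output layer
]
print(network_forward([1.0, 1.0], layers))
```

Only the output layer's result is returned. The hidden activations exist only inside the loop, which mirrors why they are hard to inspect.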

How does the multi-layer neural network differ from a single perceptron?

-A single perceptron has one layer of computational neurons that connects the inputs directly to the output. A multi-layer neural network adds hidden layers that transform the inputs into intermediate features before they reach the output layer, which allows the network to solve non-linear problems.
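One way to see the difference is to train a single perceptron on a linearly separable task (AND) and on a non-linearly separable one (XOR). The sketch below assumes two inputs, a step activation, zero starting weights, and a threshold learned like an extra weight. `train_perceptron` is a hypothetical name. On XOR, no epoch ever finishes without an error, because no single straight line separates its two classes.

```python
def step(x: float) -> int:
    """Hard-limiting activation: output 1 once the net input reaches zero."""
    return 1 if x >= 0 else 0


def train_perceptron(samples, alpha=0.5, max_epochs=100):
    """Train a two-input, single-layer perceptron with the perceptron learning rule.

    The threshold is learned as if it were a weight on a constant input of -1.
    alpha=0.5 keeps every value an exact binary fraction, so no rounding occurs.
    Returns (converged, weights, threshold).
    """
    w = [0.0, 0.0]
    theta = 0.0
    for _ in range(max_epochs):
        errors = 0
        for (x1, x2), target in samples:
            y = step(x1 * w[0] + x2 * w[1] - theta)
            e = target - y
            if e != 0:
                errors += 1
                w[0] += alpha * x1 * e
                w[1] += alpha * x2 * e
                theta -= alpha * e  # input fixed at -1, so the sign flips
        if errors == 0:  # a whole epoch without a mistake: training has converged
            return True, w, theta
    return False, w, theta


AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]
print(train_perceptron(AND)[0])  # True: AND is linearly separable
print(train_perceptron(XOR)[0])  # False: XOR is not
```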

What role do the hidden layers play in a multi-layer neural network?

-Hidden layers process the inputs through computational neurons whose outputs are not directly observable, because the training data specifies desired outputs only for the output layer. By building intermediate representations of the inputs, these layers enable the network to solve more complex, non-linear tasks.
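To illustrate what a hidden layer adds, here is a small network that computes XOR, the classic problem a single perceptron cannot solve. Its weights were picked by hand; they were not learned and are not taken from the video. The two hidden neurons compute intermediate features, 'at least one input is on' and 'both inputs are on', and the output neuron combines them.

```python
def step(x: float) -> int:
    """Hard-limiting activation."""
    return 1 if x >= 0 else 0


def neuron(inputs, weights, threshold) -> int:
    """Weighted sum of the inputs minus the threshold, passed through the step function."""
    return step(sum(x * w for x, w in zip(inputs, weights)) - threshold)


def xor_network(x1: int, x2: int) -> int:
    # Hidden layer: two features that neither input shows on its own.
    h_or = neuron([x1, x2], [1, 1], 0.5)   # 1 if at least one input is 1
    h_and = neuron([x1, x2], [1, 1], 1.5)  # 1 only if both inputs are 1
    # Output layer: "OR but not AND", which is exactly XOR.
    return neuron([h_or, h_and], [1, -1], 0.5)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_network(a, b))
```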

What is the importance of weights in a multi-layer neural network?

-Weights determine the strength of the connections between neurons in different layers. They are adjusted during the training process to minimize errors and allow the network to learn the desired patterns from the input data.
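The weighted connections described above are often written as follows. The symbols are an assumption, and the video's notation may differ. Neuron j multiplies each input x_i by its connection weight w_ij, sums the products, subtracts its threshold θ_j, and passes the result through an activation function, assumed here to be the sigmoid. The second line works one case with illustrative numbers: x = (1, 1), w = (0.5, 0.4), θ_j = 0.8.

```latex
\begin{aligned}
X_j &= \sum_{i=1}^{n} x_i \, w_{ij} - \theta_j, \qquad y_j = \frac{1}{1 + e^{-X_j}} \\
X_j &= (1)(0.5) + (1)(0.4) - 0.8 = 0.1, \qquad y_j = \frac{1}{1 + e^{-0.1}} \approx 0.525
\end{aligned}
```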

How are weights updated during training in a neural network?

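-In a single-layer perceptron, weights are updated with the perceptron learning rule, which adjusts each weight based on the difference between the desired output and the network's actual output. The size of each adjustment depends on the learning rate, the input value, and the calculated error. In a multi-layer network, the hidden neurons have no desired output of their own, so the error must be propagated backward from the output layer (the back-propagation algorithm).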
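The perceptron learning rule can be written as Δw_i = α · x_i · e. Here α is the learning rate, x_i is the input on that connection, and e is the error (desired minus actual output). A minimal sketch with a hypothetical function name and illustrative numbers:

```python
def perceptron_update(weights, inputs, error, alpha):
    """Perceptron learning rule: w_i <- w_i + alpha * x_i * e.
    Only weights on non-zero inputs change; the sign of the error sets
    the direction and alpha scales the step."""
    return [w + alpha * x * error for w, x in zip(weights, inputs)]


# Desired output 0, actual output 1, so e = 0 - 1 = -1.
new_w = perceptron_update([0.3, -0.1], [1, 0], error=-1, alpha=0.1)
print(new_w)  # first weight drops by 0.1; the second is unchanged because its input was 0
```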

What is the significance of the learning rate in neural network training?

-The learning rate controls how much the weights are adjusted during each iteration of training. A higher learning rate results in larger updates, while a lower rate leads to smaller, more gradual changes.
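The effect of the learning rate can be seen on a deliberately tiny problem. The sketch below swaps in plain gradient descent on a made-up one-weight error function, E(w) = (w - 2)^2, in place of the perceptron rule from the video. With a small rate, the weight creeps toward the minimum at w = 2. A larger rate reaches it in fewer steps. A rate that is too large overshoots the minimum and diverges, which is a general property of gradient-based updates.

```python
def gradient_descent(alpha: float, steps: int, w0: float = 0.0) -> float:
    """Minimise the toy error E(w) = (w - 2)**2 with repeated updates
    w <- w - alpha * dE/dw, where dE/dw = 2 * (w - 2)."""
    w = w0
    for _ in range(steps):
        w -= alpha * 2 * (w - 2)
    return w


for alpha in (0.1, 0.5, 1.1):
    print(alpha, gradient_descent(alpha, steps=10))
```

Each update multiplies the distance to the minimum by (1 - 2α). That factor is 0.8 for α = 0.1 (slow but steady), 0 for α = 0.5 (one step), and -1.2 for α = 1.1 (growing oscillation).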

What challenges does a multi-layer neural network face compared to a single perceptron?

-Compared with a single perceptron, multi-layer networks need longer training times and more computational power, and their models are more complex. A single perceptron is simpler and faster to train, but it cannot solve non-linearly separable problems.
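A rough way to see why training cost grows is to count the adjustable parameters, because every weight and threshold is updated on each training step. The sketch assumes fully connected layers with one threshold per neuron. The layer sizes are hypothetical examples.

```python
def count_parameters(layer_sizes: list[int]) -> int:
    """Weights plus thresholds in a fully connected feed-forward network.
    layer_sizes lists the neurons per layer, starting with the input layer."""
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out  # one weight per connection, one threshold per neuron
    return total


print(count_parameters([2, 1]))           # single perceptron: 3
print(count_parameters([2, 2, 1]))        # one hidden layer: 9
print(count_parameters([10, 20, 20, 1]))  # two hidden layers: 661
```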

Why is the hidden layer often referred to as a 'black box' in neural networks?

-The hidden layer is considered a 'black box' because its desired output is not directly visible, which makes it difficult to see how it contributes to the final result. Only the output layer's results can be compared directly with the desired outputs.
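Because a hidden neuron has no desired output, its error cannot be measured directly. The usual remedy is back-propagation, named here as a swapped-in technique because the summary mentions only the perceptron rule. It measures the error at the output and passes it backward through the connecting weights. The sketch below is a minimal version for XOR. It assumes sigmoid neurons, a 2-2-1 layout, and hypothetical starting weights and names (`train_xor`), and it reports the sum of squared errors (SSE) before and after training.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def train_xor(alpha: float = 0.1, epochs: int = 10000):
    """Back-propagation for a 2-2-1 sigmoid network on XOR.
    Returns the sum of squared errors before and after training."""
    # Illustrative starting values (hypothetical, not taken from the video).
    w_h = [[0.5, 0.4], [0.9, 1.0]]  # w_h[j][i]: input i -> hidden neuron j
    th_h = [0.8, -0.1]              # hidden thresholds
    w_o = [-1.2, 1.1]               # hidden neuron j -> output
    th_o = 0.3                      # output threshold
    data = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

    def forward(x):
        h = [sigmoid(x[0] * w[0] + x[1] * w[1] - t) for w, t in zip(w_h, th_h)]
        y = sigmoid(h[0] * w_o[0] + h[1] * w_o[1] - th_o)
        return h, y

    def sse():
        return sum((d - forward(x)[1]) ** 2 for x, d in data)

    before = sse()
    for _ in range(epochs):
        for x, d in data:
            h, y = forward(x)
            # Output neuron: its error is observable because the desired output d is given.
            delta_o = y * (1 - y) * (d - y)
            # Hidden neurons: no desired output exists, so each error gradient is
            # inferred from the output gradient through the (old) connecting weight.
            delta_h = [h[j] * (1 - h[j]) * delta_o * w_o[j] for j in range(2)]
            for j in range(2):
                w_o[j] += alpha * h[j] * delta_o
                for i in range(2):
                    w_h[j][i] += alpha * x[i] * delta_h[j]
                th_h[j] -= alpha * delta_h[j]  # threshold acts as a weight on a fixed -1 input
            th_o -= alpha * delta_o
    return before, sse()


before, after = train_xor()
print(f"SSE before: {before:.3f}, after: {after:.3f}")
```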

What are the typical uses of multi-layer neural networks in practical applications?

-Multi-layer neural networks are used in tasks such as image recognition, speech recognition, and natural language processing, where the data is not linearly separable and the patterns are too complex for a single perceptron to handle.

What are the advantages and disadvantages of multi-layer neural networks?

-The advantages of multi-layer neural networks include their ability to solve complex, non-linear problems that cannot be handled by simpler models like perceptrons. The disadvantages include long training times and high computational requirements, especially as the number of layers increases.
