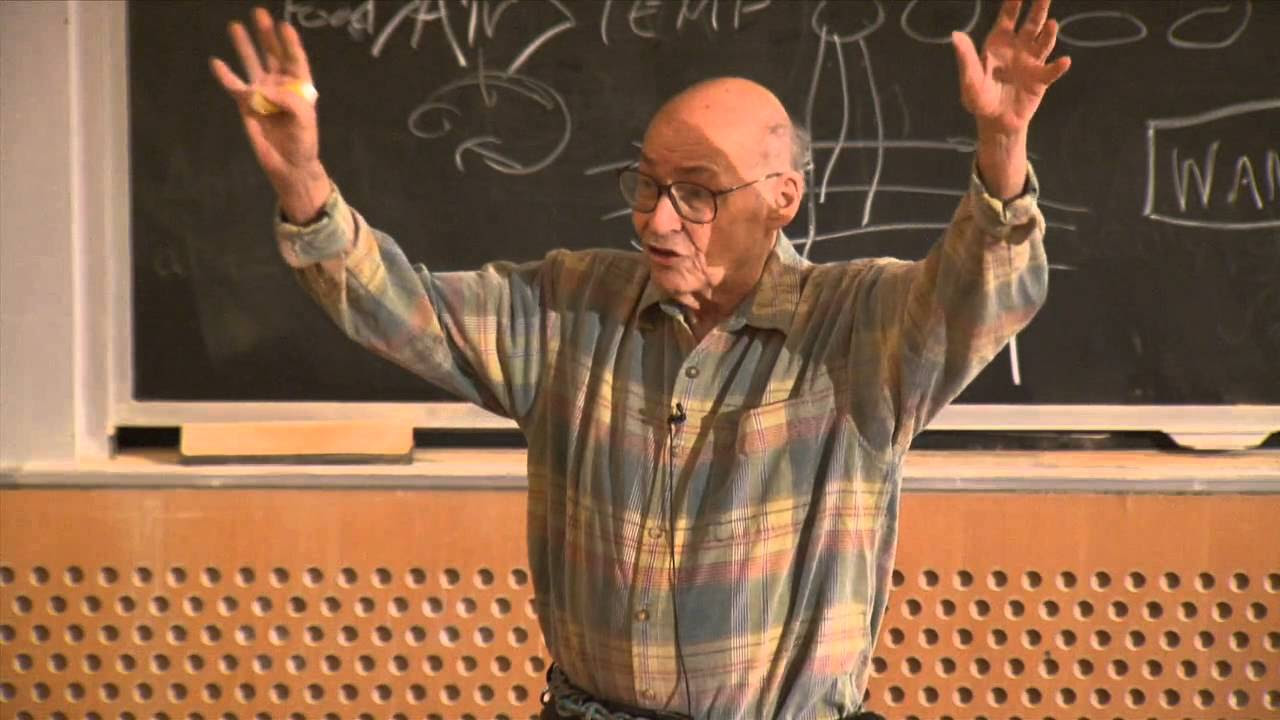

Geoffrey Hinton | Ilya's AI Tutor: Talent and Intuition Have Already Led to Today’s Large AI Models

Summary

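TLDR: This video transcript records Geoffrey Hinton's recollections of his time at a research unit in England and of his artificial intelligence research at Carnegie Mellon University and the University of Edinburgh. He describes his explorations of brain science, philosophy and AI, and his collaborations with Terry Sejnowski, Peter Brown and others. The conversation covers the development of neural networks, deep learning, language models and multimodal learning, as well as the importance of GPUs for training neural networks. He also shares his views on the future of AI, including models' capacity for reasoning and creativity.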

Takeaways

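- 🍺 At the research unit in England, everyone went to the pub for a drink at six in the evening.

- 🔬 At Carnegie Mellon, the lab was full of students on Saturday nights; they believed their work would change the future of computer science.

- 🧠 The Cambridge course on the brain was disappointing: it only covered how neurons conduct action potentials.

- 📚 Reading books by Donald Hebb and John von Neumann got him interested in AI.

- 🤔 The brain does not learn through logical reasoning but by modifying the strengths of the connections in a neural network.

- 👨🏫 His most exciting collaboration was with Terry Sejnowski, on Boltzmann machines.

- 🎤 Peter Brown taught him a great deal about speech recognition and hidden Markov models.

- 🤝 Ilya showed remarkable intuition: he believed that if a model was made big enough, it would simply work better.

- 💡 Large-scale data and compute made it possible to put new AI algorithms into practice.

- 📈 Multimodal models will be more efficient and better at understanding spatial relationships.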

Q & A

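Why were so many students in the lab at 9 p.m. on a Saturday at Carnegie Mellon?

- Students were still in the lab at 9 p.m. on a Saturday because they believed the work they were doing was the future, and that their next step would change the direction of computer science.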

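Why was Hinton initially disappointed when he studied physiology at Cambridge?

- He was disappointed because the course only taught how neurons conduct action potentials, which did not explain how the brain actually works.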

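Why did Hinton eventually go to Edinburgh to study artificial intelligence?

- He chose Edinburgh because in AI he could at least test theories by simulating them, which he found far more interesting than his experience studying physiology and philosophy at Cambridge.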

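Which book by Donald Hebb influenced Hinton?

- Hebb's book The Organization of Behavior, in particular its account of how learning changes the strengths of the connections in a network of neurons, had a big influence on him.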
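Hebb's idea is often summarized as "neurons that fire together wire together". A minimal sketch of the simplest textbook version of that rule, assuming rate-coded units and a learning rate chosen purely for illustration (the function name `hebbian_update` is hypothetical, and this is not presented as Hebb's own formulation): each weight grows in proportion to the product of the activity on either side of the connection.

```python
def hebbian_update(weights, pre, post, learning_rate=0.1):
    """One plain Hebbian step: w[i][j] += learning_rate * post[i] * pre[j].

    weights[i][j] is the connection from presynaptic unit j to postsynaptic unit i.
    The learning_rate default is an illustrative assumption.
    """
    return [
        [w_ij + learning_rate * post_i * pre_j for w_ij, pre_j in zip(row, pre)]
        for row, post_i in zip(weights, post)
    ]


weights = [[0.0, 0.0], [0.0, 0.0]]
pre = [1.0, 0.0]   # only input unit 0 is active
post = [1.0, 1.0]  # both output units are active
weights = hebbian_update(weights, pre, post)
print(weights)  # [[0.1, 0.0], [0.1, 0.0]]
```

Only the connections from the active input to the active outputs are strengthened. With non-negative activities this plain rule can only increase weights, so practical variants add decay or normalization to keep them bounded.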

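Who was Hinton's main collaborator during his time at Carnegie Mellon, and what did they work on together?

- His main collaborator was Terry Sejnowski. Together they worked on Boltzmann machines, a model they believed could explain how the brain works.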
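For reference, a Boltzmann machine is usually written as a network of binary units $s_i \in \{0, 1\}$ with symmetric weights $w_{ij} = w_{ji}$ and biases $b_i$. The standard textbook form below gives the energy of a joint state, the probability distribution it defines (at temperature 1), and the learning rule, in which each weight change compares how often units $i$ and $j$ are on together when the visible units are clamped to data versus when the network runs freely; $\varepsilon$ is a learning rate.

$$
E(\mathbf{s}) = -\sum_{i<j} w_{ij}\, s_i s_j - \sum_i b_i\, s_i,
\qquad
P(\mathbf{s}) = \frac{e^{-E(\mathbf{s})}}{\sum_{\mathbf{s}'} e^{-E(\mathbf{s}')}}
$$

$$
\Delta w_{ij} = \varepsilon \left( \langle s_i s_j \rangle_{\text{data}} - \langle s_i s_j \rangle_{\text{model}} \right)
$$

Because the update for $w_{ij}$ uses only statistics of the two units it connects, the rule is local, which is one plausible reason it looked like a candidate model of learning in the brain.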

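What influence did Peter Brown have on Hinton?

- Peter Brown not only taught him a great deal about statistics but also introduced him to hidden Markov models, both of which had a lasting influence on his later research.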
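In a hidden Markov model the states are never observed directly; only the outputs they emit are. A minimal sketch of the forward algorithm, which sums over all hidden state sequences to get the probability of an observation sequence. The two-state toy model and all of its probabilities are made-up illustrative values, not taken from any real speech recognizer.

```python
def forward(obs, start_p, trans_p, emit_p):
    """Return P(obs) under an HMM, summing over all hidden state paths."""
    states = list(start_p)
    # alpha[s] = P(observations seen so far, current hidden state is s)
    alpha = {s: start_p[s] * emit_p[s][obs[0]] for s in states}
    for o in obs[1:]:
        alpha = {
            s: sum(alpha[r] * trans_p[r][s] for r in states) * emit_p[s][o]
            for s in states
        }
    return sum(alpha.values())


# Hypothetical toy model: hidden states "A"/"B", observable symbols "x"/"y".
start_p = {"A": 0.5, "B": 0.5}
trans_p = {"A": {"A": 0.9, "B": 0.1}, "B": {"A": 0.1, "B": 0.9}}
emit_p = {"A": {"x": 0.8, "y": 0.2}, "B": {"x": 0.3, "y": 0.7}}

print(forward(["x", "y", "y"], start_p, trans_p, emit_p))
```

The recursion costs time linear in the sequence length, whereas enumerating every hidden path directly grows exponentially with it.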

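What insightful question did Ilya Sutskever raise in Hinton's office?

- Ilya asked why they didn't simply hand the gradient to a sensible function optimizer, a question that then took them several years to think through.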
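The summary does not say which optimizer Ilya had in mind. As a hedged illustration of the idea of keeping gradient computation separate from the optimizer, here is a sketch of a generic minimizer that only receives a function and its gradient. Gradient descent with an Armijo backtracking line search is an assumed stand-in for "a sensible function optimizer", and `minimize` is a hypothetical name, not a library API.

```python
def minimize(f, grad, x, steps=100, lr0=1.0, shrink=0.5, c=1e-4):
    """Gradient descent with an Armijo backtracking line search.

    The optimizer only sees f and its gradient; it does not care how the
    gradient was computed (for a neural net, by backpropagation).
    """
    for _ in range(steps):
        g = grad(x)
        gg = sum(gi * gi for gi in g)
        if gg < 1e-20:
            break  # gradient is numerically zero: stop early
        lr, fx = lr0, f(x)
        # Shrink the step until it gives a sufficient decrease in f.
        while f([xi - lr * gi for xi, gi in zip(x, g)]) > fx - c * lr * gg:
            lr *= shrink
        x = [xi - lr * gi for xi, gi in zip(x, g)]
    return x


# Example: an ill-conditioned quadratic with its minimum at (1, -2).
def quadratic(v):
    return (v[0] - 1) ** 2 + 10 * (v[1] + 2) ** 2


def quadratic_grad(v):
    return [2 * (v[0] - 1), 20 * (v[1] + 2)]


print(minimize(quadratic, quadratic_grad, [0.0, 0.0]))
```

Swapping in a stronger optimizer would not require touching the code that computes the gradient, which is the point of the separation.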

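Why does Hinton believe large neural network models can go beyond their training data?

- Because they can learn from data in which many examples are mislabeled and still end up more accurate than those labels, which shows that they can recognize errors in the training data and correct for them.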
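A toy sketch of the effect, assuming a much simpler model than a neural network: a nearest-centroid classifier on two 1-D Gaussian classes, trained on labels of which about 40% have been flipped at random. Because the label errors are random, they largely average out, so the trained model agrees with the true labels far more often than its own training labels do. The data, noise rate and helper names are all illustrative assumptions.

```python
import random


def make_data(n, seed=0):
    """Two 1-D Gaussian classes centred at -3 (label 0) and +3 (label 1)."""
    rng = random.Random(seed)
    xs, ys = [], []
    for _ in range(n):
        y = rng.randint(0, 1)
        xs.append(rng.gauss(3.0 if y else -3.0, 1.0))
        ys.append(y)
    return xs, ys


def corrupt(labels, flip_prob, seed=1):
    """Flip each label independently with probability flip_prob."""
    rng = random.Random(seed)
    return [1 - y if rng.random() < flip_prob else y for y in labels]


def fit_centroids(xs, labels):
    """Nearest-centroid 'training': the mean input for each label."""
    c0 = [x for x, y in zip(xs, labels) if y == 0]
    c1 = [x for x, y in zip(xs, labels) if y == 1]
    return sum(c0) / len(c0), sum(c1) / len(c1)


def predict(centroids, x):
    c0, c1 = centroids
    return int(abs(x - c1) < abs(x - c0))


def accuracy(pred, truth):
    return sum(p == t for p, t in zip(pred, truth)) / len(truth)


xs, clean = make_data(2000)
noisy = corrupt(clean, flip_prob=0.4)  # roughly 40% of training labels are wrong
model = fit_centroids(xs, noisy)       # trained only on the noisy labels
preds = [predict(model, x) for x in xs]

print("noisy labels agree with truth:", accuracy(noisy, clean))
print("model agrees with truth:      ", accuracy(preds, clean))
```

With symmetric random noise the two centroids are pulled toward each other, but the decision boundary stays near 0; systematic (non-random) label errors would not cancel out this way.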

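How does Hinton view the potential of current neural network models for multimodal learning?

- He believes that once neural network models can handle multimodal data such as images, video and sound, they will understand spatial relationships better and will therefore become more capable at understanding objects and at creative reasoning.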

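Why did Hinton think using GPUs to train neural networks was a good idea?

- Because GPUs are good at matrix multiplication, the basic operation in neural network training, so using them can speed up training dramatically.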
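To see why matrix multiplication dominates, here is a minimal pure-Python sketch of one fully connected layer applied to a whole batch: the bulk of the work is a single product of the input matrix and the weight matrix. The layer sizes, weights and the ReLU activation are illustrative choices; on a GPU, the `matmul` call would be replaced by a hardware-accelerated matrix-multiply routine.

```python
def matmul(left, right):
    """Plain matrix product: (n x k) times (k x m) gives (n x m)."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*right)] for row in left]


def dense_forward(x_batch, weights, bias):
    """One fully connected layer on a batch: Y = ReLU(X W + b)."""
    z = matmul(x_batch, weights)
    return [[max(0.0, z_ij + b_j) for z_ij, b_j in zip(row, bias)] for row in z]


x = [[1.0, 2.0], [3.0, 4.0]]             # batch of 2 examples, 2 features each
w = [[1.0, -1.0, 0.5], [0.0, 1.0, 0.5]]  # 2 inputs -> 3 hidden units
b = [0.0, 0.0, -1.0]
print(dense_forward(x, w, b))  # [[1.0, 1.0, 0.5], [3.0, 1.0, 2.5]]
```

The backward pass is likewise made of matrix products: for this layer, the gradient with respect to the weights is $X^\top$ times the gradient flowing back into the pre-activation outputs.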

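How does Hinton see the future of digital versus analog computation?

- He thinks that although analog computation may be closer to how the brain works, digital computation lets many identical copies of a model share what they learn efficiently, so digital systems are likely to dominate in the future.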
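One concrete reading of "knowledge sharing" between digital systems, offered here as an assumption rather than as Hinton's exact argument, is data-parallel training: identical copies of a model each compute gradients on their own data and then average them, so every copy benefits from everything any copy saw. A minimal sketch with a two-weight linear model, where each hypothetical data shard only exercises one of the two inputs:

```python
def grad_mse(w, data):
    """Gradient of mean squared error for the linear model y_hat = w . x."""
    g = [0.0] * len(w)
    for x, y in data:
        err = sum(wi * xi for wi, xi in zip(w, x)) - y
        for k, xk in enumerate(x):
            g[k] += 2 * err * xk / len(data)
    return g


def train_replicas(shards, steps=200, lr=0.05):
    """One identical copy per data shard; gradients are averaged every step."""
    replicas = [[0.0, 0.0] for _ in shards]  # same initial weights everywhere
    for _ in range(steps):
        grads = [grad_mse(w, shard) for w, shard in zip(replicas, shards)]
        # Sharing: average the gradients component-wise across the copies.
        avg = [sum(gk) / len(grads) for gk in zip(*grads)]
        replicas = [[wk - lr * ak for wk, ak in zip(w, avg)] for w in replicas]
    return replicas


# Target y = 2*x0 - 1*x1; each shard only exercises one of the two inputs.
shard_a = [((1.0, 0.0), 2.0), ((2.0, 0.0), 4.0)]
shard_b = [((0.0, 1.0), -1.0), ((0.0, 3.0), -3.0)]

print(train_replicas([shard_a]))           # alone: learns w0, w1 stays 0
print(train_replicas([shard_a, shard_b]))  # shared: both copies learn both
```

Trained alone on `shard_a`, a copy never learns the second weight; with gradient averaging, both copies end up with identical weights close to (2, -1). This relies on the copies being exact digital duplicates that apply identical updates.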
