Naive Bayes: Text Classification Example

Summary

TLDRIn this video, the concept of Naive Bayes text classification with Laplace smoothing is explained through a practical example. The script demonstrates how text data, specifically Chinese and Japanese documents, are classified based on word features. Key concepts like calculating class probabilities, conditional probabilities for words, and Laplace smoothing to handle zero-frequency issues are highlighted. The video also walks through how these calculations are applied to a test document to determine its class. This approach allows for a deeper understanding of both categorical and text classification methods.

Takeaways

- 😀 The script demonstrates how to apply Naive Bayes for text classification with Laplace smoothing.

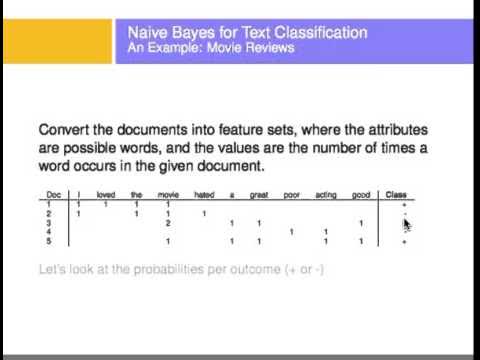

- 😀 It uses a simple dataset of Chinese and Japanese documents, where the words themselves act as features.

- 😀 The classification task is to predict whether a given document is Chinese (C) or Japanese (J).

- 😀 P(C) is calculated as the probability of a Chinese document occurring, based on the number of Chinese and Japanese documents in the training set.

- 😀 The script walks through calculating conditional probabilities such as P(Chinese | C) and P(Chinese | J) for both classes.

- 😀 Laplace smoothing is applied to avoid zero probabilities when certain words don't appear in a class, ensuring meaningful values are calculated.

- 😀 The calculation of the probabilities includes adding 1 (the smoothing factor) to the count of word occurrences and adjusting for the total number of words in the class.

- 😀 The cardinality of the feature set (distinct words) is used to adjust the probabilities during the smoothing process.

- 😀 The script explains how to calculate the final prediction by multiplying prior probabilities and the calculated conditional probabilities for each word in the test document.

- 😀 The final decision is based on comparing the probability values for each class (Chinese and Japanese), with the higher value determining the class of the document.

- 😀 The example highlights the importance of Laplace smoothing in Naive Bayes for preventing the impact of zero values on the classification result.

Q & A

What is the main topic of the video?

-The main topic of the video is a text classification example using the Naive Bayes classifier with Laplace smoothing. It demonstrates how to apply Naive Bayes to text data and compares categorical data classification to text classification.

What types of data are being compared in the video?

-The video compares categorical data classification (e.g., using features like Outlook, humidity, temperature) with text classification, where the features are the words in documents (Chinese and Japanese).

What does Laplace smoothing prevent in Naive Bayes text classification?

-Laplace smoothing prevents the issue of zero probabilities when a word appears in the test data but not in the training data. It ensures that all words have a non-zero probability, making the model more robust.

How are prior probabilities (P(C) and P(J)) calculated in the example?

-Prior probabilities are calculated based on the frequency of each class in the training dataset. For example, P(C) is the probability of a document being classified as Chinese, calculated as the ratio of Chinese documents to total documents. Similarly, P(J) is the probability of a document being classified as Japanese.

What is the significance of the cardinality of X in the calculation?

-The cardinality of X refers to the number of distinct words across all documents in the training dataset. This value is used in the Laplace smoothing formula to adjust the probability calculations and prevent zero probabilities for unseen words.

Why is it necessary to calculate P(word | class) for each word in the test document?

-Calculating P(word | class) for each word in the test document allows us to determine the likelihood of the document belonging to each class (e.g., Chinese or Japanese) based on the occurrence of words. This helps in making an informed prediction about the document's class.

What happens when a word does not occur in a particular class during training?

-When a word does not occur in a particular class during training, its conditional probability would be zero. Laplace smoothing is used to add a small constant (usually 1) to prevent this and ensure that the word has a non-zero probability, even if it was not seen in that class during training.

How does the Naive Bayes classifier make a prediction for the class of a document?

-The Naive Bayes classifier calculates the posterior probability for each class given the document's words. It multiplies the prior probability of the class with the conditional probabilities of each word given the class. The class with the highest posterior probability is selected as the predicted class.

What is the final prediction in the example, and how is it determined?

-The final prediction in the example is class 'C' (Chinese). This is determined by calculating the product of prior probabilities and conditional probabilities for each class and comparing the resulting values. The class with the higher probability is selected.

What is the purpose of using Laplace smoothing in this Naive Bayes example?

-The purpose of using Laplace smoothing is to ensure that all words, including those not seen in the training data for a particular class, have a non-zero probability. This improves the classifier's performance by preventing zero probability issues, which would otherwise hinder the prediction process.

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

Naive Bayes, Clearly Explained!!!

Naive Bayes dengan Python & Google Colabs | Machine Learning untuk Pemula

Text Classification Using Naive Bayes

Klasifikasi dengan Algoritma Naive Bayes Classifier

Machine Learning: Multinomial Naive Bayes Classifier with SK-Learn Demonstration

Naive Bayes classifier: A friendly approach

5.0 / 5 (0 votes)