Optimize Your AI - Quantization Explained

Summary

TLDR: This video explains how quantization lets massive AI models run on basic hardware, such as small laptops, by reducing memory usage. It covers key concepts like Q2, Q4, and Q8 quantization and their impact on model size and performance. The video also introduces context quantization, which saves further memory. Viewers are shown how to configure settings to make large models more efficient and how to balance precision against memory usage so that AI can run even on limited systems like a Raspberry Pi. Practical tips are provided for selecting the right quantization for specific needs.

Takeaways

- 😀 Quantization is a clever trick that allows running massive AI models on basic hardware by reducing memory usage.

- 😀 Q2, Q4, and Q8 are quantization levels that represent different precision settings for AI models, balancing memory and performance.

- 😀 At full precision, AI models store each parameter as a 32-bit number, which consumes a lot of memory; quantization reduces memory requirements by storing less precise values.

- 😀 A 7-billion-parameter model at full precision needs about 28 GB of RAM for its weights alone (7 billion parameters × 4 bytes each), making it difficult to run on a standard gaming PC.

- 😀 Quantization can be thought of as choosing a ruler with more or fewer markings: a coarse ruler with few markings (Q2) takes less memory to record each measurement but loses precision, while a finer one (Q8) is more precise but uses more memory.

- 😀 Context quantization is a new feature that reduces memory usage by quantizing the key-value (KV) cache that stores the conversation history (the token context).

- 😀 Enabling Flash Attention and setting the KV cache quantization type (e.g., Q8) can significantly reduce the memory a model uses.

- 😀 A model with 32k context and Flash Attention enabled can save up to 7 GB of memory compared to the default settings.

- 😀 Models with different quantization settings (Q2, Q4, Q8) perform differently, and the optimal setting depends on the specific task and hardware.

- 😀 The recommended approach for running AI models on limited hardware, like a laptop, is to start with a Q4_K_M model and then adjust based on your use case.

- 😀 The key to running large AI models efficiently on basic hardware is finding the right balance between precision and performance through quantization.
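To make the 28 GB figure concrete, here is a rough Python sketch of the weight-memory arithmetic. It assumes an idealized, fixed number of bits per weight; real quantized formats also store scale factors, so actual file sizes run somewhat larger, and the KV cache and runtime overhead come on top.

```python
# Rough weight-memory estimate: weights only, ignoring the KV cache,
# activations, runtime overhead, and the extra scale values real formats store.
BITS_PER_WEIGHT = {"FP32": 32, "FP16": 16, "Q8": 8, "Q4": 4, "Q2": 2}


def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Return memory for the weights in GB (1 GB = 10**9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


params = 7e9  # the 7-billion-parameter model from the takeaways
for name, bits in BITS_PER_WEIGHT.items():
    print(f"{name:>4}: {weight_memory_gb(params, bits):5.2f} GB")
```

At 32 bits this reproduces the 28 GB figure, while Q4 brings the weights down to about 3.5 GB.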

Q & A

What is quantization in the context of AI models?

-Quantization is the process of reducing the precision of the numbers (parameters) in an AI model. Instead of using 32-bit precision, lower precision formats like Q8, Q4, and Q2 can be used, reducing memory requirements while still maintaining reasonable performance.
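A minimal sketch of the basic idea, assuming simple symmetric "absmax" quantization with a single scale for the whole list (the function names are illustrative, not from any particular library): each value is divided by a scale, rounded to a small integer for storage, and multiplied back by the scale when needed.

```python
def quantize(values, bits):
    """Symmetric absmax quantization to signed integers in [-qmax, qmax]."""
    qmax = 2 ** (bits - 1) - 1                # 127 for 8 bits, 7 for 4 bits, 1 for 2 bits
    amax = max(abs(v) for v in values)
    scale = amax / qmax if amax > 0 else 1.0  # guard against an all-zero input
    q = [round(v / scale) for v in values]    # the small integers that get stored
    return q, scale


def dequantize(q, scale):
    """Map stored integers back to approximate floats."""
    return [x * scale for x in q]


weights = [0.12, -0.50, 0.33, 0.07, -0.21]
q, scale = quantize(weights, bits=8)
restored = dequantize(q, scale)
print(q)
print([round(r, 4) for r in restored])
```

Every restored value lands within half a scale step of the original; fewer bits mean fewer integer levels and therefore larger steps.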

Why is quantization important for running large AI models on basic hardware?

-Large AI models require vast amounts of memory to run. Quantization reduces the memory usage by lowering the precision of the model's parameters, making it possible to run these models on less powerful hardware like personal laptops or even Raspberry Pi devices.

What are the differences between Q2, Q4, and Q8 quantization?

-Q8 keeps the most precision of the three, staying closest to the original model's accuracy while still saving substantial memory. Q4 saves more memory at the cost of further reduced precision, and Q2 has the lowest precision, offering maximum memory savings but potentially lower accuracy.
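To see the precision trade-off numerically, this sketch measures the average round-trip error at 8, 4, and 2 bits on synthetic, weight-like random values. It uses naive per-tensor scaling as a toy; real Q2 and Q4 formats (such as GGUF's k-quants) use per-block scales and are more accurate than this version.

```python
import random


def round_trip_error(values, bits):
    """Mean absolute error after naive per-tensor absmax quantize -> dequantize."""
    qmax = 2 ** (bits - 1) - 1
    scale = max(abs(v) for v in values) / qmax
    restored = [round(v / scale) * scale for v in values]
    return sum(abs(a - b) for a, b in zip(values, restored)) / len(values)


random.seed(0)
# Synthetic stand-in for model weights: small values centred on zero.
weights = [random.gauss(0.0, 0.02) for _ in range(10_000)]

errors = {}
for bits in (8, 4, 2):
    levels = 2 * (2 ** (bits - 1) - 1) + 1  # distinct values this scheme can represent
    errors[bits] = round_trip_error(weights, bits)
    print(f"{bits} bits: {levels:3d} levels, mean abs error {errors[bits]:.6f}")
```

The error grows sharply as the number of representable levels shrinks, which is why Q2 is the riskiest choice for accuracy.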

How does the Q4 model impact memory usage?

-A Q4 model stores each parameter in about 4 bits instead of the typical 32, so the weights need roughly one-eighth of the memory. This lets large models run on hardware with much less RAM.
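Part of the saving comes from packing: a 4-bit value needs only half a byte, so two fit in each byte. A small sketch, assuming signed integers in the range -8 to 7 (an illustrative layout, not the exact on-disk format of any specific file type):

```python
def pack_int4(values):
    """Pack signed 4-bit integers (-8..7) two per byte, low nibble first."""
    if any(not -8 <= v <= 7 for v in values):
        raise ValueError("4-bit signed values must be in -8..7")
    if len(values) % 2:
        values = list(values) + [0]  # pad to an even count
    out = bytearray()
    for lo, hi in zip(values[0::2], values[1::2]):
        out.append(((hi & 0x0F) << 4) | (lo & 0x0F))
    return bytes(out)


def unpack_int4(data, count):
    """Reverse pack_int4, sign-extending each nibble back to an int."""
    vals = []
    for byte in data:
        for nibble in (byte & 0x0F, byte >> 4):
            vals.append(nibble - 16 if nibble >= 8 else nibble)
    return vals[:count]


q = [-8, 7, 3, -1, 0]
packed = pack_int4(q)
print(len(packed), "bytes packed vs", len(q) * 4, "bytes as 32-bit floats")
```

Here five values occupy 3 bytes when packed, versus 20 bytes as 32-bit floats.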

What is the role of context quantization in AI models?

-Context quantization reduces the memory required to store conversation history. The model keeps cached keys and values (the KV cache) for every token in its context, so as models become capable of handling very large contexts (such as entire books), this cache can take up a lot of memory. Storing the cache at lower precision shrinks it.
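The cache grows linearly with context length. Here is a rough estimate, assuming a hypothetical configuration loosely modeled on a Llama-2-7B-style model (32 layers, 32 KV heads, head dimension 128); models that use grouped-query attention have fewer KV heads and much smaller caches. The q8_0 size assumes blocks of 32 int8 values sharing one 16-bit scale. These numbers are illustrative, not the figures from the video.

```python
# Hypothetical configuration, loosely modeled on a Llama-2-7B-style model.
N_LAYERS = 32
N_KV_HEADS = 32
HEAD_DIM = 128

# Bytes per cached element. q8_0 packs 32 int8 values plus one 2-byte scale.
BYTES_PER_ELEMENT = {"f16": 2.0, "q8_0": 34 / 32}


def kv_cache_bytes(context_len, cache_type):
    """Estimate KV cache size in bytes for a given context length."""
    # Factor 2: one key vector and one value vector per token, layer, and KV head.
    elements = 2 * N_LAYERS * N_KV_HEADS * HEAD_DIM * context_len
    return elements * BYTES_PER_ELEMENT[cache_type]


for ctx in (2_048, 8_192, 32_768):
    f16 = kv_cache_bytes(ctx, "f16") / 2**30
    q8 = kv_cache_bytes(ctx, "q8_0") / 2**30
    print(f"{ctx:>6} tokens: f16 {f16:5.1f} GiB, q8_0 {q8:5.1f} GiB")
```

At 32k tokens this hypothetical model would need 16 GiB for the cache at f16 versus 8.5 GiB with q8_0.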

What is flash attention and how does it affect memory usage?

-Flash attention is an optimized way of computing a model's attention step that processes the input in blocks instead of storing the full table of attention scores in memory at once. Enabling it can significantly reduce memory usage, especially with larger contexts or more complex models.
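FlashAttention itself is a fused GPU kernel; this pure-Python sketch only illustrates the online-softmax trick it builds on, for a single query vector. Keys and values are processed in blocks, and only a running maximum, a running softmax denominator, and a running weighted sum are kept, so the full row of attention scores never has to be stored. The naive version is included as a reference to check that both give the same result.

```python
import math


def naive_attention(q, keys, values):
    """Reference: materialize every score, softmax them, then weight the values."""
    scale = 1 / math.sqrt(len(q))
    scores = [sum(a * b for a, b in zip(q, k)) * scale for k in keys]
    m = max(scores)
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    dim = len(values[0])
    return [sum(w * v[d] for w, v in zip(weights, values)) / total for d in range(dim)]


def streaming_attention(q, keys, values, block_size=2):
    """Same result, but keys/values are consumed block by block (online softmax)."""
    scale = 1 / math.sqrt(len(q))
    dim = len(values[0])
    running_max = -math.inf
    denom = 0.0
    acc = [0.0] * dim
    for start in range(0, len(keys), block_size):
        k_blk = keys[start:start + block_size]
        v_blk = values[start:start + block_size]
        scores = [sum(a * b for a, b in zip(q, k)) * scale for k in k_blk]
        new_max = max(running_max, max(scores))
        correction = math.exp(running_max - new_max)  # rescale earlier partial sums
        denom *= correction
        acc = [x * correction for x in acc]
        for s, v in zip(scores, v_blk):
            w = math.exp(s - new_max)
            denom += w
            acc = [x + w * vd for x, vd in zip(acc, v)]
        running_max = new_max
    return [x / denom for x in acc]


q = [0.1, 0.4, -0.3]
keys = [[0.2, 0.1, 0.0], [-0.5, 0.3, 0.8], [0.9, -0.2, 0.4], [0.0, 0.7, -0.6], [0.3, 0.3, 0.3]]
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [2.0, -1.0], [-1.0, 3.0]]
print(naive_attention(q, keys, values))
print(streaming_attention(q, keys, values, block_size=2))
```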

How does enabling flash attention affect model performance?

-Beyond lowering memory load, flash attention can also speed up inference because it moves less data in and out of memory, though the actual speed and efficiency gains vary depending on the specific model and hardware.

How does Q8 KV cache quantization impact memory usage?

-Q8 KV cache quantization reduces memory usage by lowering the precision of the key-value cache used for context storage. This can save significant memory when running models with large context windows, making it more efficient to handle long conversation histories.
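A sketch of what 8-bit cache quantization can look like, loosely following the q8_0 idea of giving each block of 32 values its own scale. It is an illustration, not llama.cpp's actual code; a real implementation stores each scale as a 16-bit float and the integers as packed int8 bytes.

```python
import math

BLOCK_SIZE = 32  # q8_0-style grouping: every 32 values share one scale


def quantize_q8_blocks(values):
    """Quantize to the int8 range [-127, 127] with one absmax scale per block."""
    blocks = []
    for start in range(0, len(values), BLOCK_SIZE):
        block = values[start:start + BLOCK_SIZE]
        amax = max(abs(v) for v in block)
        scale = amax / 127 if amax > 0 else 1.0
        blocks.append((scale, [round(v / scale) for v in block]))
    return blocks


def dequantize_q8_blocks(blocks):
    """Rebuild approximate floats from (scale, ints) pairs."""
    out = []
    for scale, q in blocks:
        out.extend(x * scale for x in q)
    return out


# Toy "cache" values whose magnitude changes from block to block.
cache = [math.sin(i * 0.1) * (1 + i // BLOCK_SIZE) for i in range(3 * BLOCK_SIZE)]
blocks = quantize_q8_blocks(cache)
restored = dequantize_q8_blocks(blocks)
worst = max(abs(a - b) for a, b in zip(cache, restored))
print(f"{len(blocks)} blocks, worst-case error {worst:.5f}")
```

Because each block gets its own scale, a block of small values keeps fine resolution even when another block contains much larger values.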

What’s the best approach for selecting the right quantization for a specific project?

-The best approach is to start with a Q4 model, as it provides a good balance between memory savings and accuracy. Then, test the model with your specific use case. If performance is satisfactory, you can try using Q2 for even lower memory usage, or move to Q8 for better precision if needed.
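The start-at-Q4 procedure can be written down as a small search. `passes_your_test` is a hypothetical callback you would supply yourself, for example by running your own prompts against each variant and judging the output; nothing here downloads or runs a model.

```python
# Quantization levels ordered from least to most precise.
LEVELS = ["Q2", "Q4", "Q8"]


def choose_quantization(passes_your_test):
    """Start at Q4; step down while results stay acceptable, step up if they don't.

    passes_your_test(level) -> bool is a hypothetical, user-supplied check.
    Returns the chosen level, or None if no level passes.
    """
    i = LEVELS.index("Q4")
    if passes_your_test(LEVELS[i]):
        # Q4 is good enough: see whether an even smaller variant still works.
        while i > 0 and passes_your_test(LEVELS[i - 1]):
            i -= 1
        return LEVELS[i]
    # Q4 falls short: move up toward more precision.
    while i < len(LEVELS) - 1:
        i += 1
        if passes_your_test(LEVELS[i]):
            return LEVELS[i]
    return None


print(choose_quantization(lambda level: level != "Q2"))  # Q4 passes, Q2 does not
```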

Why might a Q2 model work just as well for most tasks despite having lower precision?

-A Q2 model may work just as well for many tasks because not all applications require the highest level of precision. By reducing the memory usage significantly, Q2 models can provide efficiency without sacrificing too much performance in everyday tasks.

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

Quantization: How LLMs survive in low precision

DeepSeek on Apple Silicon in depth | 4 MacBooks Tested

1-Bit LLM: The Most Efficient LLM Possible?

Exo: Run your own AI cluster at home by Mohamed Baioumy

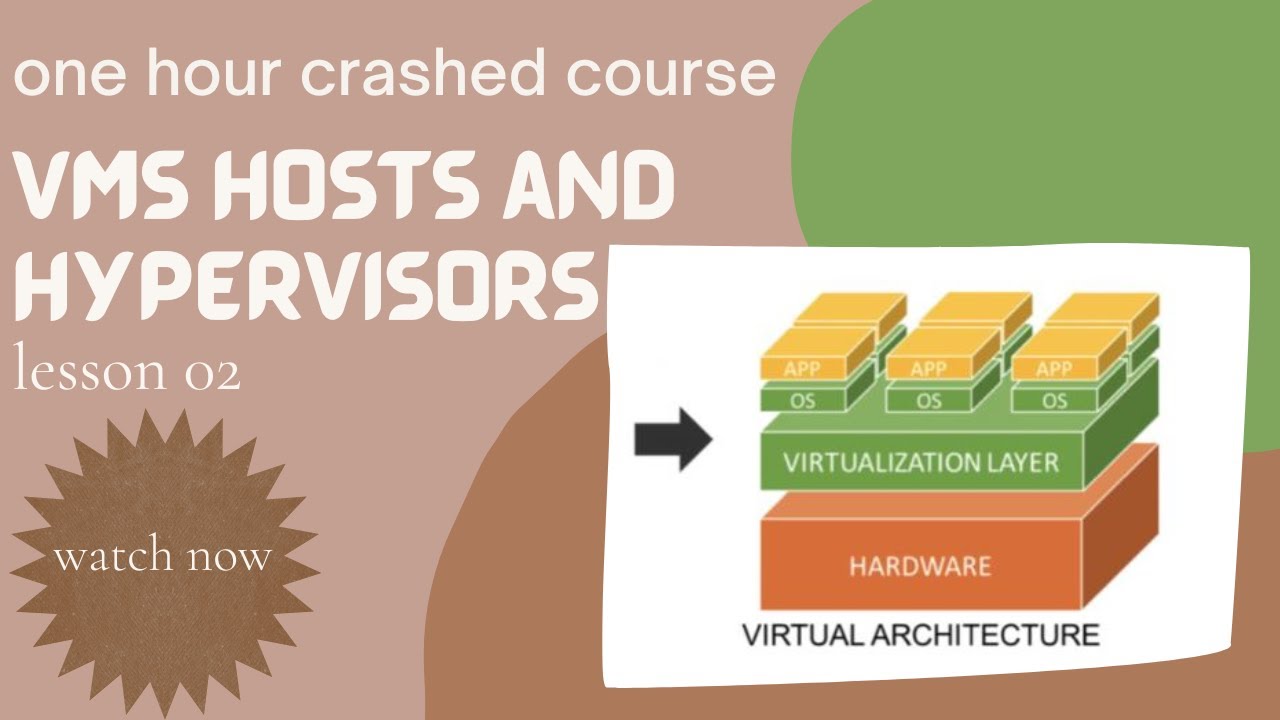

02 VMs Hosts and Hypervisors || Virtualization #host

What is LoRA? Low-Rank Adaptation for finetuning LLMs EXPLAINED

5.0 / 5 (0 votes)